Beyond Reporting Early Warning Score Sensitivity: The Temporal Relationship and Clinical Relevance of “True Positive” Alerts that Precede Critical Deterioration

Patients at risk for clinical deterioration in the inpatient setting may not be identified efficiently or effectively by health care providers. Early warning systems that link clinical observations to rapid response mechanisms (such as medical emergency teams) have the potential to improve outcomes, but rigorous studies are lacking.1 The pediatric Rothman Index (pRI) is an automated early warning system sold by the company PeraHealth that is integrated with the electronic health record. The system incorporates vital signs, labs, and nursing assessments from existing electronic health record data to provide a single numeric score that generates alerts based on low absolute scores and acute decreases in score (low scores indicate high mortality risk).2 Automated alerts or rules based on the pRI score are meant to bring important changes in clinical status to the attention of clinicians.

Adverse outcomes (eg, unplanned intensive care unit [ICU] transfers and mortality) are associated with low pRI scores, and scores appear to decline prior to such events.2 However, the limitation of this and other studies evaluating the sensitivity of early warning systems3-6 is that the generated alerts are assigned “true positive” status if they precede clinical deterioration, regardless of whether or not they provide meaningful information to the clinicians caring for the patients. There are two potential critiques of this approach. First, the alert may have preceded a deterioration event but may not have been clinically relevant (eg, an alert triggered by a finding unrelated to the patient’s acute health status, such as a scar that was newly documented as an abnormal skin finding and as a result led to a worsening in the pRI). Second, even if the preceding alert demonstrated clinical relevance to a deterioration event, the clinicians at the bedside may have been aware of the patient’s deterioration for hours and have already escalated care. In this situation, the alert would simply confirm what the clinician already knew.

To better understand the relationship between early warning system acuity alerts and clinical practice, we examined a cohort of hospitalized patients who experienced a critical deterioration event (CDE)7 and who would have triggered a preceding pRI alert. We evaluated the clinical relationship of the alert to the CDE (ie, whether the alert reflected physiologic changes related to a CDE or was instead an artifact of documentation) and identified whether the alert would have preceded evidence that clinicians recognized deterioration or escalated care.

METHODS

Patients and Setting

This retrospective cross-sectional study was performed at Children’s Hospital of Philadelphia (CHOP), a freestanding children’s hospital with 546 beds. Eligible patients were hospitalized on nonintensive care, noncardiology, surgical wards between January 1, 2013, and December 31, 2013. The CHOP Institutional Review Board (IRB) approved the study with waivers of consent and assent. A HIPAA Business Associate Agreement and an IRB Reliance Agreement were in place with PeraHealth to permit data transfer.

Definition of Critical Deterioration Events

Critical deterioration events (CDEs) were defined according to an existing, validated measure7 as unplanned transfers to the ICU with continuous or bilevel positive airway pressure, tracheal intubation, and/or vasopressor infusion in the 12 hours after transfer. At CHOP, all unplanned ICU transfers are routed through the hospital’s rapid response or code blue teams, so these patients were identified using an existing database managed by the CHOP Resuscitation Committee. In the database, the elements of CDEs are entered as part of ongoing quality improvement activities. The time of CDE was defined as the time of the rapid response call precipitating unplanned transfer to the ICU.

The Pediatric Rothman Index

The pRI is an automated acuity score that has been validated in hospitalized pediatric patients.2 The pRI is calculated using existing variables from the electronic health record, including manually entered vital signs, laboratory values, cardiac rhythm, and nursing assessments of organ systems. The weights assigned to continuous variables are a function of deviation from the norm.2,8 (See Supplement 1 for a complete list of variables.)

The pRI is integrated with the electronic health record and automatically generates a score each time a new data observation becomes available. Changes in score over time and low absolute scores generate a graduated series of alerts ranging from medium to very high acuity. This analysis used PeraHealth’s standard pRI alerts. Medium acuity alerts occurred when the pRI score decreased by ≥30% in 24 hours. A high acuity alert occurred when the pRI score decreased by ≥40% in 6 hours. A very high acuity alert occurred when the pRI absolute score was ≤ 30.
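The three alert rules described above can be sketched in code. This is a minimal illustration, not PeraHealth's proprietary implementation: the function name `classify_alert`, the choice of the highest score in the lookback window as the baseline for the percentage decrease, the handling of non-positive baselines, and the rule that the highest applicable acuity level wins are all assumptions made for the example.

```python
from datetime import datetime, timedelta

def classify_alert(history, now, score):
    """Return the acuity level for a new pRI score, or None if no alert fires.

    history: list of (timestamp, score) observations recorded before `now`.
    Assumption: percentage decrease is measured against the highest score
    observed in the lookback window; the source does not specify the baseline.
    """
    # Very high acuity: absolute score at or below 30.
    if score <= 30:
        return "very high"

    def pct_drop(window_hours):
        cutoff = now - timedelta(hours=window_hours)
        prior = [s for t, s in history if cutoff <= t < now]
        if not prior:
            return 0.0
        baseline = max(prior)
        if baseline <= 0:
            # Assumption: a relative drop is undefined for a non-positive baseline.
            return 0.0
        return (baseline - score) / baseline * 100

    # High acuity: decrease of at least 40% within 6 hours.
    if pct_drop(6) >= 40:
        return "high"
    # Medium acuity: decrease of at least 30% within 24 hours.
    if pct_drop(24) >= 30:
        return "medium"
    return None

t0 = datetime(2013, 1, 1, 8, 0)
history = [(t0, 80)]
print(classify_alert(history, t0 + timedelta(hours=5), 45))   # 43.75% drop within 6 hours
print(classify_alert(history, t0 + timedelta(hours=12), 55))  # 31.25% drop within 24 hours
```

Checking the most severe rule first reflects the graduated series of alerts described in the text, but the ordering used in the actual product is not stated in the source.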

Development of the Source Dataset

In 2014, CHOP shared one year of clinical data with PeraHealth as part of the process of deciding whether or not to implement the pRI. The pRI algorithm retrospectively generated scores and acuity alerts for all CHOP patients who experienced CDEs between January 1, 2013, and December 31, 2013. The pRI algorithm was not active in the hospital environment during this time period; the scores and acuity alerts were not visible to clinicians. This dataset was provided to the investigators at CHOP to conduct this project.

Data Collection

Pediatric intensive care nurses trained in clinical research data abstraction from the CHOP Critical Care Center for Evidence and Outcomes performed the chart review for this study. Chart abstraction comparisons were completed on the first 15 charts to ensure interrater reliability, and additional quality assurance checks were performed on intermittent charts to ensure consistency and definition adherence. We managed all data using Research Electronic Data Capture.9

To study the value of alerts labeled as “true positives,” we restricted the dataset to CDEs in which acuity alert(s) within the prior 72 hours would have been triggered if the pRI had been in clinical use at the time.

To identify the clinical relationship between pRI and CDE, we reviewed each chart with the goal of determining whether the preceding acuity alerts were clinically associated with the etiology of the CDE. We determined the etiology of the CDE by reviewing the cause(s) identified in the note written by rapid response or code blue team responders or by the admitting clinical team after transfer to the ICU. We then used a tool provided by PeraHealth to identify the specific score components that led to worsening pRI. If the score components that worsened were (a) consistent with a clinical change as opposed to a documentation artifact and (b) an organ system change that was plausibly related to the CDE etiology, we concluded that the alert was clinically related to the etiology of the CDE.

We defined documentation artifacts as instances in nursing documentation in which a finding unrelated to the patient’s acute health status, such as a scar, was newly documented as abnormal and led to worsening pRI. Any cases in which the clinical relevance was unclear underwent review by additional members of the team.

To determine the temporal relationship among pRI, CDE, and clinician awareness or action, we then systematically assessed whether the acuity alerts preceded documented evidence that clinicians had recognized deterioration or escalated care. We made the a priori decision that acuity alerts that occurred more than 24 hours prior to a deterioration event had questionable clinical actionability. Therefore, we restricted this next analysis to CDEs with acuity alerts during the 24 hours prior to a CDE. We reviewed time-stamped progress notes written by clinicians in the 24-hour period prior to the time of the CDE and identified whether the notes reflected an adverse change in patient status or a clinical intervention. We then compared the times of these notes with the times of the alerts and CDEs. Given that documentation of change in clinical status often occurs after clinical intervention, we also reviewed new orders placed in the 24 hours prior to each CDE to determine escalation of care. We identified the following orders as reflective of escalation of care independent of specific disease process: administration of intravenous fluid bolus, blood product, steroid, or antibiotic; increased respiratory support; new imaging studies; and new laboratory studies. We then compared the time of each order with the time of the alert and CDE.

RESULTS

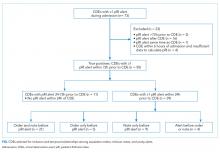

During the study period, 73 events met the CDE criteria and had a pRI alert during admission. Of the 73 events, 50 would have triggered at least one pRI alert in the 72-hour period leading up to the CDE (sensitivity 68%). Of the 50 events, 39 generated pRI alerts in the 24 hours leading up to the event, and 11 others generated pRI alerts between 24 and 72 hours prior to the event but did not generate any alerts during the 24 hours leading up to the event (Figure).
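The counts in this paragraph can be checked with a few lines of arithmetic. The sketch below uses only the numbers reported above; the variable names are chosen for illustration.

```python
# Counts reported in the Results paragraph above.
total_cdes = 73          # CDEs with a pRI alert during admission
alert_within_72h = 50    # CDEs with at least one alert in the prior 72 hours
alert_within_24h = 39    # CDEs with an alert in the prior 24 hours
alert_24_to_72h = 11     # CDEs with alerts only between 24 and 72 hours prior

# The two subgroups partition the 50 "true positive" events.
assert alert_within_24h + alert_24_to_72h == alert_within_72h

sensitivity = alert_within_72h / total_cdes
share_within_24h = alert_within_24h / alert_within_72h

print(f"Sensitivity (72-hour window): {sensitivity:.0%}")
print(f"True positives with an alert within 24 hours: {share_within_24h:.0%}")
```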

Patient Characteristics

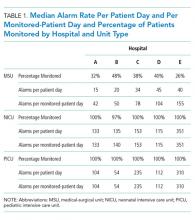

The 50 CDEs labeled as true positives occurred in 46 unique patients. Table 1 displays the event characteristics.

Acuity Alerts

A total of 79 pRI alerts preceded the 50 CDEs. Of these acuity alerts, 44 (56%) were medium acuity alerts, 17 (22%) were high acuity alerts, and 18 (23%) were very high acuity alerts. Of the 50 CDEs that would have triggered pRI alerts, 33 (66%) would have triggered a single acuity alert and 17 (34%) would have triggered multiple acuity alerts.

Of the 50 CDEs, 39 (78%) had a preceding acuity alert within 24 hours prior to the CDE. In these cases, the alert preceded the CDE by a median of 3.1 hours (interquartile range of 0.7 to 10.3 hours).

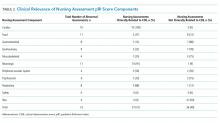

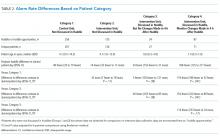

We assessed the score components that caused each alert to trigger. All of the vital sign and laboratory components were assessed as clinically related to the CDE’s etiology. By contrast, about half of nursing assessment components were assessed as clinically related to the etiology of the CDE (Table 2). Abnormal cardiac, respiratory, and neurologic assessments were most frequently assessed as clinically relevant.

Escalation Orders

To determine whether the pRI alert would have preceded the earliest documented treatment efforts, we restricted evaluation to the 39 CDEs that had at least one alert in the 24-hour window prior to the CDE. When we reviewed escalation orders placed by clinicians, we found that in 26 cases (67%), the first clinician order reflecting escalation of care would have preceded the first pRI alert within the 24-hour period prior to the CDE. In 13 cases (33%), the first pRI alert would have preceded the first escalation order placed by the clinician. The first pRI alert and the first escalation order would have occurred within the same 1-hour period in 6 of these cases.

Provider Notes

Temporal Relationships

In Supplement 2, we present the proportion of CDEs in which the order or note preceded the pRI alert for each abnormal organ system.

The Figure shows the temporal relationships among escalation orders, clinician notes, and acuity alerts for the 39 CDEs with one or more alerts in the 24 hours leading up to the event. In 21 cases (54%), both an escalation order and a note preceded the first acuity alert. In 14 cases (36%), either an escalation order or a note preceded the first acuity alert. In four cases (10%), the alert preceded any documented evidence that clinicians had recognized deterioration or escalated care.
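The three-way grouping above can be expressed as a simple comparison of timestamps. The following sketch is illustrative only: the function name `categorize`, the category labels, and the rule that an order or note must be strictly earlier than the alert to count as preceding it are assumptions, not the abstraction procedure used in the study.

```python
from datetime import datetime

def categorize(first_alert, first_order, first_note):
    """Group a CDE by whether an escalation order and/or a note preceded the first alert.

    first_order and first_note may be None when nothing was documented
    in the 24 hours before the CDE.
    """
    order_first = first_order is not None and first_order < first_alert
    note_first = first_note is not None and first_note < first_alert
    if order_first and note_first:
        return "both"
    if order_first or note_first:
        return "either"
    return "alert_first"

# Hypothetical timestamps for a single CDE.
alert = datetime(2013, 5, 1, 12, 0)
print(categorize(alert, datetime(2013, 5, 1, 10, 0), datetime(2013, 5, 1, 11, 0)))

# Reported counts for the 39 CDEs with an alert in the prior 24 hours.
counts = {"both": 21, "either": 14, "alert_first": 4}
total = sum(counts.values())
percentages = {k: round(v / total * 100) for k, v in counts.items()}
print(total, percentages)
```

With the reported counts, the categories sum to 39 and the rounded percentages match those given in the text (54%, 36%, and 10%).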

DISCUSSION

The main finding of this study is that 90% of CDEs that generated “true positive” pRI alerts had evidence suggesting that clinicians had already recognized deterioration and/or were already escalating care before most pRI alerts would have been triggered.

The impacts of early warning scores on patient safety outcomes are not well established. In a recent 21-hospital cluster randomized trial of the BedsidePEWS, a pediatric early warning score system, investigators found that implementing the system did not significantly decrease all-cause mortality in hospitalized children, although hospitals using the BedsidePEWS had lower rates of significant CDEs.10 In other studies, early warning scores were often coimplemented with rapid response teams, and separating the incremental benefit of the scoring tool from the availability of a rapid response team is usually not possible.11

Therefore, the benefits of early warning scores are often inferred based on their test characteristics (eg, sensitivity and positive predictive value).12 Sensitivity, which is the proportion of patients who deteriorated and also triggered the early warning score within a reasonable time window preceding the event, is an important consideration when deciding whether an early warning score is worth implementing. A challenging follow-up question that goes beyond sensitivity is how often an early warning score adds new knowledge by identifying patients on a path toward deterioration who were not yet recognized. This study is the first to address that follow-up question. Our results revealed that the score appeared to precede evidence of clinician recognition of deterioration in 10% of CDEs. In some patients, the alert could have contributed to a detection of deterioration that was not previously evident. In the portion of CDEs in which the alert and escalation order or note occurred within the same one-hour window, the alert could have been used as confirmation of clinical suspicion. Notably, we did not evaluate the 16 cases in which a CDE preceded any pRI alert because we chose to focus on “true positive” cases in which pRI alerts preceded CDEs. These events could have had timely recognition by clinicians that we did not capture, so these results may provide an overestimation of CDEs in which the pRI preceded clinician recognition.

Prior work has described a range of mechanisms by which early warning scores can impact patient safety.13 The results of this study suggest limited incremental benefit for the pRI to alert physicians and nurses to new concerning changes at this hospital, although the benefits to low-resourced community hospitals that care for children may be great. The pRI score may also serve as evidence that empowers nurses to overcome barriers to further escalate care, even if the process of escalation has already begun. In addition to empowering nurses, the score may support trainees and clinicians with varying levels of pediatric expertise in the decision to escalate care. Evaluating these potential benefits would require prospective study.

We used the pRI alerts as they were already defined by PeraHealth for CHOP, and different alert thresholds may change score performance. Our study did not identify additional variables to improve score performance, but they can be investigated in future research.

This study had several limitations. First, this work is a single-center study with highly skilled pediatric providers, a mature rapid response system, and low rates of cardiopulmonary arrest outside ICUs. Therefore, our results may not be immediately generalizable. In a community environment with nurses and physicians who are less experienced in caring for ill children, an early warning score with high sensitivity may be beneficial in ensuring patient safety.

Second, by using escalation orders and notes from the patient chart, we did not capture all the undocumented ways in which clinicians demonstrate awareness of deterioration. For example, a resident may alert the attending on service or a team may informally request consultation with a specialist. We also gave equal weight to escalation orders and clinician notes as evidence of recognition of deterioration. It could be that either orders or notes more closely correlated with clinician awareness.

Finally, the data were from 2013. Although the score components have not changed, efforts to standardize nursing assessments may have altered the performance of the score in the intervening years.

CONCLUSIONS

In most patients who had a CDE at a large freestanding children’s hospital, escalation orders or documented changes in patient status would have occurred before a pRI alert. However, in a minority of patients, the alert could have contributed to the detection of deterioration that was not previously evident.

Disclosures

The authors have nothing to disclose.

Funding

The study was supported by funds from the Department of Biomedical and Health Informatics at Children’s Hospital of Philadelphia. PeraHealth, the company that sells the Rothman Index software, provided a service to the investigators but no funding. They applied their proprietary scoring algorithm to the data from Children’s Hospital of Philadelphia to generate alerts retrospectively. This service was provided free of charge in 2014 during the time period when Children’s Hospital of Philadelphia was considering purchasing and implementing PeraHealth software, which it subsequently did. We did not receive any funding for the study from PeraHealth. PeraHealth personnel did not influence the study design, the interpretation of data, the writing of the report, or the decision to submit the article for publication.

1. Alam N, Hobbelink EL, van Tienhoven AJ, van de Ven PM, Jansma EP, Nanayakkara PWB. The impact of the use of the Early Warning Score (EWS) on patient outcomes: a systematic review. Resuscitation. 2014;85(5):587-594. doi: 10.1016/j.resuscitation.2014.01.013.

2. Rothman MJ, Tepas JJ, Nowalk AJ, et al. Development and validation of a continuously age-adjusted measure of patient condition for hospitalized children using the electronic medical record. J Biomed Inform. 2017;66(Supplement C):180-193. doi: 10.1016/j.jbi.2016.12.013.

3. Akre M, Finkelstein M, Erickson M, Liu M, Vanderbilt L, Billman G. Sensitivity of the pediatric early warning score to identify patient deterioration. Pediatrics. 2010;125(4):e763-e769. doi: 10.1542/peds.2009-0338.

4. Seiger N, Maconochie I, Oostenbrink R, Moll HA. Validity of different pediatric early warning scores in the emergency department. Pediatrics. 2013;132(4):e841-e850. doi: 10.1542/peds.2012-3594.

5. Parshuram CS, Hutchison J, Middaugh K. Development and initial validation of the Bedside Paediatric Early Warning System score. Crit Care. 2009;13(4):R135. doi: 10.1186/cc7998.

6. Hollis RH, Graham LA, Lazenby JP, et al. A role for the early warning score in early identification of critical postoperative complications. Ann Surg. 2016;263(5):918-923. doi: 10.1097/SLA.0000000000001514.

7. Bonafide CP, Roberts KE, Priestley MA, et al. Development of a pragmatic measure for evaluating and optimizing rapid response systems. Pediatrics. 2012;129(4):e874-e881. doi: 10.1542/peds.2011-2784.

8. Rothman MJ, Rothman SI, Beals J. Development and validation of a continuous measure of patient condition using the electronic medical record. J Biomed Inform. 2013;46(5):837-848. doi: 10.1016/j.jbi.2013.06.011.

9. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research Electronic Data Capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi: 10.1016/j.jbi.2008.08.010.

10. Parshuram CS, Dryden-Palmer K, Farrell C, et al. Effect of a pediatric early warning system on all-cause mortality in hospitalized pediatric patients: the EPOCH randomized clinical trial. JAMA. 2018;319(10):1002-1012. doi: 10.1001/jama.2018.0948.

11. Bonafide CP, Localio AR, Roberts KE, Nadkarni VM, Weirich CM, Keren R. Impact of rapid response system implementation on critical deterioration events in children. JAMA Pediatr. 2014;168(1):25-33. doi: 10.1001/jamapediatrics.2013.3266.

12. Romero-Brufau S, Huddleston JM, Escobar GJ, Liebow M. Why the C-statistic is not informative to evaluate early warning scores and what metrics to use. Crit Care. 2015;19:285. doi: 10.1186/s13054-015-0999-1.

13. Bonafide CP, Roberts KE, Weirich CM, et al. Beyond statistical prediction: qualitative evaluation of the mechanisms by which pediatric early warning scores impact patient safety. J Hosp Med. 2013;8(5):248-253. doi: 10.1002/jhm.2026.

Patients at risk for clinical deterioration in the inpatient setting may not be identified efficiently or effectively by health care providers. Early warning systems that link clinical observations to rapid response mechanisms (such as medical emergency teams) have the potential to improve outcomes, but rigorous studies are lacking.1 The pediatric Rothman Index (pRI) is an automated early warning system sold by the company PeraHealth that is integrated with the electronic health record. The system incorporates vital signs, labs, and nursing assessments from existing electronic health record data to provide a single numeric score that generates alerts based on low absolute scores and acute decreases in score (low scores indicate high mortality risk).2 Automated alerts or rules based on the pRI score are meant to bring important changes in clinical status to the attention of clinicians.

Adverse outcomes (eg, unplanned intensive care unit [ICU] transfers and mortality) are associated with low pRI scores, and scores appear to decline prior to such events.2 However, the limitation of this and other studies evaluating the sensitivity of early warning systems3-6 is that the generated alerts are assigned “true positive” status if they precede clinical deterioration, regardless of whether or not they provide meaningful information to the clinicians caring for the patients. There are two potential critiques of this approach. First, the alert may have preceded a deterioration event but may not have been clinically relevant (eg, an alert triggered by a finding unrelated to the patient’s acute health status, such as a scar that was newly documented as an abnormal skin finding and as a result led to a worsening in the pRI). Second, even if the preceding alert demonstrated clinical relevance to a deterioration event, the clinicians at the bedside may have been aware of the patient’s deterioration for hours and have already escalated care. In this situation, the alert would simply confirm what the clinician already knew.

To better understand the relationship between early warning system acuity alerts and clinical practice, we examined a cohort of hospitalized patients who experienced a critical deterioration event (CDE)7 and who would have triggered a preceding pRI alert. We evaluated the clinical relationship of the alert to the CDE (ie, whether the alert reflected physiologic changes related to a CDE or was instead an artifact of documentation) and identified whether the alert would have preceded evidence that clinicians recognized deterioration or escalated care.

METHODS

Patients and Setting

This retrospective cross-sectional study was performed at Children’s Hospital of Philadelphia (CHOP), a freestanding children’s hospital with 546 beds. Eligible patients were hospitalized on nonintensive care, noncardiology, surgical wards between January 1, 2013, and December 31, 2013. The CHOP Institutional Review Board (IRB) approved the study with waivers of consent and assent. A HIPAA Business Associate Agreement and an IRB Reliance Agreement were in place with PeraHealth to permit data transfer.

Definition of Critical Deterioration Events

Critical deterioration events (CDEs) were defined according to an existing, validated measure7 as unplanned transfers to the ICU with continuous or bilevel positive airway pressure, tracheal intubation, and/or vasopressor infusion in the 12 hours after transfer. At CHOP, all unplanned ICU transfers are routed through the hospital’s rapid response or code blue teams, so these patients were identified using an existing database managed by the CHOP Resuscitation Committee. In the database, the elements of CDEs are entered as part of ongoing quality improvement activities. The time of CDE was defined as the time of the rapid response call precipitating unplanned transfer to the ICU.

The Pediatric Rothman Index

The pRI is an automated acuity score that has been validated in hospitalized pediatric patients.2 The pRI is calculated using existing variables from the electronic health record, including manually entered vital signs, laboratory values, cardiac rhythm, and nursing assessments of organ systems. The weights assigned to continuous variables are a function of deviation from the norm.2,8 (See Supplement 1 for a complete list of variables.)

The pRI is integrated with the electronic health record and automatically generates a score each time a new data observation becomes available. Changes in score over time and low absolute scores generate a graduated series of alerts ranging from medium to very high acuity. This analysis used PeraHealth’s standard pRI alerts. Medium acuity alerts occurred when the pRI score decreased by ≥30% in 24 hours. A high acuity alert occurred when the pRI score decreased by ≥40% in 6 hours. A very high acuity alert occurred when the pRI absolute score was ≤ 30.

Development of the Source Dataset

In 2014, CHOP shared one year of clinical data with PeraHealth as part of the process of deciding whether or not to implement the pRI. The pRI algorithm retrospectively generated scores and acuity alerts for all CHOP patients who experienced CDEs between January 1, 2013, and December 31, 2013. The pRI algorithm was not active in the hospital environment during this time period; the scores and acuity alerts were not visible to clinicians. This dataset was provided to the investigators at CHOP to conduct this project.

Data Collection

Pediatric intensive care nurses trained in clinical research data abstraction from the CHOP Critical Care Center for Evidence and Outcomes performed the chart review for this study. Chart abstraction comparisons were completed on the first 15 charts to ensure interrater reliability, and additional quality assurance checks were performed on intermittent charts to ensure consistency and definition adherence. We managed all data using Research Electronic Data Capture.9

To study the value of alerts labeled as “true positives,” we restricted the dataset to CDEs in which acuity alert(s) within the prior 72 hours would have been triggered if the pRI had been in clinical use at the time.

To identify the clinical relationship between pRI and CDE, we reviewed each chart with the goal of determining whether the preceding acuity alerts were clinically associated with the etiology of the CDE. We determined the etiology of the CDE by reviewing the cause(s) identified in the note written by rapid response or code blue team responders or by the admitting clinical team after transfer to the ICU. We then used a tool provided by PeraHealth to identify the specific score components that led to worsening pRI. If the score components that worsened were (a) consistent with a clinical change as opposed to a documentation artifact and (b) an organ system change that was plausibly related to the CDE etiology, we concluded that the alert was clinically related to the etiology of the CDE.

We defined documentation artifacts as instances in nursing documentation in which a finding unrelated to the patient’s acute health status, such as a scar, was newly documented as abnormal and led to worsening pRI. Any cases in which the clinical relevance was unclear underwent review by additional members of the team

To determine the temporal relationship among pRI, CDE, and clinician awareness or action, we then sought to systematically determine whether the preceding acuity alerts preceded documented evidence of clinicians recognizing deterioration or escalation of care. We made the a priori decision that acuity alerts that occurred more than 24 hours prior to a deterioration event had questionable clinical actionability. Therefore, we restricted this next analysis to CDEs with acuity alerts during the 24 hours prior to a CDE. We reviewed time-stamped progress notes written by clinicians in the 24 hours period prior to the time of the CDE and identified whether the notes reflected an adverse change in patient status or a clinical intervention. We then compared the times of these notes with the times of the alerts and CDEs. Given that documentation of change in clinical status often occurs after clinical intervention, we also reviewed new orders placed in the 24 hours prior to each CDE to determine escalation of care. We identified the following orders as reflective of escalation of care independent of specific disease process: administration of intravenous fluid bolus, blood product, steroid, or antibiotic, increased respiratory support, new imaging studies, and new laboratory studies. We then compared the time of each order with the time of the alert and CDE.

RESULTS

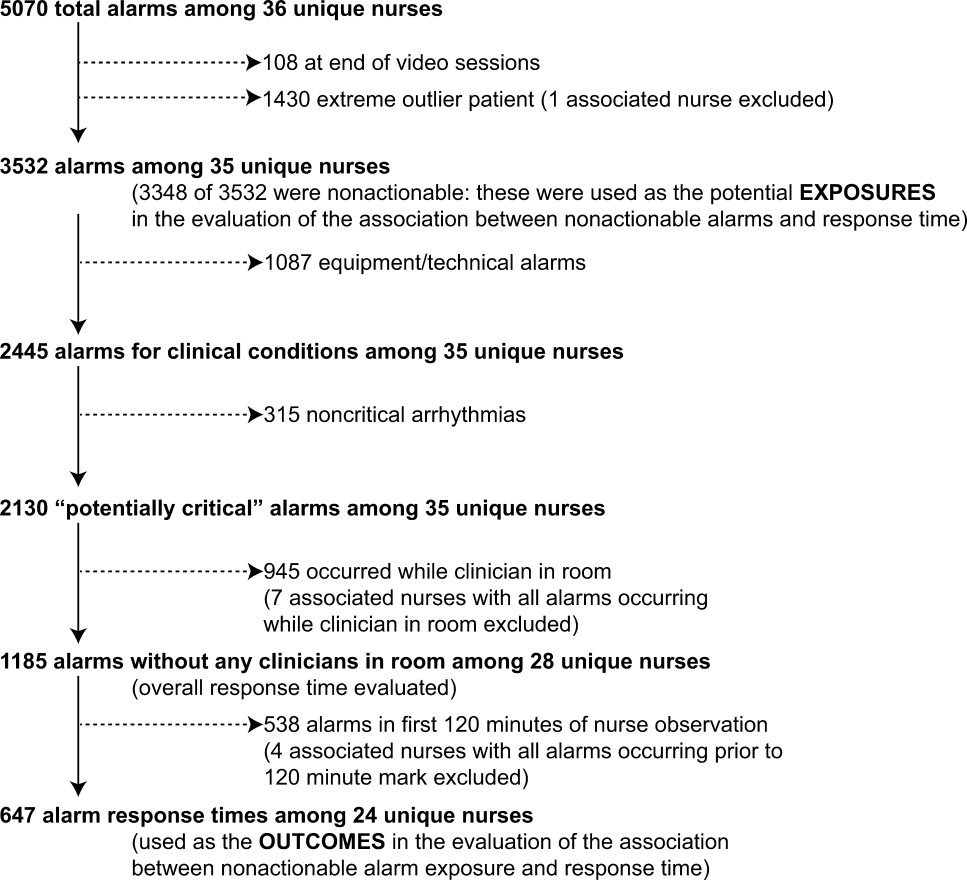

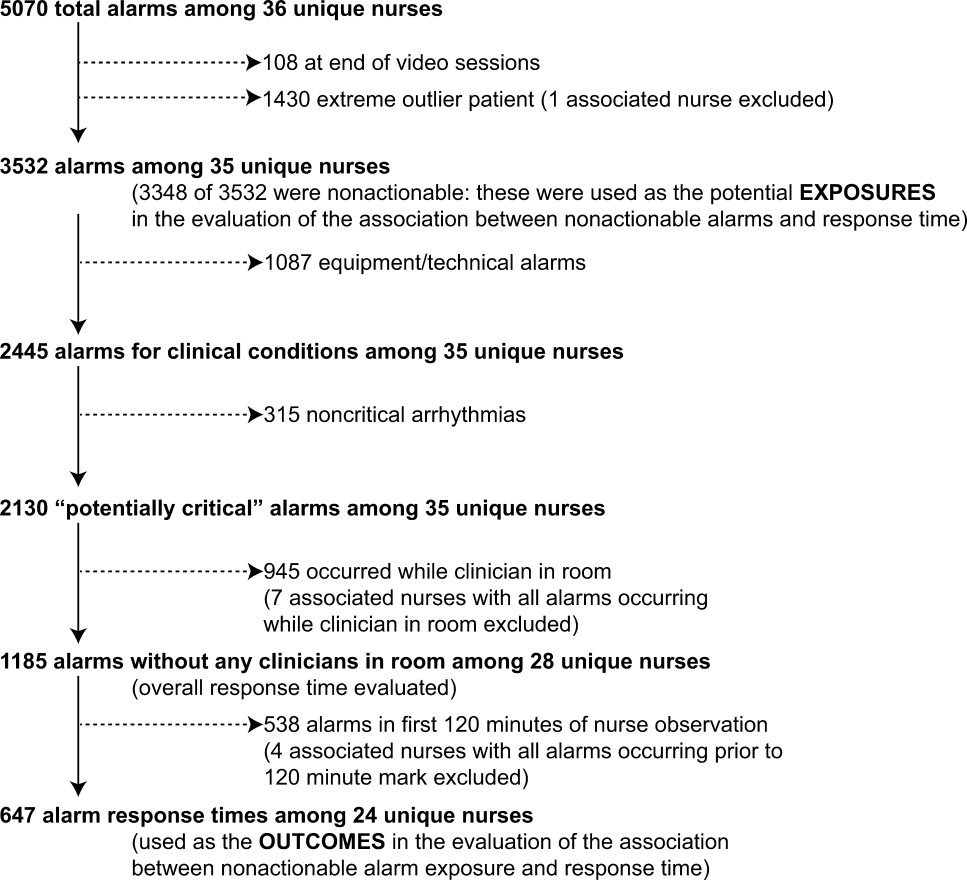

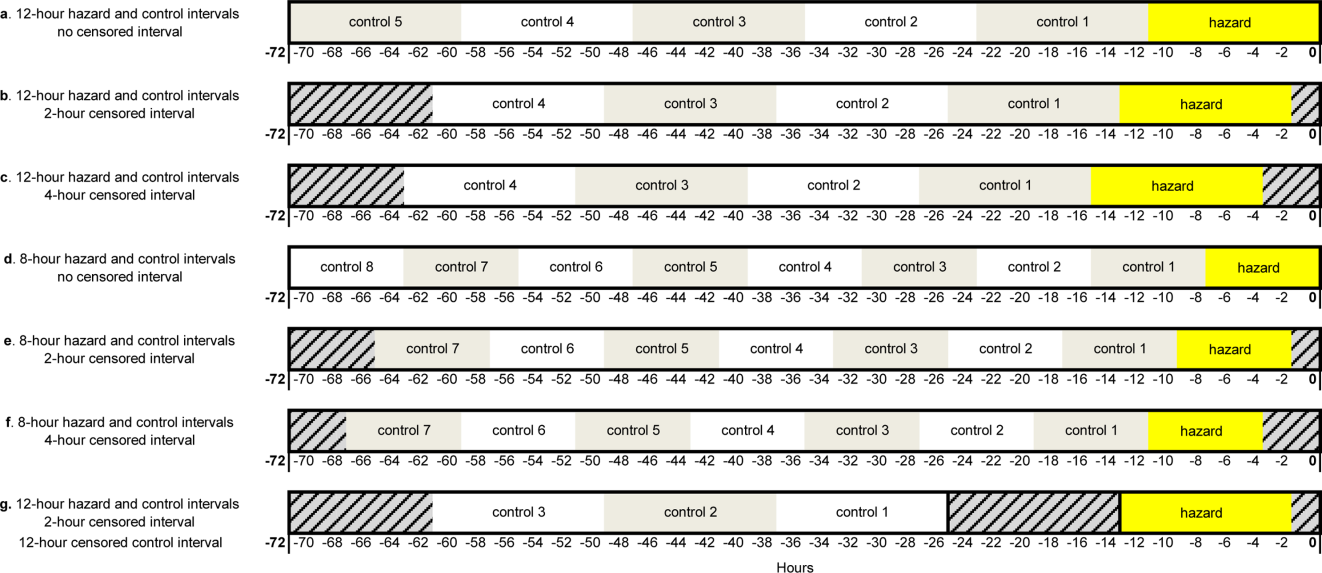

During the study period, 73 events met the CDE criteria and had a pRI alert during admission. Of the 73 events, 50 would have triggered at least one pRI alert in the 72-hour period leading up to the CDE (sensitivity 68%). Of the 50 events, 39 generated pRI alerts in the 24 hours leading up to the event, and 11 others generated pRI alerts between 24 and 72 hours prior to the event but did not generate any alerts during the 24 hours leading up to the event (Figure).

Patient Characteristics

The 50 CDEs labeled as true positives occurred in 46 unique patients. Table 1 displays the event characteristics.

Acuity Alerts

A total of 79 pRI alerts preceded the 50 CDEs. Of these acuity alerts, 44 (56%) were medium acuity alerts, 17 (22%) were high acuity alerts, and 18 (23%) were very high acuity alerts. Of the 50 CDEs that would have triggered pRI alerts, 33 (66%) would have triggered a single acuity alert and 17 (34%) would have triggered multiple acuity alerts.

Of the 50 CDEs, 39 (78%) had a preceding acuity alert within 24 hours prior to the CDE. In these cases, the alert preceded the CDE by a median of 3.1 hours (interquartile range of 0.7 to 10.3 hours).

We assessed the score components that caused each alert to trigger. All of the vital sign and laboratory components were assessed as clinically related to the CDE’s etiology. By contrast, about half of nursing assessment components were assessed as clinically related to the etiology of the CDE (Table 2). Abnormal cardiac, respiratory, and neurologic assessments were most frequently assessed as clinically relevant.

Escalation Orders

To determine whether the pRI alert would have preceded the earliest documented treatment efforts, we restricted evaluation to the 39 CDEs that had at least one alert in the 24-hour window prior to the CDE. When we reviewed escalation orders placed by clinicians, we found that in 26 cases (67%), the first clinician order reflecting escalation of care would have preceded the first pRI alert within the 24-hour period prior to the CDE. In 13 cases (33%), the first pRI alert would have preceded the first escalation order placed by the clinician. The first pRI alert and the first escalation order would have occurred within the same 1-hour period in 6 of these cases.

Provider Notes

Temporal Relationships

In Supplement 2, we present the proportion of CDEs in which the order or note preceded the pRI alert for each abnormal organ system.

The Figure shows the temporal relationships among escalation orders, clinician notes, and acuity alerts for the 39 CDEs with one or more alerts in the 24 hours leading up to the event. In 21 cases (54%), both an escalation order and a note preceded the first acuity alert. In 14 cases (36%), either an escalation order or a note preceded the first acuity alert. In four cases (10%), the alert preceded any documented evidence that clinicians had recognized deterioration or escalating care.

DISCUSSION

The main finding of this study is that 90% of the CDEs with a “true positive” pRI alert in the preceding 24 hours had evidence suggesting that clinicians had already recognized deterioration and/or were already escalating care before the first pRI alert would have been triggered.

The impacts of early warning scores on patient safety outcomes are not well established. In a recent 21-hospital cluster randomized trial of the BedsidePEWS, a pediatric early warning score system, investigators found that implementing the system did not significantly decrease all-cause mortality in hospitalized children, although hospitals using the BedsidePEWS had low rates of significant CDEs.10 In other studies, early warning scores were often coimplemented with rapid response teams, and separating the incremental benefit of the scoring tool from the availability of a rapid response team is usually not possible.11

Therefore, the benefits of early warning scores are often inferred based on their test characteristics (eg, sensitivity and positive predictive value).12 Sensitivity, which is the proportion of patients who deteriorated and also triggered the early warning score within a reasonable time window preceding the event, is an important consideration when deciding whether an early warning score is worth implementing. A challenging follow-up question that goes beyond sensitivity is how often an early warning score adds new knowledge by identifying patients on a path toward deterioration who were not yet recognized. This study is the first to address that follow-up question. Our results revealed that the score appeared to precede evidence of clinician recognition of deterioration in 10% of CDEs. In some patients, the alert could have contributed to a detection of deterioration that was not previously evident. In the portion of CDEs in which the alert and escalation order or note occurred within the same one-hour window, the alert could have been used as confirmation of clinical suspicion. Notably, we did not evaluate the 16 cases in which a CDE preceded any pRI alert because we chose to focus on “true positive” cases in which pRI alerts preceded CDEs. These events could have had timely recognition by clinicians that we did not capture, so these results may provide an overestimation of CDEs in which the pRI preceded clinician recognition.

Prior work has described a range of mechanisms by which early warning scores can impact patient safety.13 The results of this study suggest limited incremental benefit for the pRI to alert physicians and nurses to new concerning changes at this hospital, although the benefits to low-resourced community hospitals that care for children may be great. The pRI score may also serve as evidence that empowers nurses to overcome barriers to further escalate care, even if the process of escalation has already begun. In addition to empowering nurses, the score may support trainees and clinicians with varying levels of pediatric expertise in the decision to escalate care. Evaluating these potential benefits would require prospective study.

We used the pRI alerts as they were already defined by PeraHealth for CHOP, and different alert thresholds may change score performance. Our study did not identify additional variables to improve score performance, but they can be investigated in future research.

This study had several limitations. First, this work is a single-center study with highly skilled pediatric providers, a mature rapid response system, and low rates of cardiopulmonary arrest outside ICUs. Therefore, the results are not immediately generalizable. In a community environment with nurses and physicians who are less experienced in caring for ill children, an early warning score with high sensitivity may be beneficial in ensuring patient safety.

Second, by using escalation orders and notes from the patient chart, we did not capture all the undocumented ways in which clinicians demonstrate awareness of deterioration. For example, a resident may alert the attending on service or a team may informally request consultation with a specialist. We also gave equal weight to escalation orders and clinician notes as evidence of recognition of deterioration. It could be that either orders or notes more closely correlated with clinician awareness.

Finally, the data were from 2013. Although the score components have not changed, efforts to standardize nursing assessments may have altered the performance of the score in the intervening years.

CONCLUSIONS

In most patients who had a CDE at a large freestanding children’s hospital, escalation orders or documented changes in patient status would have occurred before a pRI alert. However, in a minority of patients, the alert could have contributed to the detection of deterioration that was not previously evident.

Disclosures

The authors have nothing to disclose.

Funding

The study was supported by funds from the Department of Biomedical and Health Informatics at Children’s Hospital of Philadelphia. PeraHealth, the company that sells the Rothman Index software, provided a service to the investigators but no funding. They applied their proprietary scoring algorithm to the data from Children’s Hospital of Philadelphia to generate alerts retrospectively. This service was provided free of charge in 2014 during the time period when Children’s Hospital of Philadelphia was considering purchasing and implementing PeraHealth software, which it subsequently did. We did not receive any funding for the study from PeraHealth. PeraHealth personnel did not influence the study design, the interpretation of data, the writing of the report, or the decision to submit the article for publication.

To better understand the relationship between early warning system acuity alerts and clinical practice, we examined a cohort of hospitalized patients who experienced a critical deterioration event (CDE)7 and who would have triggered a preceding pRI alert. We evaluated the clinical relationship of the alert to the CDE (ie, whether the alert reflected physiologic changes related to a CDE or was instead an artifact of documentation) and identified whether the alert would have preceded evidence that clinicians recognized deterioration or escalated care.

METHODS

Patients and Setting

This retrospective cross-sectional study was performed at Children’s Hospital of Philadelphia (CHOP), a freestanding children’s hospital with 546 beds. Eligible patients were hospitalized on nonintensive care, noncardiology, surgical wards between January 1, 2013, and December 31, 2013. The CHOP Institutional Review Board (IRB) approved the study with waivers of consent and assent. A HIPAA Business Associate Agreement and an IRB Reliance Agreement were in place with PeraHealth to permit data transfer.

Definition of Critical Deterioration Events

Critical deterioration events (CDEs) were defined according to an existing, validated measure7 as unplanned transfers to the ICU with continuous or bilevel positive airway pressure, tracheal intubation, and/or vasopressor infusion in the 12 hours after transfer. At CHOP, all unplanned ICU transfers are routed through the hospital’s rapid response or code blue teams, so these patients were identified using an existing database managed by the CHOP Resuscitation Committee. In the database, the elements of CDEs are entered as part of ongoing quality improvement activities. The time of CDE was defined as the time of the rapid response call precipitating unplanned transfer to the ICU.
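
The CDE definition above can be read as a simple rule over transfer records. The Python sketch below is illustrative only: the record fields (`transfer_time`, `planned`, `interventions`) and the intervention labels are hypothetical names and do not reflect the structure of the CHOP Resuscitation Committee database.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Interventions that qualify an unplanned ICU transfer as a CDE (per the definition above).
# The string labels are hypothetical.
QUALIFYING = {"cpap_or_bipap", "tracheal_intubation", "vasopressor_infusion"}

@dataclass
class IcuTransfer:
    transfer_time: datetime
    planned: bool
    # Hypothetical field: (intervention name, start time) pairs recorded after transfer.
    interventions: list = field(default_factory=list)

def is_cde(t: IcuTransfer, window_hours: int = 12) -> bool:
    """Return True if the transfer is unplanned and a qualifying intervention
    started within `window_hours` after transfer."""
    if t.planned:
        return False
    cutoff = t.transfer_time + timedelta(hours=window_hours)
    return any(
        name in QUALIFYING and t.transfer_time <= start <= cutoff
        for name, start in t.interventions
    )
```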

The Pediatric Rothman Index

The pRI is an automated acuity score that has been validated in hospitalized pediatric patients.2 The pRI is calculated using existing variables from the electronic health record, including manually entered vital signs, laboratory values, cardiac rhythm, and nursing assessments of organ systems. The weights assigned to continuous variables are a function of deviation from the norm.2,8 (See Supplement 1 for a complete list of variables.)

The pRI is integrated with the electronic health record and automatically generates a score each time a new data observation becomes available. Changes in score over time and low absolute scores generate a graduated series of alerts ranging from medium to very high acuity. This analysis used PeraHealth’s standard pRI alerts. A medium acuity alert occurred when the pRI score decreased by ≥30% in 24 hours. A high acuity alert occurred when the pRI score decreased by ≥40% in 6 hours. A very high acuity alert occurred when the pRI absolute score was ≤30.
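
A minimal Python sketch of the three alert thresholds is given below. It is not PeraHealth's implementation, which is proprietary. Two points are assumptions: a percentage decrease is measured from the highest score in the lookback window to the current score, and when several thresholds are met the most severe level is reported. The function name `acuity_alert` is hypothetical.

```python
from datetime import datetime, timedelta

def acuity_alert(history, now, current):
    """Classify the current score against the three thresholds described above.

    history: list of (timestamp, score) pairs observed before `now`.
    Returns "very high", "high", "medium", or None.
    ASSUMPTION: a percentage decrease is measured from the highest score in the
    lookback window to the current score; the vendor's rule may differ.
    """
    def pct_drop(hours):
        start = now - timedelta(hours=hours)
        window = [s for t, s in history if start <= t <= now]
        if not window:
            return 0.0
        peak = max(window)
        return 100.0 * (peak - current) / peak if peak > 0 else 0.0

    # ASSUMPTION: the most severe matching level wins.
    if current <= 30:
        return "very high"
    if pct_drop(6) >= 40:
        return "high"
    if pct_drop(24) >= 30:
        return "medium"
    return None
```

For example, with prior scores of 80 (20 hours ago) and 70 (3 hours ago), a current score of 55 is a 31% drop from the 24-hour peak and would be classed as medium under these assumptions.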

Development of the Source Dataset

In 2014, CHOP shared one year of clinical data with PeraHealth as part of the process of deciding whether or not to implement the pRI. The pRI algorithm retrospectively generated scores and acuity alerts for all CHOP patients who experienced CDEs between January 1, 2013, and December 31, 2013. The pRI algorithm was not active in the hospital environment during this time period; the scores and acuity alerts were not visible to clinicians. This dataset was provided to the investigators at CHOP to conduct this project.

Data Collection

Pediatric intensive care nurses trained in clinical research data abstraction from the CHOP Critical Care Center for Evidence and Outcomes performed the chart review for this study. Chart abstraction comparisons were completed on the first 15 charts to ensure interrater reliability, and additional quality assurance checks were performed on intermittent charts to ensure consistency and definition adherence. We managed all data using Research Electronic Data Capture.9

To study the value of alerts labeled as “true positives,” we restricted the dataset to CDEs in which acuity alert(s) within the prior 72 hours would have been triggered if the pRI had been in clinical use at the time.

To identify the clinical relationship between pRI and CDE, we reviewed each chart with the goal of determining whether the preceding acuity alerts were clinically associated with the etiology of the CDE. We determined the etiology of the CDE by reviewing the cause(s) identified in the note written by rapid response or code blue team responders or by the admitting clinical team after transfer to the ICU. We then used a tool provided by PeraHealth to identify the specific score components that led to worsening pRI. If the score components that worsened were (a) consistent with a clinical change as opposed to a documentation artifact and (b) an organ system change that was plausibly related to the CDE etiology, we concluded that the alert was clinically related to the etiology of the CDE.

We defined documentation artifacts as instances in nursing documentation in which a finding unrelated to the patient’s acute health status, such as a scar, was newly documented as abnormal and led to worsening pRI. Any cases in which the clinical relevance was unclear underwent review by additional members of the team.

To determine the temporal relationship among pRI, CDE, and clinician awareness or action, we then sought to systematically determine whether the preceding acuity alerts preceded documented evidence of clinicians recognizing deterioration or escalating care. We made the a priori decision that acuity alerts that occurred more than 24 hours prior to a deterioration event had questionable clinical actionability. Therefore, we restricted this next analysis to CDEs with acuity alerts during the 24 hours prior to a CDE. We reviewed time-stamped progress notes written by clinicians in the 24-hour period prior to the time of the CDE and identified whether the notes reflected an adverse change in patient status or a clinical intervention. We then compared the times of these notes with the times of the alerts and CDEs. Given that documentation of change in clinical status often occurs after clinical intervention, we also reviewed new orders placed in the 24 hours prior to each CDE to determine escalation of care. We identified the following orders as reflective of escalation of care independent of specific disease process: administration of intravenous fluid bolus, blood product, steroid, or antibiotic, increased respiratory support, new imaging studies, and new laboratory studies. We then compared the time of each order with the time of the alert and CDE.

RESULTS

During the study period, 73 events met the CDE criteria and had a pRI alert during admission. Of the 73 events, 50 would have triggered at least one pRI alert in the 72-hour period leading up to the CDE (sensitivity 68%). Of the 50 events, 39 generated pRI alerts in the 24 hours leading up to the event, and 11 others generated pRI alerts between 24 and 72 hours prior to the event but did not generate any alerts during the 24 hours leading up to the event (Figure).
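
The proportions in this paragraph follow directly from the reported counts. The short Python check below uses only those counts; the variable names are ours.

```python
def pct(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)

total_cdes = 73   # events meeting CDE criteria with a pRI alert during admission
alert_72h = 50    # CDEs with at least one alert in the prior 72 hours
alert_24h = 39    # of those, CDEs with an alert in the prior 24 hours

alert_24_to_72h = alert_72h - alert_24h        # alerts only 24 to 72 hours before the event
sensitivity_72h = pct(alert_72h, total_cdes)   # sensitivity over the 72-hour window
share_within_24h = pct(alert_24h, alert_72h)   # share of true positives alerting within 24 hours
```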

Patient Characteristics

The 50 CDEs labeled as true positives occurred in 46 unique patients. Table 1 displays the event characteristics.

1. Alam N, Hobbelink EL, van Tienhoven AJ, van de Ven PM, Jansma EP, Nanayakkara PWB. The impact of the use of the Early Warning Score (EWS) on patient outcomes: a systematic review. Resuscitation. 2014;85(5):587-594. doi: 10.1016/j.resuscitation.2014.01.013.

2. Rothman MJ, Tepas JJ, Nowalk AJ, et al. Development and validation of a continuously age-adjusted measure of patient condition for hospitalized children using the electronic medical record. J Biomed Inform. 2017;66(Supplement C):180-193. doi: 10.1016/j.jbi.2016.12.013.

3. Akre M, Finkelstein M, Erickson M, Liu M, Vanderbilt L, Billman G. Sensitivity of the pediatric early warning score to identify patient deterioration. Pediatrics. 2010;125(4):e763-e769. doi: 10.1542/peds.2009-0338.

4. Seiger N, Maconochie I, Oostenbrink R, Moll HA. Validity of different pediatric early warning scores in the emergency department. Pediatrics. 2013;132(4):e841-e850. doi: 10.1542/peds.2012-3594.

5. Parshuram CS, Hutchison J, Middaugh K. Development and initial validation of the Bedside Paediatric Early Warning System score. Crit Care Lond Engl. 2009;13(4):R135. doi: 10.1186/cc7998.

6. Hollis RH, Graham LA, Lazenby JP, et al. A role for the early warning score in early identification of critical postoperative complications. Ann Surg. 2016;263(5):918-923. doi: 10.1097/SLA.0000000000001514.

7. Bonafide CP, Roberts KE, Priestley MA, et al. Development of a pragmatic measure for evaluating and optimizing rapid response systems. Pediatrics. 2012;129(4):e874-e881. doi: 10.1542/peds.2011-2784.

8. Rothman MJ, Rothman SI, Beals J. Development and validation of a continuous measure of patient condition using the electronic medical record. J Biomed Inform. 2013;46(5):837-848. doi: 10.1016/j.jbi.2013.06.011.

9. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research Electronic Data Capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi: 10.1016/j.jbi.2008.08.010.

10. Parshuram CS, Dryden-Palmer K, Farrell C, et al. Effect of a pediatric early warning system on all-cause mortality in hospitalized pediatric patients: the EPOCH randomized clinical trial. JAMA. 2018;319(10):1002-1012. doi: 10.1001/jama.2018.0948.

11. Bonafide CP, Localio AR, Roberts KE, Nadkarni VM, Weirich CM, Keren R. Impact of rapid response system implementation on critical deterioration events in children. JAMA Pediatr. 2014;168(1):25-33. doi: 10.1001/jamapediatrics.2013.3266.

12. Romero-Brufau S, Huddleston JM, Escobar GJ, Liebow M. Why the C-statistic is not informative to evaluate early warning scores and what metrics to use. Crit Care. 2015;19:285. doi: 10.1186/s13054-015-0999-1.

13. Bonafide CP, Roberts KE, Weirich CM, et al. Beyond statistical prediction: qualitative evaluation of the mechanisms by which pediatric early warning scores impact patient safety. J Hosp Med. 2013;8(5):248-253. doi: 10.1002/jhm.2026.

© 2018 Society of Hospital Medicine.

Association of Weekend Admission and Weekend Discharge with Length of Stay and 30-Day Readmission in Children’s Hospitals

Increasingly, metrics such as length of stay (LOS) and readmissions are being utilized in the United States to assess quality of healthcare because these factors may represent opportunities to reduce cost and improve healthcare delivery.1-8 However, the relatively low rate of pediatric readmissions,9 coupled with limited data regarding recommended LOS or best practices to prevent readmissions in children, challenges the ability of hospitals to safely reduce LOS and readmission rates for children.10–12

In adults, weekend admission is associated with prolonged LOS, increased readmission rates, and increased risk of mortality.13-21 This association is referred to as the “weekend effect.” While the weekend effect has been examined in children, the results of these studies have been variable, with some studies supporting this association and others refuting it.22-31 In contrast to patient demographic and clinical characteristics that are known to affect LOS and readmissions,32 the weekend effect represents a potentially modifiable aspect of a hospitalization that could be targeted to improve healthcare delivery.

With increasing national attention toward improving quality of care and reducing LOS and healthcare costs, more definitive evidence of the weekend effect is necessary to prioritize resource use at both the local and national levels. Therefore, we sought to determine the association of weekend admission with LOS and of weekend discharge with 30-day readmissions among a national cohort of children. We hypothesized that children admitted on the weekend would have longer LOS, whereas those discharged on the weekend would have higher readmission rates.

METHODS

Study Design and Data Source

We conducted a multicenter, retrospective, cross-sectional study. Data were obtained from the Pediatric Health Information System (PHIS), an administrative and billing database of 46 free-standing tertiary care pediatric hospitals affiliated with the Children’s Hospital Association (Lenexa, Kansas). Patient data are de-identified within PHIS; however, encrypted patient identifiers allow individual patients to be followed across visits. This study was not considered human subjects research by the policies of the Cincinnati Children’s Hospital Institutional Review Board.

Participants

We included hospitalizations to a PHIS-participating hospital for children aged 0-17 years between October 1, 2014 and September 30, 2015. We excluded children who were transferred from/to another institution, left against medical advice, or died in the hospital because these indications may result in incomplete LOS information and would not consistently contribute to readmission rates. We also excluded birth hospitalizations and children admitted for planned procedures. Birth hospitalizations were defined as hospitalizations that began on the day of birth.

Main Exposures

No standard definition of weekend admission or discharge was identified in the literature.33 Thus, we defined a weekend admission as an admission between 3:00

Main Outcomes

Our outcomes included LOS for weekend admission and 30-day readmissions for weekend discharge. LOS, measured in hours, was defined using the reported admission and discharge times. Readmissions were defined as a return to the same hospital within the subsequent 30 days following discharge.
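The two outcome definitions above can be sketched in code. This is a minimal illustration rather than the study's actual derivation: the function names, the record layout, and the boundary handling (a return counts if it falls strictly after discharge and no later than 30 days afterward) are assumptions made for the example.

```python
from datetime import datetime, timedelta


def los_hours(admit_time, discharge_time):
    """Length of stay in hours from reported admission and discharge times."""
    return (discharge_time - admit_time).total_seconds() / 3600.0


def readmitted_within_30_days(index_hospital, index_discharge,
                              later_admissions, window_days=30):
    """Flag a return to the same hospital within the window after discharge.

    later_admissions: iterable of (hospital_id, admit_time) pairs for the
    same patient (hypothetical layout). Boundary handling is an assumption.
    """
    window_end = index_discharge + timedelta(days=window_days)
    return any(
        hospital == index_hospital and index_discharge < admit <= window_end
        for hospital, admit in later_admissions
    )


# Illustrative stay: admitted Jan 1 at 08:00, discharged Jan 3 at 01:00.
stay_hours = los_hours(datetime(2015, 1, 1, 8, 0), datetime(2015, 1, 3, 1, 0))
```

Restricting the check to the same hospital mirrors the definition in the text; returns to a different hospital are not counted in this sketch.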

Patient Demographics and Other Study Variables

Patient demographics included age, gender, race/ethnicity, payer, and median household income quartile based on the patient’s home ZIP code. Other study variables included presence of a complex chronic condition (CCC),34 technology dependence,34 number of chronic conditions of any complexity, admission through the emergency department, intensive care unit (ICU) admission, and case mix index. ICU admission and case mix index were chosen as markers for severity of illness. ICU admission was defined as the incurrence of ICU charges at any time following admission. Case mix index in PHIS is a relative weight assigned to each discharge based on the All Patient Refined Diagnosis Related Group (APR-DRG; 3M) assignment and APR-DRG severity of illness, which ranges from 1 (minor) to 4 (extreme). The weights are derived by the Children’s Hospital Association from the HCUP KID 2012 database as the ratio of the average cost for discharges within a specific APR-DRG and severity-of-illness combination to the average cost for all discharges in the database.
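The ratio that defines the case mix weights can be illustrated with a short sketch. The function name, the tuple layout, and the cost figures are hypothetical; the actual weights are derived by the Children’s Hospital Association from the HCUP KID 2012 database and are not reproduced here.

```python
from collections import defaultdict
from statistics import mean


def case_mix_weights(discharges):
    """Relative weight per (APR-DRG, severity) cell.

    discharges: iterable of (apr_drg, severity, cost) tuples (hypothetical
    layout). Weight = mean cost in the cell / mean cost over all discharges.
    """
    costs_by_cell = defaultdict(list)
    all_costs = []
    for apr_drg, severity, cost in discharges:
        costs_by_cell[(apr_drg, severity)].append(cost)
        all_costs.append(cost)
    overall_mean = mean(all_costs)
    return {cell: mean(costs) / overall_mean
            for cell, costs in costs_by_cell.items()}


# Made-up costs purely for illustration.
example = [("A", 1, 1000.0), ("A", 1, 3000.0), ("B", 4, 8000.0)]
weights = case_mix_weights(example)
```

In this toy input, the cell with a below-average mean cost receives a weight under 1 and the costlier cell a weight above 1, which is how the index acts as a marker of resource intensity.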

Statistical Analysis

Continuous variables were summarized with medians and interquartile ranges, while categorical variables were summarized with frequencies and percentages. Differences in admission and discharge characteristics between weekend and weekday were assessed using Wilcoxon rank sum tests for continuous variables and chi-square tests of association for categorical variables. We used generalized linear mixed modeling (GLMM) techniques to assess the impact of weekend admission on LOS and weekend discharge on readmission, adjusting for important patient demographic and clinical characteristics. Furthermore, we used GLMM point estimates to describe the variation across hospitals of the impact of weekday versus weekend care on LOS and readmissions. We assumed an underlying log-normal distribution for LOS and an underlying binomial distribution for 30-day readmission. All GLMMs included a random intercept for each hospital to account for patient clustering within a hospital. All statistical analyses were performed using SAS v.9.4 (SAS Institute, Cary, North Carolina), and P values <.05 were considered statistically significant.
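The descriptive steps of this analysis can be sketched with the Python standard library. This is an illustration under stated assumptions, not the SAS code used in the study: the quartile method is Python's default (statistical packages differ in how they interpolate), the chi-square test is the uncorrected Pearson test restricted to a 2x2 table, and the function names and counts are hypothetical. The mixed models themselves are not reproduced.

```python
import math
from statistics import median, quantiles


def median_iqr(values):
    """Median and interquartile range (Q1, Q3) of a continuous variable."""
    q1, _, q3 = quantiles(values, n=4)  # default 'exclusive' method
    return median(values), (q1, q3)


def chi_square_2x2(table):
    """Pearson chi-square test of association for a 2x2 table.

    table: ((a, b), (c, d)) of observed counts. No continuity correction.
    With 1 degree of freedom, the p-value equals erfc(sqrt(stat / 2)).
    """
    (a, b), (c, d) = table
    total = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2.0))


# Made-up values and counts for illustration only.
med, (q1, q3) = median_iqr([1, 2, 3, 4, 5, 6, 7])
stat, p_value = chi_square_2x2(((10, 20), (20, 10)))
```

For categorical variables with more than two levels, the same observed-versus-expected sum applies, but the p-value then requires the chi-square distribution with the appropriate degrees of freedom.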

RESULTS

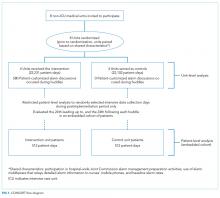

We identified 390,745 hospitalizations that met inclusion criteria (Supplementary Figure 1). The median LOS among our cohort was 41 hours (interquartile range [IQR] 24-71) and the median 30-day readmission rate was 8.2% (IQR 7.2-9.4).

Admission Demographics for Weekends and Weekdays

Among the included hospitalizations, 92,266 (23.6%) admissions occurred on a weekend (Supplementary Table 1). Overall, a higher percentage of children <5 years of age were admitted on a weekend compared with those admitted on a weekday (53.3% vs 49.1%, P < .001). We observed a small but statistically significant difference in the proportion of weekend versus weekday admissions according to gender, race/ethnicity, payer, and median household income quartile. Children with medical complexity and those with technology dependence were admitted less frequently on a weekend. A higher proportion of children were admitted through the emergency department on a weekend and a higher frequency of ICU utilization was observed for children admitted on a weekend compared with those admitted on a weekday.

Association Between Study Variables and Length of Stay

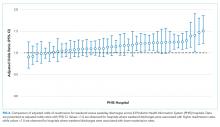

In comparing adjusted LOS for weekend versus weekday admissions across 43 hospitals, not only did LOS vary across hospitals (P < .001), but the association between LOS and weekend versus weekday care also varied across hospitals (P < .001) (Figure 1). Weekend admission was associated with a significantly longer LOS at eight (18.6%) hospitals and a significantly shorter LOS at four (9.3%) hospitals with nonstatistically significant differences at the remaining hospitals.

In adjusted analyses, we observed that infants ≤30 days of age, on average, had an adjusted LOS that was 24% longer than that of 15- to 17-year-olds, while children aged 1-14 years had an adjusted LOS that was 6%-18% shorter (Table 1). ICU utilization, admission through the emergency department, and number of chronic conditions had the greatest association with LOS. As the number of chronic conditions increased, the LOS increased. No association was found between weekend versus weekday admission and LOS (adjusted LOS [95% CI]: weekend 63.70 [61.01-66.52] hours versus weekday 63.40 [60.73-66.19] hours, P = .112).
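Because LOS was modeled on a log scale, differences are naturally read as ratios. The sketch below shows the arithmetic for converting between a log-scale coefficient and a percent difference, and for expressing the reported adjusted weekend and weekday LOS as a percent difference. The function names are hypothetical, and the result is limited by the rounding of the published estimates.

```python
import math


def log_coefficient_to_percent(beta):
    """Percent difference implied by a coefficient on the log(LOS) scale."""
    return (math.exp(beta) - 1.0) * 100.0


def percent_difference(value, reference):
    """Percent by which value exceeds reference."""
    return (value / reference - 1.0) * 100.0


# A 24% longer LOS corresponds to a log-scale coefficient of ln(1.24).
beta_infants = math.log(1.24)

# Adjusted LOS reported in the text: weekend 63.70 hours, weekday 63.40 hours.
weekend_vs_weekday = percent_difference(63.70, 63.40)
```

The weekend versus weekday difference works out to less than half of one percent, which is consistent with the reported absence of an association.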

Discharge Demographics for Weekends and Weekdays

Of the included hospitalizations, 127,421 (32.6%) discharges occurred on a weekend (Supplementary Table 2). Overall, children <5 years of age made up a greater percentage of weekend discharges than of weekday discharges (51.5% vs 49.5%, P < .001). No statistically significant differences were found in gender, payer, or median household income quartile between children discharged on a weekend and those discharged on a weekday. We found small, statistically significant differences in the proportion of weekend versus weekday discharges according to race/ethnicity, with fewer non-Hispanic white children being discharged on the weekend versus weekday. Children with medical complexity, technology dependence, or ICU utilization were less frequently discharged on a weekend than on a weekday.

Association Between Study Variables and Readmissions

In comparing the adjusted odds of readmissions for weekend versus weekday discharges across 43 PHIS hospitals, we observed significant variation (P < .001) in readmission rates from hospital to hospital (Figure 2). However, the direction of impact of weekend care on readmissions was similar (P = .314) across hospitals (ie, for 37 of 43 hospitals, the readmission rate was greater for weekend discharges compared with that for weekday discharges). For 17 (39.5%) of 43 hospitals, weekend discharge was associated with a significantly higher readmission rate, while the differences between weekday and weekend discharge were not statistically significant for the remaining hospitals.

In adjusted analyses, we observed that infants <1 year were more likely to be readmitted compared with 15- to 17-year-olds, while children 5-14 years of age were less likely to be readmitted (Table 2). Medical complexity and the number of chronic conditions had the greatest association with readmissions, with increased likelihood of readmission observed as the number of chronic conditions increased. Weekend discharge was associated with increased probability of readmission compared with weekday discharge (adjusted probability of readmission [95% CI]: weekend 0.13 [0.12-0.13] vs weekday 0.11 [0.11-0.12], P < .001).
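The adjusted probabilities above can be re-expressed as an absolute difference, a relative risk, and an odds ratio. This is a back-of-the-envelope sketch using the rounded probabilities from the text (0.13 and 0.11), so the results are approximate and are not the model's own estimates; the function name is hypothetical.

```python
def odds(probability):
    """Convert a probability to odds."""
    return probability / (1.0 - probability)


# Adjusted probabilities of readmission reported in the text (rounded).
p_weekend = 0.13
p_weekday = 0.11

risk_difference = p_weekend - p_weekday       # absolute difference
relative_risk = p_weekend / p_weekday         # ratio of probabilities
odds_ratio = odds(p_weekend) / odds(p_weekday)
```

With these rounded inputs, the absolute difference is about 2 percentage points and the odds ratio is roughly 1.2, in line with the description of the weekend discharge effect as modest.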

DISCUSSION

While the reasons for the weekend effect are unclear, data supporting this difference have been observed across many diverse patient groups and health systems both nationally and internationally.13-27,31 Weekend care is thought to differ from weekday care because of differences in physician and nurse staffing, availability of ancillary services, access to diagnostic testing and therapeutic interventions, ability to arrange outpatient follow-up, and individual patient clinical factors, including acuity of illness. Few studies have assessed the effect of weekend discharges on patient or system outcomes. Among children within a single health system, readmission risk was associated with weekend admission but not with weekend discharge.22 This observation suggests that if differential care exists, then it occurs during initial clinical management rather than during discharge planning. Consequently, understanding the interaction of weekend admission and LOS is important. In addition, the relative paucity of pediatric data examining a weekend discharge effect limits the ability to generalize these findings across other hospitals or health systems.

In contrast to prior work, we observed a modest increased risk for readmission among those discharged on the weekend in a large sample of children. Auger and Davis reported a lack of association between weekend discharge and readmissions at one tertiary care children’s hospital, citing reduced discharge volumes on the weekend, especially among children with medical complexity, as a possible driver for their observation.22 The inclusion of a much larger population across 43 hospitals in our study may explain our different findings compared with previous research. In addition, the inclusion/exclusion criteria differed between the two studies; we excluded index admissions for planned procedures in this study (which are more likely to occur during the week), which may have contributed to the differing conclusions. Although Auger and Davis suggest that differences in initial clinical management may be responsible for the weekend effect,22 our observations suggest that discharge planning practices may also contribute to readmission risk. For example, a family’s inability to access compounded medications at a local pharmacy or to access primary care following discharge could reasonably contribute to treatment failure and increased readmission risk. Attention to improving and standardizing discharge practices may alleviate differences in readmission risk among children discharged on a weekend.

Individual patient characteristics greatly influence LOS and readmission risk. Congruent with prior studies, medical complexity and technology dependence were among the factors in our study that had the strongest association with LOS and readmission risk.32 As with prior studies,22 we observed that children with medical complexity and technology dependence were less frequently admitted and discharged on a weekend than on a weekday, which suggests that physicians may avoid complicated discharges on the weekend. Children with medical complexity present a unique challenge to physicians when assessing discharge readiness, given that these children frequently require careful coordination of durable medical equipment, procurement of special medication preparations, and possibly the resumption or establishment of home health services. Notably, we cannot discern from our data what proportion of discharges may be delayed over the weekend secondary to challenges involved in coordinating care for children with medical complexity. Future investigations aimed at assessing physician decision making and discharge readiness in relation to discharge timing among children with medical complexity may establish this relationship more clearly.

We observed substantial variation in LOS and readmission risk across 43 tertiary care children’s hospitals. Since the 1970s, numerous studies have reported worse outcomes among patients admitted on the weekend. While the majority of studies support the weekend effect, several recent studies suggest that patients admitted on the weekend are at no greater risk of adverse outcomes than those admitted during the week.35-37 Our work builds on the existing literature, demonstrating a complex and variable relationship between weekend admission/discharge, LOS, and readmission risk across hospitals. Notably, while many hospitals in our study experienced a significant weekend effect in LOS or readmission risk, only four hospitals experienced a statistically significant weekend effect for both LOS and readmission risk (three hospitals experienced increased risk for both, while one hospital experienced increased readmission risk but decreased LOS). Future investigations of the weekend effect should focus on exploring the differences in admission/discharge practices and staffing patterns of hospitals that did or did not experience a weekend effect.

This study has several limitations

CONCLUSION

In a study of 43 children’s hospitals, children discharged on the weekend had a slightly increased readmission risk compared with children discharged on a weekday. Wide variation in the weekend effect on LOS and readmission risk was evident across hospitals. Individual patient characteristics had a greater impact on LOS and readmission risk than the weekend effect. Future investigations aimed at understanding which factors contribute most strongly to a weekend effect within individual hospitals (eg, differences in institutional admission/discharge practices) may help alleviate the weekend effect and improve healthcare quality.

Acknowledgments

This manuscript resulted from “Paper in a Day,” a Pediatric Research in Inpatient Settings (PRIS) Network-sponsored workshop presented at the Pediatric Hospital Medicine 2017 annual meeting. Workshop participants learned how to ask and answer a health services research question and efficiently prepare a manuscript for publication. The following are the members of the PRIS Network who contributed to this work: Jessica L. Bettenhausen, MD; Rebecca M. Cantu, MD, MPH; Jillian M. Cotter, MD; Megan Deisz, MD; Teresa Frazer, MD; Pratichi Goenka, MD; Ashley Jenkins, MD; Kathryn E. Kyler, MD; Janet T. Lau, MD; Brian E. Lee, MD; Christiane Lenzen, MD; Trisha Marshall, MD; John M. Morrison, MD, PhD; Lauren Nassetta, MD; Raymond Parlar-Chun, MD; Sonya Tang Girdwood, MD, PhD; Tony R. Tarchichi, MD; Irina G. Trifonova, MD; Jacqueline M. Walker, MD, MHPE; and Susan C. Walley, MD. See appendix for contact information for members of the PRIS Network.

Funding

The authors have no financial relationships relevant to this article to disclose.

Disclosures

The authors have no conflicts of interest to disclose.

1. Crossing the Quality Chasm: The IOM Health Care Quality Initiative : Health and Medicine Division. http://www.nationalacademies.org/hmd/Global/News%20Announcements/Crossing-the-Quality-Chasm-The-IOM-Health-Care-Quality-Initiative.aspx. Accessed November 20, 2017.