Incidence, predictors, and outcomes of hospital-acquired anemia

Hospital-acquired anemia (HAA) is defined as having a normal hemoglobin value upon admission but developing anemia during the course of hospitalization. The condition is common, with an incidence ranging from approximately 25% when defined by using the hemoglobin value prior to discharge to 74% when using the nadir hemoglobin value during hospitalization.1-5 While there are many potential etiologies for HAA, given that iatrogenic blood loss from phlebotomy may lead to its development,6,7 HAA has been postulated to be a hazard of hospitalization that is potentially preventable.8 However, it is unclear whether the development of HAA portends worse outcomes after hospital discharge.

The limited number of studies on the association between HAA and postdischarge outcomes has been restricted to patients hospitalized for acute myocardial infarction (AMI).3,9,10 Among this subpopulation, HAA is independently associated with greater morbidity and mortality following hospital discharge.3,9,10 In a more broadly representative population of hospitalized adults, Koch et al.2 found that the development of HAA is associated with greater length of stay (LOS), hospital charges, and inpatient mortality. However, given that HAA was defined by the lowest hemoglobin level during hospitalization (and not necessarily the last value prior to discharge), it is unclear if the worse outcomes observed were the cause of the HAA, rather than its effect, since hospital LOS is a robust predictor for the development of HAA, as well as a major driver of hospital costs and a prognostic marker for inpatient mortality.3,9 Furthermore, this study evaluated outcomes only during the index hospitalization, so it is unclear if patients who develop HAA have worse clinical outcomes after discharge.

Therefore, in this study, we used clinically granular electronic health record (EHR) data from a diverse cohort of consecutive medicine inpatients hospitalized for any reason at 1 of 6 hospitals to: 1) describe the epidemiology of HAA; 2) identify predictors of its development; and 3) examine its association with 30-day postdischarge adverse outcomes. We hypothesized that the development of HAA would be independently associated with 30-day readmission and mortality in a dose-dependent fashion, with increasing severity of HAA associated with worse outcomes.

METHODS

Study Design, Population, and Data Sources

We conducted a retrospective observational cohort study using EHR data collected from November 1, 2009 to October 30, 2010 from 6 hospitals in the north Texas region. One site was a university-affiliated safety-net hospital; the remaining 5 community hospitals were a mix of teaching and nonteaching sites. All hospitals used the Epic EHR system (Epic Systems Corporation, Verona, Wisconsin). Details of this cohort have been published.11,12

This study included consecutive hospitalizations among adults age 18 years or older who were discharged from a medicine inpatient service with any diagnosis. We excluded hospitalizations by individuals who were anemic within the first 24 hours of admission (hematocrit less than 36% for women and less than 40% for men), were missing a hematocrit value within the first 24 hours of hospitalization or a repeat hematocrit value prior to discharge, had a hospitalization in the preceding 30 days (ie, index hospitalization was considered a readmission), died in the hospital, were transferred to another hospital, or left against medical advice. For individuals with multiple eligible hospitalizations during the study period, we included only the first hospitalization. We also excluded those discharged to hospice, given that this population of individuals may have intentionally desired less aggressive care.

Definition of Hospital-Acquired Anemia

HAA was defined as having a normal hematocrit value (36% or greater for women and 40% or greater for men) within the first 24 hours of admission and a hematocrit value at the time of hospital discharge lower than the World Health Organization’s sex-specific cut points.13 If there was more than 1 hematocrit value on the same day, we chose the lowest value. Based on prior studies, HAA was further categorized by severity as mild (hematocrit greater than 33% and less than 36% in women; and greater than 33% and less than 40% in men), moderate (hematocrit greater than 27% and 33% or less for all), or severe (hematocrit 27% or less for all).2,14
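The severity categories above can be expressed as a short classification rule. The following Python sketch is illustrative only and is not the authors' code: the function name `classify_haa`, the `"female"`/`"male"` labels, and the return values are hypothetical, and the thresholds are taken directly from the definition in the text.

```python
from typing import Optional, Sequence

# Sex-specific lower limits of a normal hematocrit (%), as stated in the text.
NORMAL_HCT_MIN = {"female": 36.0, "male": 40.0}


def classify_haa(sex: str, admission_hct: float,
                 discharge_day_hcts: Sequence[float]) -> Optional[str]:
    """Classify hospital-acquired anemia (HAA) from hematocrit values (%).

    Returns None when the patient was already anemic on admission
    (such hospitalizations were excluded from the cohort); otherwise
    returns "none", "mild", "moderate", or "severe".
    """
    cutoff = NORMAL_HCT_MIN[sex]
    if admission_hct < cutoff:
        return None  # anemic on admission: not eligible for HAA

    # When several values exist on the same day, the lowest one is used.
    discharge_hct = min(discharge_day_hcts)

    if discharge_hct >= cutoff:
        return "none"      # still at or above the sex-specific cut point
    if discharge_hct > 33.0:
        return "mild"      # >33% and below the sex-specific cut point
    if discharge_hct > 27.0:
        return "moderate"  # >27% and <=33%
    return "severe"        # <=27%
```

For example, a man admitted with a hematocrit of 42% and discharged at 39% would be classified as mild HAA under this rule, whereas a woman with the same values would have no HAA.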

Characteristics

We extracted information on sociodemographic characteristics, comorbidities, LOS, procedures, blood transfusions, and laboratory values from the EHR. Hospitalizations in the 12 months preceding the index hospitalization were ascertained from the EHR and from an all-payer regional hospitalization database that captures hospitalizations from 75 acute care hospitals within a 100-mile radius of Dallas-Fort Worth. International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) discharge diagnosis codes were categorized according to the Agency for Healthcare Research and Quality (AHRQ) Clinical Classifications Software (CCS).15 We defined a diagnosis for hemorrhage, and a diagnosis for a coagulation or hemorrhagic disorder, as the presence of any ICD-9-CM code (primary or secondary) that mapped to the AHRQ CCS diagnoses 60 and 153, and 62, respectively. Procedures were categorized as minor diagnostic, minor therapeutic, major diagnostic, and major therapeutic using the AHRQ Healthcare Cost and Utilization Procedure Classes tool.16

Outcomes

The primary outcome was a composite of death or readmission within 30 days of hospital discharge. Hospital readmissions were ascertained at the index hospital and at any of 75 acute care hospitals in the region as described earlier. Death was ascertained from each of the hospitals’ EHR and administrative data and the Social Security Death Index. Individuals who had both outcomes (eg, a 30-day readmission and death) were considered to have only 1 occurrence of the composite primary outcome measure. Our secondary outcomes were death and readmission within 30 days of discharge, considered as separate outcomes.
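The composite outcome logic described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's actual implementation: the function name `outcomes_30day` and its arguments are hypothetical, and treating day 30 as inside the window is an assumption, because the text does not specify how the boundary was handled.

```python
from datetime import date, timedelta
from typing import Dict, Optional


def outcomes_30day(discharge: date,
                   first_readmission: Optional[date] = None,
                   death: Optional[date] = None) -> Dict[str, bool]:
    """Flag 30-day readmission, 30-day death, and the composite outcome.

    A patient with both events counts once toward the composite.
    Assumption: events on day 30 after discharge are counted.
    """
    window_end = discharge + timedelta(days=30)

    def in_window(event: Optional[date]) -> bool:
        return event is not None and discharge <= event <= window_end

    readmitted = in_window(first_readmission)
    died = in_window(death)
    return {
        "readmission": readmitted,          # secondary outcome
        "death": died,                      # secondary outcome
        "composite": readmitted or died,    # primary outcome, counted once
    }
```

A patient readmitted on day 10 who dies on day 20 is flagged for both secondary outcomes but contributes a single occurrence to the composite.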

Statistical Analysis

We used logistic regression models to evaluate predictors of HAA and to estimate the association of HAA with subsequent 30-day adverse outcomes after hospital discharge. All models accounted for clustering of patients by hospital. For the outcomes analyses, models were adjusted for potential confounders based on prior literature and our group’s expertise, which included age, sex, race/ethnicity, Charlson comorbidity index, prior hospitalizations, nonelective admission status, creatinine level on admission, blood urea nitrogen (BUN) to creatinine ratio of more than 20:1 on admission, LOS, receipt of a major diagnostic or therapeutic procedure during the index hospitalization, a discharge diagnosis for hemorrhage, and a discharge diagnosis for a coagulation or hemorrhagic disorder. For the mortality analyses, given the limited number of 30-day deaths after hospital discharge in our cohort, we collapsed moderate and severe HAA into a single category. In sensitivity analyses, we repeated the adjusted model, but excluded patients in our cohort who had received at least 1 blood transfusion during the index hospitalization (2.6%) given its potential for harm, and patients with a primary discharge diagnosis for AMI (3.1%).17

The functional forms of continuous variables were assessed using restricted cubic splines and locally weighted scatterplot smoothing techniques. All analyses were performed using STATA statistical software version 12.0 (StataCorp, College Station, Texas). The University of Texas Southwestern Medical Center institutional review board approved this study.

RESULTS

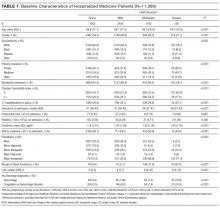

Of 53,995 consecutive medicine hospitalizations among adults age 18 years or older during our study period, 11,309 index hospitalizations were included in our study cohort (Supplemental Figure 1). The majority of exclusions were due to documented anemia within the first 24 hours of admission (n=24,950). With increasing severity of HAA, patients were older; were more likely to be female, non-Hispanic white, and electively admitted; had fewer comorbidities; were less likely to have been hospitalized in the past year; were more likely to have had a major procedure, to have received a blood transfusion, and to have a primary or secondary discharge diagnosis for a hemorrhage or a coagulation or hemorrhagic disorder; and had a longer LOS (Table 1).

Epidemiology of HAA

Among this cohort of patients without anemia on admission, the median hematocrit value on admission was 40.6% and on discharge was 38.9%. One-third of patients with a normal hematocrit value at admission developed HAA, with 21.6% developing mild HAA, 10.1% developing moderate HAA, and 1.4% developing severe HAA. The median discharge hematocrit value was 36% (interquartile range [IQR], 35%-38%) for the group of patients who developed mild HAA, 31% (IQR, 30%-32%) for moderate HAA, and 26% (IQR, 25%-27%) for severe HAA (Supplemental Figure 2). Among the severe HAA group, 135 of the 159 patients (85%) had a major procedure (n=123, accounting for 219 unique major procedures), a diagnosis for hemorrhage (n=30), and/or a diagnosis for a coagulation or hemorrhagic disorder (n=23) during the index hospitalization. Of the 219 major procedures among patients with severe HAA, most were musculoskeletal (92 procedures), cardiovascular (61 procedures), or digestive system-related (41 procedures). The most common types of procedures were coronary artery bypass graft (36 procedures), hip replacement (25 procedures), knee replacement (17 procedures), and femur fracture reduction (15 procedures). The 10 most common principal discharge diagnoses of the index hospitalization by HAA group are shown in Supplemental Table 1. For the severe HAA group, the most common diagnosis was hip fracture (20.8%).

Predictors of HAA

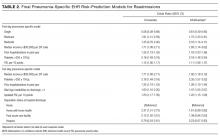

Compared to no or mild HAA, female sex, elective admission status, serum creatinine on admission, BUN to creatinine ratio greater than 20 to 1, hospital LOS, and undergoing a major diagnostic or therapeutic procedure were predictors for the development of moderate or severe HAA (Table 2). The model explained 23% of the variance (McFadden’s pseudo-R²).

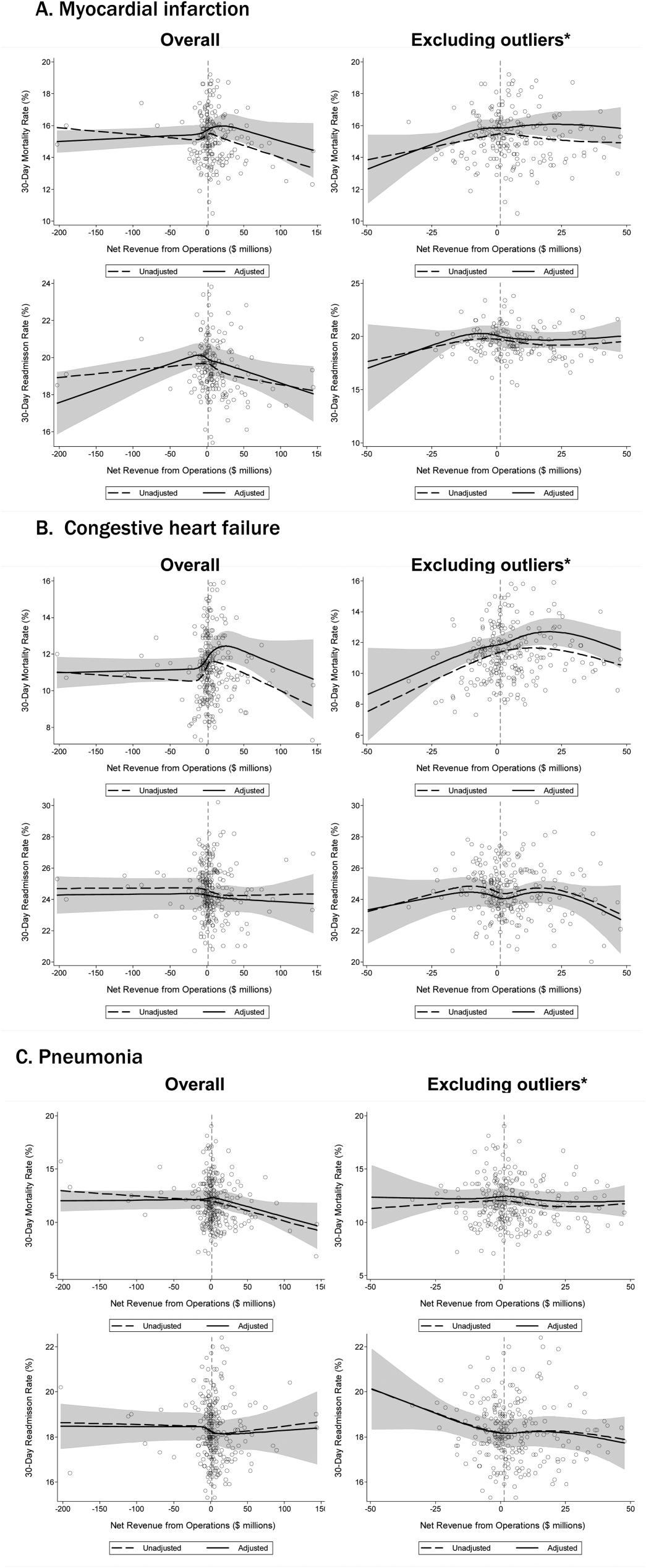

Incidence of Postdischarge Outcomes by Severity of HAA

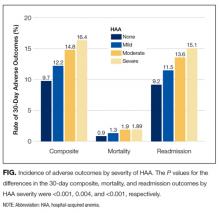

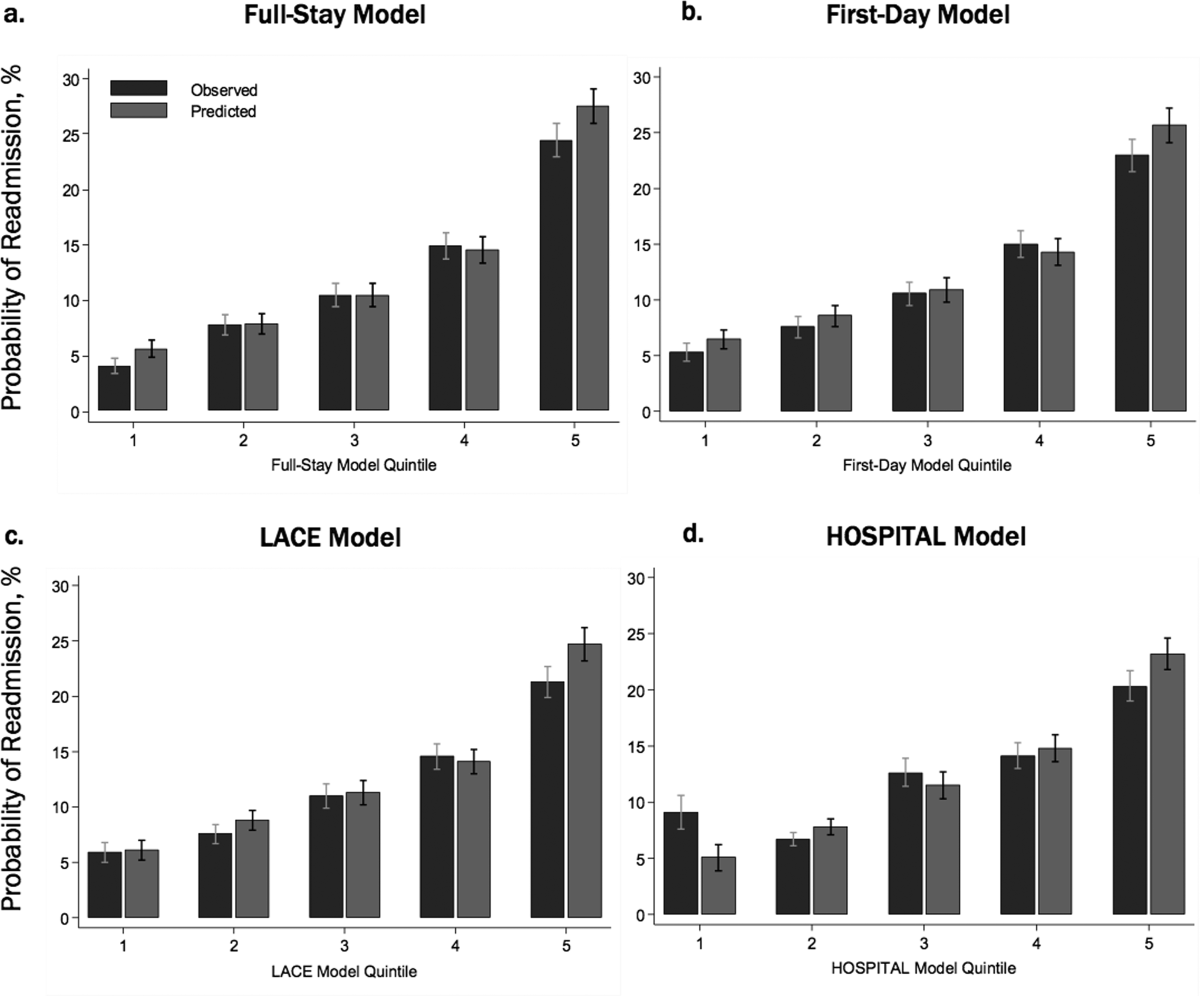

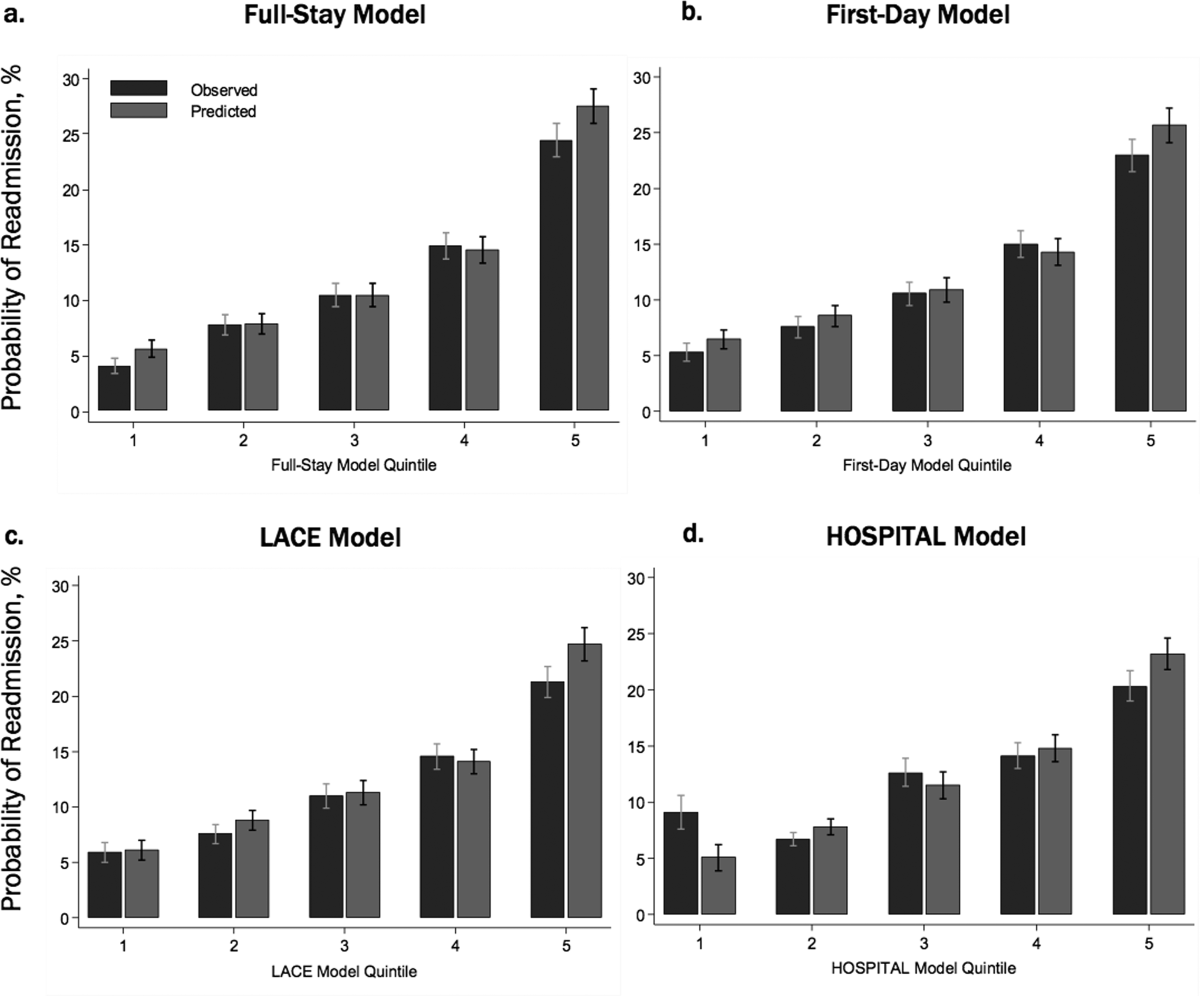

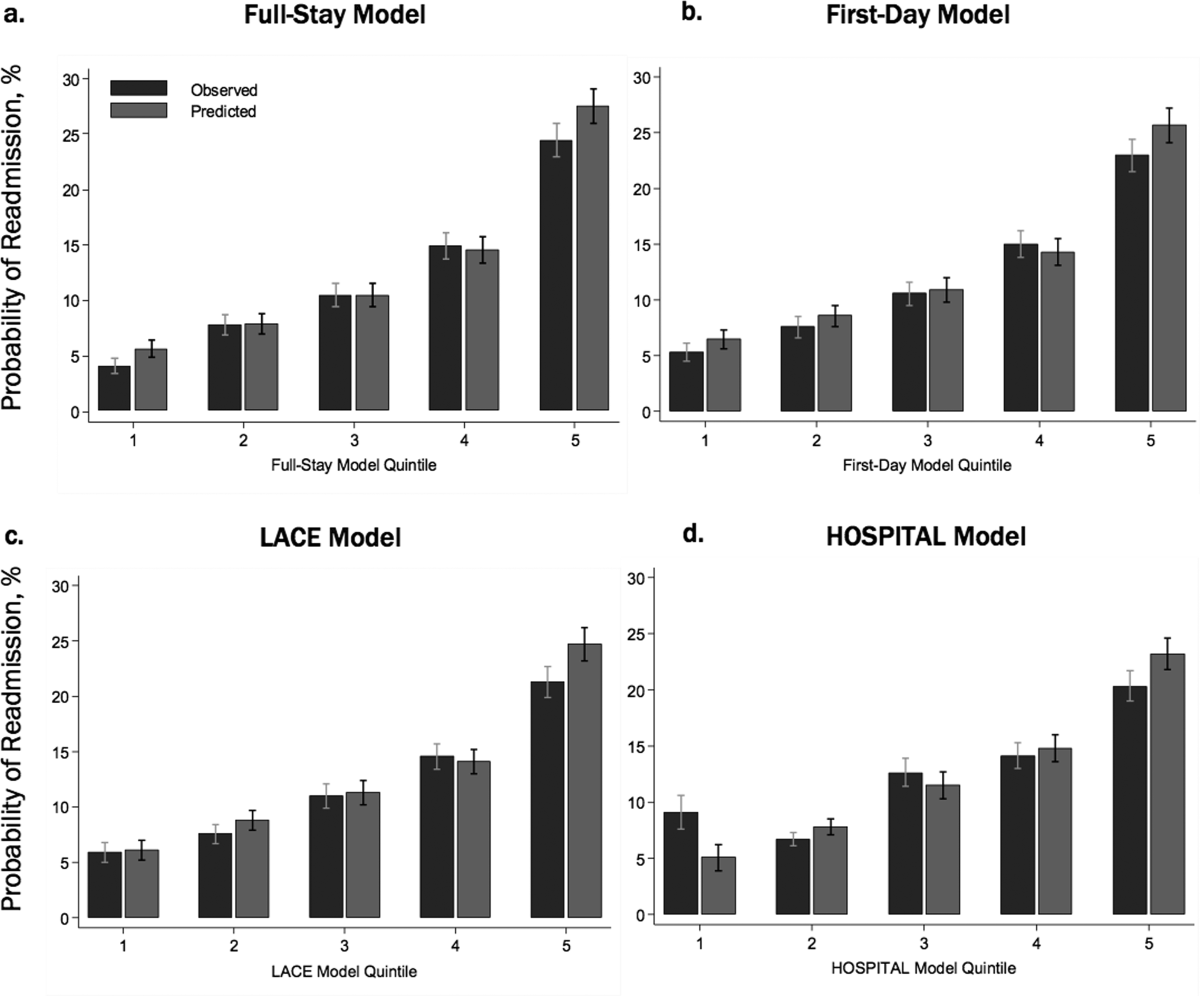

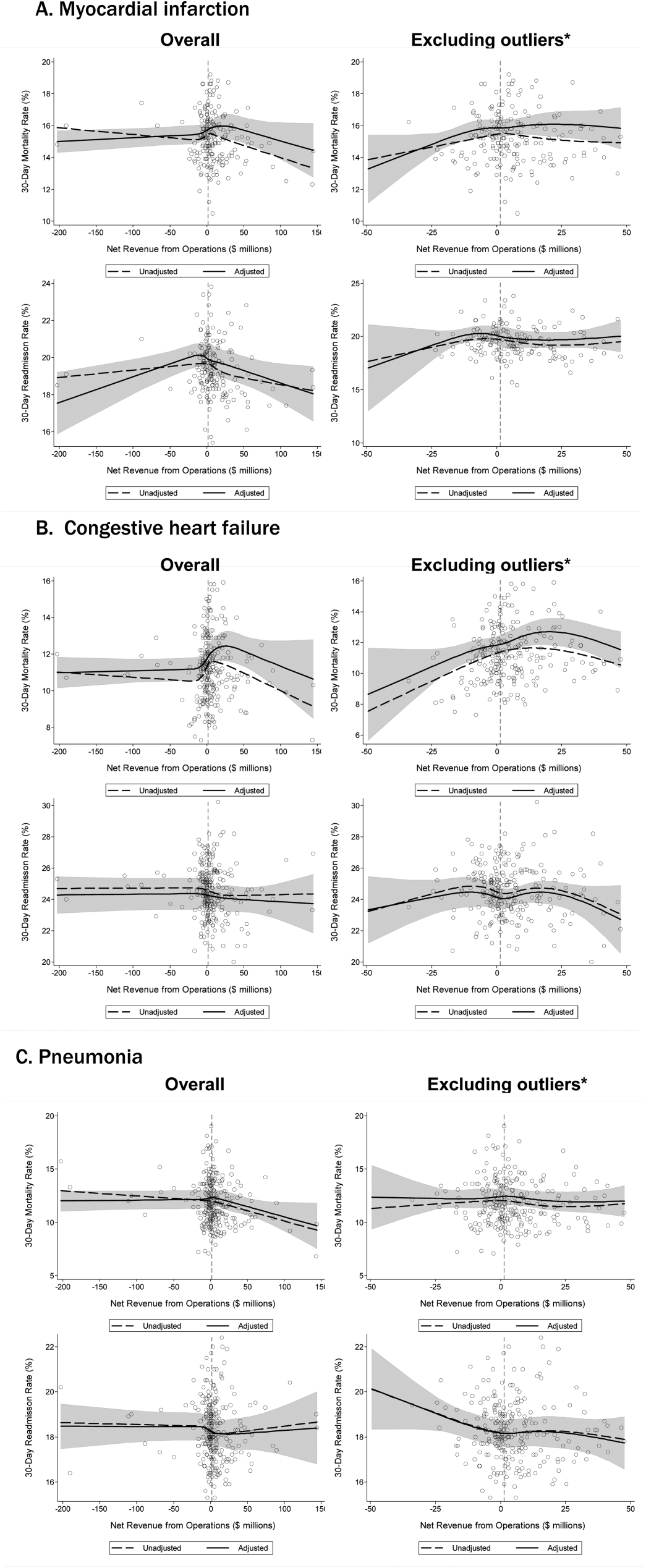

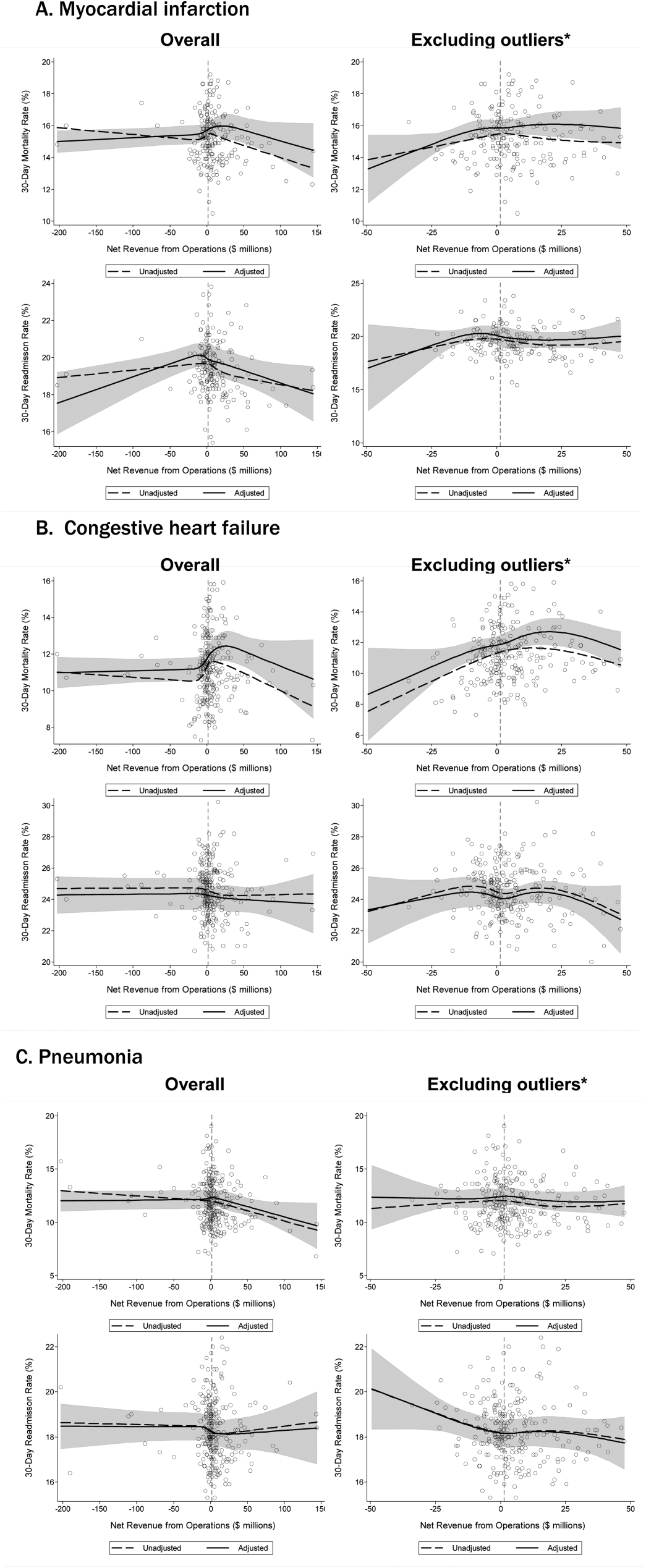

The severity of HAA was associated with a dose-dependent increase in the incidence of 30-day adverse outcomes, such that patients with increasing severity of HAA had greater 30-day composite, mortality, and readmission outcomes (P < 0.001; Figure). The 30-day postdischarge composite outcome was primarily driven by hospital readmissions given the low mortality rate in our cohort. Patients who did not develop HAA had an incidence of 9.7% for the composite outcome, whereas patients with severe HAA had an incidence of 16.4%. Among the 24 patients with severe HAA but who had not undergone a major procedure or had a discharge diagnosis for hemorrhage or for a coagulation or hemorrhagic disorder, only 3 (12.5%) had a composite postdischarge adverse outcome (2 readmissions and 1 death). The median time to readmission was similar between groups, but more patients with severe HAA had an early readmission within 7 days of hospital discharge than patients who did not develop HAA (6.9% vs. 2.9%, P = 0.001; Supplemental Table 2).

Association of HAA and Postdischarge Outcomes

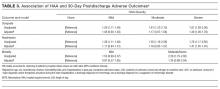

In unadjusted analyses, compared to not developing HAA, mild, moderate, and severe HAA were associated with a 29%, 61%, and 81% increase in the odds for a composite outcome, respectively (Table 3). After adjustment for confounders, the effect size for HAA attenuated and was no longer statistically significant for mild and moderate HAA. However, severe HAA was significantly associated with a 39% increase in the odds for the composite outcome and a 41% increase in the odds for 30-day readmission (P = 0.008 and P = 0.02, respectively).
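As a rough check on the unadjusted estimate for severe HAA, an odds ratio can be recomputed from the composite outcome incidences reported in the text (9.7% without HAA and 16.4% with severe HAA). The sketch below is illustrative arithmetic only; the helper names are hypothetical, and because the inputs are rounded percentages the result is expected to approximate, not exactly reproduce, the reported 81% increase in odds.

```python
def odds(probability: float) -> float:
    """Convert a probability (0-1) into odds."""
    return probability / (1.0 - probability)


def odds_ratio(p_exposed: float, p_reference: float) -> float:
    """Unadjusted odds ratio comparing an exposed group with a reference group."""
    return odds(p_exposed) / odds(p_reference)


# Composite outcome incidence: severe HAA (16.4%) vs. no HAA (9.7%).
or_severe = odds_ratio(0.164, 0.097)
print(f"Unadjusted odds ratio, severe HAA vs. no HAA: {or_severe:.2f}")
```

With these rounded inputs the ratio is about 1.83, close to the 81% increase reported in Table 3; the small gap is plausibly due to rounding of the published incidences.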

In sensitivity analyses, the exclusion of individuals who received at least 1 blood transfusion during the index hospitalization (n=298) and individuals who had a primary discharge diagnosis for AMI (n=353) did not substantively change the estimates of the association between severe HAA and postdischarge outcomes (Supplemental Tables 3 and 4). However, because of the smaller number of adverse events in each analysis, the confidence intervals were wider, and the associations of severe HAA with the composite outcome and with readmission were no longer statistically significant in these subcohorts.

DISCUSSION

In this large and diverse sample of medical inpatients, we found that HAA occurs in one-third of adults with a normal hematocrit value at admission, with 10.1% of the cohort developing moderate HAA and 1.4% developing severe HAA by the time of discharge. Length of stay and undergoing a major diagnostic or therapeutic procedure were the 2 strongest potentially modifiable predictors of developing moderate or severe HAA. Severe HAA was independently associated with a 39% increase in the odds of being readmitted or dying within 30 days after hospital discharge compared to not developing HAA. However, the associations of mild and moderate HAA with adverse outcomes were attenuated after adjusting for confounders and were no longer statistically significant.

To our knowledge, this is the first study on the postdischarge adverse outcomes of HAA among a diverse cohort of medical inpatients hospitalized for any reason. In a more restricted population, Salisbury et al.3 found that patients hospitalized for AMI who developed moderate to severe HAA (hemoglobin value at discharge of 11 g/dL or less) had greater 1-year mortality than those without HAA (8.4% vs. 2.6%, P < 0.001), and had an 82% increase in the hazard for mortality (95% confidence interval for the hazard ratio, 1.11-2.98). Others have similarly shown that HAA is common among patients hospitalized with AMI and is associated with greater mortality.5,9,18 Our study extends this prior research by showing that severe HAA is associated with a greater risk of adverse outcomes among adult inpatients hospitalized for any reason, not only those hospitalized for AMI or those receiving blood transfusions.

Despite the increased harm associated with severe HAA, it is unclear whether HAA is a preventable hazard of hospitalization, as suggested by others.6,8 Most patients in our cohort who developed severe HAA underwent a major procedure, had a discharge diagnosis for hemorrhage, and/or had a discharge diagnosis for a coagulation or hemorrhagic disorder. Thus, blood loss due to phlebotomy, 1 of the more modifiable etiologies of HAA, was unlikely to have been the primary driver for most patients who developed severe HAA. Since it has been estimated to take 15 days of daily phlebotomy of 53 mL of whole blood in females of average body weight (and 20 days for average weight males) with no bone marrow synthesis for severe anemia to develop, it is even less likely that phlebotomy was the principal etiology given an 8-day median LOS among patients with severe HAA.19,20 However, since the etiology of HAA can be multifactorial, limiting blood loss due to phlebotomy by using smaller volume tubes, blood conservation devices, or reducing unnecessary testing may mitigate the development of severe HAA.21,22 Additionally, since more than three-quarters of patients who developed severe HAA underwent a major procedure, more care and attention to minimizing operative blood loss could lessen the severity of HAA and facilitate better recovery. If minimizing blood loss is not feasible, in the absence of symptoms related to anemia or ongoing blood loss, randomized controlled trials overwhelmingly support a restrictive transfusion strategy using a hemoglobin value threshold of 7 g/dL, even in the postoperative setting.23-25

The implications of mild to moderate HAA are less clear. The odds ratios for mild and moderate HAA, while not statistically significant, suggest a small increase in harm compared to not developing HAA. Furthermore, the upper boundary of the confidence intervals for mild and moderate HAA cannot exclude a possible 30% and 56% increase in the odds for the 30-day composite outcome, respectively. Thus, a better powered study, including more patients and extending the time interval for ascertaining postdischarge adverse events beyond 30 days, may reveal a harmful association. Lastly, our study assessed only the association of HAA with 30-day readmission and mortality. Examining the association between HAA and other patient-centered outcomes such as fatigue, functional impairment, and prolonged posthospitalization recovery time may uncover other important adverse effects of mild and moderate HAA, both of which occur far more frequently than severe HAA.

Our findings should be interpreted in the context of several limitations. First, although we included a diverse group of patients from a multihospital cohort, generalizability to other settings is uncertain. Second, as this was a retrospective study using EHR data, we had limited information to infer the precise mechanism of HAA for each patient. However, procedure codes and discharge diagnoses enabled us to assess which patients underwent a major procedure or had a hemorrhage or hemorrhagic disorder during the hospitalization. Third, given the relatively small number of patients with severe HAA in our cohort, we were unable to assess whether the association of severe HAA with adverse outcomes differed by suspected etiology. Lastly, because we were unable to ascertain the timing of the hematocrit values within the first 24 hours of admission, we excluded both patients with preexisting anemia on admission and those who developed HAA within the first 24 hours of admission, which is not uncommon.26 Thus, we were unable to assess the effect of acute on chronic anemia arising during hospitalization and HAA that develops within the first 24 hours, both of which may also be harmful.18,27,28

In conclusion, severe HAA occurs in 1.4% of all medical hospitalizations and is associated with increased odds of death or readmission within 30 days. Since most patients with severe HAA had undergone a major procedure or had a discharge diagnosis of hemorrhage or a coagulation or hemorrhagic disorder, it is unclear if severe HAA is potentially preventable through preventing blood loss from phlebotomy or by reducing iatrogenic injury during procedures. Future research should assess the potential preventability of severe HAA, and examine other patient-centered outcomes potentially related to anemia, including fatigue, functional impairment, and trajectory of posthospital recovery.

Acknowledgments

The authors would like to acknowledge Ruben Amarasingham, MD, MBA, President and Chief Executive Officer of the Parkland Center for Clinical Innovation, and Ferdinand Velasco, MD, Chief Health Information Officer at Texas Health Resources, for their assistance in assembling the 6 hospital cohort used in this study. The authors would also like to thank Valy Fontil, MD, MAS, Assistant Professor of Medicine at the University of California San Francisco School of Medicine, and Elizabeth Rogers, MD, MAS, Assistant Professor of Internal Medicine and Pediatrics at the University of Minnesota Medical School, for their constructive feedback on an earlier version of this manuscript.

Disclosures

This work was supported by the Agency for Healthcare Research and Quality-funded UT Southwestern Center for Patient-Centered Outcomes Research (R24 HS022418-01); the Commonwealth Foundation (#20100323); the UT Southwestern KL2 Scholars Program supported by the National Institutes of Health (KL2 TR001103); the National Center for Advancing Translational Sciences at the National Institutes of Health (U54 RFA-TR-12-006); and the National Institute on Aging (K23AG052603). The study sponsors had no role in design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript. The authors have no financial conflicts of interest to disclose.

1. Kurniali PC, Curry S, Brennan KW, et al. A retrospective study investigating the incidence and predisposing factors of hospital-acquired anemia. Anemia. 2014;2014:634582. PubMed

2. Koch CG, Li L, Sun Z, et al. Hospital-acquired anemia: prevalence, outcomes, and healthcare implications. J Hosp Med. 2013;8(9):506-512. PubMed

3. Salisbury AC, Alexander KP, Reid KJ, et al. Incidence, correlates, and outcomes of acute, hospital-acquired anemia in patients with acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2010;3(4):337-346. PubMed

4. Salisbury AC, Amin AP, Reid KJ, et al. Hospital-acquired anemia and in-hospital mortality in patients with acute myocardial infarction. Am Heart J. 2011;162(2):300-309 e303. PubMed

5. Meroño O, Cladellas M, Recasens L, et al. In-hospital acquired anemia in acute coronary syndrome. Predictors, in-hospital prognosis and one-year mortality. Rev Esp Cardiol (Engl Ed). 2012;65(8):742-748. PubMed

6. Salisbury AC, Reid KJ, Alexander KP, et al. Diagnostic blood loss from phlebotomy and hospital-acquired anemia during acute myocardial infarction. Arch Intern Med. 2011;171(18):1646-1653. PubMed

7. Thavendiranathan P, Bagai A, Ebidia A, Detsky AS, Choudhry NK. Do blood tests cause anemia in hospitalized patients? The effect of diagnostic phlebotomy on hemoglobin and hematocrit levels. J Gen Intern Med. 2005;20(6):520-524. PubMed

8. Rennke S, Fang MC. Hazards of hospitalization: more than just “never events”. Arch Intern Med. 2011;171(18):1653-1654. PubMed

9. Choi JS, Kim YA, Kang YU, et al. Clinical impact of hospital-acquired anemia in association with acute kidney injury and chronic kidney disease in patients with acute myocardial infarction. PLoS One. 2013;8(9):e75583. PubMed

10. Salisbury AC, Kosiborod M, Amin AP, et al. Recovery from hospital-acquired anemia after acute myocardial infarction and effect on outcomes. Am J Cardiol. 2011;108(7):949-954. PubMed

11. Nguyen OK, Makam AN, Clark C, et al. Predicting all-cause readmissions using electronic health record data from the entire hospitalization: Model development and comparison. J Hosp Med. 2016;11(7):473-480. PubMed

12. Amarasingham R, Velasco F, Xie B, et al. Electronic medical record-based multicondition models to predict the risk of 30 day readmission or death among adult medicine patients: validation and comparison to existing models. BMC Med Inform Decis Mak. 2015;15:39. PubMed

13. World Health Organization. Hemoglobin concentrations for the diagnosis of anaemia and assessment of severity. http://www.who.int/vmnis/indicators/haemoglobin.pdf. Accessed March 15, 2016.

14. Martin ND, Scantling D. Hospital-acquired anemia: a contemporary review of etiologies and prevention strategies. J Infus Nurs. 2015;38(5):330-338. PubMed

15. Agency for Healthcare Research and Quality, Rockville, MD. Clinical classification software (CCS) for ICD-9-CM. Healthcare Cost and Utilization Project. 2015. http://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp. Accessed November 18, 2015.

16. Agency for Healthcare Research and Quality, Rockville, MD. Procedure classes 2015. Healthcare Cost and Utilization Project. 2015. https://www.hcup-us.ahrq.gov/toolssoftware/procedure/procedure.jsp. Accessed November 18, 2015.

17. Corwin HL, Gettinger A, Pearl RG, et al. The CRIT Study: Anemia and blood transfusion in the critically ill--current clinical practice in the United States. Crit Care Med. 2004;32(1):39-52. PubMed

18. Aronson D, Suleiman M, Agmon Y, et al. Changes in haemoglobin levels during hospital course and long-term outcome after acute myocardial infarction. Eur Heart J. 2007;28(11):1289-1296. PubMed

19. Lyon AW, Chin AC, Slotsve GA, Lyon ME. Simulation of repetitive diagnostic blood loss and onset of iatrogenic anemia in critical care patients with a mathematical model. Comput Biol Med. 2013;43(2):84-90. PubMed

20. van der Bom JG, Cannegieter SC. Hospital-acquired anemia: the contribution of diagnostic blood loss. J Thromb Haemost. 2015;13(6):1157-1159. PubMed

21. Sanchez-Giron F, Alvarez-Mora F. Reduction of blood loss from laboratory testing in hospitalized adult patients using small-volume (pediatric) tubes. Arch Pathol Lab Med. 2008;132(12):1916-1919. PubMed

22. Smoller BR, Kruskall MS. Phlebotomy for diagnostic laboratory tests in adults. Pattern of use and effect on transfusion requirements. N Engl J Med. 1986;314(19):1233-1235. PubMed

23. Carson JL, Carless PA, Hebert PC. Transfusion thresholds and other strategies for guiding allogeneic red blood cell transfusion. Cochrane Database Syst Rev. 2012(4):CD002042. PubMed

24. Carson JL, Carless PA, Hébert PC. Outcomes using lower vs higher hemoglobin thresholds for red blood cell transfusion. JAMA. 2013;309(1):83-84. PubMed

25. Carson JL, Sieber F, Cook DR, et al. Liberal versus restrictive blood transfusion strategy: 3-year survival and cause of death results from the FOCUS randomised controlled trial. Lancet. 2015;385(9974):1183-1189. PubMed

26. Rajkomar A, McCulloch CE, Fang MC. Low diagnostic utility of rechecking hemoglobins within 24 hours in hospitalized patients. Am J Med. 2016;129(11):1194-1197. PubMed

27. Reade MC, Weissfeld L, Angus DC, Kellum JA, Milbrandt EB. The prevalence of anemia and its association with 90-day mortality in hospitalized community-acquired pneumonia. BMC Pulm Med. 2010;10:15. PubMed

28. Halm EA, Wang JJ, Boockvar K, et al. The effect of perioperative anemia on clinical and functional outcomes in patients with hip fracture. J Orthop Trauma. 2004;18(6):369-374. PubMed

Hospital-acquired anemia (HAA) is defined as having a normal hemoglobin value upon admission but developing anemia during the course of hospitalization. The condition is common, with an incidence ranging from approximately 25% when defined by using the hemoglobin value prior to discharge to 74% when using the nadir hemoglobin value during hospitalization.1-5 While there are many potential etiologies for HAA, given that iatrogenic blood loss from phlebotomy may lead to its development,6,7 HAA has been postulated to be a hazard of hospitalization that is potentially preventable.8 However, it is unclear whether the development of HAA portends worse outcomes after hospital discharge.

The limited number of studies on the association between HAA and postdischarge outcomes has been restricted to patients hospitalized for acute myocardial infarction (AMI).3,9,10 Among this subpopulation, HAA is independently associated with greater morbidity and mortality following hospital discharge.3,9,10 In a more broadly representative population of hospitalized adults, Koch et al.2 found that the development of HAA is associated with greater length of stay (LOS), hospital charges, and inpatient mortality. However, given that HAA was defined by the lowest hemoglobin level during hospitalization (and not necessarily the last value prior to discharge), it is unclear if the worse outcomes observed were the cause of the HAA, rather than its effect, since hospital LOS is a robust predictor for the development of HAA, as well as a major driver of hospital costs and a prognostic marker for inpatient mortality.3,9 Furthermore, this study evaluated outcomes only during the index hospitalization, so it is unclear if patients who develop HAA have worse clinical outcomes after discharge.

Therefore, in this study, we used clinically granular electronic health record (EHR) data from a diverse cohort of consecutive medicine inpatients hospitalized for any reason at 1 of 6 hospitals to: 1) describe the epidemiology of HAA; 2) identify predictors of its development; and 3) examine its association with 30-day postdischarge adverse outcomes. We hypothesized that the development of HAA would be independently associated with 30-day readmission and mortality in a dose-dependent fashion, with increasing severity of HAA associated with worse outcomes.

METHODS

Study Design, Population, and Data Sources

We conducted a retrospective observational cohort study using EHR data collected from November 1, 2009 to October 30, 2010 from 6 hospitals in the north Texas region. One site was a university-affiliated safety-net hospital; the remaining 5 community hospitals were a mix of teaching and nonteaching sites. All hospitals used the Epic EHR system (Epic Systems Corporation, Verona, Wisconsin). Details of this cohort have been published.11,12This study included consecutive hospitalizations among adults age 18 years or older who were discharged from a medicine inpatient service with any diagnosis. We excluded hospitalizations by individuals who were anemic within the first 24 hours of admission (hematocrit less than 36% for women and less than 40% for men), were missing a hematocrit value within the first 24 hours of hospitalization or a repeat hematocrit value prior to discharge, had a hospitalization in the preceding 30 days (ie, index hospitalization was considered a readmission), died in the hospital, were transferred to another hospital, or left against medical advice. For individuals with multiple eligible hospitalizations during the study period, we included only the first hospitalization. We also excluded those discharged to hospice, given that this population of individuals may have intentionally desired less aggressive care.

Definition of Hospital-Acquired Anemia

HAA was defined as having a normal hematocrit value (36% or greater for women and 40% or greater for men) within the first 24 hours of admission and a hematocrit value at the time of hospital discharge lower than the World Health Organization’s sex-specific cut points.13 If there was more than 1 hematocrit value on the same day, we chose the lowest value. Based on prior studies, HAA was further categorized by severity as mild (hematocrit greater than 33% and less than 36% in women; and greater than 33% and less than 40% in men), moderate (hematocrit greater than 27% and 33% or less for all), or severe (hematocrit 27% or less for all).2,14

Characteristics

We extracted information on sociodemographic characteristics, comorbidities, LOS, procedures, blood transfusions, and laboratory values from the EHR. Hospitalizations in the 12 months preceding the index hospitalization were ascertained from the EHR and from an all-payer regional hospitalization database that captures hospitalizations from 75 acute care hospitals within a 100-mile radius of Dallas-Fort Worth. International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) discharge diagnosis codes were categorized according to the Agency for Healthcare Research and Quality (AHRQ) Clinical Classifications Software (CCS).15 We defined a diagnosis for hemorrhage and coagulation, and hemorrhagic disorder as the presence of any ICD-9-CM code (primary or secondary) that mapped to the AHRQ CCS diagnoses 60 and 153, and 62, respectively. Procedures were categorized as minor diagnostic, minor therapeutic, major diagnostic, and major therapeutic using the AHRQ Healthcare Cost and Utilization Procedure Classes tool.16

Outcomes

The primary outcome was a composite of death or readmission within 30 days of hospital discharge. Hospital readmissions were ascertained at the index hospital and at any of 75 acute care hospitals in the region as described earlier. Death was ascertained from each of the hospitals’ EHR and administrative data and the Social Security Death Index. Individuals who had both outcomes (eg, a 30-day readmission and death) were considered to have only 1 occurrence of the composite primary outcome measure. Our secondary outcomes were death and readmission within 30 days of discharge, considered as separate outcomes.

Statistical Analysis

We used logistic regression models to evaluate predictors of HAA and to estimate the association of HAA with subsequent 30-day adverse outcomes after hospital discharge. All models accounted for clustering of patients by hospital. For the outcomes analyses, models were adjusted for potential confounders based on prior literature and our group’s expertise, which included age, sex, race/ethnicity, Charlson comorbidity index, prior hospitalizations, nonelective admission status, creatinine level on admission, blood urea nitrogen (BUN) to creatinine ratio of more than 20:1 on admission, LOS, receipt of a major diagnostic or therapeutic procedure during the index hospitalization, a discharge diagnosis for hemorrhage, and a discharge diagnosis for a coagulation or hemorrhagic disorder. For the mortality analyses, given the limited number of 30-day deaths after hospital discharge in our cohort, we collapsed moderate and severe HAA into a single category. In sensitivity analyses, we repeated the adjusted model after excluding patients who had received at least 1 blood transfusion during the index hospitalization (2.6%), given the potential for harm from transfusion, and patients with a primary discharge diagnosis for AMI (3.1%).17

The functional forms of continuous variables were assessed using restricted cubic splines and locally weighted scatterplot smoothing techniques. All analyses were performed using STATA statistical software version 12.0 (StataCorp, College Station, Texas). The University of Texas Southwestern Medical Center institutional review board approved this study.

RESULTS

Of 53,995 consecutive medicine hospitalizations among adults age 18 years or older during our study period, 11,309 index hospitalizations were included in our study cohort (Supplemental Figure 1). Most exclusions were because of documented anemia within the first 24 hours of admission (n=24,950). With increasing severity of HAA, patients were older; more likely to be female, non-Hispanic white, and electively admitted; had fewer comorbidities; and were less likely to have been hospitalized in the past year. They were also more likely to have had a major procedure, to have received a blood transfusion, to have had a longer LOS, and to have had a primary or secondary discharge diagnosis for a hemorrhage or a coagulation or hemorrhagic disorder (Table 1).

Epidemiology of HAA

Among this cohort of patients without anemia on admission, the median hematocrit value on admission was 40.6% and on discharge was 38.9%. One-third of patients with a normal hematocrit value at admission developed HAA, with 21.6% developing mild HAA, 10.1% developing moderate HAA, and 1.4% developing severe HAA. The median discharge hematocrit value was 36% (interquartile range [IQR], 35%-38%) for the group of patients who developed mild HAA, 31% (IQR, 30%-32%) for moderate HAA, and 26% (IQR, 25%-27%) for severe HAA (Supplemental Figure 2). Among the severe HAA group, 135 of the 159 patients (85%) had a major procedure (n=123, accounting for 219 unique major procedures), a diagnosis for hemorrhage (n=30), and/or a diagnosis for a coagulation or hemorrhagic disorder (n=23) during the index hospitalization. Of the 219 major procedures among patients with severe HAA, most were musculoskeletal (92 procedures), cardiovascular (61 procedures), or digestive system-related (41 procedures). The most common types of procedures were coronary artery bypass graft (36 procedures), hip replacement (25 procedures), knee replacement (17 procedures), and femur fracture reduction (15 procedures). The 10 most common principal discharge diagnoses of the index hospitalization by HAA group are shown in Supplemental Table 1. For the severe HAA group, the most common diagnosis was hip fracture (20.8%).

Predictors of HAA

Compared to no or mild HAA, female sex, elective admission status, serum creatinine on admission, BUN to creatinine ratio greater than 20 to 1, hospital LOS, and undergoing a major diagnostic or therapeutic procedure were predictors for the development of moderate or severe HAA (Table 2). The model explained 23% of the variance (McFadden’s pseudo R2).

Incidence of Postdischarge Outcomes by Severity of HAA

The severity of HAA was associated with a dose-dependent increase in the incidence of 30-day adverse outcomes, such that patients with increasing severity of HAA had greater 30-day composite, mortality, and readmission outcomes (P < 0.001; Figure). The 30-day postdischarge composite outcome was primarily driven by hospital readmissions given the low mortality rate in our cohort. Patients who did not develop HAA had an incidence of 9.7% for the composite outcome, whereas patients with severe HAA had an incidence of 16.4%. Among the 24 patients with severe HAA but who had not undergone a major procedure or had a discharge diagnosis for hemorrhage or for a coagulation or hemorrhagic disorder, only 3 (12.5%) had a composite postdischarge adverse outcome (2 readmissions and 1 death). The median time to readmission was similar between groups, but more patients with severe HAA had an early readmission within 7 days of hospital discharge than patients who did not develop HAA (6.9% vs. 2.9%, P = 0.001; Supplemental Table 2).

Association of HAA and Postdischarge Outcomes

In unadjusted analyses, compared to not developing HAA, mild, moderate, and severe HAA were associated with a 29%, 61%, and 81% increase in the odds for a composite outcome, respectively (Table 3). After adjustment for confounders, the effect size for HAA attenuated and was no longer statistically significant for mild and moderate HAA. However, severe HAA was significantly associated with a 39% increase in the odds for the composite outcome and a 41% increase in the odds for 30-day readmission (P = 0.008 and P = 0.02, respectively).
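As a rough check on how the unadjusted figures relate to the incidences reported earlier (9.7% without HAA and 16.4% with severe HAA for the composite outcome), the odds ratio can be approximated directly from the two proportions. This is a back-of-the-envelope sketch using rounded published percentages, not a reproduction of the study's model, which also accounted for clustering by hospital; the helper names are assumptions.

```python
def odds(p: float) -> float:
    """Convert a probability (0 < p < 1) to odds."""
    return p / (1.0 - p)


def odds_ratio(p_exposed: float, p_reference: float) -> float:
    """Unadjusted odds ratio comparing two incidence proportions."""
    return odds(p_exposed) / odds(p_reference)


# Composite outcome incidences taken from the text (rounded values).
incidence_no_haa = 0.097
incidence_severe_haa = 0.164

or_severe = odds_ratio(incidence_severe_haa, incidence_no_haa)
percent_increase = (or_severe - 1.0) * 100.0
print(f"Approximate unadjusted OR: {or_severe:.2f} "
      f"({percent_increase:.0f}% increase in odds)")
```

The result is close to the reported 81% increase in the odds for severe HAA; the small difference is expected because the inputs here are rounded percentages rather than patient-level data.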

In sensitivity analyses, the exclusion of individuals who received at least 1 blood transfusion during the index hospitalization (n=298) and individuals who had a primary discharge diagnosis for AMI (n=353) did not substantively change the estimates of the association between severe HAA and postdischarge outcomes (Supplemental Tables 3 and 4). However, because of the smaller number of adverse events in each analysis, the confidence intervals were wider, and the associations of severe HAA with the composite outcome and with readmission were no longer statistically significant in these subcohorts.

DISCUSSION

In this large and diverse sample of medical inpatients, we found that HAA occurs in one-third of adults with a normal hematocrit value at admission, with 10.1% of the cohort developing moderate HAA and 1.4% developing severe HAA by the time of discharge. Length of stay and undergoing a major diagnostic or therapeutic procedure were the 2 strongest potentially modifiable predictors of developing moderate or severe HAA. Severe HAA was independently associated with a 39% increase in the odds of being readmitted or dying within 30 days after hospital discharge compared to not developing HAA. However, the associations of mild and moderate HAA with adverse outcomes were attenuated after adjusting for confounders and were no longer statistically significant.

To our knowledge, this is the first study on the postdischarge adverse outcomes of HAA among a diverse cohort of medical inpatients hospitalized for any reason. In a more restricted population, Salisbury et al.3 found that patients hospitalized for AMI who developed moderate to severe HAA (hemoglobin value at discharge of 11 g/dL or less) had greater 1-year mortality than those without HAA (8.4% vs. 2.6%, P < 0.001), and had an 82% increase in the hazard for mortality (hazard ratio, 1.82; 95% confidence interval, 1.11-2.98). Others have similarly shown that HAA is common among patients hospitalized with AMI and is associated with greater mortality.5,9,18 Our study extends this prior research by showing that severe HAA is associated with an increased risk for adverse outcomes among adult medical inpatients hospitalized for any reason, not only those hospitalized for AMI or those receiving blood transfusions.

Despite the increased harm associated with severe HAA, it is unclear whether HAA is a preventable hazard of hospitalization, as suggested by others.6,8 Most patients in our cohort who developed severe HAA underwent a major procedure, had a discharge diagnosis for hemorrhage, and/or had a discharge diagnosis for a coagulation or hemorrhagic disorder. Thus, blood loss due to phlebotomy, 1 of the more modifiable etiologies of HAA, was unlikely to have been the primary driver for most patients who developed severe HAA. Since it has been estimated to take 15 days of daily phlebotomy of 53 mL of whole blood in females of average body weight (and 20 days for average weight males) with no bone marrow synthesis for severe anemia to develop, it is even less likely that phlebotomy was the principal etiology given an 8-day median LOS among patients with severe HAA.19,20 However, since the etiology of HAA can be multifactorial, limiting blood loss due to phlebotomy by using smaller volume tubes, blood conservation devices, or reducing unnecessary testing may mitigate the development of severe HAA.21,22 Additionally, since more than three-quarters of patients who developed severe HAA underwent a major procedure, more care and attention to minimizing operative blood loss could lessen the severity of HAA and facilitate better recovery. If minimizing blood loss is not feasible, in the absence of symptoms related to anemia or ongoing blood loss, randomized controlled trials overwhelmingly support a restrictive transfusion strategy using a hemoglobin value threshold of 7 g/dL, even in the postoperative setting.23-25
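The phlebotomy estimate cited above lends itself to a simple arithmetic comparison. The sketch below only multiplies the figures quoted in the text (53 mL per day; 15 or 20 days; an 8-day median LOS) and assumes a constant daily draw; it is not a physiologic model, and the constant and function names are assumptions for illustration.

```python
DAILY_DRAW_ML = 53            # daily phlebotomy volume quoted in the text
DAYS_TO_SEVERE_FEMALE = 15    # estimated days to severe anemia, average-weight female
DAYS_TO_SEVERE_MALE = 20      # estimated days to severe anemia, average-weight male
MEDIAN_LOS_DAYS = 8           # median LOS among patients with severe HAA


def cumulative_draw_ml(days: int, daily_ml: int = DAILY_DRAW_ML) -> int:
    """Total blood drawn over a stay, assuming the same volume every day."""
    return days * daily_ml


needed_female = cumulative_draw_ml(DAYS_TO_SEVERE_FEMALE)
needed_male = cumulative_draw_ml(DAYS_TO_SEVERE_MALE)
drawn_over_median_stay = cumulative_draw_ml(MEDIAN_LOS_DAYS)

print(f"Estimated loss needed for severe anemia: {needed_female} mL (F), {needed_male} mL (M)")
print(f"Loss over an {MEDIAN_LOS_DAYS}-day stay: {drawn_over_median_stay} mL "
      f"({drawn_over_median_stay / needed_female:.0%} of the female estimate)")
```

Under these assumptions, daily phlebotomy over the median stay accounts for roughly half of the cumulative loss estimated to produce severe anemia in an average-weight female, which is consistent with the argument that phlebotomy alone was unlikely to be the principal etiology.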

The implications of mild to moderate HAA are less clear. The odds ratios for mild and moderate HAA, while not statistically significant, suggest a small increase in harm compared to not developing HAA. Furthermore, the upper boundary of the confidence intervals for mild and moderate HAA cannot exclude a possible 30% and 56% increase in the odds for the 30-day composite outcome, respectively. Thus, a better powered study, including more patients and extending the time interval for ascertaining postdischarge adverse events beyond 30 days, may reveal a harmful association. Lastly, our study assessed only the association of HAA with 30-day readmission and mortality. Examining the association between HAA and other patient-centered outcomes such as fatigue, functional impairment, and prolonged posthospitalization recovery time may uncover other important adverse effects of mild and moderate HAA, both of which occur far more frequently than severe HAA.

Our findings should be interpreted in the context of several limitations. First, although we included a diverse group of patients from a multihospital cohort, generalizability to other settings is uncertain. Second, as this was a retrospective study using EHR data, we had limited information to infer the precise mechanism of HAA for each patient. However, procedure codes and discharge diagnoses enabled us to assess which patients underwent a major procedure or had a hemorrhage or hemorrhagic disorder during the hospitalization. Third, given the relatively small number of patients with severe HAA in our cohort, we were unable to assess whether the association of severe HAA with adverse outcomes differed by suspected etiology. Lastly, because we were unable to ascertain the timing of the hematocrit values within the first 24 hours of admission, we excluded both patients with preexisting anemia on admission and those who developed HAA within the first 24 hours of admission, which is not uncommon.26 Thus, we were unable to assess the effect of acute on chronic anemia arising during hospitalization and HAA that develops within the first 24 hours, both of which may also be harmful.18,27,28

In conclusion, severe HAA occurs in 1.4% of medical hospitalizations among patients without anemia on admission and is associated with increased odds of death or readmission within 30 days. Since most patients with severe HAA had undergone a major procedure or had a discharge diagnosis of hemorrhage or a coagulation or hemorrhagic disorder, it is unclear if severe HAA is potentially preventable through preventing blood loss from phlebotomy or by reducing iatrogenic injury during procedures. Future research should assess the potential preventability of severe HAA, and examine other patient-centered outcomes potentially related to anemia, including fatigue, functional impairment, and trajectory of posthospital recovery.

Acknowledgments

The authors would like to acknowledge Ruben Amarasingham, MD, MBA, President and Chief Executive Officer of the Parkland Center for Clinical Innovation, and Ferdinand Velasco, MD, Chief Health Information Officer at Texas Health Resources, for their assistance in assembling the 6-hospital cohort used in this study. The authors would also like to thank Valy Fontil, MD, MAS, Assistant Professor of Medicine at the University of California San Francisco School of Medicine, and Elizabeth Rogers, MD, MAS, Assistant Professor of Internal Medicine and Pediatrics at the University of Minnesota Medical School, for their constructive feedback on an earlier version of this manuscript.

Disclosures

This work was supported by the Agency for Healthcare Research and Quality-funded UT Southwestern Center for Patient-Centered Outcomes Research (R24 HS022418-01); the Commonwealth Foundation (#20100323); the UT Southwestern KL2 Scholars Program supported by the National Institutes of Health (KL2 TR001103); the National Center for Advancing Translational Sciences at the National Institutes of Health (U54 RFA-TR-12-006); and the National Institute on Aging (K23AG052603). The study sponsors had no role in design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript. The authors have no financial conflicts of interest to disclose.

Hospital-acquired anemia (HAA) is defined as having a normal hemoglobin value upon admission but developing anemia during the course of hospitalization. The condition is common, with an incidence ranging from approximately 25% when defined by using the hemoglobin value prior to discharge to 74% when using the nadir hemoglobin value during hospitalization.1-5 While there are many potential etiologies for HAA, given that iatrogenic blood loss from phlebotomy may lead to its development,6,7 HAA has been postulated to be a hazard of hospitalization that is potentially preventable.8 However, it is unclear whether the development of HAA portends worse outcomes after hospital discharge.

The limited number of studies on the association between HAA and postdischarge outcomes has been restricted to patients hospitalized for acute myocardial infarction (AMI).3,9,10 Among this subpopulation, HAA is independently associated with greater morbidity and mortality following hospital discharge.3,9,10 In a more broadly representative population of hospitalized adults, Koch et al.2 found that the development of HAA is associated with greater length of stay (LOS), hospital charges, and inpatient mortality. However, given that HAA was defined by the lowest hemoglobin level during hospitalization (and not necessarily the last value prior to discharge), it is unclear if the worse outcomes observed were the cause of the HAA, rather than its effect, since hospital LOS is a robust predictor for the development of HAA, as well as a major driver of hospital costs and a prognostic marker for inpatient mortality.3,9 Furthermore, this study evaluated outcomes only during the index hospitalization, so it is unclear if patients who develop HAA have worse clinical outcomes after discharge.

Therefore, in this study, we used clinically granular electronic health record (EHR) data from a diverse cohort of consecutive medicine inpatients hospitalized for any reason at 1 of 6 hospitals to: 1) describe the epidemiology of HAA; 2) identify predictors of its development; and 3) examine its association with 30-day postdischarge adverse outcomes. We hypothesized that the development of HAA would be independently associated with 30-day readmission and mortality in a dose-dependent fashion, with increasing severity of HAA associated with worse outcomes.

METHODS

Study Design, Population, and Data Sources

We conducted a retrospective observational cohort study using EHR data collected from November 1, 2009 to October 30, 2010 from 6 hospitals in the north Texas region. One site was a university-affiliated safety-net hospital; the remaining 5 community hospitals were a mix of teaching and nonteaching sites. All hospitals used the Epic EHR system (Epic Systems Corporation, Verona, Wisconsin). Details of this cohort have been published.11,12This study included consecutive hospitalizations among adults age 18 years or older who were discharged from a medicine inpatient service with any diagnosis. We excluded hospitalizations by individuals who were anemic within the first 24 hours of admission (hematocrit less than 36% for women and less than 40% for men), were missing a hematocrit value within the first 24 hours of hospitalization or a repeat hematocrit value prior to discharge, had a hospitalization in the preceding 30 days (ie, index hospitalization was considered a readmission), died in the hospital, were transferred to another hospital, or left against medical advice. For individuals with multiple eligible hospitalizations during the study period, we included only the first hospitalization. We also excluded those discharged to hospice, given that this population of individuals may have intentionally desired less aggressive care.

Definition of Hospital-Acquired Anemia

HAA was defined as having a normal hematocrit value (36% or greater for women and 40% or greater for men) within the first 24 hours of admission and a hematocrit value at the time of hospital discharge lower than the World Health Organization’s sex-specific cut points.13 If there was more than 1 hematocrit value on the same day, we chose the lowest value. Based on prior studies, HAA was further categorized by severity as mild (hematocrit greater than 33% and less than 36% in women; and greater than 33% and less than 40% in men), moderate (hematocrit greater than 27% and 33% or less for all), or severe (hematocrit 27% or less for all).2,14

Characteristics

We extracted information on sociodemographic characteristics, comorbidities, LOS, procedures, blood transfusions, and laboratory values from the EHR. Hospitalizations in the 12 months preceding the index hospitalization were ascertained from the EHR and from an all-payer regional hospitalization database that captures hospitalizations from 75 acute care hospitals within a 100-mile radius of Dallas-Fort Worth. International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) discharge diagnosis codes were categorized according to the Agency for Healthcare Research and Quality (AHRQ) Clinical Classifications Software (CCS).15 We defined a diagnosis for hemorrhage and coagulation, and hemorrhagic disorder as the presence of any ICD-9-CM code (primary or secondary) that mapped to the AHRQ CCS diagnoses 60 and 153, and 62, respectively. Procedures were categorized as minor diagnostic, minor therapeutic, major diagnostic, and major therapeutic using the AHRQ Healthcare Cost and Utilization Procedure Classes tool.16

Outcomes

The primary outcome was a composite of death or readmission within 30 days of hospital discharge. Hospital readmissions were ascertained at the index hospital and at any of 75 acute care hospitals in the region as described earlier. Death was ascertained from each of the hospitals’ EHR and administrative data and the Social Security Death Index. Individuals who had both outcomes (eg, a 30-day readmission and death) were considered to have only 1 occurrence of the composite primary outcome measure. Our secondary outcomes were death and readmission within 30 days of discharge, considered as separate outcomes.

Statistical Analysis

We used logistic regression models to evaluate predictors of HAA and to estimate the association of HAA on subsequent 30-day adverse outcomes after hospital discharge. All models accounted for clustering of patients by hospital. For the outcomes analyses, models were adjusted for potential confounders based on prior literature and our group’s expertise, which included age, sex, race/ethnicity, Charlson comorbidity index, prior hospitalizations, nonelective admission status, creatinine level on admission, blood urea nitrogen (BUN) to creatinine ratio of more than 20:1 on admission, LOS, receipt of a major diagnostic or therapeutic procedure during the index hospitalization, a discharge diagnosis for hemorrhage, and a discharge diagnosis for a coagulation or hemorrhagic disorder. For the mortality analyses, given the limited number of 30-day deaths after hospital discharge in our cohort, we collapsed moderate and severe HAA into a single category. In sensitivity analyses, we repeated the adjusted model, but excluded patients in our cohort who had received at least 1 blood transfusion during the index hospitalization (2.6%) given its potential for harm, and patients with a primary discharge diagnosis for AMI (3.1%).17

The functional forms of continuous variables were assessed using restricted cubic splines and locally weighted scatterplot smoothing techniques. All analyses were performed using STATA statistical software version 12.0 (StataCorp, College Station, Texas). The University of Texas Southwestern Medical Center institutional review board approved this study.

RESULTS

Of 53,995 consecutive medicine hospitalizations among adults age 18 years or older during our study period, 11,309 index hospitalizations were included in our study cohort (Supplemental Figure 1). The majority of patients excluded were because of having documented anemia within the first 24 hours of admission (n=24,950). With increasing severity of HAA, patients were older, more likely to be female, non-Hispanic white, electively admitted, have fewer comorbidities, less likely to be hospitalized in the past year, more likely to have had a major procedure, receive a blood transfusion, have a longer LOS, and have a primary or secondary discharge diagnosis for a hemorrhage or a coagulation or hemorrhagic disorder (Table 1).

Epidemiology of HAA

Among this cohort of patients without anemia on admission, the median hematocrit value on admission was 40.6 g/dL and on discharge was 38.9 g/dL. One-third of patients with normal hematocrit value at admission developed HAA, with 21.6% developing mild HAA, 10.1% developing moderate HAA, and 1.4% developing severe HAA. The median discharge hematocrit value was 36 g/dL (interquartile range [IQR]), 35-38 g/dL) for the group of patients who developed mild HAA, 31 g/dL (IQR, 30-32 g/dL) for moderate HAA, and 26 g/dL (IQR, 25-27 g/dL) for severe HAA (Supplemental Figure 2). Among the severe HAA group, 135 of the 159 patients (85%) had a major procedure (n=123, accounting for 219 unique major procedures), a diagnosis for hemorrhage (n=30), and/or a diagnosis for a coagulation or hemorrhagic disorder (n=23) during the index hospitalization. Of the 219 major procedures among patients with severe HAA, most were musculoskeletal (92 procedures), cardiovascular (61 procedures), or digestive system-related (41 procedures). The most common types of procedures were coronary artery bypass graft (36 procedures), hip replacement (25 procedures), knee replacement (17 procedures), and femur fracture reduction (15 procedures). The 10 most common principal discharge diagnoses of the index hospitalization by HAA group are shown in Supplemental Table 1. For the severe HAA group, the most common diagnosis was hip fracture (20.8%).

Predictors of HAA

Compared to no or mild HAA, female sex, elective admission status, serum creatinine on admission, BUN to creatinine ratio greater than 20 to 1, hospital LOS, and undergoing a major diagnostic or therapeutic procedure were predictors for the development of moderate or severe HAA (Table 2). The model explained 23% of the variance (McFadden’s pseudo R2).

Incidence of Postdischarge Outcomes by Severity of HAA

The severity of HAA was associated with a dose-dependent increase in the incidence of 30-day adverse outcomes, such that patients with increasing severity of HAA had greater 30-day composite, mortality, and readmission outcomes (P < 0.001; Figure). The 30-day postdischarge composite outcome was primarily driven by hospital readmissions given the low mortality rate in our cohort. Patients who did not develop HAA had an incidence of 9.7% for the composite outcome, whereas patients with severe HAA had an incidence of 16.4%. Among the 24 patients with severe HAA but who had not undergone a major procedure or had a discharge diagnosis for hemorrhage or for a coagulation or hemorrhagic disorder, only 3 (12.5%) had a composite postdischarge adverse outcome (2 readmissions and 1 death). The median time to readmission was similar between groups, but more patients with severe HAA had an early readmission within 7 days of hospital discharge than patients who did not develop HAA (6.9% vs. 2.9%, P = 0.001; Supplemental Table 2).

Association of HAA and Postdischarge Outcomes

In unadjusted analyses, compared to not developing HAA, mild, moderate, and severe HAA were associated with a 29%, 61%, and 81% increase in the odds for a composite outcome, respectively (Table 3). After adjustment for confounders, the effect size for HAA attenuated and was no longer statistically significant for mild and moderate HAA. However, severe HAA was significantly associated with a 39% increase in the odds for the composite outcome and a 41% increase in the odds for 30-day readmission (P = 0.008 and P = 0.02, respectively).

In sensitivity analyses, the exclusion of individuals who received at least 1 blood transfusion during the index hospitalization (n=298) and individuals who had a primary discharge diagnosis for AMI (n=353) did not substantively change the estimates of the association between severe HAA and postdischarge outcomes (Supplemental Tables 3 and 4). However, because of the fewer number of adverse events for each analysis, the confidence intervals were wider and the association of severe HAA and the composite outcome and readmission were no longer statistically significant in these subcohorts.

DISCUSSION

In this large and diverse sample of medical inpatients, we found that HAA occurs in one-third of adults with normal hematocrit value at admission, where 10.1% of the cohort developed moderately severe HAA and 1.4% developed severe HAA by the time of discharge. Length of stay and undergoing a major diagnostic or therapeutic procedure were the 2 strongest potentially modifiable predictors of developing moderate or severe HAA. Severe HAA was independently associated with a 39% increase in the odds of being readmitted or dying within 30 days after hospital discharge compared to not developing HAA. However, the associations between mild or moderate HAA with adverse outcomes were attenuated after adjusting for confounders and were no longer statistically significant.

To our knowledge, this is the first study on the postdischarge adverse outcomes of HAA among a diverse cohort of medical inpatients hospitalized for any reason. In a more restricted population, Salisbury et al.3 found that patients hospitalized for AMI who developed moderate to severe HAA (hemoglobin value at discharge of 11 g/dL or less) had greater 1-year mortality than those without HAA (8.4% vs. 2.6%, P < 0.001), and had an 82% increase in the hazard for mortality (95% confidence interval, hazard ratio 1.11-2.98). Others have similarly shown that HAA is common among patients hospitalized with AMI and is associated with greater mortality.5,9,18 Our study extends upon this prior research by showing that severe HAA increases the risk for adverse outcomes for all adult inpatients, not only those hospitalized for AMI or among those receiving blood transfusions.

Despite the increased harm associated with severe HAA, it is unclear whether HAA is a preventable hazard of hospitalization, as suggested by others.6,8 Most patients in our cohort who developed severe HAA underwent a major procedure, had a discharge diagnosis for hemorrhage, and/or had a discharge diagnosis for a coagulation or hemorrhagic disorder. Thus, blood loss due to phlebotomy, 1 of the more modifiable etiologies of HAA, was unlikely to have been the primary driver for most patients who developed severe HAA. Since it has been estimated to take 15 days of daily phlebotomy of 53 mL of whole blood in females of average body weight (and 20 days for average weight males) with no bone marrow synthesis for severe anemia to develop, it is even less likely that phlebotomy was the principal etiology given an 8-day median LOS among patients with severe HAA.19,20 However, since the etiology of HAA can be multifactorial, limiting blood loss due to phlebotomy by using smaller volume tubes, blood conservation devices, or reducing unnecessary testing may mitigate the development of severe HAA.21,22 Additionally, since more than three-quarters of patients who developed severe HAA underwent a major procedure, more care and attention to minimizing operative blood loss could lessen the severity of HAA and facilitate better recovery. If minimizing blood loss is not feasible, in the absence of symptoms related to anemia or ongoing blood loss, randomized controlled trials overwhelmingly support a restrictive transfusion strategy using a hemoglobin value threshold of 7 mg/dL, even in the postoperative setting.23-25

The implications of mild to moderate HAA are less clear. The odds ratios for mild and moderate HAA, while not statistically significant, suggest a small increase in harm compared to not developing HAA. Furthermore, the upper boundary of the confidence intervals for mild and moderate HAA cannot exclude a possible 30% and 56% increase in the odds for the 30-day composite outcome, respectively. Thus, a better powered study, including more patients and extending the time interval for ascertaining postdischarge adverse events beyond 30 days, may reveal a harmful association. Lastly, our study assessed only the association of HAA with 30-day readmission and mortality. Examining the association between HAA and other patient-centered outcomes such as fatigue, functional impairment, and prolonged posthospitalization recovery time may uncover other important adverse effects of mild and moderate HAA, both of which occur far more frequently than severe HAA.

Our findings should be interpreted in the context of several limitations. First, although we included a diverse group of patients from a multihospital cohort, generalizability to other settings is uncertain. Second, as this was a retrospective study using EHR data, we had limited information to infer the precise mechanism of HAA for each patient. However, procedure codes and discharge diagnoses enabled us to assess which patients underwent a major procedure or had a hemorrhage or hemorrhagic disorder during the hospitalization. Third, given the relatively few number of patients with severe HAA in our cohort, we were unable to assess if the association of severe HAA differed by suspected etiology. Lastly, because we were unable to ascertain the timing of the hematocrit values within the first 24 hours of admission, we excluded both patients with preexisting anemia on admission and those who developed HAA within the first 24 hours of admission, which is not uncommon.26 Thus, we were unable to assess the effect of acute on chronic anemia arising during hospitalization and HAA that develops within the first 24 hours, both of which may also be harmful.18,27,28

In conclusion, severe HAA occurs in 1.4% of all medical hospitalizations and is associated with increased odds of death or readmission within 30 days. Since most patients with severe HAA had undergone a major procedure or had a discharge diagnosis of hemorrhage or a coagulation or hemorrhagic disorder, it is unclear whether severe HAA can be prevented by limiting blood loss from phlebotomy or by reducing iatrogenic injury during procedures. Future research should assess the potential preventability of severe HAA and examine other patient-centered outcomes potentially related to anemia, including fatigue, functional impairment, and trajectory of posthospital recovery.

Acknowledgments

The authors would like to acknowledge Ruben Amarasingham, MD, MBA, President and Chief Executive Officer of the Parkland Center for Clinical Innovation, and Ferdinand Velasco, MD, Chief Health Information Officer at Texas Health Resources, for their assistance in assembling the 6-hospital cohort used in this study. The authors would also like to thank Valy Fontil, MD, MAS, Assistant Professor of Medicine at the University of California San Francisco School of Medicine, and Elizabeth Rogers, MD, MAS, Assistant Professor of Internal Medicine and Pediatrics at the University of Minnesota Medical School, for their constructive feedback on an earlier version of this manuscript.

Disclosures

This work was supported by the Agency for Healthcare Research and Quality-funded UT Southwestern Center for Patient-Centered Outcomes Research (R24 HS022418-01); the Commonwealth Foundation (#20100323); the UT Southwestern KL2 Scholars Program supported by the National Institutes of Health (KL2 TR001103); the National Center for Advancing Translational Sciences at the National Institutes of Health (U54 RFA-TR-12-006); and the National Institute on Aging (K23AG052603). The study sponsors had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; or preparation, review, or approval of the manuscript. The authors have no financial conflicts of interest to disclose.

1. Kurniali PC, Curry S, Brennan KW, et al. A retrospective study investigating the incidence and predisposing factors of hospital-acquired anemia. Anemia. 2014;2014:634582.

2. Koch CG, Li L, Sun Z, et al. Hospital-acquired anemia: prevalence, outcomes, and healthcare implications. J Hosp Med. 2013;8(9):506-512.

3. Salisbury AC, Alexander KP, Reid KJ, et al. Incidence, correlates, and outcomes of acute, hospital-acquired anemia in patients with acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2010;3(4):337-346.

4. Salisbury AC, Amin AP, Reid KJ, et al. Hospital-acquired anemia and in-hospital mortality in patients with acute myocardial infarction. Am Heart J. 2011;162(2):300-309 e303.

5. Meroño O, Cladellas M, Recasens L, et al. In-hospital acquired anemia in acute coronary syndrome. Predictors, in-hospital prognosis and one-year mortality. Rev Esp Cardiol (Engl Ed). 2012;65(8):742-748.

6. Salisbury AC, Reid KJ, Alexander KP, et al. Diagnostic blood loss from phlebotomy and hospital-acquired anemia during acute myocardial infarction. Arch Intern Med. 2011;171(18):1646-1653.

7. Thavendiranathan P, Bagai A, Ebidia A, Detsky AS, Choudhry NK. Do blood tests cause anemia in hospitalized patients? The effect of diagnostic phlebotomy on hemoglobin and hematocrit levels. J Gen Intern Med. 2005;20(6):520-524.

8. Rennke S, Fang MC. Hazards of hospitalization: more than just “never events”. Arch Intern Med. 2011;171(18):1653-1654.

9. Choi JS, Kim YA, Kang YU, et al. Clinical impact of hospital-acquired anemia in association with acute kidney injury and chronic kidney disease in patients with acute myocardial infarction. PLoS One. 2013;8(9):e75583.

10. Salisbury AC, Kosiborod M, Amin AP, et al. Recovery from hospital-acquired anemia after acute myocardial infarction and effect on outcomes. Am J Cardiol. 2011;108(7):949-954.

11. Nguyen OK, Makam AN, Clark C, et al. Predicting all-cause readmissions using electronic health record data from the entire hospitalization: Model development and comparison. J Hosp Med. 2016;11(7):473-480.

12. Amarasingham R, Velasco F, Xie B, et al. Electronic medical record-based multicondition models to predict the risk of 30 day readmission or death among adult medicine patients: validation and comparison to existing models. BMC Med Inform Decis Mak. 2015;15:39.

13. World Health Organization. Hemoglobin concentrations for the diagnosis of anaemia and assessment of severity. http://www.who.int/vmnis/indicators/haemoglobin.pdf. Accessed March 15, 2016.

14. Martin ND, Scantling D. Hospital-acquired anemia: a contemporary review of etiologies and prevention strategies. J Infus Nurs. 2015;38(5):330-338.

15. Agency for Healthcare Research and Quality, Rockville, MD. Clinical classification software (CCS) for ICD-9-CM. Healthcare Cost and Utilization Project. 2015. http://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp. Accessed November 18, 2015.

16. Agency for Healthcare Research and Quality, Rockville, MD. Procedure classes 2015. Healthcare Cost and Utilization Project. 2015. https://www.hcup-us.ahrq.gov/toolssoftware/procedure/procedure.jsp. Accessed November 18, 2015.

17. Corwin HL, Gettinger A, Pearl RG, et al. The CRIT Study: Anemia and blood transfusion in the critically ill--current clinical practice in the United States. Crit Care Med. 2004;32(1):39-52.

18. Aronson D, Suleiman M, Agmon Y, et al. Changes in haemoglobin levels during hospital course and long-term outcome after acute myocardial infarction. Eur Heart J. 2007;28(11):1289-1296.

19. Lyon AW, Chin AC, Slotsve GA, Lyon ME. Simulation of repetitive diagnostic blood loss and onset of iatrogenic anemia in critical care patients with a mathematical model. Comput Biol Med. 2013;43(2):84-90.

20. van der Bom JG, Cannegieter SC. Hospital-acquired anemia: the contribution of diagnostic blood loss. J Thromb Haemost. 2015;13(6):1157-1159.

21. Sanchez-Giron F, Alvarez-Mora F. Reduction of blood loss from laboratory testing in hospitalized adult patients using small-volume (pediatric) tubes. Arch Pathol Lab Med. 2008;132(12):1916-1919.

22. Smoller BR, Kruskall MS. Phlebotomy for diagnostic laboratory tests in adults. Pattern of use and effect on transfusion requirements. N Engl J Med. 1986;314(19):1233-1235.

23. Carson JL, Carless PA, Hébert PC. Transfusion thresholds and other strategies for guiding allogeneic red blood cell transfusion. Cochrane Database Syst Rev. 2012(4):CD002042.

24. Carson JL, Carless PA, Hébert PC. Outcomes using lower vs higher hemoglobin thresholds for red blood cell transfusion. JAMA. 2013;309(1):83-84.

25. Carson JL, Sieber F, Cook DR, et al. Liberal versus restrictive blood transfusion strategy: 3-year survival and cause of death results from the FOCUS randomised controlled trial. Lancet. 2015;385(9974):1183-1189.

26. Rajkomar A, McCulloch CE, Fang MC. Low diagnostic utility of rechecking hemoglobins within 24 hours in hospitalized patients. Am J Med. 2016;129(11):1194-1197.

27. Reade MC, Weissfeld L, Angus DC, Kellum JA, Milbrandt EB. The prevalence of anemia and its association with 90-day mortality in hospitalized community-acquired pneumonia. BMC Pulm Med. 2010;10:15.

28. Halm EA, Wang JJ, Boockvar K, et al. The effect of perioperative anemia on clinical and functional outcomes in patients with hip fracture. J Orthop Trauma. 2004;18(6):369-374.

© 2017 Society of Hospital Medicine

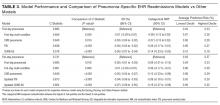

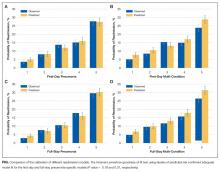

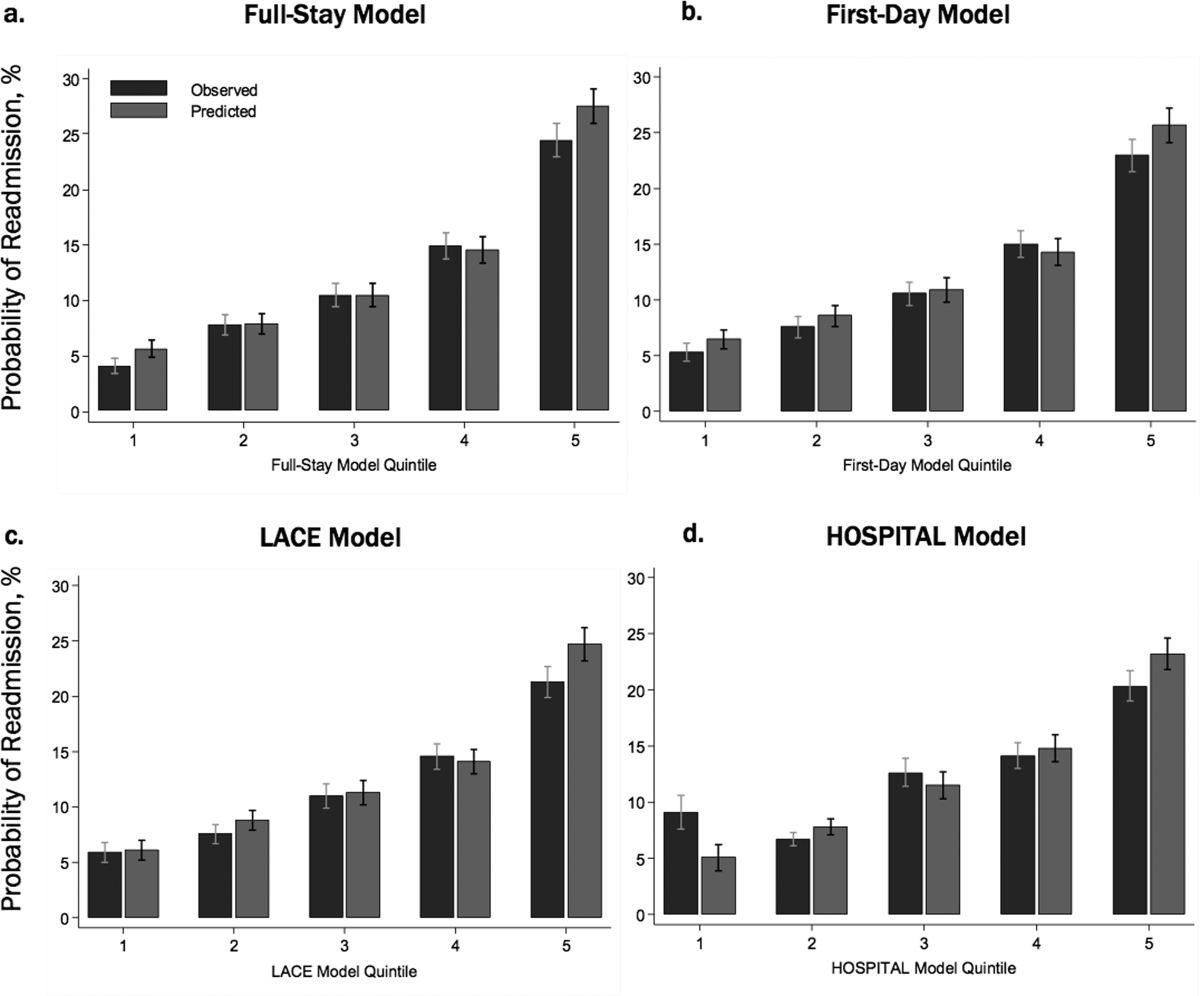

Predicting 30-day pneumonia readmissions using electronic health record data

Pneumonia is a leading cause of hospitalizations in the U.S., accounting for more than 1.1 million discharges annually.1 Pneumonia is frequently complicated by hospital readmission, which is costly and potentially avoidable.2,3 Due to financial penalties imposed on hospitals for higher than expected 30-day readmission rates, there is increasing attention to implementing interventions to reduce readmissions in this population.4,5 However, because these programs are resource-intensive, interventions are thought to be most cost-effective if they are targeted to high-risk individuals who are most likely to benefit.6-8

Current pneumonia-specific readmission risk-prediction models that could enable identification of high-risk patients suffer from poor predictive ability, greatly limiting their use, and most were validated among older adults or by using data from single academic medical centers, limiting their generalizability.9-14 A potential reason for poor predictive accuracy is the omission of known robust clinical predictors of pneumonia-related outcomes, including pneumonia severity of illness and stability on discharge.15-17 Approaches using electronic health record (EHR) data, which include this clinically granular data, could enable hospitals to more accurately and pragmatically identify high-risk patients during the index hospitalization and enable interventions to be initiated prior to discharge.