Predicting 30-day pneumonia readmissions using electronic health record data

Pneumonia is a leading cause of hospitalizations in the U.S., accounting for more than 1.1 million discharges annually.1 Pneumonia is frequently complicated by hospital readmission, which is costly and potentially avoidable.2,3 Because of financial penalties imposed on hospitals for higher-than-expected 30-day readmission rates, there is increasing attention to implementing interventions to reduce readmissions in this population.4,5 However, because these programs are resource-intensive, interventions are thought to be most cost-effective if they are targeted to high-risk individuals who are most likely to benefit.6-8

Current pneumonia-specific readmission risk-prediction models that could enable identification of high-risk patients suffer from poor predictive ability, greatly limiting their use, and most were validated among older adults or by using data from single academic medical centers, limiting their generalizability.9-14 A potential reason for poor predictive accuracy is the omission of known robust clinical predictors of pneumonia-related outcomes, including pneumonia severity of illness and stability on discharge.15-17 Approaches using electronic health record (EHR) data, which include this clinically granular data, could enable hospitals to more accurately and pragmatically identify high-risk patients during the index hospitalization and enable interventions to be initiated prior to discharge.

An alternative strategy for identifying patients at high risk for readmission is to use a multi-condition risk-prediction model, because developing and implementing models for every condition may be time-consuming and costly. We have derived and validated 2 multi-condition risk-prediction models using EHR data: 1 using data from the first day of hospital admission (‘first-day’ model), and the other incorporating data from the entire hospitalization (‘full-stay’ model) to reflect in-hospital complications and clinical stability at discharge.18,19 However, it is unknown whether a multi-condition model would perform as well as a disease-specific model for pneumonia.

This study aimed to develop 2 EHR-based pneumonia-specific readmission risk-prediction models using data routinely collected in clinical practice (a ‘first-day’ and a ‘full-stay’ model) and to compare the performance of each model with: 1) each other; 2) the corresponding multi-condition EHR model; and 3) other potentially useful models for predicting pneumonia readmissions (the Centers for Medicare and Medicaid Services [CMS] pneumonia model and 2 commonly used pneumonia severity of illness scores validated for predicting mortality). We hypothesized that the pneumonia-specific EHR models would outperform the other models and that the full-stay pneumonia-specific model would outperform the first-day pneumonia-specific model.

METHODS

Study Design, Population, and Data Sources

We conducted an observational study using EHR data collected from 6 hospitals (including safety net, community, teaching, and nonteaching hospitals) in north Texas between November 2009 and October 2010. All hospitals used the Epic EHR (Epic Systems Corporation, Verona, WI). Details of this cohort have been published.18,19

We included consecutive hospitalizations among adults 18 years and older discharged from any medicine service with principal discharge diagnoses of pneumonia (ICD-9-CM codes 480-483, 485, 486-487), sepsis (ICD-9-CM codes 038, 995.91, 995.92, 785.52), or respiratory failure (ICD-9-CM codes 518.81, 518.82, 518.84, 799.1) when the latter 2 were also accompanied by a secondary diagnosis of pneumonia.20 For individuals with multiple hospitalizations during the study period, we included only the first hospitalization. We excluded individuals who died during the index hospitalization or within 30 days of discharge, were transferred to another acute care facility, or left against medical advice.
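The inclusion rules above can be expressed as a small filter. The following is a minimal Python sketch, not the study's extraction code: it assumes dotted ICD-9-CM code strings (eg, "482.41"), treats a listed 3-digit category as covering all of its subcodes, and uses hypothetical record fields (`patient_id`, `admit_date`, `principal`, `secondary`, `age`, `excluded`). The medicine-service restriction is not modeled, and a patient whose first qualifying stay meets an exclusion criterion is dropped rather than replaced by a later stay, which is an assumption.

```python
# Illustrative encoding of the inclusion rules; codes are dotted ICD-9-CM strings.
PNEUMONIA = ("480", "481", "482", "483", "485", "486", "487")
SEPSIS = ("038", "995.91", "995.92", "785.52")
RESP_FAILURE = ("518.81", "518.82", "518.84", "799.1")


def matches(code: str, listed: tuple) -> bool:
    """True if `code` is a listed code or a subcode of a listed 3-digit category."""
    return any(code == c or (len(c) == 3 and code.startswith(c + ".")) for c in listed)


def is_pneumonia(code: str) -> bool:
    return matches(code, PNEUMONIA)


def qualifies(principal: str, secondary: list) -> bool:
    """Principal pneumonia, or principal sepsis/respiratory failure plus secondary pneumonia."""
    if is_pneumonia(principal):
        return True
    if matches(principal, SEPSIS + RESP_FAILURE):
        return any(is_pneumonia(c) for c in secondary)
    return False


def select_cohort(stays: list) -> list:
    """First qualifying adult hospitalization per patient, minus excluded stays.

    Each stay is a dict with hypothetical keys; `excluded` is True if the patient
    died in hospital or within 30 days, was transferred, or left against advice.
    `admit_date` may be any sortable value (a date or an ISO date string).
    """
    first = {}
    for stay in sorted(stays, key=lambda s: s["admit_date"]):
        if stay["age"] >= 18 and qualifies(stay["principal"], stay["secondary"]):
            first.setdefault(stay["patient_id"], stay)  # keep earliest stay only
    return [s for s in first.values() if not s["excluded"]]
```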

Outcomes

The primary outcome was all-cause 30-day readmission, defined as a nonelective hospitalization within 30 days of discharge to any of 75 acute care hospitals within a 100-mile radius of Dallas, ascertained from an all-payer regional hospitalization database.
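A minimal sketch of the outcome flag, assuming each subsequent admission from the regional database is available as a hypothetical `(admit_date, is_elective)` pair and that a nonelective admission 0 to 30 days after the discharge date counts as a readmission (the handling of same-day readmissions is an assumption here).

```python
from datetime import date


def readmitted_within_30_days(discharge: date, later_admissions) -> bool:
    """True if any nonelective admission starts 0-30 days after the index discharge.

    later_admissions: iterable of (admit_date, is_elective) pairs (hypothetical format).
    """
    return any(0 <= (admit - discharge).days <= 30 and not elective
               for admit, elective in later_admissions)
```

Admissions dated before the index discharge, including the index stay itself, are ignored because their day difference is negative.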

Predictor Variables for the Pneumonia-Specific Readmission Models

The selection of candidate predictors was informed by our validated multi-condition risk-prediction models using EHR data available within 24 hours of admission (‘first-day’ multi-condition EHR model) or during the entire hospitalization (‘full-stay’ multi-condition EHR model).18,19 For the pneumonia-specific models, we included all variables in our published multi-condition models as candidate predictors, including sociodemographics, prior utilization, Charlson Comorbidity Index, select laboratory and vital sign abnormalities, length of stay, hospital complications (eg, venous thromboembolism), vital sign instabilities, and disposition status (see Supplemental Table 1 for the complete list of variables). We also considered additional pneumonia-specific candidate predictors that were: (1) available in the EHR of all participating hospitals; (2) routinely collected or available at the time of admission or discharge; and (3) plausible predictors of adverse outcomes based on literature and clinical expertise. These included select comorbidities (eg, psychiatric conditions, chronic lung disease, history of pneumonia),10,11,21,22 the pneumonia severity index (PSI),16,23,24 intensive care unit stay, and receipt of invasive or noninvasive ventilation. We used a modified PSI score because certain data elements were unavailable. The modified PSI (henceforth referred to as PSI) did not include nursing home residence and included diagnostic codes as proxies for the presence of pleural effusion (ICD-9-CM codes 510, 511.1, and 511.9) and altered mental status (ICD-9-CM codes 780.0X, 780.97, 293.0, 293.1, and 348.3X).
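To make the modification concrete, here is a minimal sketch of a modified PSI calculator. It uses the standard PSI point weights from the original derivation by Fine and colleagues (age, comorbidities, examination and laboratory findings), omits nursing home residence, and replaces altered mental status and pleural effusion with the ICD-9-CM code proxies listed above. Field names are hypothetical, only PaO2 (not SaO2) is checked for oxygenation, and a missing value scores 0 points, consistent with the assumption that missing predictors are normal.

```python
# Hypothetical field names; an illustration, not the study's data dictionary.
AMS_PREFIXES = ("780.0", "780.97", "293.0", "293.1", "348.3")  # 780.0X, 348.3X, ...
EFFUSION_PREFIXES = ("510.", "511.1", "511.9")                  # 510.x, 511.1, 511.9

COMORBIDITY_POINTS = {"neoplastic": 30, "liver": 20, "chf": 10,
                      "cerebrovascular": 10, "renal": 10}

# (field, abnormal-if predicate, points); a missing field is treated as normal.
FINDING_POINTS = [
    ("resp_rate", lambda v: v >= 30, 20),         # breaths/min
    ("sbp", lambda v: v < 90, 20),                # mm Hg
    ("temp_c", lambda v: v < 35 or v >= 40, 15),  # degrees Celsius
    ("pulse", lambda v: v >= 125, 10),            # beats/min
    ("ph", lambda v: v < 7.35, 30),               # arterial pH
    ("bun", lambda v: v >= 30, 20),               # mg/dL
    ("sodium", lambda v: v < 130, 20),            # mmol/L
    ("glucose", lambda v: v >= 250, 10),          # mg/dL
    ("hematocrit", lambda v: v < 30, 10),         # percent
    ("pao2", lambda v: v < 60, 10),               # mm Hg
]


def has_code(codes, prefixes) -> bool:
    return any(c.startswith(p) for c in codes for p in prefixes)


def modified_psi(pt: dict) -> int:
    # Age in years, minus 10 for women.
    score = (pt["age"] - 10) if pt.get("female") else pt["age"]
    score += sum(COMORBIDITY_POINTS[c] for c in pt.get("comorbidities", ()))
    codes = pt.get("dx_codes", ())
    if has_code(codes, AMS_PREFIXES):
        score += 20  # altered mental status, proxied by diagnosis codes
    if has_code(codes, EFFUSION_PREFIXES):
        score += 10  # pleural effusion, proxied by diagnosis codes
    for field, is_abnormal, points in FINDING_POINTS:
        value = pt.get(field)
        if value is not None and is_abnormal(value):
            score += points
    # Nursing home residence (+10 in the original PSI) is deliberately omitted.
    return score
```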

Statistical Analysis

Model Derivation. Candidate predictor variables were classified as available in the EHR within 24 hours of admission and/or at the time of discharge. For example, socioeconomic factors could be ascertained within the first day of hospitalization, whereas length of stay would not be available until the day of discharge. Predictors with missing values were assumed to be normal (less than 1% missing for each variable). Univariate relationships between readmission and each candidate predictor were assessed in the overall cohort using a pre-specified significance threshold of P ≤ 0.10. Significant variables were entered in the respective first-day and full-stay pneumonia-specific multivariable logistic regression models using stepwise-backward selection with a pre-specified significance threshold of P ≤ 0.05. In sensitivity analyses, we alternately derived our models using stepwise-forward selection, as well as stepwise-backward selection minimizing the Bayesian information criterion and Akaike information criterion separately. These alternate modeling strategies selected the same predictors as our final models.
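The two-stage selection described above (univariate screen at P ≤ 0.10, then backward elimination at P ≤ 0.05) can be sketched as follows. This is an illustrative Python outline, not the Stata procedure the authors ran: `fit_pvalues` is a hypothetical callable that refits the multivariable logistic regression on a given variable list and returns each term's P value, so any logistic regression routine could be plugged in.

```python
from collections.abc import Callable, Sequence


def univariate_screen(candidates: Sequence[str],
                      univariate_p: Callable[[str], float],
                      threshold: float = 0.10) -> list[str]:
    """Keep candidates whose univariate association with readmission has P <= threshold."""
    return [v for v in candidates if univariate_p(v) <= threshold]


def backward_eliminate(variables: Sequence[str],
                       fit_pvalues: Callable[[list[str]], dict[str, float]],
                       threshold: float = 0.05) -> list[str]:
    """Backward stepwise selection: refit, then drop the least significant term while P > threshold."""
    current = list(variables)
    while current:
        pvals = fit_pvalues(current)              # refit the multivariable model each step
        worst = max(current, key=pvals.__getitem__)
        if pvals[worst] <= threshold:             # every remaining term meets the threshold
            break
        current.remove(worst)
    return current
```

Refitting at every step matters because dropping one predictor can change the P values of the predictors that remain.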

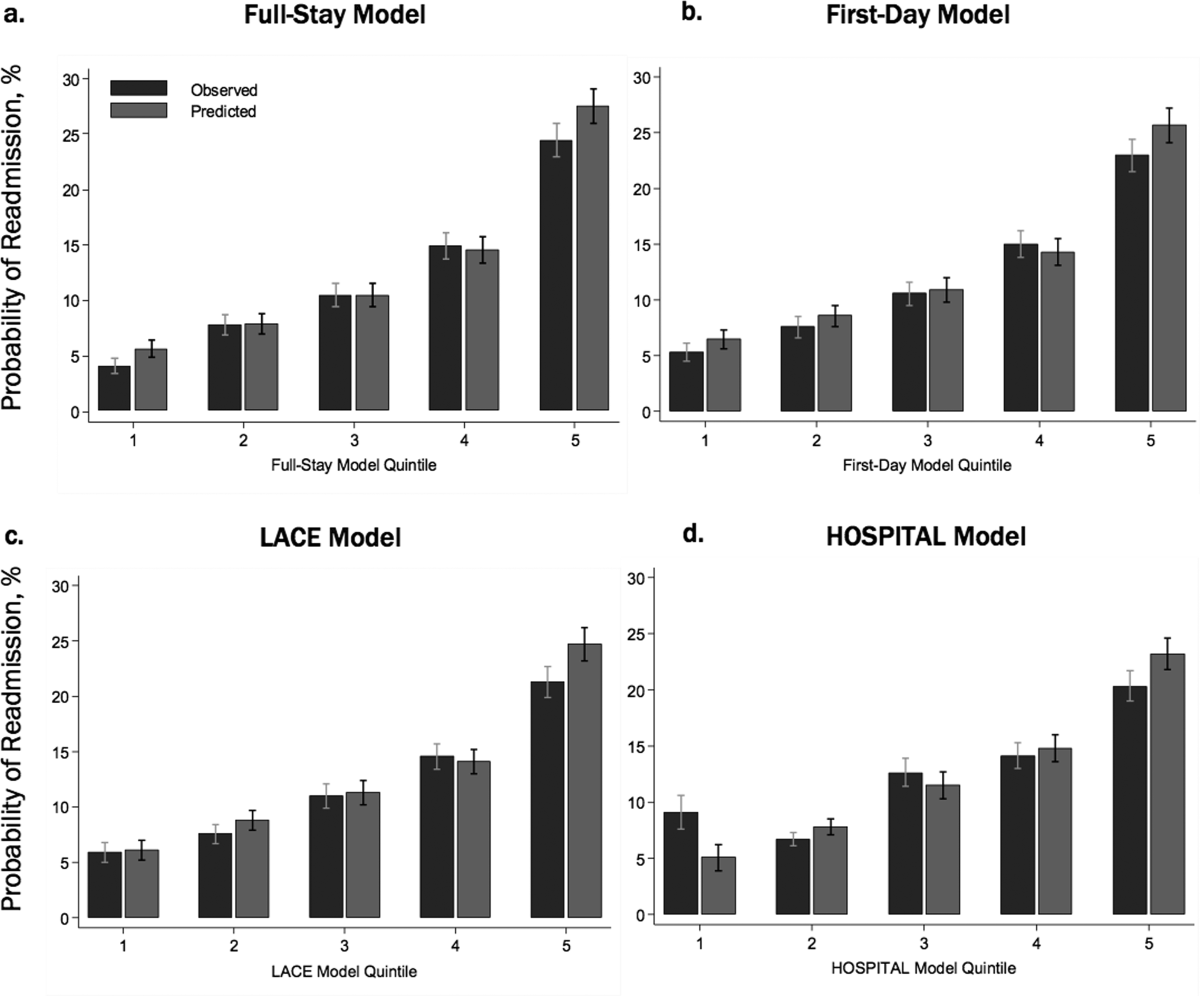

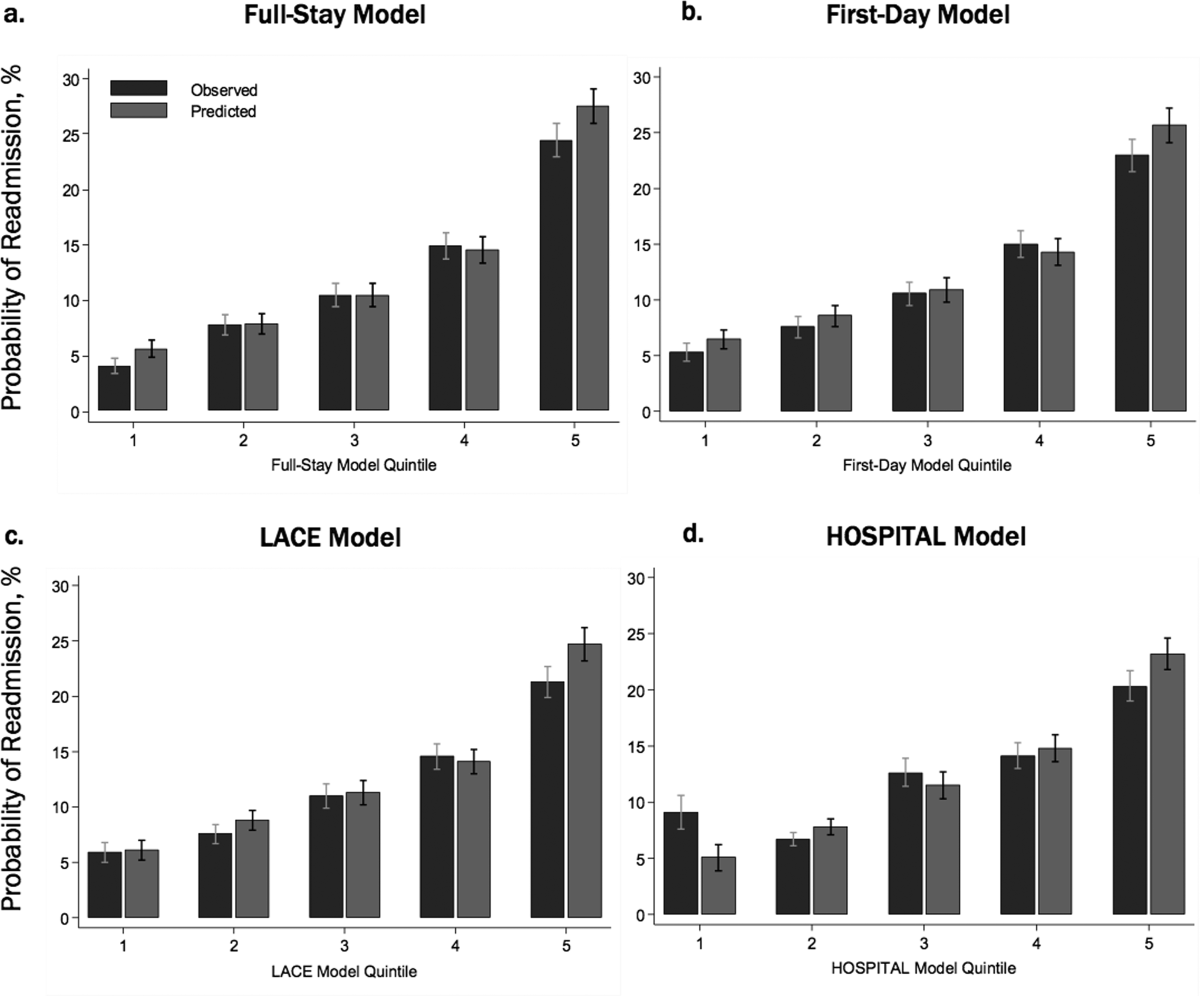

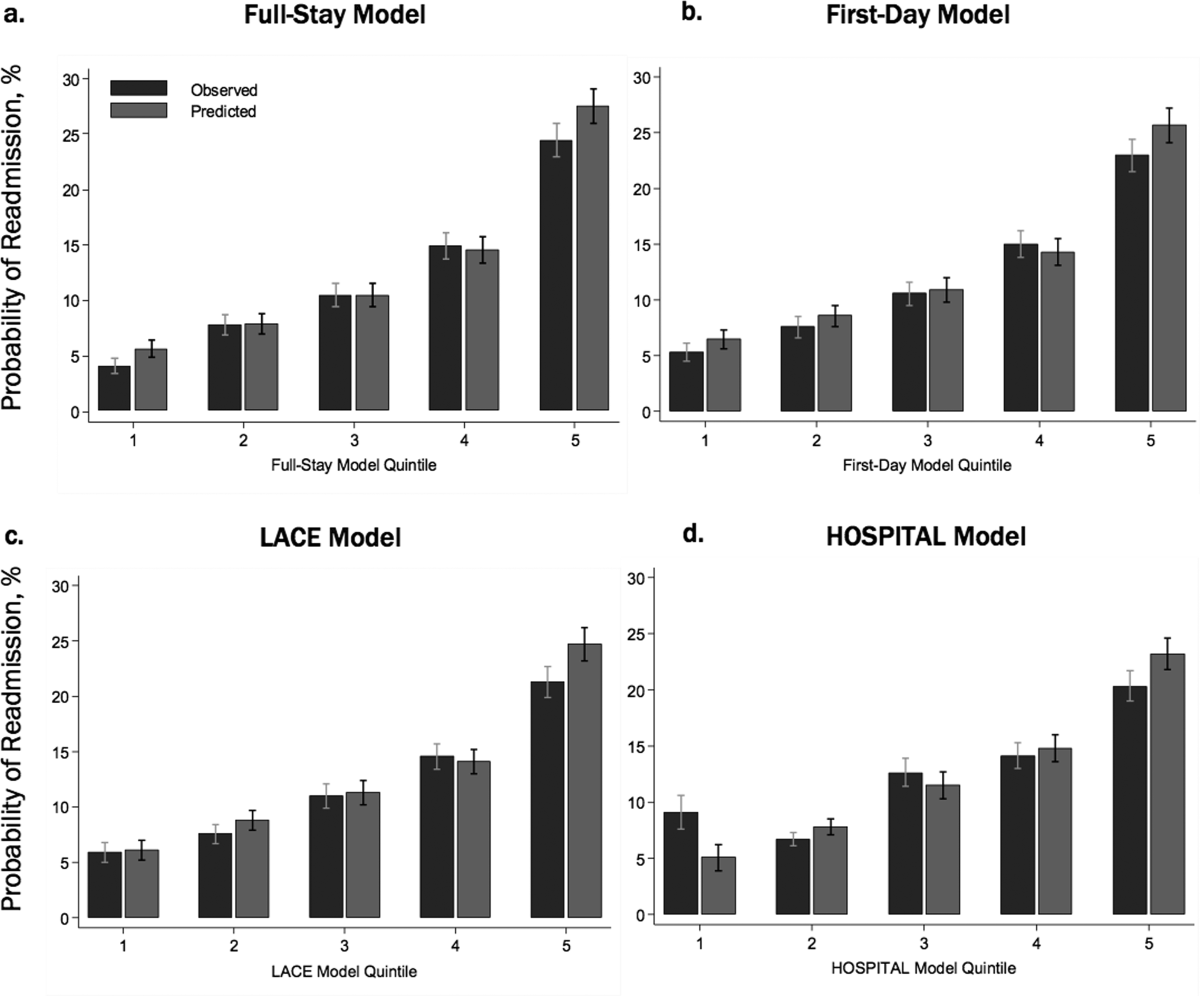

Model Validation. Model validation was performed using 5-fold cross-validation, with the overall cohort randomly divided into 5 equal-size subsets.25 For each cycle, 4 subsets were used for training to estimate model coefficients, and the fifth subset was used for validation. This cycle was repeated 5 times, with each randomly divided subset used once as the validation set. We repeated this entire process 50 times and averaged the C statistic estimates to derive an optimism-corrected C statistic. Model calibration was assessed qualitatively by comparing predicted to observed probabilities of readmission by quintiles of predicted risk, and with the Hosmer-Lemeshow goodness-of-fit test.
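A minimal Python sketch of the repeated cross-validation loop, assuming a hypothetical `fit(train_rows, train_y)` that returns a risk-prediction function. The C statistic is computed by direct pairwise comparison (equivalent to the area under the ROC curve), and the per-fold estimates are averaged across all folds and repetitions; whether the study averaged per fold or pooled predictions within each repetition is an assumption here.

```python
import random
from statistics import mean


def c_statistic(y, p):
    """C statistic: share of readmitted/non-readmitted pairs ranked correctly; ties count 1/2."""
    events = [pi for yi, pi in zip(y, p) if yi == 1]
    nonevents = [pi for yi, pi in zip(y, p) if yi == 0]
    if not events or not nonevents:
        raise ValueError("need at least one readmitted and one non-readmitted patient")
    concordant = sum(1.0 if e > n else 0.5 if e == n else 0.0
                     for e in events for n in nonevents)
    return concordant / (len(events) * len(nonevents))


def repeated_kfold_c(rows, y, fit, k=5, repeats=50, seed=0):
    """Average held-out C statistic over `repeats` random k-fold splits.

    fit(train_rows, train_y) must return predict(row) -> risk; it is a
    hypothetical callable standing in for the logistic regression fit.
    """
    rng = random.Random(seed)
    idx = list(range(len(rows)))
    scores = []
    for _ in range(repeats):
        rng.shuffle(idx)
        folds = [idx[f::k] for f in range(k)]  # k near-equal-size subsets
        for test in folds:
            held_out = set(test)
            train = [i for i in idx if i not in held_out]
            predict = fit([rows[i] for i in train], [y[i] for i in train])
            scores.append(c_statistic([y[i] for i in test],
                                      [predict(rows[i]) for i in test]))
    return mean(scores)
```

Because coefficients are re-estimated without the held-out fold, each fold's C statistic reflects performance on data the model did not see, which is what makes the averaged estimate less optimistic than the apparent (full-cohort) C statistic.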

Comparison to Other Models. The main comparisons of the first-day and full-stay pneumonia-specific EHR model performance were to each other and the corresponding multi-condition EHR model.18,19 The multi-condition EHR models were separately derived and validated within the larger parent cohort from which this study cohort was derived, and outperformed the CMS all-cause model, the HOSPITAL model, and the LACE index.19 To further triangulate our findings, given the lack of other rigorously validated pneumonia-specific risk-prediction models for readmission,14 we compared the pneumonia-specific EHR models to the CMS pneumonia model derived from administrative claims data,10 and 2 commonly used risk-prediction scores for short-term mortality among patients with community-acquired pneumonia, the PSI and CURB-65 scores.16 Although derived and validated using patient-level data, the CMS model was developed to benchmark hospitals according to hospital-level readmission rates.10 The CURB-65 score in this study was also modified to use the same altered mental status diagnostic codes as the modified PSI as a proxy for “confusion.” Both the PSI and CURB-65 scores were calculated using the most abnormal values within the first 24 hours of admission. The ‘updated’ PSI and the ‘updated’ CURB-65 were calculated using the most abnormal values within 24 hours prior to discharge, or the last known observation prior to discharge if no results were recorded within this time period. A complete list of variables for each of the comparison models is shown in Supplemental Table 1.
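The ‘updated’ scores depend on a simple rule for choosing which observation to score. A minimal Python sketch of that rule and of CURB-65 follows; function and argument names are hypothetical, `severity` says which direction is abnormal for a given measure (eg, higher respiratory rate, lower systolic blood pressure), and urea > 7 mmol/L is expressed as BUN > 19 mg/dL, a commonly used US-unit conversion. The `confusion` flag would come from the same altered mental status code proxy used for the modified PSI.

```python
from datetime import timedelta


def value_for_updated_score(obs, discharge, severity, hours=24):
    """Pick the value used for an 'updated' score.

    obs: list of (timestamp, value). Returns the most abnormal value (highest
    severity(value)) recorded in the `hours` before discharge; if none, the last
    observation before discharge; if none at all, None (scored as normal).
    """
    prior = [(t, v) for t, v in obs if t <= discharge]
    if not prior:
        return None
    start = discharge - timedelta(hours=hours)
    window = [v for t, v in prior if t >= start]
    if window:
        return max(window, key=severity)
    return max(prior, key=lambda tv: tv[0])[1]  # last known observation


def curb65(confusion, bun_mg_dl, resp_rate, sbp, dbp, age):
    """CURB-65: 1 point per criterion; None (missing) counts as normal."""
    points = [
        bool(confusion),                                  # here: AMS ICD-9-CM proxy
        bun_mg_dl is not None and bun_mg_dl > 19,         # urea > 7 mmol/L
        resp_rate is not None and resp_rate >= 30,        # breaths/min
        (sbp is not None and sbp < 90) or (dbp is not None and dbp <= 60),
        age >= 65,
    ]
    return sum(points)
```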

We assessed model performance by calculating the C statistic, integrated discrimination index, and net reclassification index (NRI), comparing each of the other models with our pneumonia-specific models. The integrated discrimination index is the difference between 2 models in the gap in mean predicted probability of readmission between patients who were and were not actually readmitted; more positive values suggest improved performance relative to a reference model.26 The NRI is defined as the sum of the net proportions of correctly reclassified persons with and without the event of interest.27 Here, we calculated a category-based NRI to evaluate the performance of pneumonia-specific models in correctly classifying individuals with and without readmissions into the 2 highest readmission risk quintiles vs the lowest 3 risk quintiles compared to other models.27 This pre-specified cutoff is relevant for hospitals interested in identifying the highest risk individuals for targeted intervention.7 Finally, we assessed calibration of comparator models in our cohort by comparing predicted probability to observed probability of readmission by quintiles of risk for each model. We conducted all analyses using Stata 12.1 (StataCorp, College Station, Texas). This study was approved by the University of Texas Southwestern Medical Center Institutional Review Board.
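The comparison metrics can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Stata code used in the study: `p_new` is the model being evaluated and `p_old` the reference, the ‘top 2 quintiles’ are taken as the 40% of patients with the highest predicted risk under each model separately (ties broken by input order), and calibration groups are quintiles of predicted risk. All function names are hypothetical.

```python
from statistics import mean


def _slope(y, p):
    """Mean predicted risk among readmitted minus among non-readmitted patients."""
    return (mean(pi for yi, pi in zip(y, p) if yi == 1)
            - mean(pi for yi, pi in zip(y, p) if yi == 0))


def idi(y, p_new, p_old):
    """Integrated discrimination index of p_new relative to the reference p_old."""
    return _slope(y, p_new) - _slope(y, p_old)


def top_two_quintiles(p):
    """Flag the 40% of patients with the highest predicted risk (ties broken by input order)."""
    n_high = round(len(p) * 2 / 5)
    order = sorted(range(len(p)), key=lambda i: p[i], reverse=True)
    high = set(order[:n_high])
    return [i in high for i in range(len(p))]


def category_nri(y, p_new, p_old):
    """Two-category NRI (top 2 quintiles vs bottom 3) of p_new relative to p_old."""
    new_hi, old_hi = top_two_quintiles(p_new), top_two_quintiles(p_old)
    up = [n and not o for n, o in zip(new_hi, old_hi)]    # moved into high risk
    down = [o and not n for n, o in zip(new_hi, old_hi)]  # moved out of high risk
    events = [i for i, yi in enumerate(y) if yi == 1]
    nonevents = [i for i, yi in enumerate(y) if yi == 0]
    nri_events = (sum(up[i] for i in events) - sum(down[i] for i in events)) / len(events)
    nri_nonevents = (sum(down[i] for i in nonevents)
                     - sum(up[i] for i in nonevents)) / len(nonevents)
    return nri_events + nri_nonevents


def calibration_by_quintile(y, p):
    """(mean predicted risk, observed readmission rate) for each quintile of predicted risk."""
    order = sorted(range(len(p)), key=lambda i: p[i])
    n = len(p)
    groups = [order[g * n // 5:(g + 1) * n // 5] for g in range(5)]
    return [(mean(p[i] for i in g), mean(y[i] for i in g)) for g in groups]
```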

RESULTS

Of 1463 index hospitalizations (Supplemental Figure 1), the 30-day all-cause readmission rate was 13.6%. Individuals with a 30-day readmission had markedly different sociodemographic and clinical characteristics compared to those not readmitted (Table 1; see Supplemental Table 2 for additional clinical characteristics).

Derivation, Validation, and Performance of the Pneumonia-Specific Readmission Risk-Prediction Models

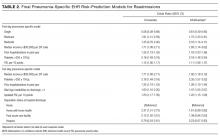

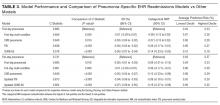

The final first-day pneumonia-specific EHR model included 7 variables, including sociodemographic characteristics, prior hospitalizations, thrombocytosis, and PSI (Table 2). The first-day pneumonia-specific model had adequate discrimination (C statistic, 0.695; optimism-corrected C statistic, 0.675; 95% confidence interval [CI], 0.667-0.685; Table 3). It also effectively stratified individuals across a broad range of risk (average predicted risk by decile ranged from 4% to 33%; Table 3) and was well calibrated (Supplemental Table 3).

The final full-stay pneumonia-specific EHR readmission model included 8 predictors, including 3 variables from the first-day model (median income, thrombocytosis, and prior hospitalizations; Table 2). The full-stay pneumonia-specific EHR model also included vital sign instabilities on discharge, updated PSI, and disposition status (ie, being discharged with home health or to a post-acute care facility was associated with greater odds of readmission, and hospice with lower odds). The full-stay pneumonia-specific EHR model had good discrimination (C statistic, 0.731; optimism-corrected C statistic, 0.714; 95% CI, 0.706-0.720), stratified individuals across a broad range of risk (average predicted risk by decile ranged from 3% to 37%; Table 3), and was also well calibrated (Supplemental Table 3).

First-Day Pneumonia-Specific EHR Model vs First-Day Multi-Condition EHR Model

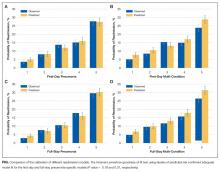

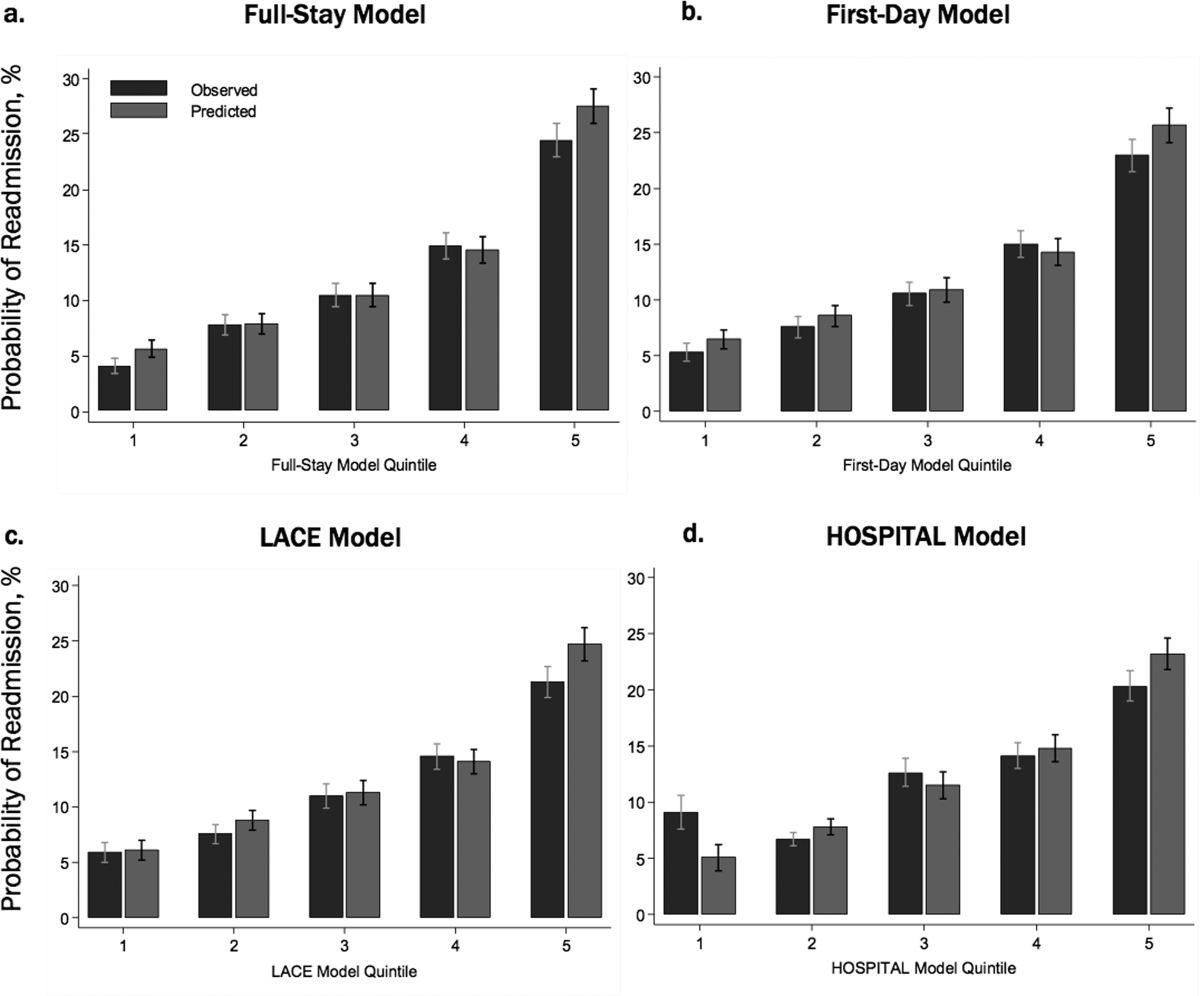

The first-day pneumonia-specific EHR model outperformed the first-day multi-condition EHR model with better discrimination (P = 0.029) and more correctly classified individuals in the top 2 highest risk quintiles vs the bottom 3 risk quintiles (Table 3, Supplemental Table 4, and Supplemental Figure 2A). With respect to calibration, the first-day multi-condition EHR model overestimated risk among the highest quintile risk group compared to the first-day pneumonia-specific EHR model (Figure 1A, 1B).

Full-Stay Pneumonia-Specific EHR Model vs Other Models

The full-stay pneumonia-specific EHR model outperformed the corresponding full-stay multi-condition EHR model, as well as the first-day pneumonia-specific EHR model, the CMS pneumonia model, the updated PSI, and the updated CURB-65 (Table 3, Supplemental Table 5, Supplemental Table 6, and Supplemental Figures 2B and 2C). Compared to the full-stay multi-condition and first-day pneumonia-specific EHR models, the full-stay pneumonia-specific EHR model had better discrimination, better reclassification (NRI, 0.09 and 0.08, respectively), and was able to stratify individuals across a broader range of readmission risk (Table 3). It also had better calibration in the highest quintile risk group compared to the full-stay multi-condition EHR model (Figure 1C and 1D).

Updated vs First-Day Modified PSI and CURB-65 Scores

The updated PSI was more strongly predictive of readmission than the PSI calculated on the day of admission (Wald test, 9.83; P = 0.002). Each 10-point increase in the updated PSI was associated with 22% higher odds of readmission vs 11% higher odds for the PSI calculated upon admission (Table 2). The improved predictive ability of the updated PSI and CURB-65 scores was also reflected in their superior discrimination and calibration relative to the respective first-day pneumonia severity of illness scores (Table 3).
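As a worked check on how the per-10-point odds ratios relate to the underlying logistic regression coefficients, assuming each PSI was entered as a continuous linear term (a sketch derived from the odds ratios quoted above, not coefficients reported in Table 2):

```latex
\begin{aligned}
\mathrm{OR}_{10} &= e^{10\beta}
  \quad\Longrightarrow\quad \beta = \frac{\ln \mathrm{OR}_{10}}{10} \\
\beta_{\text{updated}} &= \frac{\ln 1.22}{10} \approx 0.0199,
  \qquad
\beta_{\text{admission}} = \frac{\ln 1.11}{10} \approx 0.0104 \\
\mathrm{OR}_{40,\ \text{updated}} &= e^{40\beta_{\text{updated}}} = 1.22^{4} \approx 2.22
\end{aligned}
```

Under the same linear-term assumption, a 40-point higher updated PSI would correspond to roughly 2.2-fold higher odds of readmission.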

DISCUSSION

Using routinely available EHR data from 6 diverse hospitals, we developed 2 pneumonia-specific readmission risk-prediction models that aimed to allow hospitals to identify patients hospitalized with pneumonia at high risk for readmission. Overall, we found that a pneumonia-specific model using EHR data from the entire hospitalization outperformed all other models—including the first-day pneumonia-specific model using data present only on admission, our own multi-condition EHR models, and the CMS pneumonia model based on administrative claims data—in all aspects of model performance (discrimination, calibration, and reclassification). We found that socioeconomic status, prior hospitalizations, thrombocytosis, and measures of clinical severity and stability were important predictors of 30-day all-cause readmissions among patients hospitalized with pneumonia. Additionally, an updated discharge PSI score was a stronger independent predictor of readmissions compared to the PSI score calculated upon admission; and inclusion of the updated PSI in our full-stay pneumonia model led to improved prediction of 30-day readmissions.

The marked improvement in performance of the full-stay pneumonia-specific EHR model compared to the first-day pneumonia-specific model suggests that clinical stability and trajectory during hospitalization (as modeled through disposition status, updated PSI, and vital sign instabilities at discharge) are important predictors of 30-day readmission among patients hospitalized for pneumonia, which was not the case for our EHR-based multi-condition models.19 With the inclusion of these measures, the full-stay pneumonia-specific model correctly reclassified an additional 8% of patients according to their true risk compared to the first-day pneumonia-specific model. One implication of these findings is that hospitals interested in targeting their highest risk individuals with pneumonia for transitional care interventions could do so using the first-day pneumonia-specific EHR model and could refine their targeted strategy at the time of discharge by using the full-stay pneumonia model. This staged risk-prediction strategy would enable hospitals to initiate transitional care interventions for high-risk individuals in the inpatient setting (eg, patient education).7 Then, hospitals could enroll both persistent and newly identified high-risk individuals for outpatient interventions (eg, follow-up telephone call) in the immediate post-discharge period, an interval characterized by heightened vulnerability for adverse events,28 based on patients’ illness severity and stability at discharge. This approach can be implemented by hospitals by building these risk-prediction models directly into the EHR, or by extracting EHR data in near real time as our group has done successfully for heart failure.7
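The staged strategy can be summarized as set operations over patient identifiers. A minimal Python sketch, assuming hypothetical sets of patients flagged as high risk by the first-day model (within 24 hours of admission) and by the full-stay model (at discharge); the function name and dictionary keys are illustrative only.

```python
def staged_targets(first_day_high: set, discharge_high: set) -> dict:
    """Sketch of two-stage targeting from sets of flagged patient IDs (hypothetical inputs)."""
    persistent = first_day_high & discharge_high        # high risk at both stages
    newly_identified = discharge_high - first_day_high  # high risk only at discharge
    return {
        # flagged within 24 h of admission: start inpatient components (eg, education)
        "inpatient": set(first_day_high),
        "persistent": persistent,
        "newly_identified": newly_identified,
        # flagged on day 1 but not at discharge: no post-discharge enrollment here
        "stepped_down": first_day_high - discharge_high,
        # post-discharge interventions (eg, follow-up call): persistent + newly identified
        "outpatient": persistent | newly_identified,
    }
```

In this sketch, patients who are no longer high risk at discharge receive only the inpatient components; whether to also follow them after discharge would be a local design choice.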

Another key implication of our study is that, for pneumonia, a disease-specific modeling approach has better predictive ability than using a multi-condition model. Compared to multi-condition models, the first-day and full-stay pneumonia-specific EHR models correctly reclassified an additional 6% and 9% of patients, respectively. Thus, hospitals interested in identifying the highest risk patients with pneumonia for targeted interventions should do so using the disease-specific models, if the costs and resources of doing so are within reach of the healthcare system.

An additional novel finding of our study is the added value of an updated PSI for predicting adverse events. Prior studies of pneumonia severity of illness scores have calculated the PSI and CURB-65 scores using only data present on admission.16,24 While our study also confirms that the PSI calculated upon admission is a significant predictor of readmission,23,29 this study extends this work by showing that an updated PSI score calculated at the time of discharge is an even stronger predictor for readmission, and its inclusion in the model significantly improves risk stratification and prognostication.

Our study has several strengths. First, we used data from a common EHR system, thus potentially allowing for the implementation of the pneumonia-specific models in real time across a number of hospitals. The use of routinely collected data for risk-prediction modeling makes this approach scalable and sustainable, because it obviates the need for burdensome data collection and entry. Second, to our knowledge, this is the first study to measure the additive influence of illness severity and stability at discharge on the readmission risk among patients hospitalized with pneumonia. Third, our study population was derived from 6 hospitals diverse in payer status, age, race/ethnicity, and socioeconomic status. Fourth, our models are less likely to be overfit to the idiosyncrasies of our data given that several predictors included in our final pneumonia-specific models have been associated with readmission in this population, including marital status,13,30 income,11,31 prior hospitalizations,11,13 thrombocytosis,32-34 and vital sign instabilities on discharge.17 Lastly, the discrimination of the CMS pneumonia model in our cohort (C statistic, 0.64) closely matched the discrimination observed in 4 independent cohorts (C statistic, 0.63), suggesting adequate generalizability of our study setting and population.10,12

Our results should be interpreted in the context of several limitations. First, generalizability to other regions beyond north Texas is unknown. Second, although we included a diverse cohort of safety net, community, teaching, and nonteaching hospitals, the pneumonia-specific models were not externally validated in a separate cohort, which may lead to more optimistic estimates of model performance. Third, PSI and CURB-65 scores were modified to use diagnostic codes for altered mental status and pleural effusion, and omitted nursing home residence. Thus, the independent associations for the PSI and CURB-65 scores and their predictive ability are likely attenuated. Fourth, we were unable to include data on medications (antibiotics and steroid use) and outpatient visits, which may influence readmission risk.2,9,13,35-40 Fifth, we included only the first pneumonia hospitalization per patient in this study. Had we included multiple hospitalizations per patient, we anticipate better model performance for the 2 pneumonia-specific EHR models since prior hospitalization was a robust predictor of readmission.

In conclusion, the full-stay pneumonia-specific EHR readmission risk-prediction model outperformed the first-day pneumonia-specific model, multi-condition EHR models, and the CMS pneumonia model. This suggests that measures of clinical severity and stability at the time of discharge are important predictors for identifying patients at highest risk for readmission, and that EHR data routinely collected for clinical practice can be used to accurately predict risk of readmission among patients hospitalized for pneumonia.

Acknowledgments

The authors would like to acknowledge Ruben Amarasingham, MD, MBA, president and chief executive officer of Parkland Center for Clinical Innovation, and Ferdinand Velasco, MD, chief health information officer at Texas Health Resources for their assistance in assembling the 6-hospital cohort used in this study.

Disclosures

This work was supported by the Agency for Healthcare Research and Quality-funded UT Southwestern Center for Patient-Centered Outcomes Research (R24 HS022418-01); the Commonwealth Foundation (#20100323); the UT Southwestern KL2 Scholars Program supported by the National Institutes of Health (KL2 TR001103 to ANM and OKN); and the National Center for Advancing Translational Sciences at the National Institutes of Health (U54 RFA-TR-12-006 to E.A.H.). The study sponsors had no role in design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript. The authors have no financial conflicts of interest to disclose.

1. Centers for Disease Control and Prevention. Pneumonia. http://www.cdc.gov/nchs/fastats/pneumonia.htm. Accessed January 26, 2016.

33. Prina E, Ferrer M, Ranzani OT, et al. Thrombocytosis is a marker of poor outcome in community-acquired pneumonia. Chest. 2013;143(3):767-775.

34. Violi F, Cangemi R, Calvieri C. Pneumonia, thrombosis and vascular disease. J Thromb Haemost. 2014;12(9):1391-1400.

35. Weinberger M, Oddone EZ, Henderson WG. Does increased access to primary care reduce hospital readmissions? Veterans Affairs Cooperative Study Group on Primary Care and Hospital Readmission. N Engl J Med. 1996;334(22):1441-1447.

36. Field TS, Ogarek J, Garber L, Reed G, Gurwitz JH. Association of early post-discharge follow-up by a primary care physician and 30-day rehospitalization among older adults. J Gen Intern Med. 2015;30(5):565-571.

37. Spatz ES, Sheth SD, Gosch KL, et al. Usual source of care and outcomes following acute myocardial infarction. J Gen Intern Med. 2014;29(6):862-869.

38. Brooke BS, Stone DH, Cronenwett JL, et al. Early primary care provider follow-up and readmission after high-risk surgery. JAMA Surg. 2014;149(8):821-828.

39. Adamuz J, Viasus D, Campreciós-Rodriguez P, et al. A prospective cohort study of healthcare visits and rehospitalizations after discharge of patients with community-acquired pneumonia. Respirology. 2011;16(7):1119-1126.

40. Shorr AF, Zilberberg MD, Reichley R, et al. Readmission following hospitalization for pneumonia: the impact of pneumonia type and its implication for hospitals. Clin Infect Dis. 2013;57(3):362-367.

Pneumonia is a leading cause of hospitalizations in the U.S., accounting for more than 1.1 million discharges annually.1 Pneumonia is frequently complicated by hospital readmission, which is costly and potentially avoidable.2,3 Due to financial penalties imposed on hospitals for higher than expected 30-day readmission rates, there is increasing attention to implementing interventions to reduce readmissions in this population.4,5 However, because these programs are resource-intensive, interventions are thought to be most cost-effective if they are targeted to high-risk individuals who are most likely to benefit.6-8

Current pneumonia-specific readmission risk-prediction models that could enable identification of high-risk patients suffer from poor predictive ability, greatly limiting their use, and most were validated among older adults or by using data from single academic medical centers, limiting their generalizability.9-14 A potential reason for poor predictive accuracy is the omission of known robust clinical predictors of pneumonia-related outcomes, including pneumonia severity of illness and stability on discharge.15-17 Approaches using electronic health record (EHR) data, which include this clinically granular data, could enable hospitals to more accurately and pragmatically identify high-risk patients during the index hospitalization and enable interventions to be initiated prior to discharge.

An alternative strategy to identifying high-risk patients for readmission is to use a multi-condition risk-prediction model. Developing and implementing models for every condition may be time-consuming and costly. We have derived and validated 2 multi-condition risk-prediction models using EHR data—1 using data from the first day of hospital admission (‘first-day’ model), and the second incorporating data from the entire hospitalization (‘full-stay’ model) to reflect in-hospital complications and clinical stability at discharge.18,19 However, it is unknown if a multi-condition model for pneumonia would perform as well as a disease-specific model.

This study aimed to develop 2 EHR-based pneumonia-specific readmission risk-prediction models using data routinely collected in clinical practice—a ‘first-day’ and a ‘full-stay’ model—and compare the performance of each model to: 1) one another; 2) the corresponding multi-condition EHR model; and 3) to other potentially useful models in predicting pneumonia readmissions (the Centers for Medicare and Medicaid Services [CMS] pneumonia model, and 2 commonly used pneumonia severity of illness scores validated for predicting mortality). We hypothesized that the pneumonia-specific EHR models would outperform other models; and the full-stay pneumonia-specific model would outperform the first-day pneumonia-specific model.

METHODS

Study Design, Population, and Data Sources

We conducted an observational study using EHR data collected from 6 hospitals (including safety net, community, teaching, and nonteaching hospitals) in north Texas between November 2009 and October 2010, All hospitals used the Epic EHR (Epic Systems Corporation, Verona, WI). Details of this cohort have been published.18,19

We included consecutive hospitalizations among adults 18 years and older discharged from any medicine service with principal discharge diagnoses of pneumonia (ICD-9-CM codes 480-483, 485, 486-487), sepsis (ICD-9-CM codes 038, 995.91, 995.92, 785.52), or respiratory failure (ICD-9-CM codes 518.81, 518.82, 518.84, 799.1) when the latter 2 were also accompanied by a secondary diagnosis of pneumonia.20 For individuals with multiple hospitalizations during the study period, we included only the first hospitalization. We excluded individuals who died during the index hospitalization or within 30 days of discharge, were transferred to another acute care facility, or left against medical advice.

Outcomes

The primary outcome was all-cause 30-day readmission, defined as a nonelective hospitalization within 30 days of discharge to any of 75 acute care hospitals within a 100-mile radius of Dallas, ascertained from an all-payer regional hospitalization database.

Predictor Variables for the Pneumonia-Specific Readmission Models

The selection of candidate predictors was informed by our validated multi-condition risk-prediction models using EHR data available within 24 hours of admission (‘first-day’ multi-condition EHR model) or during the entire hospitalization (‘full-stay’ multi-condition EHR model).18,19 For the pneumonia-specific models, we included all variables in our published multi-condition models as candidate predictors, including sociodemographics, prior utilization, Charlson Comorbidity Index, select laboratory and vital sign abnormalities, length of stay, hospital complications (eg, venous thromboembolism), vital sign instabilities, and disposition status (see Supplemental Table 1 for complete list of variables). We also assessed additional variables specific to pneumonia for inclusion that were: (1) available in the EHR of all participating hospitals; (2) routinely collected or available at the time of admission or discharge; and (3) plausible predictors of adverse outcomes based on literature and clinical expertise. These included select comorbidities (eg, psychiatric conditions, chronic lung disease, history of pneumonia),10,11,21,22 the pneumonia severity index (PSI),16,23,24 intensive care unit stay, and receipt of invasive or noninvasive ventilation. We used a modified PSI score because certain data elements were missing. The modified PSI (henceforth referred to as PSI) did not include nursing home residence and included diagnostic codes as proxies for the presence of pleural effusion (ICD-9-CM codes 510, 511.1, and 511.9) and altered mental status (ICD-9-CM codes 780.0X, 780.97, 293.0, 293.1, and 348.3X).

Statistical Analysis

Model Derivation. Candidate predictor variables were classified as available in the EHR within 24 hours of admission and/or at the time of discharge. For example, socioeconomic factors could be ascertained within the first day of hospitalization, whereas length of stay would not be available until the day of discharge. Predictors with missing values were assumed to be normal (less than 1% missing for each variable). Univariate relationships between readmission and each candidate predictor were assessed in the overall cohort using a pre-specified significance threshold of P ≤ 0.10. Significant variables were entered in the respective first-day and full-stay pneumonia-specific multivariable logistic regression models using stepwise-backward selection with a pre-specified significance threshold of P ≤ 0.05. In sensitivity analyses, we alternately derived our models using stepwise-forward selection, as well as stepwise-backward selection minimizing the Bayesian information criterion and Akaike information criterion separately. These alternate modeling strategies yielded identical predictors to our final models.

Model Validation. Model validation was performed using 5-fold cross-validation, with the overall cohort randomly divided into 5 equal-size subsets.25 For each cycle, 4 subsets were used for training to estimate model coefficients, and the fifth subset was used for validation. This cycle was repeated 5 times with each randomly-divided subset used once as the validation set. We repeated this entire process 50 times and averaged the C statistic estimates to derive an optimism-corrected C statistic. Model calibration was assessed qualitatively by comparing predicted to observed probabilities of readmission by quintiles of predicted risk, and with the Hosmer-Lemeshow goodness-of-fit test.

Comparison to Other Models. The main comparisons of the first-day and full-stay pneumonia-specific EHR models were to each other and to the corresponding multi-condition EHR model.18,19 The multi-condition EHR models were separately derived and validated within the larger parent cohort from which this study cohort was drawn, and outperformed the CMS all-cause model, the HOSPITAL model, and the LACE index.19 To further triangulate our findings, given the lack of other rigorously validated pneumonia-specific readmission risk-prediction models,14 we compared the pneumonia-specific EHR models to the CMS pneumonia model derived from administrative claims data,10 and to 2 commonly used risk-prediction scores for short-term mortality among patients with community-acquired pneumonia, the PSI and CURB-65 scores.16 Although derived and validated using patient-level data, the CMS model was developed to benchmark hospitals according to hospital-level readmission rates.10 The CURB-65 score in this study was also modified, using the same altered mental status diagnostic codes as the modified PSI as a proxy for “confusion.” Both the PSI and CURB-65 scores were calculated using the most abnormal values within the first 24 hours of admission. The ‘updated’ PSI and the ‘updated’ CURB-65 were calculated using the most abnormal values within the 24 hours prior to discharge, or the last known observation prior to discharge if no results were recorded within this period. The complete list of variables for each comparison model is shown in Supplemental Table 1.
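The rule for the ‘updated’ scores (most abnormal value in the 24 hours before discharge, otherwise the last known observation) and the CURB-65 tally can be sketched as below. The function names and the (timestamp, value) data layout are hypothetical. The CURB-65 criteria are the standard ones (confusion; urea > 7 mmol/L; respiratory rate ≥ 30; systolic blood pressure < 90 or diastolic ≤ 60 mm Hg; age ≥ 65), with confusion here standing in for the diagnosis-code proxy. The BUN > 19 mg/dL cutoff is an assumed, commonly used conversion of the urea threshold.

```python
from datetime import datetime, timedelta


def updated_value(observations, discharge_time, most_abnormal, window_hours=24):
    """Hypothetical 'updated' value rule for one measurement.

    observations: list of (timestamp, value) pairs.
    most_abnormal: max where high values are abnormal (eg, respiratory rate),
    min where low values are abnormal (eg, systolic blood pressure).
    """
    prior = [(t, v) for t, v in observations if t <= discharge_time]
    if not prior:
        return None
    window_start = discharge_time - timedelta(hours=window_hours)
    recent = [v for t, v in prior if t >= window_start]
    if recent:
        return most_abnormal(recent)
    # No result in the window: fall back to the last known observation.
    return max(prior, key=lambda tv: tv[0])[1]


def curb65(confusion, bun_mg_dl, resp_rate, sbp, dbp, age):
    """CURB-65: one point per criterion, range 0-5."""
    return (int(confusion)               # here: ICD-9-CM altered mental status proxy
            + int(bun_mg_dl > 19)        # assumed BUN equivalent of urea > 7 mmol/L
            + int(resp_rate >= 30)
            + int(sbp < 90 or dbp <= 60)
            + int(age >= 65))


# Example: respiratory rates recorded around a discharge at noon on Jan 5.
discharge = datetime(2010, 1, 5, 12, 0)
rr = [(datetime(2010, 1, 4, 8, 0), 32), (datetime(2010, 1, 4, 20, 0), 22),
      (datetime(2010, 1, 5, 6, 0), 26)]
updated_rr = updated_value(rr, discharge, max)  # only the last 2 values are in the window
```

The same `updated_value` call, with the appropriate `min` or `max`, would be applied to each vital sign and laboratory value before scoring.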

We assessed the performance of each comparison model relative to our pneumonia-specific models by calculating the C statistic, integrated discrimination index, and net reclassification index (NRI). The integrated discrimination index is the difference between 2 models in the discrimination slope, ie, the mean predicted probability of readmission among patients who were readmitted minus that among patients who were not; more positive values suggest improved performance relative to the reference model.26 The NRI is defined as the sum of the net proportions of correctly reclassified persons with and without the event of interest.27 Here, we calculated a category-based NRI to evaluate how well the pneumonia-specific models, compared to other models, correctly classified individuals with and without readmissions into the 2 highest readmission risk quintiles vs the lowest 3 risk quintiles.27 This pre-specified cutoff is relevant for hospitals interested in identifying the highest risk individuals for targeted intervention.7 Finally, we assessed the calibration of comparator models in our cohort by comparing predicted and observed probabilities of readmission by quintile of risk for each model. All analyses were conducted using Stata 12.1 (StataCorp, College Station, Texas). This study was approved by the University of Texas Southwestern Medical Center Institutional Review Board.
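Both comparison metrics reduce to simple arithmetic on two vectors of predicted risk. The sketch below is illustrative only. It assumes that quintiles are formed separately within each model's own predictions, that tied risks are ordered by their position in the data, and that "high risk" means ranking in the top 40% (quintiles 4 and 5). The names `idi`, `category_nri`, and `top_two_quintiles` are hypothetical.

```python
import numpy as np


def top_two_quintiles(p):
    """True for patients ranked in the top 40% of predicted risk.
    Ties are broken by original order (stable sort)."""
    ranks = np.argsort(np.argsort(p, kind="stable"))
    return ranks >= 0.6 * len(p)


def idi(y, p_new, p_ref):
    """Integrated discrimination index: difference in discrimination slopes."""
    def slope(p):
        # Mean predicted risk in readmitted minus non-readmitted patients.
        return p[y == 1].mean() - p[y == 0].mean()
    return slope(p_new) - slope(p_ref)


def category_nri(y, p_new, p_ref):
    """Two-category NRI: top 2 risk quintiles vs bottom 3."""
    high_new, high_ref = top_two_quintiles(p_new), top_two_quintiles(p_ref)
    up = high_new & ~high_ref     # moved into the high-risk group
    down = ~high_new & high_ref   # moved out of the high-risk group
    events, nonevents = y == 1, y == 0
    nri_events = up[events].mean() - down[events].mean()
    nri_nonevents = down[nonevents].mean() - up[nonevents].mean()
    return nri_events + nri_nonevents
```

With this definition, an NRI of 0.08 corresponds to a net 8 percentage points of correct reclassification, summed over readmitted and non-readmitted patients.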

RESULTS

Among the 1463 index hospitalizations (Supplemental Figure 1), the 30-day all-cause readmission rate was 13.6%. Patients readmitted within 30 days differed markedly in sociodemographic and clinical characteristics from those not readmitted (Table 1; see Supplemental Table 2 for additional clinical characteristics).

Derivation, Validation, and Performance of the Pneumonia-Specific Readmission Risk-Prediction Models

The final first-day pneumonia-specific EHR model included 7 variables spanning sociodemographic characteristics, prior hospitalizations, thrombocytosis, and PSI (Table 2). The first-day pneumonia-specific model had adequate discrimination (C statistic, 0.695; optimism-corrected C statistic, 0.675; 95% confidence interval [CI], 0.667-0.685; Table 3). It also stratified individuals across a broad range of risk (average predicted risk by decile ranged from 4% to 33%; Table 3) and was well calibrated (Supplemental Table 3).

The final full-stay pneumonia-specific EHR readmission model included 8 predictors, 3 of which were also in the first-day model (median income, thrombocytosis, and prior hospitalizations; Table 2). The full-stay model also included vital sign instabilities on discharge, updated PSI, and disposition status (ie, discharge with home health or to a post-acute care facility was associated with greater odds of readmission, and discharge to hospice with lower odds). The full-stay pneumonia-specific EHR model had good discrimination (C statistic, 0.731; optimism-corrected C statistic, 0.714; 95% CI, 0.706-0.720), stratified individuals across a broad range of risk (average predicted risk by decile ranged from 3% to 37%; Table 3), and was also well calibrated (Supplemental Table 3).

First-Day Pneumonia-Specific EHR Model vs First-Day Multi-Condition EHR Model

The first-day pneumonia-specific EHR model outperformed the first-day multi-condition EHR model, with better discrimination (P = 0.029) and more individuals correctly classified into the top 2 vs the bottom 3 risk quintiles (Table 3, Supplemental Table 4, and Supplemental Figure 2A). With respect to calibration, the first-day multi-condition EHR model overestimated risk in the highest risk quintile compared to the first-day pneumonia-specific EHR model (Figure 1A and 1B).
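The quintile-based calibration comparison, and the Hosmer-Lemeshow test named in the Methods, can be sketched as follows. This is a hedged NumPy illustration rather than the study's Stata output. The Methods do not state how many groups were used for the Hosmer-Lemeshow test, so deciles (the conventional choice) are assumed as the default. The closed-form chi-square tail used here is exact only for an even number of degrees of freedom, so the sketch rejects other group counts. Function names are hypothetical.

```python
import math

import numpy as np


def calibration_table(y, p, n_groups=5):
    """(mean predicted risk, observed readmission rate, n) per group of
    predicted risk, lowest-risk group first; quintiles by default."""
    groups = np.array_split(np.argsort(p, kind="stable"), n_groups)
    return [(float(p[g].mean()), float(y[g].mean()), len(g)) for g in groups]


def hosmer_lemeshow(y, p, n_groups=10):
    """Hosmer-Lemeshow statistic and p-value (chi-square, n_groups - 2 df)."""
    df = n_groups - 2
    if df <= 0 or df % 2:
        raise ValueError("this sketch needs n_groups - 2 to be even and positive")
    stat = 0.0
    for g in np.array_split(np.argsort(p, kind="stable"), n_groups):
        observed, expected, n = y[g].sum(), p[g].sum(), len(g)
        # (O - E)^2 / (n * pbar * (1 - pbar)), with pbar = E / n
        stat += (observed - expected) ** 2 / (expected * (1.0 - expected / n))
    # Chi-square survival function for even df: exp(-x/2) * sum_k (x/2)^k / k!
    half = stat / 2.0
    p_value = math.exp(-half) * sum(half ** k / math.factorial(k) for k in range(df // 2))
    return float(stat), p_value
```

Overestimation in the highest quintile, as described above, would appear as a last row whose mean predicted risk exceeds its observed readmission rate.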

Full-Stay Pneumonia-Specific EHR Model vs Other Models

The full-stay pneumonia-specific EHR model outperformed the corresponding full-stay multi-condition EHR model, as well as the first-day pneumonia-specific EHR model, the CMS pneumonia model, the updated PSI, and the updated CURB-65 (Table 3, Supplemental Tables 5 and 6, and Supplemental Figures 2B and 2C). Compared to the full-stay multi-condition and first-day pneumonia-specific EHR models, the full-stay pneumonia-specific EHR model had better discrimination and reclassification (NRI, 0.09 and 0.08, respectively) and stratified individuals across a broader range of readmission risk (Table 3). It was also better calibrated in the highest risk quintile than the full-stay multi-condition EHR model (Figure 1C and 1D).

Updated vs First-Day Modified PSI and CURB-65 Scores

The updated PSI was more strongly predictive of readmission than the PSI calculated on the day of admission (Wald test, 9.83; P = 0.002). Each 10-point increase in the updated PSI was associated with 22% higher odds of readmission, vs 11% higher odds for the PSI calculated upon admission (Table 2). The better predictive ability of the updated PSI and CURB-65 scores was also reflected in their superior discrimination and calibration relative to the respective first-day severity of illness scores (Table 3).
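As a worked illustration, the per-10-point odds ratios can be converted to per-point log-odds coefficients and extended to larger score differences. This assumes that the PSI enters each model linearly on the log-odds scale, which a constant per-10-point odds ratio implies. The figures are arithmetic on the reported odds ratios, not additional results.

```latex
\begin{aligned}
\mathrm{OR}_{10} &= e^{10\beta} \quad\Longrightarrow\quad \beta = \frac{\ln \mathrm{OR}_{10}}{10} \\
\beta_{\text{updated}} &= \frac{\ln 1.22}{10} \approx 0.0199, \qquad
\beta_{\text{admission}} = \frac{\ln 1.11}{10} \approx 0.0104 \\
\mathrm{OR}_{30} &= \mathrm{OR}_{10}^{\,3}: \qquad 1.22^{3} \approx 1.82 \ \text{(updated PSI)}, \qquad 1.11^{3} \approx 1.37 \ \text{(admission PSI)}
\end{aligned}
```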

DISCUSSION

Using routinely available EHR data from 6 diverse hospitals, we developed 2 pneumonia-specific readmission risk-prediction models designed to help hospitals identify patients hospitalized with pneumonia who are at high risk for readmission. Overall, the pneumonia-specific model using EHR data from the entire hospitalization outperformed all other models in all aspects of performance (discrimination, calibration, and reclassification). These included the first-day pneumonia-specific model using data present only on admission, our own multi-condition EHR models, and the CMS pneumonia model based on administrative claims data. Socioeconomic status, prior hospitalizations, thrombocytosis, and measures of clinical severity and stability were important predictors of 30-day all-cause readmission among patients hospitalized with pneumonia. Additionally, an updated PSI score at discharge was a stronger independent predictor of readmission than the PSI score calculated upon admission, and including the updated PSI in our full-stay pneumonia model improved prediction of 30-day readmissions.

The marked improvement in performance of the full-stay pneumonia-specific EHR model over the first-day pneumonia-specific model suggests that clinical stability and trajectory during hospitalization (as modeled through disposition status, updated PSI, and vital sign instabilities at discharge) are important predictors of 30-day readmission among patients hospitalized for pneumonia, which was not the case for our EHR-based multi-condition models.19 With the inclusion of these measures, the full-stay pneumonia-specific model correctly reclassified an additional 8% of patients according to their true risk compared to the first-day pneumonia-specific model. One implication of these findings is that hospitals interested in targeting their highest risk patients with pneumonia for transitional care interventions could do so using the first-day pneumonia-specific EHR model, and then refine their targeting at the time of discharge using the full-stay pneumonia model. This staged risk-prediction strategy would enable hospitals to initiate transitional care interventions for high-risk individuals in the inpatient setting (eg, patient education).7 Hospitals could then enroll both persistent and newly identified high-risk individuals, based on their illness severity and stability at discharge, in outpatient interventions (eg, a follow-up telephone call) during the immediate post-discharge period, an interval of heightened vulnerability to adverse events.28 Hospitals could implement this approach by building these risk-prediction models directly into the EHR, or by extracting EHR data in near real time, as our group has done successfully for heart failure.7
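The staged strategy described above amounts to a two-checkpoint decision rule. The sketch below is purely illustrative: `staged_plan` and its bucket labels are hypothetical, and the two cutoffs are placeholders that a hospital would have to set itself. For example, it could use the predicted risk at the 60th percentile (the lower boundary of the top 2 quintiles) of each model in a local reference cohort. No cutoff values are reported here.

```python
def staged_plan(first_day_risk, full_stay_risk, first_day_cut, full_stay_cut):
    """Hypothetical two-stage targeting rule for one patient.

    first_day_risk / full_stay_risk: predicted readmission probabilities from
    the first-day and full-stay models. The cutoffs are placeholders.
    """
    high_on_admission = first_day_risk >= first_day_cut
    high_at_discharge = full_stay_risk >= full_stay_cut
    if high_on_admission and high_at_discharge:
        label = "persistent high risk"
    elif high_at_discharge:
        label = "newly identified high risk"
    elif high_on_admission:
        label = "high risk on admission only"
    else:
        label = "not high risk"
    return {
        "label": label,
        # Stage 1: start inpatient interventions (eg, patient education).
        "inpatient_intervention": high_on_admission,
        # Stage 2: enroll persistent and newly identified high-risk patients
        # in post-discharge interventions (eg, a follow-up telephone call).
        "outpatient_intervention": high_at_discharge,
    }
```

Keeping the two flags separate mirrors the text: a patient flagged only at admission still receives the inpatient component, while the outpatient component depends solely on the discharge assessment.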

Another key implication of our study is that, for pneumonia, a disease-specific modeling approach has better predictive ability than a multi-condition model. Compared to the multi-condition models, the first-day and full-stay pneumonia-specific EHR models correctly reclassified an additional 6% and 9% of patients, respectively. Thus, hospitals interested in identifying the highest risk patients with pneumonia for targeted interventions should use the disease-specific models, provided the costs and resources of doing so are within reach of the healthcare system.

An additional novel finding of our study is the added value of an updated PSI for predicting readmission. Prior studies of pneumonia severity of illness scores have calculated the PSI and CURB-65 scores using only data present on admission.16,24 While our study confirms that the PSI calculated upon admission is a significant predictor of readmission,23,29 it extends this work by showing that an updated PSI calculated at the time of discharge is an even stronger predictor of readmission, and that its inclusion in the model significantly improves risk stratification and prognostication.

Our study has several strengths. First, we used data from a common EHR system, potentially allowing the pneumonia-specific models to be implemented in real time across a number of hospitals. Using routinely collected data for risk-prediction modeling makes this approach scalable and sustainable, because it obviates the need for burdensome data collection and entry. Second, to our knowledge, this is the first study to measure the additive influence of illness severity and stability at discharge on readmission risk among patients hospitalized with pneumonia. Third, our study population was drawn from 6 hospitals diverse in payer status, age, race/ethnicity, and socioeconomic status. Fourth, our models are less likely to be overfit to the idiosyncrasies of our data, given that several predictors in our final pneumonia-specific models have previously been associated with readmission in this population, including marital status,13,30 income,11,31 prior hospitalizations,11,13 thrombocytosis,32-34 and vital sign instabilities on discharge.17 Lastly, the discrimination of the CMS pneumonia model in our cohort (C statistic, 0.64) closely matched that observed in 4 independent cohorts (C statistic, 0.63), suggesting adequate generalizability of our study setting and population.10,12

Our results should be interpreted in the context of several limitations. First, generalizability to regions beyond north Texas is unknown. Second, although we included a diverse cohort of safety net, community, teaching, and nonteaching hospitals, the pneumonia-specific models were not externally validated in a separate cohort, which may have led to optimistic estimates of model performance. Third, the PSI and CURB-65 scores were modified to use diagnostic codes for altered mental status (and, for the PSI, pleural effusion), and the PSI omitted nursing home residence. The independent associations and predictive ability of these scores are therefore likely attenuated. Fourth, we were unable to include data on medications (antibiotic and steroid use) and outpatient visits, which may influence readmission risk.2,9,13,35-40 Fifth, we included only the first pneumonia hospitalization per patient. Had we included multiple hospitalizations per patient, we would anticipate better performance of the 2 pneumonia-specific EHR models, since prior hospitalization was a robust predictor of readmission.

In conclusion, the full-stay pneumonia-specific EHR readmission risk-prediction model outperformed the first-day pneumonia-specific model, the multi-condition EHR models, and the CMS pneumonia model. These findings suggest that measures of clinical severity and stability at the time of discharge are important for identifying patients at highest risk for readmission, and that EHR data routinely collected in clinical practice can be used to accurately predict readmission risk among patients hospitalized for pneumonia.

Acknowledgments

The authors would like to acknowledge Ruben Amarasingham, MD, MBA, president and chief executive officer of Parkland Center for Clinical Innovation, and Ferdinand Velasco, MD, chief health information officer at Texas Health Resources for their assistance in assembling the 6-hospital cohort used in this study.

Disclosures

This work was supported by the Agency for Healthcare Research and Quality-funded UT Southwestern Center for Patient-Centered Outcomes Research (R24 HS022418-01); the Commonwealth Foundation (#20100323); the UT Southwestern KL2 Scholars Program supported by the National Institutes of Health (KL2 TR001103 to ANM and OKN); and the National Center for Advancing Translational Sciences at the National Institutes of Health (U54 RFA-TR-12-006 to EAH). The study sponsors had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; or preparation, review, or approval of the manuscript. The authors have no financial conflicts of interest to disclose.

Pneumonia is a leading cause of hospitalizations in the U.S., accounting for more than 1.1 million discharges annually.1 Pneumonia is frequently complicated by hospital readmission, which is costly and potentially avoidable.2,3 Due to financial penalties imposed on hospitals for higher than expected 30-day readmission rates, there is increasing attention to implementing interventions to reduce readmissions in this population.4,5 However, because these programs are resource-intensive, interventions are thought to be most cost-effective if they are targeted to high-risk individuals who are most likely to benefit.6-8

Current pneumonia-specific readmission risk-prediction models that could enable identification of high-risk patients suffer from poor predictive ability, greatly limiting their use, and most were validated among older adults or by using data from single academic medical centers, limiting their generalizability.9-14 A potential reason for poor predictive accuracy is the omission of known robust clinical predictors of pneumonia-related outcomes, including pneumonia severity of illness and stability on discharge.15-17 Approaches using electronic health record (EHR) data, which include this clinically granular data, could enable hospitals to more accurately and pragmatically identify high-risk patients during the index hospitalization and enable interventions to be initiated prior to discharge.

An alternative strategy to identifying high-risk patients for readmission is to use a multi-condition risk-prediction model. Developing and implementing models for every condition may be time-consuming and costly. We have derived and validated 2 multi-condition risk-prediction models using EHR data—1 using data from the first day of hospital admission (‘first-day’ model), and the second incorporating data from the entire hospitalization (‘full-stay’ model) to reflect in-hospital complications and clinical stability at discharge.18,19 However, it is unknown if a multi-condition model for pneumonia would perform as well as a disease-specific model.

This study aimed to develop 2 EHR-based pneumonia-specific readmission risk-prediction models using data routinely collected in clinical practice—a ‘first-day’ and a ‘full-stay’ model—and compare the performance of each model to: 1) one another; 2) the corresponding multi-condition EHR model; and 3) to other potentially useful models in predicting pneumonia readmissions (the Centers for Medicare and Medicaid Services [CMS] pneumonia model, and 2 commonly used pneumonia severity of illness scores validated for predicting mortality). We hypothesized that the pneumonia-specific EHR models would outperform other models; and the full-stay pneumonia-specific model would outperform the first-day pneumonia-specific model.

METHODS

Study Design, Population, and Data Sources

We conducted an observational study using EHR data collected from 6 hospitals (including safety net, community, teaching, and nonteaching hospitals) in north Texas between November 2009 and October 2010, All hospitals used the Epic EHR (Epic Systems Corporation, Verona, WI). Details of this cohort have been published.18,19

We included consecutive hospitalizations among adults 18 years and older discharged from any medicine service with principal discharge diagnoses of pneumonia (ICD-9-CM codes 480-483, 485, 486-487), sepsis (ICD-9-CM codes 038, 995.91, 995.92, 785.52), or respiratory failure (ICD-9-CM codes 518.81, 518.82, 518.84, 799.1) when the latter 2 were also accompanied by a secondary diagnosis of pneumonia.20 For individuals with multiple hospitalizations during the study period, we included only the first hospitalization. We excluded individuals who died during the index hospitalization or within 30 days of discharge, were transferred to another acute care facility, or left against medical advice.

Outcomes

The primary outcome was all-cause 30-day readmission, defined as a nonelective hospitalization within 30 days of discharge to any of 75 acute care hospitals within a 100-mile radius of Dallas, ascertained from an all-payer regional hospitalization database.

Predictor Variables for the Pneumonia-Specific Readmission Models

The selection of candidate predictors was informed by our validated multi-condition risk-prediction models using EHR data available within 24 hours of admission (‘first-day’ multi-condition EHR model) or during the entire hospitalization (‘full-stay’ multi-condition EHR model).18,19 For the pneumonia-specific models, we included all variables in our published multi-condition models as candidate predictors, including sociodemographics, prior utilization, Charlson Comorbidity Index, select laboratory and vital sign abnormalities, length of stay, hospital complications (eg, venous thromboembolism), vital sign instabilities, and disposition status (see Supplemental Table 1 for complete list of variables). We also assessed additional variables specific to pneumonia for inclusion that were: (1) available in the EHR of all participating hospitals; (2) routinely collected or available at the time of admission or discharge; and (3) plausible predictors of adverse outcomes based on literature and clinical expertise. These included select comorbidities (eg, psychiatric conditions, chronic lung disease, history of pneumonia),10,11,21,22 the pneumonia severity index (PSI),16,23,24 intensive care unit stay, and receipt of invasive or noninvasive ventilation. We used a modified PSI score because certain data elements were missing. The modified PSI (henceforth referred to as PSI) did not include nursing home residence and included diagnostic codes as proxies for the presence of pleural effusion (ICD-9-CM codes 510, 511.1, and 511.9) and altered mental status (ICD-9-CM codes 780.0X, 780.97, 293.0, 293.1, and 348.3X).

Statistical Analysis

Model Derivation. Candidate predictor variables were classified as available in the EHR within 24 hours of admission and/or at the time of discharge. For example, socioeconomic factors could be ascertained within the first day of hospitalization, whereas length of stay would not be available until the day of discharge. Predictors with missing values were assumed to be normal (less than 1% missing for each variable). Univariate relationships between readmission and each candidate predictor were assessed in the overall cohort using a pre-specified significance threshold of P ≤ 0.10. Significant variables were entered in the respective first-day and full-stay pneumonia-specific multivariable logistic regression models using stepwise-backward selection with a pre-specified significance threshold of P ≤ 0.05. In sensitivity analyses, we alternately derived our models using stepwise-forward selection, as well as stepwise-backward selection minimizing the Bayesian information criterion and Akaike information criterion separately. These alternate modeling strategies yielded identical predictors to our final models.

Model Validation. Model validation was performed using 5-fold cross-validation, with the overall cohort randomly divided into 5 equal-size subsets.25 For each cycle, 4 subsets were used for training to estimate model coefficients, and the fifth subset was used for validation. This cycle was repeated 5 times with each randomly-divided subset used once as the validation set. We repeated this entire process 50 times and averaged the C statistic estimates to derive an optimism-corrected C statistic. Model calibration was assessed qualitatively by comparing predicted to observed probabilities of readmission by quintiles of predicted risk, and with the Hosmer-Lemeshow goodness-of-fit test.

Comparison to Other Models. The main comparisons of the first-day and full-stay pneumonia-specific EHR model performance were to each other and the corresponding multi-condition EHR model.18,19 The multi-condition EHR models were separately derived and validated within the larger parent cohort from which this study cohort was derived, and outperformed the CMS all-cause model, the HOSPITAL model, and the LACE index.19 To further triangulate our findings, given the lack of other rigorously validated pneumonia-specific risk-prediction models for readmission,14 we compared the pneumonia-specific EHR models to the CMS pneumonia model derived from administrative claims data,10 and 2 commonly used risk-prediction scores for short-term mortality among patients with community-acquired pneumonia, the PSI and CURB-65 scores.16 Although derived and validated using patient-level data, the CMS model was developed to benchmark hospitals according to hospital-level readmission rates.10 The CURB-65 score in this study was also modified to include the same altered mental status diagnostic codes according to the modified PSI as a proxy for “confusion.” Both the PSI and CURB-65 scores were calculated using the most abnormal values within the first 24 hours of admission. The ‘updated’ PSI and the ‘updated’ CURB-65 were calculated using the most abnormal values within 24 hours prior to discharge, or the last known observation prior to discharge if no results were recorded within this time period. A complete list of variables for each of the comparison models are shown in Supplemental Table 1.

We assessed model performance by calculating the C statistic, integrated discrimination index, and net reclassification index (NRI) compared to our pneumonia-specific models. The integrated discrimination index is the difference in the mean predicted probability of readmission between patients who were and were not actually readmitted between 2 models, where more positive values suggest improvement in model performance compared to a reference model.26 The NRI is defined as the sum of the net proportions of correctly reclassified persons with and without the event of interest.27 Here, we calculated a category-based NRI to evaluate the performance of pneumonia-specific models in correctly classifying individuals with and without readmissions into the 2 highest readmission risk quintiles vs the lowest 3 risk quintiles compared to other models.27 This pre-specified cutoff is relevant for hospitals interested in identifying the highest risk individuals for targeted intervention.7 Finally, we assessed calibration of comparator models in our cohort by comparing predicted probability to observed probability of readmission by quintiles of risk for each model. We conducted all analyses using Stata 12.1 (StataCorp, College Station, Texas). This study was approved by the University of Texas Southwestern Medical Center Institutional Review Board.

RESULTS

Of 1463 index hospitalizations (Supplemental Figure 1), the 30-day all-cause readmission rate was 13.6%. Individuals with a 30-day readmission had markedly different sociodemographic and clinical characteristics compared to those not readmitted (Table 1; see Supplemental Table 2 for additional clinical characteristics).

Derivation, Validation, and Performance of the Pneumonia-Specific Readmission Risk-Prediction Models

The final first-day pneumonia-specific EHR model included 7 variables, including sociodemographic characteristics; prior hospitalizations; thrombocytosis, and PSI (Table 2). The first-day pneumonia-specific model had adequate discrimination (C statistic, 0.695; optimism-corrected C statistic 0.675, 95% confidence interval [CI], 0.667-0.685; Table 3). It also effectively stratified individuals across a broad range of risk (average predicted decile of risk ranged from 4% to 33%; Table 3) and was well calibrated (Supplemental Table 3).

The final full-stay pneumonia-specific EHR readmission model included 8 predictors, including 3 variables from the first-day model (median income, thrombocytosis, and prior hospitalizations; Table 2). The full-stay pneumonia-specific EHR model also included vital sign instabilities on discharge, updated PSI, and disposition status (ie, being discharged with home health or to a post-acute care facility was associated with greater odds of readmission, and hospice with lower odds). The full-stay pneumonia-specific EHR model had good discrimination (C statistic, 0.731; optimism-corrected C statistic, 0.714; 95% CI, 0.706-0.720), and stratified individuals across a broad range of risk (average predicted decile of risk ranged from 3% to 37%; Table 3), and was also well calibrated (Supplemental Table 3).

First-Day Pneumonia-Specific EHR Model vs First-Day Multi-Condition EHR Model

The first-day pneumonia-specific EHR model outperformed the first-day multi-condition EHR model with better discrimination (P = 0.029) and more correctly classified individuals in the top 2 highest risk quintiles vs the bottom 3 risk quintiles (Table 3, Supplemental Table 4, and Supplemental Figure 2A). With respect to calibration, the first-day multi-condition EHR model overestimated risk among the highest quintile risk group compared to the first-day pneumonia-specific EHR model (Figure 1A, 1B).

Full-Stay Pneumonia-Specific EHR Model vs Other Models

The full-stay pneumonia-specific EHR model comparatively outperformed the corresponding full-stay multi-condition EHR model, as well as the first-day pneumonia-specific EHR model, the CMS pneumonia model, the updated PSI, and the updated CURB-65 (Table 3, Supplemental Table 5, Supplemental Table 6, and Supplemental Figures 2B and 2C). Compared to the full-stay multi-condition and first-day pneumonia-specific EHR models, the full-stay pneumonia-specific EHR model had better discrimination, better reclassification (NRI, 0.09 and 0.08, respectively), and was able to stratify individuals across a broader range of readmission risk (Table 3). It also had better calibration in the highest quintile risk group compared to the full-stay multi-condition EHR model (Figure 1C and 1D).

Updated vs First-Day Modified PSI and CURB-65 Scores

The updated PSI was more strongly predictive of readmission than the PSI calculated on the day of admission (Wald test, 9.83; P = 0.002). Each 10-point increase in the updated PSI was associated with a 22% increased odds of readmission vs an 11% increase for the PSI calculated upon admission (Table 2). The improved predictive ability of the updated PSI and CURB-65 scores was also reflected in the superior discrimination and calibration vs the respective first-day pneumonia severity of illness scores (Table 3).

DISCUSSION

Using routinely available EHR data from 6 diverse hospitals, we developed 2 pneumonia-specific readmission risk-prediction models that aimed to allow hospitals to identify patients hospitalized with pneumonia at high risk for readmission. Overall, we found that a pneumonia-specific model using EHR data from the entire hospitalization outperformed all other models—including the first-day pneumonia-specific model using data present only on admission, our own multi-condition EHR models, and the CMS pneumonia model based on administrative claims data—in all aspects of model performance (discrimination, calibration, and reclassification). We found that socioeconomic status, prior hospitalizations, thrombocytosis, and measures of clinical severity and stability were important predictors of 30-day all-cause readmissions among patients hospitalized with pneumonia. Additionally, an updated discharge PSI score was a stronger independent predictor of readmissions compared to the PSI score calculated upon admission; and inclusion of the updated PSI in our full-stay pneumonia model led to improved prediction of 30-day readmissions.

The marked improvement in performance of the full-stay pneumonia-specific EHR model compared to the first-day pneumonia-specific model suggests that clinical stability and trajectory during hospitalization (as modeled through disposition status, updated PSI, and vital sign instabilities at discharge) are important predictors of 30-day readmission among patients hospitalized for pneumonia, which was not the case for our EHR-based multi-condition models.19 With the inclusion of these measures, the full-stay pneumonia-specific model correctly reclassified an additional 8% of patients according to their true risk compared to the first-day pneumonia-specific model. One implication of these findings is that hospitals interested in targeting their highest risk individuals with pneumonia for transitional care interventions could do so using the first-day pneumonia-specific EHR model and could refine their targeted strategy at the time of discharge by using the full-stay pneumonia model. This staged risk-prediction strategy would enable hospitals to initiate transitional care interventions for high-risk individuals in the inpatient setting (ie, patient education).7 Then, hospitals could enroll both persistent and newly identified high-risk individuals for outpatient interventions (ie, follow-up telephone call) in the immediate post-discharge period, an interval characterized by heightened vulnerability for adverse events,28 based on patients’ illness severity and stability at discharge. This approach can be implemented by hospitals by building these risk-prediction models directly into the EHR, or by extracting EHR data in near real time as our group has done successfully for heart failure.7

Another key implication of our study is that, for pneumonia, a disease-specific modeling approach has better predictive ability than a multi-condition model. Compared with the multi-condition models, the first-day and full-stay pneumonia-specific EHR models correctly reclassified an additional 6% and 9% of patients, respectively. Thus, hospitals interested in identifying their highest-risk patients with pneumonia for targeted interventions should use the disease-specific models, provided the costs and resources of doing so are within reach of the healthcare system.
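Reclassification results such as these are commonly summarized with the net reclassification index (NRI), which credits a new model for moving readmitted patients into higher risk categories and non-readmitted patients into lower ones. The sketch below implements one common formulation, the categorical NRI; the risk cutoffs and toy data are hypothetical, because the categories used in the study are not stated in this section.

```python
from bisect import bisect_right


def risk_category(prob, cutoffs):
    """Index of the risk band containing prob; cutoffs must be sorted ascending."""
    return bisect_right(cutoffs, prob)


def categorical_nri(outcomes, p_old, p_new, cutoffs):
    """Categorical net reclassification index of p_new relative to p_old."""
    up_event = down_event = up_nonevent = down_nonevent = 0
    n_event = n_nonevent = 0
    for readmitted, old, new in zip(outcomes, p_old, p_new):
        moved_up = risk_category(new, cutoffs) > risk_category(old, cutoffs)
        moved_down = risk_category(new, cutoffs) < risk_category(old, cutoffs)
        if readmitted:
            n_event += 1
            up_event += moved_up
            down_event += moved_down
        else:
            n_nonevent += 1
            up_nonevent += moved_up
            down_nonevent += moved_down
    # Net correct movement among events plus net correct movement among non-events.
    return (up_event - down_event) / n_event + (down_nonevent - up_nonevent) / n_nonevent


# Toy example with hypothetical risk bands: <10%, 10% to <20%, and >=20%.
cutoffs = [0.10, 0.20]
outcomes = [1, 1, 0, 0]
old_model = [0.15, 0.25, 0.15, 0.05]
new_model = [0.25, 0.25, 0.05, 0.15]
print(categorical_nri(outcomes, old_model, new_model, cutoffs))
```

In the toy data, one readmitted patient moves up (a gain of 1/2 among events), while one non-readmitted patient moves down and another moves up (a net of zero among non-events), giving an NRI of 0.5.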

An additional novel finding of our study is the added value of an updated PSI for predicting adverse events. Prior studies of pneumonia severity of illness scores have calculated the PSI and CURB-65 scores using only data present on admission.16,24 Our study confirms that the PSI calculated upon admission is a significant predictor of readmission,23,29 and extends this work by showing that an updated PSI score calculated at the time of discharge is an even stronger predictor of readmission; its inclusion in the model significantly improves risk stratification and prognostication.
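An updated severity score applies the same scoring rule to values from the day of discharge rather than the day of admission. Because the PSI has 20 weighted items, the sketch below uses the shorter CURB-65 score purely for illustration. It follows the standard CURB-65 criteria, not the modified versions used in this study, and the `Snapshot` record and example values are hypothetical. Confusion is treated as a yes/no flag; in the study it was approximated from diagnostic codes.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Clinical values recorded on a single hospital day (hypothetical structure)."""
    confusion: bool       # altered mental status
    urea_mmol_l: float    # serum urea, mmol/L
    resp_rate: int        # breaths per minute
    systolic_bp: int      # mm Hg
    diastolic_bp: int     # mm Hg


def curb65(day: Snapshot, age: int) -> int:
    """Standard CURB-65: one point per criterion met (range 0 to 5)."""
    return sum([
        day.confusion,
        day.urea_mmol_l > 7,
        day.resp_rate >= 30,
        day.systolic_bp < 90 or day.diastolic_bp <= 60,
        age >= 65,
    ])


age = 72
admission = Snapshot(confusion=True, urea_mmol_l=9.5, resp_rate=32, systolic_bp=88, diastolic_bp=55)
discharge = Snapshot(confusion=False, urea_mmol_l=6.0, resp_rate=18, systolic_bp=118, diastolic_bp=70)
print(curb65(admission, age), curb65(discharge, age))  # admission score vs. updated score
```

Two patients with the same admission score can leave the hospital with very different discharge scores. The updated score captures that difference.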

Our study has several strengths. First, we used data from a common EHR system, potentially allowing the pneumonia-specific models to be implemented in real time across a number of hospitals. The use of routinely collected data for risk-prediction modeling makes this approach scalable and sustainable, because it obviates the need for burdensome data collection and entry. Second, to our knowledge, this is the first study to measure the additive influence of illness severity and stability at discharge on readmission risk among patients hospitalized with pneumonia. Third, our study population was derived from 6 hospitals diverse in payer status, age, race/ethnicity, and socioeconomic status. Fourth, our models are less likely to be overfit to the idiosyncrasies of our data, given that several predictors in our final pneumonia-specific models have previously been associated with readmission in this population, including marital status,13,30 income,11,31 prior hospitalizations,11,13 thrombocytosis,32-34 and vital sign instabilities on discharge.17 Lastly, the discrimination of the CMS pneumonia model in our cohort (C statistic, 0.64) closely matched that observed in 4 independent cohorts (C statistic, 0.63), suggesting adequate generalizability of our study setting and population.10,12
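The C statistic cited here is the probability that a randomly chosen readmitted patient receives a higher predicted risk than a randomly chosen patient who was not readmitted, with ties counted as one half. The following minimal pairwise sketch implements that definition on invented data. In practice, a library routine such as scikit-learn's `roc_auc_score` computes the same quantity more efficiently.

```python
from itertools import product


def c_statistic(outcomes, risks):
    """Concordance (C) statistic for a binary outcome, over all event/non-event pairs."""
    events = [r for y, r in zip(outcomes, risks) if y]
    nonevents = [r for y, r in zip(outcomes, risks) if not y]
    if not events or not nonevents:
        raise ValueError("need at least one event and one non-event")
    concordant = 0.0
    for r_event, r_nonevent in product(events, nonevents):
        if r_event > r_nonevent:
            concordant += 1.0   # readmitted patient ranked higher: concordant pair
        elif r_event == r_nonevent:
            concordant += 0.5   # tied predictions count as half
    return concordant / (len(events) * len(nonevents))


outcomes = [1, 0, 1, 0, 0]
risks = [0.8, 0.3, 0.4, 0.4, 0.1]
print(round(c_statistic(outcomes, risks), 3))
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation. The values reported in this study (0.63 to 0.73) fall between these extremes.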

Our results should be interpreted in the context of several limitations. First, generalizability to regions beyond north Texas is unknown. Second, although we included a diverse cohort of safety-net, community, teaching, and nonteaching hospitals, the pneumonia-specific models were not externally validated in a separate cohort, which may have led to optimistic estimates of model performance. Third, the PSI and CURB-65 scores were modified to use diagnostic codes for altered mental status and pleural effusion and to omit nursing home residence. Thus, the independent associations of the PSI and CURB-65 scores with readmission, and their predictive ability, are likely attenuated. Fourth, we were unable to include data on medications (antibiotic and steroid use) and outpatient visits, which may influence readmission risk.2,9,13,35-40 Fifth, we included only the first pneumonia hospitalization per patient in this study. Had we included multiple hospitalizations per patient, we would anticipate better performance of the 2 pneumonia-specific EHR models, since prior hospitalization was a robust predictor of readmission.
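Restricting the cohort to each patient's first pneumonia hospitalization, as described in the fifth limitation, amounts to keeping the earliest admission per patient. A minimal sketch follows; the `(patient_id, admit_date, encounter_id)` tuple layout and the example records are hypothetical.

```python
from datetime import date


def first_admissions(admissions):
    """Keep only the earliest admission for each patient.

    admissions: iterable of (patient_id, admit_date, encounter_id) tuples.
    Returns the retained records sorted by admission date, then patient_id.
    """
    earliest = {}
    for record in admissions:
        patient_id, admit_date, _ = record
        if patient_id not in earliest or admit_date < earliest[patient_id][1]:
            earliest[patient_id] = record
    return sorted(earliest.values(), key=lambda r: (r[1], r[0]))


records = [
    ("p1", date(2010, 3, 1), "enc-3"),
    ("p1", date(2009, 11, 5), "enc-1"),  # earlier stay for p1 is kept
    ("p2", date(2010, 1, 2), "enc-2"),
]
print(first_admissions(records))
```

Under this rule, later pneumonia stays for the same patient are excluded from the index cohort.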

In conclusion, the full-stay pneumonia-specific EHR readmission risk-prediction model outperformed the first-day pneumonia-specific model, the multi-condition EHR models, and the CMS pneumonia model. This suggests that measures of clinical severity and stability at the time of discharge are important predictors for identifying patients at highest risk for readmission, and that EHR data routinely collected in clinical practice can be used to accurately predict readmission risk among patients hospitalized for pneumonia.

Acknowledgments

The authors would like to acknowledge Ruben Amarasingham, MD, MBA, president and chief executive officer of Parkland Center for Clinical Innovation, and Ferdinand Velasco, MD, chief health information officer at Texas Health Resources, for their assistance in assembling the 6-hospital cohort used in this study.

Disclosures

This work was supported by the Agency for Healthcare Research and Quality-funded UT Southwestern Center for Patient-Centered Outcomes Research (R24 HS022418-01); the Commonwealth Foundation (#20100323); the UT Southwestern KL2 Scholars Program supported by the National Institutes of Health (KL2 TR001103 to ANM and OKN); and the National Center for Advancing Translational Sciences at the National Institutes of Health (U54 RFA-TR-12-006 to EAH). The study sponsors had no role in the design and conduct of the study; the collection, management, analysis, and interpretation of the data; or the preparation, review, or approval of the manuscript. The authors have no financial conflicts of interest to disclose.

1. Centers for Disease Control and Prevention. Pneumonia. http://www.cdc.gov/nchs/fastats/pneumonia.htm. Accessed January 26, 2016.

33. Prina E, Ferrer M, Ranzani OT, et al. Thrombocytosis is a marker of poor outcome in community-acquired pneumonia. Chest. 2013;143(3):767-775.

34. Violi F, Cangemi R, Calvieri C. Pneumonia, thrombosis and vascular disease. J Thromb Haemost. 2014;12(9):1391-1400.

35. Weinberger M, Oddone EZ, Henderson WG. Does increased access to primary care reduce hospital readmissions? Veterans Affairs Cooperative Study Group on Primary Care and Hospital Readmission. N Engl J Med. 1996;334(22):1441-1447.

36. Field TS, Ogarek J, Garber L, Reed G, Gurwitz JH. Association of early post-discharge follow-up by a primary care physician and 30-day rehospitalization among older adults. J Gen Intern Med. 2015;30(5):565-571.

37. Spatz ES, Sheth SD, Gosch KL, et al. Usual source of care and outcomes following acute myocardial infarction. J Gen Intern Med. 2014;29(6):862-869.

38. Brooke BS, Stone DH, Cronenwett JL, et al. Early primary care provider follow-up and readmission after high-risk surgery. JAMA Surg. 2014;149(8):821-828.

39. Adamuz J, Viasus D, Campreciós-Rodriguez P, et al. A prospective cohort study of healthcare visits and rehospitalizations after discharge of patients with community-acquired pneumonia. Respirology. 2011;16(7):1119-1126.

40. Shorr AF, Zilberberg MD, Reichley R, et al. Readmission following hospitalization for pneumonia: the impact of pneumonia type and its implication for hospitals. Clin Infect Dis. 2013;57(3):362-367.

© 2017 Society of Hospital Medicine

Sneak Peek: Journal of Hospital Medicine

BACKGROUND: Readmissions after hospitalization for pneumonia are common, but the few existing risk-prediction models have poor to modest predictive ability. Data routinely collected in the electronic health record (EHR) may improve prediction.

DESIGN: Observational cohort study using backward-stepwise selection and cross validation.

SUBJECTS: Consecutive pneumonia hospitalizations from six diverse hospitals in north Texas from 2009 to 2010.

MEASURES: All-cause, nonelective, 30-day readmissions, ascertained from 75 regional hospitals.

RESULTS: Of 1,463 patients, 13.6% were readmitted. The first-day, pneumonia-specific model included sociodemographic factors, prior hospitalizations, thrombocytosis, and a modified pneumonia severity index. The full-stay model included, as additional predictors, disposition status, vital sign instabilities on discharge, and an updated pneumonia severity index calculated using values from the day of discharge. The full-stay, pneumonia-specific model outperformed the first-day model (C-statistic, 0.731 vs. 0.695; P = .02; net reclassification index = 0.08). Compared with a validated multicondition readmission model, the Centers for Medicare & Medicaid Services pneumonia model, and two commonly used pneumonia severity of illness scores, the full-stay pneumonia-specific model had better discrimination (C-statistic range of the comparison models, 0.604-0.681; P < .01 for all comparisons), predicted a broader range of risk, and better reclassified individuals by their true risk (net reclassification index range, 0.09-0.18).

CONCLUSIONS: EHR data collected from the entire hospitalization can accurately predict readmission risk among patients hospitalized for pneumonia. This approach outperforms a first-day, pneumonia-specific model, the Centers for Medicare & Medicaid Services pneumonia model, and two commonly used pneumonia severity of illness scores.
