Faculty Development for Hospitalists: A Call to Arms

Over the past two decades, the field of hospital medicine has gone from relative obscurity to a viable career pathway for approximately 50,000 physicians in this country.1 A subset of hospitalists pursue careers in academic medicine, which is a pathway that traditionally includes education and scholarship in addition to patient care. While the academic career pathway is well paved in many clinical specialties, it is still relatively underdeveloped for academic hospitalists, and thus what defines career success for this group is even less clear.

In this issue of the Journal of Hospital Medicine, Cumbler et al. performed a qualitative analysis to explore how early-career academic hospitalists self-define and perceive their career success.2 Drawing on interviews with 17 early-career hospitalists at 3 academic medical centers, the authors created a theoretical framework organized around a traditional conceptual model of career success that is divided into intrinsic and extrinsic motivating factors. They found that early-career academic hospitalists (clinician-educators in their first 2-5 years) defined their career success almost exclusively around factors intrinsic to their day-to-day job. These factors included excitement about their daily work, developing proficiency in the delivery of high-quality clinical care, and passion for doing work that is meaningful to them. In addition to these immediate job satisfiers, many hospitalists emphasized long-term career success factors such as becoming an expert in a particular domain of hospital medicine and gaining respect and recognition within their local or national environment. Surprisingly, compensation and career advancement through promotion, two traditional extrinsic career success factors, were not uniformly valued.

These findings come at a critical time for our field in which early-career faculty outnumber mid- and late-career faculty by an order of magnitude. Indeed, how to develop, promote, sustain, and retain young hospitalists is a topic on the minds of most hospital medicine group directors. Putting aside the impact of hospitalist turnover on productivity, patient care outcomes, and morale within an individual hospital medicine group, we agree with the authors that understanding and cultivating career success for academic hospitalists is imperative for the future of our field. For this reason, we launched a formal faculty development program at Penn this year, which focuses on supporting the growth of hospitalists in their first two years on faculty. The findings of this study provide interesting new perspectives and encourage us to continue our focus on early-career academic hospitalists. We laud the previous efforts in this area and hope that the paper by Cumbler et al. encourages and inspires other programs to start or accelerate their hospitalist faculty development efforts.3-5

However, some findings from this study are somewhat perplexing or even a bit discouraging for those who are invested in faculty development in academia. For example, the authors raise the possibility that there may be a disconnect in the minds of early-career hospitalists as it pertains to their thoughts on career success. On the one hand, the hospitalists interviewed in this study are happy doing their clinical work and cite this as a primary driver of their career success. On the other hand, they equate career success with things such as developing expertise within a particular domain of hospital medicine, acquiring leadership roles, collaborating academically with other specialties or professions, or developing new innovations. Presumably this is part of the reason that they selected a job in an academic setting as opposed to a community setting. However, in order to achieve these goals, one must devote time and effort to purposefully developing them. Therefore, identifying and developing mentors who can assist early-career hospitalists with identifying, articulating, and developing strategies to achieve both their short- and long-term career goals is critical. One mentor–mentee conversation may reveal that an individual hospitalist values being an excellent clinician and has little interest in developing a niche within hospital medicine; another may reveal a lack of awareness of available professional development resources; still another may uncover a lack of realism regarding the time or skills it takes to achieve a particular career goal. These realities highlight an imperative for our field to develop robust and sustainable mentorship programs for not only early-career hospitalists but also some mid-career hospitalists whose careers may not yet be fully developed. Indeed, one of the biggest challenges that have emerged in our experience with a faculty development program at Penn is creating meaningful mentorship and career development advice for mid-career hospitalists (late assistant or early associate professors who are typically 5-10 years into their careers).

We found it interesting that the hospitalists interviewed did not mention three of the four pillars of career satisfaction outlined in the white paper on Hospitalist Career Satisfaction from the Society of Hospital Medicine: workload/schedule, autonomy/control, and community/environment.6 Perhaps this is because hospitalists, like many other professionals, recognize that feeling satisfied in one’s career is not the same as feeling successful. Satisfaction in one’s career refers to the foundational needs that one requires in order to feel content, whereas success is more often equated with achievement, even if that achievement is simply the attainment of one’s own goals. The reality is that given the constant growth and change within teaching hospitals, and therefore academic hospital medicine groups, tending to the satisfiers for hospitalists (eg, schedule and workload) often takes a front seat to assisting faculty in achieving their individual career potential. We assert that despite the inherent difficulty, academic hospital medicine group leaders need to focus their attention on both the satisfaction and career success of their early-career faculty.

Finally, this paper raises many interesting questions

In conclusion, the findings of Cumbler et al. should promote unrest among leaders of academic hospital medicine groups and their departments of medicine. While it is inspiring to see so many early-career hospitalists focused on their daily happiness at work, we are unsure about whether they have the knowledge, tools, and guidance to achieve their self-professed academic goals, which many equate with career success. Given the continued growth of the hospital medicine workforce, we view this important new work as a national call to arms for the purposeful development of academic hospitalist faculty development programs.

Disclosures

Dr. Myers and Dr. Greysen have nothing to disclose.

1. Wachter RM, Goldman L. Zero to 50,000-The 20th anniversary of the hospitalist. N Engl J Med. 2016;375(11):1009-1011.

2. Cumbler E, Yirdaw E, Kneeland P, et al. What is career success for academic hospitalists? A qualitative analysis of early-career faculty perspectives. J Hosp Med. 2018;13(5):372-377. doi: 10.12788/jhm.2924. Published online first January 31, 2018.

3. Nagarur A, O’Neill RM, Lawton D, Greenwald JL. Supporting faculty development in hospital medicine: design and implementation of a personalized structured mentoring program. J Hosp Med. 2018;13(2):96-99.

4. Sehgal NL, Sharpe BA, Auerbach AA, Wachter RM. Investing in the future: building an academic hospitalist faculty development program. J Hosp Med. 2011;6(3):161-166.

5. Howell E, Kravet S, Kisuule F, Wright SM. An innovative approach to supporting hospitalist physicians towards academic success. J Hosp Med. 2008;3(4):314-318.

6. Society of Hospital Medicine Career Satisfaction Taskforce: A Challenge for a new Specialty. A White paper on hospitalist career satisfaction. http://dev.hospitalmedicine.org/Web/Media_Center/shm_white_papers.aspx. Accessed February 9, 2018.

©2018 Society of Hospital Medicine

If You Book It, Will They Come? Attendance at Postdischarge Follow-Up Visits Scheduled by Inpatient Providers

Given growing incentives to reduce readmission rates, predischarge checklists and bundles have recommended that inpatient providers schedule postdischarge follow-up visits (PDFVs) for their hospitalized patients.1-4 PDFVs have been linked to lower readmission rates in patients with chronic conditions, including congestive heart failure, psychiatric illnesses, and chronic obstructive pulmonary disease.5-8 In contrast, the impact of PDFVs on readmissions in hospitalized general medicine populations has been mixed.9-12 Beyond the presence or absence of PDFVs, it may be a patient’s inability to keep scheduled PDFVs that contributes more strongly to preventable readmissions.11

This challenge, dealing with the 12% to 37% of patients who miss their visits (“no-shows”), is not new.13-17 In high-risk patient populations, such as those with substance abuse, diabetes, or human immunodeficiency virus, no-shows (NSs) have been linked to poorer short-term and long-term clinical outcomes.16,18-20 Additionally, NSs pose a challenge for outpatient clinics and the healthcare system at large. The financial cost of NSs ranges from approximately $200 per patient in 2 analyses to $7 million in cumulative lost revenue per year at 1 large academic health system.13,17,21 As such, increasing attendance at PDFVs is a potential target for improving both patient outcomes and clinic productivity.

Most prior PDFV research has focused on readmission risk rather than PDFV attendance as the primary outcome.5-12 However, given the patient-oriented benefits of attending PDFVs and the clinic-oriented benefits of avoiding vacant time slots, NS PDFVs represent an important missed opportunity for our healthcare delivery system. To our knowledge, risk factors for PDFV nonattendance have not yet been systematically studied. The aim of our study was to analyze PDFV nonattendance, particularly NSs and same-day cancellations (SDCs), for hospitalizations and clinics within our healthcare system.

METHODS

Study Design

We conducted an observational cohort study of adult patients from 10 medical units at the Hospital of the University of Pennsylvania (a 789-bed quaternary-care hospital within an urban, academic medical system) who were scheduled with at least 1 PDFV. Specifically, the patients included in our analysis were hospitalized on general internal medicine services or medical subspecialty services with discharge dates between April 1, 2014, and March 31, 2015. Hospitalizations included in our study had at least 1 PDFV scheduled with an outpatient provider affiliated with the University of Pennsylvania Health System (UPHS). PDFVs scheduled with unaffiliated providers were not examined.

Each PDFV was requested by a patient’s inpatient care team. Once the care team had determined that a PDFV was clinically warranted, a member of the team (generally a resident, advanced practice provider, medical student, or designee) either called the UPHS clinic to schedule an appointment time or e-mailed the outpatient UPHS provider directly to facilitate a more urgent PDFV appointment time. Once a PDFV time was confirmed, PDFV details (ie, date, time, location, and phone number) were electronically entered into the patient’s discharge instructions by the inpatient care team. At the time of discharge, nurses reviewed these instructions with their patients. All patients left the hospital with a physical copy of these instructions. As part of routine care at our institution, patients then received automated telephone reminders from their UPHS-affiliated outpatient clinic 48 hours prior to each PDFV.

Data Collection

Our study was determined to meet criteria for quality improvement by the University of Pennsylvania’s Institutional Review Board. We used our healthcare system’s integrated electronic medical record system to track the dates of initial PDFV requests, the dates of hospitalization, and actual PDFV dates. PDFVs were included if the appointment request was made while a patient was hospitalized, including the day of discharge. Our study methodology only allowed us to investigate PDFVs scheduled with UPHS outpatient providers. We did not review discharge instructions or survey non-UPHS clinics to quantify visits scheduled with other providers, for example, community health centers or external private practices.

Exclusion criteria included the following: (1) office visits with nonproviders, for example, scheduled diagnostic procedures or pharmacist appointments for warfarin dosing; (2) visits cancelled by inpatient providers prior to discharge; (3) visits for patients not otherwise eligible for UPHS outpatient care because of insurance reasons; and (4) visits scheduled for dates after a patient’s death. Our motivation for the third exclusion criterion was the infrequent and irregular process by which PDFVs were authorized for these patients. These patients and their characteristics are described in Supplementary Table 1 in more detail.

For each PDFV, we recorded age, gender, race, insurance status, driving distance, length of stay for index hospitalization, discharging service (general internal medicine vs subspecialty), postdischarge disposition (home, home with home care services such as nursing or physical therapy, or facility), the number of PDFVs scheduled per index hospitalization, PDFV specialty type (oncologic subspecialty, nononcologic medical subspecialty, nononcologic surgical subspecialty, primary care, or other specialty), PDFV season, and PDFV lead time (the number of days between the discharge date and PDFV). We consolidated oncologic specialties into 1 group given the integrated nature of our healthcare system’s comprehensive cancer center. “Other” PDFV specialty subtypes are described in Supplementary Table 2. Driving distances between patient postal codes and our hospital were calculated using Excel VBA Master (Salt Lake City, Utah) and were subsequently categorized into patient-level quartiles for further analysis. For cancelled PDFVs, we collected dates of cancellation relative to the date of the appointment itself.
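The two derived variables described above, PDFV lead time and driving distance quartile, can be illustrated with a minimal Python sketch. This is not the authors' code (the study reports using Excel VBA Master for distances and R for analysis). The function names are hypothetical, and the "inclusive" quantile method is an assumption, since the text does not state how quartile boundaries were computed.

```python
from datetime import date
from statistics import quantiles


def lead_time_days(discharge: date, visit: date) -> int:
    """Number of days between the discharge date and the scheduled PDFV."""
    return (visit - discharge).days


def quartile_cutpoints(distances):
    """Three cut points (Q1, median, Q3) over patient-level driving distances.

    Assumption: the 'inclusive' interpolation method; the source does not
    say which quantile definition was used.
    """
    return quantiles(distances, n=4, method="inclusive")


def distance_quartile(distance, cutpoints):
    """Map one driving distance to a quartile label from 1 (nearest) to 4."""
    return 1 + sum(distance > c for c in cutpoints)
```

With this convention, a visit on April 13 after a discharge on April 1 has a lead time of 12 days, and each patient's distance is assigned to a quartile from cut points computed once across all patients.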

Study Outcomes

The primary study outcome was PDFV attendance. Each PDFV’s status was categorized by outpatient clinic staff as attended, cancelled, or NS. For cancelled appointments, cancellation dates and reasons (if entered by clinic representatives) were collected. In keeping with prior studies investigating outpatient nonattendance, we calculated collective NS/SDC rates for the variables listed above.17,22-25 We additionally calculated NS/SDC and attendance-as-scheduled rates stratified by the number of PDFVs per patient to assess for a “high-utilizer” effect with regard to PDFV attendance.
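The combined NS/SDC outcome can be sketched as a simple classification rule. The Python below is illustrative only. The status strings and the function name are hypothetical, and treating a cancellation with no recorded date as an advance cancellation is an assumption that the text does not confirm.

```python
from datetime import date
from typing import Optional


def classify_visit(status: str, visit: date,
                   cancelled_on: Optional[date] = None) -> str:
    """Collapse a clinic-recorded status into the study's outcome groups.

    status is one of 'attended', 'cancelled', or 'no_show' (hypothetical
    labels standing in for the clinic staff's categories).
    """
    if status == "attended":
        return "attended"
    if status == "no_show":
        return "NS/SDC"
    if status == "cancelled":
        # A same-day cancellation (SDC) is pooled with no-shows.
        if cancelled_on is not None and cancelled_on == visit:
            return "NS/SDC"
        # Assumption: a missing cancellation date counts as an advance
        # cancellation.
        return "cancelled in advance"
    raise ValueError(f"unknown status: {status!r}")
```

Pooling NSs with SDCs reflects the fact that both leave a time slot that the clinic is unlikely to refill on short notice.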

Statistical Analysis

We used multivariable mixed-effects regression with a logit link to assess associations between age, gender, race, insurance, driving distance quartile, length of stay, discharging service, postdischarge disposition, the number of PDFVs per hospitalization, PDFV specialty type, PDFV season, PDFV lead time, and our NS/SDC outcome. The mixed-effects approach was used to account for correlation structures induced by patients who had multiple visits and for patients with multiple hospitalizations. Specifically, our model specified 2 levels of nesting (PDFVs nested within each hospitalization, which were nested within each patient) to obtain appropriate standard error estimates for our adjusted odds ratios (ORs). Correlation matrices and multivariable variance inflation factors were used to assess collinearity among the predictor variables. These assessments did not indicate strong collinearity; hence, all predictors were included in the model. Only driving distance had a small amount of missing data (0.18% of driving distances were unavailable), so multiple imputation was not undertaken. Analyses were performed using R version 3.3.1 (R Foundation for Statistical Computing, Vienna, Austria).
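For readers less familiar with logit-link models, an adjusted OR is the exponentiated regression coefficient, and a confidence interval is commonly obtained by exponentiating the coefficient plus or minus a multiple of its standard error. The Python sketch below shows that conversion. It assumes a Wald-type 95% interval, which the text does not specify, and the function name and inputs are hypothetical rather than values from the study.

```python
import math


def odds_ratio_ci(beta: float, se: float, z: float = 1.96):
    """Convert a log-odds coefficient and its standard error into an
    odds ratio with a Wald-type confidence interval.

    Assumption: Wald intervals with z = 1.96 (about 95% coverage); the
    source does not state how its intervals were computed.
    """
    odds_ratio = math.exp(beta)
    lower = math.exp(beta - z * se)
    upper = math.exp(beta + z * se)
    return odds_ratio, lower, upper
```

An interval that excludes 1 corresponds to a coefficient whose Wald interval excludes 0 on the log-odds scale. In a mixed-effects model, the standard error passed in would already reflect the nesting of PDFVs within hospitalizations and hospitalizations within patients.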

RESULTS

Baseline Characteristics

During the 1-year study period, there were 11,829 discrete hospitalizations in medical units at our hospital. Of these hospitalizations, 6136 (52%) had at least 1 UPHS-affiliated PDFV meeting our inclusion and exclusion criteria, as detailed in the Figure. Across these hospitalizations, 9258 PDFVs were scheduled on behalf of 4653 patients. Demographic characteristics for these patients, hospitalizations, and visits are detailed in Table 1. The median age of patients in our cohort was 61 years old (interquartile range [IQR] 49-70, range 18-101). The median driving distance was 17 miles (IQR 4.3-38.8, range 0-2891). For hospitalizations, the median length of stay was 5 days (IQR 3-10, range 0-97). The median PDFV lead time, which is defined as the number of days between discharge and PDFV, was 12 days (IQR 6-23, range 1-60). Overall, 41% of patients (n = 1927) attended all of their PDFVs as scheduled; Supplementary Figure 1 lists patient-level PDFV attendance-as-scheduled percentages in more detail.

Incidence of NSs and SDCs

Twenty-five percent of PDFVs (n = 2303) were ultimately NS/SDCs; this included 1658 NSs (18% of all appointments) and 645 SDCs (7% of all appointments). Fifty-two percent of PDFVs (n = 4847) were kept as scheduled, while 23% (n = 2108) were cancelled before the day of the visit. Of the 2558 cancellations with valid cancellation dates, 49% (n = 1252) were cancelled 2 or fewer days beforehand, as shown in Supplementary Figure 2.
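The percentages in this paragraph follow directly from the reported counts. The short check below recomputes them; the dictionary keys are labels chosen for illustration.

```python
total_pdfvs = 9258

# Counts as reported in the text.
counts = {
    "no_show": 1658,
    "same_day_cancellation": 645,
    "kept_as_scheduled": 4847,
    "cancelled_before_visit_day": 2108,
}

# The four categories account for every scheduled PDFV.
counts_sum_to_total = sum(counts.values()) == total_pdfvs

# Whole-number percentages of all PDFVs.
percentages = {name: round(100 * n / total_pdfvs) for name, n in counts.items()}
ns_sdc_percent = round(
    100 * (counts["no_show"] + counts["same_day_cancellation"]) / total_pdfvs
)
```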

The presence of exactly 2 PDFVs per hospitalization was also associated with higher NS/SDC rates (OR 1.17, 95% CI, 1.01-1.36), compared to a single PDFV per hospitalization; however, the presence of more than 2 PDFVs per hospitalization was associated with lower NS/SDC rates (OR 0.82, 95% CI, 0.69-0.98). A separate analysis (data not shown) of potential high utilizers revealed a 15% NS/SDC rate for the top 0.5% of patients (median: 18 PDFVs each) and an 18% NS/SDC rate for the top 1% of patients (median: 14 PDFVs each) with regard to the numbers of PDFVs scheduled, compared to the 25% overall NS/SDC rate for all patients.

NS/SDC rates and adjusted ORs with regard to individual PDFV characteristics are displayed in Table 3. Nononcologic visits had higher NS/SDC rates than oncologic visits; for example, the NS/SDC rate for primary care visits was 39% (OR 2.62, 95% CI, 2.03-3.38), compared to 12% for oncologic visits. Appointments in the “other” specialty category also had high nonattendance rates, as further described in Supplementary Table 2. Summertime appointments were more likely to be attended (OR 0.81, 95% CI, 0.68-0.97) compared to those in the spring. PDFV lead time (the time interval between the discharge date and appointment date) was not associated with changes in visit attendance.

DISCUSSION

When comparing PDFV characteristics themselves, oncologic visits had the lowest NS/SDC incidence of any group analyzed in our study. This may be related to the inherent life-altering nature of a cancer diagnosis or our cancer center’s use of patient navigators.23,30 In contrast, primary care clinics suffered from NS/SDC rates approaching 40%, which is a concerning finding given the importance of primary care coordination in the posthospitalization period.9,31 Why are primary care appointments so commonly missed? Some studies suggest that forgetting about a primary care appointment is a leading reason.15,32,33 For PDFVs, this phenomenon may be augmented because the visits are not scheduled by patients themselves. Additionally, patients may paradoxically undervalue the benefit of an all-encompassing primary care visit compared to a PDFV focused on a specific problem (eg, a cardiology follow-up appointment for a patient with congestive heart failure). In particular, patients with limited health literacy may undervalue the capabilities of their primary care clinics.34,35

The low absolute number of primary care PDFVs (only 8% of all visits) scheduled for patients at our hospital was an unexpected finding. This low percentage is likely a function of the patient population hospitalized at our large, urban quaternary-care facility. First, an unknown number of patients may have had PDFVs manually scheduled with primary care providers external to our health system; these PDFVs were not captured within our study. Second, 71% of the hospitalizations in our study occurred in subspecialty services, for which specific primary care follow-up may not be as urgent. Supporting this fact, further analysis of the 6136 hospitalizations in our study (data not shown) revealed that 28% of the hospitalizations in general internal medicine were scheduled with at least 1 primary care PDFV as opposed to only 5% of subspecialty-service hospitalizations.

In contrast to several previous studies of outpatient nonattendance, we did not find that visits scheduled for time points further in the future were more likely to be missed.14,24,25,36,37 PDFV lead time may not affect attendance because of the unique manner in which PDFV times are scheduled and conveyed to patients. Unlike other appointments, patients do not schedule PDFVs themselves but instead learn about their PDFV dates as part of a large set of discharge instructions. This practice may result in poor recall of PDFV dates in recently hospitalized patients,38 regardless of the lead time between discharge and the visit itself.

Supplementary Table 1 details a 51% NS/SDC rate for the small number of PDFVs (n = 65) that were excluded a priori from our analysis because of general ineligibility for UPHS outpatient care. We specifically chose to exclude this population because of the infrequent and irregular process by which these PDFVs were authorized on a case-by-case basis, typically via active engagement by our hospital’s social work department. We did not study this population further but postulate that the 51% NS/SDC rate may reflect other social determinants of health that contribute to appointment nonadherence in a predominantly uninsured population.

Beyond its effect on patient outcomes, improving PDFV-related processes has the potential to boost both inpatient and outpatient provider satisfaction. From the standpoint of frontline inpatient providers (often resident physicians), calling outpatient clinics to request PDFVs is viewed as 1 of the top 5 administrative tasks that interfere with house staff education.39 Future interventions that involve patients in the PDFV scheduling process may improve inpatient workflow while simultaneously engaging patients in their own care. For example, asking clinic representatives to directly schedule PDFVs with hospitalized patients, either by phone or in person, has been shown in pilot studies to improve PDFV attendance and decrease readmissions.40-42 Conversely, NS/SDC visits harm outpatient provider productivity and decrease provider availability for other patients.13,17,43 Strategies to mitigate the impact of unfilled appointment slots (eg, deliberately overbooking time slots in advance) carry their own risks, including provider burnout.44 As such, preventing NSs may be superior to curing their adverse impacts. Many such strategies exist in the ambulatory setting,13,43,45 for example, better communication with patients through texting or goal-directed, personalized phone reminders.46-48

Our study methodology has several limitations. Most importantly, we were unable to measure PDFVs made with providers unaffiliated with UPHS. As previously noted, our low proportion of primary care PDFVs may specifically reflect patients with primary care providers outside of our health system. Similarly, our low percentage of Medicaid patients receiving PDFVs may be related to follow-up visits with nonaffiliated community health centers. We were unable to measure patient acuity and health literacy as potential predictors of NS/SDC rates. Driving distances were calculated from patient postal codes to our hospital, not to individual outpatient clinics. However, the majority of our hospital-affiliated clinics are located adjacent to our hospital; additionally, we grouped driving distances into quartiles for our analysis. We had initially attempted to differentiate between clinic-initiated and patient-initiated cancellations, but unfortunately, we found that the data were too unreliable to be used for further analysis (outlined in Supplementary Table 3). Lastly, because we studied patients in medical units at a single large, urban, academic center, our results are not generalizable to other settings (eg, community hospitals, hospitals with smaller networks of outpatient providers, or patients being discharged from surgical services or observation units).

CONCLUSION

Given national efforts to enhance postdischarge transitions of care, we aimed to analyze attendance at provider-scheduled PDFV appointments. Our finding that 25% of PDFVs resulted in NS/SDCs raises both questions and opportunities for inpatient and outpatient providers. Further research is needed to understand why so many patients miss their PDFVs, and we should work as a field to develop creative solutions to improve PDFV scheduling and attendance.

Acknowledgments

The authors acknowledge Marie Synnestvedt, PhD, and Manik Chhabra, MD, for their assistance with data gathering and statistical analysis. They also acknowledge Allison DeKosky, MD, Michael Serpa, BS, Michael McFall, and Scott Schlegel, MBA, for their assistance with researching this topic. They did not receive external compensation for their assistance outside of their usual salary support.

DISCLOSURE

Nothing to report.

1. Halasyamani L, Kripalani S, Coleman E, et al. Transition of care for hospitalized elderly patients - development of a discharge checklist for hospitalists. J Hosp Med. 2006;1(6):354-360. PubMed

2. Koehler BE, Richter KM, Youngblood L, et al. Reduction of 30-day postdischarge hospital readmission or emergency department (ED) visit rates in high-risk elderly medical patients through delivery of a targeted care bundle. J Hosp Med. 2009;4(4):211-218. PubMed

3. Soong C, Daub S, Lee JG, et al. Development of a checklist of safe discharge practices for hospital patients. J Hosp Med. 2013;8(8):444-449. PubMed

4. Rice YB, Barnes CA, Rastogi R, Hillstrom TJ, Steinkeler CN. Tackling 30-day, all-cause readmissions with a patient-centered transitional care bundle. Popul Health Manag. 2016;19(1):56-62. PubMed

5. Nelson EA, Maruish MM, Axler JL. Effects of discharge planning and compliance with outpatient appointments on readmission rates. Psych Serv. 2000;51(7):885-889. PubMed

6. Gavish R, Levy A, Dekel OK, Karp E, Maimon N. The association between hospital readmission and pulmonologist follow-up visits in patients with chronic obstructive pulmonary disease. Chest. 2015;148(2):375-381. PubMed

7. Jackson C, Shahsahebi M, Wedlake T, DuBard CA. Timeliness of outpatient follow-up: an evidence-based approach for planning after hospital discharge. Ann Fam Med. 2015;13(2):115-122. PubMed

8. Donaho EK, Hall AC, Gass JA, et al. Protocol-driven allied health post-discharge transition clinic to reduce hospital readmissions in heart failure. J Am Heart Assoc. 2015;4(12):e002296. PubMed

9. Misky GJ, Wald HL, Coleman EA. Post-hospitalization transitions: Examining the effects of timing of primary care provider follow-up. J Hosp Med. 2010;5(7):392-397. PubMed

10. Grafft CA, McDonald FS, Ruud KL, Liesinger JT, Johnson MG, Naessens JM. Effect of hospital follow-up appointment on clinical event outcomes and mortality. Arch Intern Med. 2010;171(11):955-960. PubMed

11. Auerbach AD, Kripalani S, Vasilevskis EE, et al. Preventability and causes of readmissions in a national cohort of general medicine patients. JAMA Intern Med. 2016;176(4):484-493. PubMed

12. Field TS, Ogarek J, Garber L, Reed G, Gurwitz JH. Association of early post-discharge follow-up by a primary care physician and 30-day rehospitalization among older adults. J Gen Intern Med. 2015;30(5):565-571. PubMed

13. Quinn K. It’s no-show time! Med Group Manage Assoc Connexion. 2007;7(6):44-49. PubMed

14. Whittle J, Schectman G, Lu N, Baar B, Mayo-Smith MF. Relationship of scheduling interval to missed and cancelled clinic appointments. J Ambulatory Care Manage. 2008;31(4):290-302. PubMed

15. Kaplan-Lewis E, Percac-Lima S. No-show to primary care appointments: Why patients do not come. J Prim Care Community Health. 2013;4(4):251-255. PubMed

16. Molfenter T. Reducing appointment no-shows: Going from theory to practice. Subst Use Misuse. 2013;48(9):743-749. PubMed

17. Kheirkhah P, Feng Q, Travis LM, Tavakoli-Tabasi S, Sharafkhaneh A. Prevalence, predictors and economic consequences of no-shows. BMC Health Serv Res. 2016;16(1):13. PubMed

18. Colubi MM, Perez-Elias MJ, Elias L, et al. Missing scheduled visits in the outpatient clinic as a marker of short-term admissions and death. HIV Clin Trials. 2012;13(5):289-295. PubMed

19. Obialo CI, Hunt WC, Bashir K, Zager PG. Relationship of missed and shortened hemodialysis treatments to hospitalization and mortality: Observations from a US dialysis network. Clin Kidney J. 2012;5(4):315-319. PubMed

20. Hwang AS, Atlas SJ, Cronin P, et al. Appointment “no-shows” are an independent predictor of subsequent quality of care and resource utilization outcomes. J Gen Intern Med. 2015;30(10):1426-1433. PubMed

21. Perez FD, Xie J, Sin A, et al. Characteristics and direct costs of academic pediatric subspecialty outpatient no-show events. J Healthc Qual. 2014;36(4):32-42. PubMed

22. Huang Y, Zuniga P. Effective cancellation policy to reduce the negative impact of patient no-show. Journal of the Operational Research Society. 2013;65(5):605-615.

23. Percac-Lima S, Cronin PR, Ryan DP, Chabner BA, Daly EA, Kimball AB. Patient navigation based on predictive modeling decreases no-show rates in cancer care. Cancer. 2015;121(10):1662-1670. PubMed

24. Torres O, Rothberg MB, Garb J, Ogunneye O, Onyema J, Higgins T. Risk factor model to predict a missed clinic appointment in an urban, academic, and underserved setting. Popul Health Manag. 2015;18(2):131-136. PubMed

25. Eid WE, Shehata SF, Cole DA, Doerman KL. Predictors of nonattendance at an endocrinology outpatient clinic. Endocr Pract. 2016;22(8):983-989. PubMed

26. Kashiwagi DT, Burton MC, Kirkland LL, Cha S, Varkey P. Do timely outpatient follow-up visits decrease hospital readmission rates? Am J Med Qual. 2012;27(1):11-15. PubMed

27. Miller AJ, Chae E, Peterson E, Ko AB. Predictors of repeated “no-showing” to clinic appointments. Am J Otolaryngol. 2015;36(3):411-414. PubMed

28. ASCO. Billing challenges for residents of Skilled Nursing Facilities. J Oncol Pract. 2008;4(5):245-248. PubMed

29. Centers for Medicare & Medicaid Services (2013). “SE0433: Skilled Nursing Facility consolidated billing as it relates to ambulance services.” Medicare Learning Network Matters. https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNMattersArticles/downloads/se0433.pdf. Accessed on February 14, 2017.

30. Luckett R, Pena N, Vitonis A, Bernstein MR, Feldman S. Effect of patient navigator program on no-show rates at an academic referral colposcopy clinic. J Womens Health (Larchmt). 2015;24(7):608-615. PubMed

31. Jones CD, Vu MB, O’Donnell CM, et al. A failure to communicate: A qualitative exploration of care coordination between hospitalists and primary care providers around patient hospitalizations. J Gen Intern Med. 2015;30(4):417-424. PubMed

32. George A, Rubin G. Non-attendance in general practice: a systematic review and its implications for access to primary health care. Fam Pract. 2003;20(2):178-184. PubMed

33. Samuels RC, Ward VL, Melvin P, et al. Missed Appointments: Factors Contributing to High No-Show Rates in an Urban Pediatrics Primary Care Clinic. Clin Pediatr (Phila). 2015;54(10):976-982. PubMed

34. Kangovi S, Barg FK, Carter T, Long JA, Shannon R, Grande D. Understanding why patients of low socioeconomic status prefer hospitals over ambulatory care. Health Aff (Millwood). 2013;32(7):1196-1203. PubMed

35. Long T, Genao I, Horwitz LI. Reasons for readmission in an underserved high-risk population: A qualitative analysis of a series of inpatient interviews. BMJ Open. 2013;3(9):e003212. PubMed

36. Lee VJ, Earnest A, Chen MI, Krishnan B. Predictors of failed attendances in a multi-specialty outpatient centre using electronic databases. BMC Health Serv Res. 2005;5:51. PubMed

37. Daggy J, Lawley M, Willis D, et al. Using no-show modeling to improve clinic performance. Health Informatics J. 2010;16(4):246-259. PubMed

38. Horwitz LI, Moriarty JP, Chen C, et al. Quality of discharge practices and patient understanding at an academic medical center. JAMA Intern Med. 2013;173(18):1715-1722. PubMed

39. Vidyarthi AR, Katz PP, Wall SD, Wachter RM, Auerbach AD. Impact of reduced duty hours on residents’ education satisfaction at the University of California, San Francisco. Acad Med. 2006;81(1):76-81. PubMed

40. Coffey C, Kufta J. Patient-centered post-discharge appointment scheduling improves readmission rates. Paper presented at: Society of Hospital Medicine, Annual Meeting 2011; Grapevine, Texas.

41. Chang R, Spahlinger D, Kim CS. Re-engineering the post-discharge appointment process for general medicine patients. Patient. 2012;5(1):27-32. PubMed

42. Haftka A, Cerasale MT, Paje D. Direct patient participation in discharge follow-up appointment scheduling. Paper presented at: Society of Hospital Medicine, Annual Meeting 2015; National Harbor, MD.

43. Stubbs ND, Geraci SA, Stephenson PL, Jones DB, Sanders S. Methods to reduce outpatient non-attendance. Am J Med Sci. 2012;344(3):211-219. PubMed

44. Kros J, Dellana S, West D. Overbooking Increases Patient Access at East Carolina University’s Student Health Services Clinic. Interfaces. 2009;39(3):271-287.

45. Hills LS. How to handle patients who miss appointments or show up late. J Med Practice Management. 2009;25(3):166-170. PubMed

46. Price H, Waters AM, Mighty D, et al. Texting appointment reminders reduces ‘Did not attend’ rates, is popular with patients and is cost-effective. Int J STD AIDS. 2009;20:142-144. PubMed

47. Parikh A, Gupta K, Wilson AC, Fields K, Cosgrove NM, Kostis JB. The effectiveness of outpatient appointment reminder systems in reducing no-show rates. Am J Med. 2010;123(6):542-548. PubMed

48. Shah SJ, Cronin P, Hong CS, et al. Targeted reminder phone calls to patients at high risk of no-show for primary care appointment: A randomized trial. J Gen Intern Med. 2016;31(12):1460-1466. PubMed

Given growing incentives to reduce readmission rates, predischarge checklists and bundles have recommended that inpatient providers schedule postdischarge follow-up visits (PDFVs) for their hospitalized patients.1-4 PDFVs have been linked to lower readmission rates in patients with chronic conditions, including congestive heart failure, psychiatric illnesses, and chronic obstructive pulmonary disease.5-8 In contrast, the impact of PDFVs on readmissions in hospitalized general medicine populations has been mixed.9-12 Beyond the presence or absence of PDFVs, it may be a patient’s inability to keep scheduled PDFVs that contributes more strongly to preventable readmissions.11

This challenge, dealing with the 12% to 37% of patients who miss their visits (“no-shows”), is not new.13-17 In high-risk patient populations, such as those with substance abuse, diabetes, or human immunodeficiency virus, no-shows (NSs) have been linked to poorer short-term and long-term clinical outcomes.16,18-20 Additionally, NSs pose a challenge for outpatient clinics and the healthcare system at large. The financial cost of NSs ranges from approximately $200 per patient in 2 analyses to $7 million in cumulative lost revenue per year at 1 large academic health system.13,17,21 As such, increasing attendance at PDFVs is a potential target for improving both patient outcomes and clinic productivity.

Most prior PDFV research has focused on readmission risk rather than PDFV attendance as the primary outcome.5-12 However, given the patient-oriented benefits of attending PDFVs and the clinic-oriented benefits of avoiding vacant time slots, NS PDFVs represent an important missed opportunity for our healthcare delivery system. To our knowledge, risk factors for PDFV nonattendance have not yet been systematically studied. The aim of our study was to analyze PDFV nonattendance, particularly NSs and same-day cancellations (SDCs), for hospitalizations and clinics within our healthcare system.

METHODS

Study Design

We conducted an observational cohort study of adult patients from 10 medical units at the Hospital of the University of Pennsylvania (a 789-bed quaternary-care hospital within an urban, academic medical system) who were scheduled with at least 1 PDFV. Specifically, the patients included in our analysis were hospitalized on general internal medicine services or medical subspecialty services with discharge dates between April 1, 2014, and March 31, 2015. Hospitalizations included in our study had at least 1 PDFV scheduled with an outpatient provider affiliated with the University of Pennsylvania Health System (UPHS). PDFVs scheduled with unaffiliated providers were not examined.

Each PDFV was requested by a patient’s inpatient care team. Once the care team had determined that a PDFV was clinically warranted, a member of the team (generally a resident, advanced practice provider, medical student, or designee) either called the UPHS clinic to schedule an appointment time or e-mailed the outpatient UPHS provider directly to facilitate a more urgent PDFV appointment time. Once a PDFV time was confirmed, PDFV details (ie, date, time, location, and phone number) were electronically entered into the patient’s discharge instructions by the inpatient care team. At the time of discharge, nurses reviewed these instructions with their patients. All patients left the hospital with a physical copy of these instructions. As part of routine care at our institution, patients then received automated telephone reminders from their UPHS-affiliated outpatient clinic 48 hours prior to each PDFV.

Data Collection

Our study was determined to meet criteria for quality improvement by the University of Pennsylvania’s Institutional Review Board. We used our healthcare system’s integrated electronic medical record system to track the dates of initial PDFV requests, the dates of hospitalization, and actual PDFV dates. PDFVs were included if the appointment request was made while a patient was hospitalized, including the day of discharge. Our study methodology only allowed us to investigate PDFVs scheduled with UPHS outpatient providers. We did not review discharge instructions or survey non-UPHS clinics to quantify visits scheduled with other providers, for example, community health centers or external private practices.

Exclusion criteria included the following: (1) office visits with nonproviders, for example, scheduled diagnostic procedures or pharmacist appointments for warfarin dosing; (2) visits cancelled by inpatient providers prior to discharge; (3) visits for patients not otherwise eligible for UPHS outpatient care because of insurance reasons; and (4) visits scheduled for dates after a patient’s death. Our motivation for the third exclusion criterion was the infrequent and irregular process by which PDFVs were authorized for these patients. These patients and their characteristics are described in Supplementary Table 1 in more detail.

For each PDFV, we recorded age, gender, race, insurance status, driving distance, length of stay for index hospitalization, discharging service (general internal medicine vs subspecialty), postdischarge disposition (home, home with home care services such as nursing or physical therapy, or facility), the number of PDFVs scheduled per index hospitalization, PDFV specialty type (oncologic subspecialty, nononcologic medical subspecialty, nononcologic surgical subspecialty, primary care, or other specialty), PDFV season, and PDFV lead time (the number of days between the discharge date and PDFV). We consolidated oncologic specialties into 1 group given the integrated nature of our healthcare system’s comprehensive cancer center. “Other” PDFV specialty subtypes are described in Supplementary Table 2. Driving distances between patient postal codes and our hospital were calculated using Excel VBA Master (Salt Lake City, Utah) and were subsequently categorized into patient-level quartiles for further analysis. For cancelled PDFVs, we collected dates of cancellation relative to the date of the appointment itself.

Study Outcomes

The primary study outcome was PDFV attendance. Each PDFV’s status was categorized by outpatient clinic staff as attended, cancelled, or NS. For cancelled appointments, cancellation dates and reasons (if entered by clinic representatives) were collected. In keeping with prior studies investigating outpatient nonattendance,we calculated collective NS/SDC rates for the variables listed above.17,22-25 We additionally calculated NS/SDC and attendance-as-scheduled rates stratified by the number of PDFVs per patient to assess for a “high-utilizer” effect with regard to PDFV attendance.

Statistical Analysis

We used multivariable mixed-effects regression with a logit link to assess associations between age, gender, race, insurance, driving distance quartile, length of stay, discharging service, postdischarge disposition, the number of PDFVs per hospitalization, PDFV specialty type, PDFV season, PDFV lead time, and our NS/SDC outcome. The mixed-effects approach was used to account for correlation structures induced by patients who had multiple visits and for patients with multiple hospitalizations. Specifically, our model specified 2 levels of nesting (PDFVs nested within each hospitalization, which were nested within each patient) to obtain appropriate standard error estimates for our adjusted odds ratios (ORs). Correlation matrices and multivariable variance inflation factors were used to assess collinearity among the predictor variables. These assessments did not indicate strong collinearity; hence, all predictors were included in the model. Only driving distance had a small amount of missing data (0.18% of driving distances were unavailable), so multiple imputation was not undertaken. Analyses were performed using R version 3.3.1 (R Foundation for Statistical Computing, Vienna, Austria).

RESULTS

Baseline Characteristics

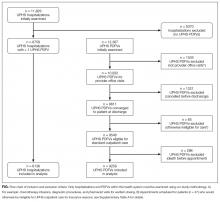

During the 1-year study period, there were 11,829 discrete hospitalizations in medical units at our hospital. Of these hospitalizations, 6136 (52%) had at least 1 UPHS-affiliated PDFV meeting our inclusion and exclusion criteria, as detailed in the Figure. Across these hospitalizations, 9258 PDFVs were scheduled on behalf of 4653 patients. Demographic characteristics for these patients, hospitalizations, and visits are detailed in Table 1. The median age of patients in our cohort was 61 years old (interquartile range [IQR] 49-70, range 18-101). The median driving distance was 17 miles (IQR 4.3-38.8, range 0-2891). For hospitalizations, the median length of stay was 5 days (IQR 3-10, range 0-97). The median PDFV lead time, which is defined as the number of days between discharge and PDFV, was 12 days (IQR 6-23, range 1-60). Overall, 41% of patients (n = 1927) attended all of their PDFVs as scheduled; Supplementary Figure 1 lists patient-level PDFV attendance-as-scheduled percentages in more detail.

Incidence of NSs and SDCs

Twenty-five percent of PDFVs (n = 2303) were ultimately NS/SDCs; this included 1658 NSs (18% of all appointments) and 645 SDCs (7% of all appointments). Fifty-two percent of PDFVs (n = 4847) were kept as scheduled, while 23% (n = 2108) were cancelled before the day of the visit. Of the 2558 cancellations with valid cancellation dates, 49% (n = 1252) were cancelled 2 or fewer days beforehand, as shown in Supplementary Figure 2.

The presence of exactly 2 PDFVs per hospitalization was also associated with higher NS/SDC rates (OR 1.17, 95% CI, 1.01-1.36), compared to a single PDFV per hospitalization; however, the presence of more than 2 PDFVs per hospitalization was associated with lower NS/SDC rates (OR 0.82, 95% CI, 0.69-0.98). A separate analysis (data not shown) of potential high utilizers revealed a 15% NS/SDC rate for the top 0.5% of patients (median: 18 PDFVs each) and an 18% NS/SDC rate for the top 1% of patients (median: 14 PDFVs each) with regard to the numbers of PDFVs scheduled, compared to the 25% overall NS/SDC rate for all patients.

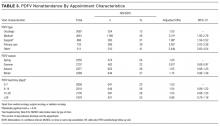

NS/SDC rates and adjusted ORs with regard to individual PDFV characteristics are displayed in Table 3. Nononcologic visits had higher NS/SDC rates than oncologic visits; for example, the NS/SDC rate for primary care visits was 39% (OR 2.62, 95% CI, 2.03-3.38), compared to 12% for oncologic visits. Appointments in the “other” specialty category also had high nonattendance rates, as further described in Supplementary Table B. Summertime appointments were more likely to be attended (OR 0.81, 95% CI, 0.68-0.97) compared to those in the spring. PDFV lead time (the time interval between the discharge date and appointment date) was not associated with changes in visit attendance.

DISCUSSION

When comparing PDFV characteristics themselves, oncologic visits had the lowest NS/SDC incidence of any group analyzed in our study. This may be related to the inherent life-altering nature of a cancer diagnosis or our cancer center’s use of patient navigators.23,30 In contrast, primary care clinics suffered from NS/SDC rates approaching 40%, which is a concerning finding given the importance of primary care coordination in the posthospitalization period.9,31 Why are primary care appointments so commonly missed? Some studies suggest that forgetting about a primary care appointment is a leading reason.15,32,33 For PDFVs, this phenomenon may be augmented because the visits are not scheduled by patients themselves. Additionally, patients may paradoxically undervalue the benefit of an all-encompassing primary care visit, compared to a PDFV focused on a specific problem, (eg, a cardiology follow-up appointment for a patient with congestive heart failure). In particular, patients with limited health literacy may potentially undervalue the capabilities of their primary care clinics.34,35

The low absolute number of primary care PDFVs (only 8% of all visits) scheduled for patients at our hospital was an unexpected finding. This low percentage is likely a function of the patient population hospitalized at our large, urban quaternary-care facility. First, an unknown number of patients may have had PDFVs manually scheduled with primary care providers external to our health system; these PDFVs were not captured within our study. Second, 71% of the hospitalizations in our study occurred in subspecialty services, for which specific primary care follow-up may not be as urgent. Supporting this fact, further analysis of the 6136 hospitalizations in our study (data not shown) revealed that 28% of the hospitalizations in general internal medicine were scheduled with at least 1 primary care PDFV as opposed to only 5% of subspecialty-service hospitalizations.

In contrast to several previous studies of outpatient nonattendance,we did not find that visits scheduled for time points further in the future were more likely to be missed.14,24,25,36,37 Unlike other appointments, it may be that PDFV lead time does not affect attendance because of the unique manner in which PDFV times are scheduled and conveyed to patients. Unlike other appointments, patients do not schedule PDFVs themselves but instead learn about their PDFV dates as part of a large set of discharge instructions. This practice may result in poor recall of PDFV dates in recently hospitalized patients38, regardless of the lead time between discharge and the visit itself.

Supplementary Table 1 details a 51% NS/SDC rate for the small number of PDFVs (n = 65) that were excluded a priori from our analysis because of general ineligibility for UPHS outpatient care. We specifically chose to exclude this population because of the infrequent and irregular process by which these PDFVs were authorized on a case-by-case basis, typically via active engagement by our hospital’s social work department. We did not study this population further but postulate that the 51% NS/SDC rate may reflect other social determinants of health that contribute to appointment nonadherence in a predominantly uninsured population.

Beyond its effect on patient outcomes, improving PDFV-related processes has the potential to boost both inpatient and outpatient provider satisfaction. From the standpoint of frontline inpatient providers (often resident physicians), calling outpatient clinics to request PDFVs is viewed as 1 of the top 5 administrative tasks that interfere with house staff education.39 Future interventions that involve patients in the PDFV scheduling process may improve inpatient workflow while simultaneously engaging patients in their own care. For example, asking clinic representatives to directly schedule PDFVs with hospitalized patients, either by phone or in person, has been shown in pilot studies to improve PDFV attendance and decrease readmissions.40-42 Conversely, NS/SDC visits harm outpatient provider productivity and decrease provider availability for other patients.13,17,43 Strategies to mitigate the impact of unfilled appointment slots (eg, deliberately overbooking time slots in advance) carry their own risks, including provider burnout.44 As such, preventing NSs may be superior to curing their adverse impacts. Many such strategies exist in the ambulatory setting,13,43,45 for example, better communication with patients through texting or goal-directed, personalized phone reminders.46-48

Our study methodology has several limitations. Most importantly, we were unable to measure PDFVs made with providers unaffiliated with UPHS. As previously noted, our low proportion of primary care PDFVs may specifically reflect patients with primary care providers outside of our health system. Similarly, our low percentage of Medicaid patients receiving PDFVs may be related to follow-up visits with nonaffiliated community health centers. We were unable to measure patient acuity and health literacy as potential predictors of NS/SDC rates. Driving distances were calculated from patient postal codes to our hospital, not to individual outpatient clinics. However, the majority of our hospital-affiliated clinics are located adjacent to our hospital; additionally, we grouped driving distances into quartiles for our analysis. We had initially attempted to differentiate between clinic-initiated and patient-initiated cancellations, but unfortunately, we found that the data were too unreliable to be used for further analysis (outlined in Supplementary Table 3). Lastly, because we studied patients in medical units at a single large, urban, academic center, our results may not be generalizable to other settings (eg, community hospitals, hospitals with smaller networks of outpatient providers, or patients being discharged from surgical services or observation units).

CONCLUSION

Given national efforts to enhance postdischarge transitions of care, we aimed to analyze attendance at provider-scheduled PDFV appointments. Our finding that 25% of PDFVs resulted in NS/SDCs raises both questions and opportunities for inpatient and outpatient providers. Further research is needed to understand why so many patients miss their PDFVs, and we should work as a field to develop creative solutions to improve PDFV scheduling and attendance.

Acknowledgments

The authors acknowledge Marie Synnestvedt, PhD, and Manik Chhabra, MD, for their assistance with data gathering and statistical analysis. They also acknowledge Allison DeKosky, MD, Michael Serpa, BS, Michael McFall, and Scott Schlegel, MBA, for their assistance with researching this topic. They did not receive external compensation for their assistance outside of their usual salary support.

DISCLOSURE

Nothing to report.

Given growing incentives to reduce readmission rates, predischarge checklists and bundles have recommended that inpatient providers schedule postdischarge follow-up visits (PDFVs) for their hospitalized patients.1-4 PDFVs have been linked to lower readmission rates in patients with chronic conditions, including congestive heart failure, psychiatric illnesses, and chronic obstructive pulmonary disease.5-8 In contrast, the impact of PDFVs on readmissions in hospitalized general medicine populations has been mixed.9-12 Beyond the presence or absence of PDFVs, it may be a patient’s inability to keep scheduled PDFVs that contributes more strongly to preventable readmissions.11

This challenge, dealing with the 12% to 37% of patients who miss their visits (“no-shows”), is not new.13-17 In high-risk patient populations, such as those with substance abuse, diabetes, or human immunodeficiency virus, no-shows (NSs) have been linked to poorer short-term and long-term clinical outcomes.16,18-20 Additionally, NSs pose a challenge for outpatient clinics and the healthcare system at large. The financial cost of NSs ranges from approximately $200 per patient in 2 analyses to $7 million in cumulative lost revenue per year at 1 large academic health system.13,17,21 As such, increasing attendance at PDFVs is a potential target for improving both patient outcomes and clinic productivity.

Most prior PDFV research has focused on readmission risk rather than PDFV attendance as the primary outcome.5-12 However, given the patient-oriented benefits of attending PDFVs and the clinic-oriented benefits of avoiding vacant time slots, NS PDFVs represent an important missed opportunity for our healthcare delivery system. To our knowledge, risk factors for PDFV nonattendance have not yet been systematically studied. The aim of our study was to analyze PDFV nonattendance, particularly NSs and same-day cancellations (SDCs), for hospitalizations and clinics within our healthcare system.

METHODS

Study Design

We conducted an observational cohort study of adult patients from 10 medical units at the Hospital of the University of Pennsylvania (a 789-bed quaternary-care hospital within an urban, academic medical system) who were scheduled with at least 1 PDFV. Specifically, the patients included in our analysis were hospitalized on general internal medicine services or medical subspecialty services with discharge dates between April 1, 2014, and March 31, 2015. Hospitalizations included in our study had at least 1 PDFV scheduled with an outpatient provider affiliated with the University of Pennsylvania Health System (UPHS). PDFVs scheduled with unaffiliated providers were not examined.

Each PDFV was requested by a patient’s inpatient care team. Once the care team had determined that a PDFV was clinically warranted, a member of the team (generally a resident, advanced practice provider, medical student, or designee) either called the UPHS clinic to schedule an appointment time or e-mailed the outpatient UPHS provider directly to facilitate a more urgent PDFV appointment time. Once a PDFV time was confirmed, PDFV details (ie, date, time, location, and phone number) were electronically entered into the patient’s discharge instructions by the inpatient care team. At the time of discharge, nurses reviewed these instructions with their patients. All patients left the hospital with a physical copy of these instructions. As part of routine care at our institution, patients then received automated telephone reminders from their UPHS-affiliated outpatient clinic 48 hours prior to each PDFV.

Data Collection

Our study was determined to meet criteria for quality improvement by the University of Pennsylvania’s Institutional Review Board. We used our healthcare system’s integrated electronic medical record system to track the dates of initial PDFV requests, the dates of hospitalization, and actual PDFV dates. PDFVs were included if the appointment request was made while a patient was hospitalized, including the day of discharge. Our study methodology only allowed us to investigate PDFVs scheduled with UPHS outpatient providers. We did not review discharge instructions or survey non-UPHS clinics to quantify visits scheduled with other providers, for example, community health centers or external private practices.

Exclusion criteria included the following: (1) office visits with nonproviders, for example, scheduled diagnostic procedures or pharmacist appointments for warfarin dosing; (2) visits cancelled by inpatient providers prior to discharge; (3) visits for patients not otherwise eligible for UPHS outpatient care because of insurance reasons; and (4) visits scheduled for dates after a patient’s death. Our motivation for the third exclusion criterion was the infrequent and irregular process by which PDFVs were authorized for these patients. These patients and their characteristics are described in Supplementary Table 1 in more detail.

For each PDFV, we recorded age, gender, race, insurance status, driving distance, length of stay for index hospitalization, discharging service (general internal medicine vs subspecialty), postdischarge disposition (home, home with home care services such as nursing or physical therapy, or facility), the number of PDFVs scheduled per index hospitalization, PDFV specialty type (oncologic subspecialty, nononcologic medical subspecialty, nononcologic surgical subspecialty, primary care, or other specialty), PDFV season, and PDFV lead time (the number of days between the discharge date and PDFV). We consolidated oncologic specialties into 1 group given the integrated nature of our healthcare system’s comprehensive cancer center. “Other” PDFV specialty subtypes are described in Supplementary Table 2. Driving distances between patient postal codes and our hospital were calculated using Excel VBA Master (Salt Lake City, Utah) and were subsequently categorized into patient-level quartiles for further analysis. For cancelled PDFVs, we collected dates of cancellation relative to the date of the appointment itself.
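
As a rough illustration of two of the derived variables described above, PDFV lead time and driving-distance quartile, the following Python sketch shows one way they could be computed. It is not the procedure used in the study (driving distances were calculated with Excel VBA Master and the analysis was run in R); the function names and sample values are hypothetical, and the quartile cut points depend on the quantile method chosen.

```python
from bisect import bisect_right
from datetime import date
from statistics import quantiles


def lead_time_days(discharge: date, visit: date) -> int:
    """Number of days between the discharge date and the PDFV."""
    return (visit - discharge).days


def distance_quartiles(distances: list[float]) -> list[int]:
    """Assign each driving distance to a quartile (1 = shortest, 4 = longest)."""
    cuts = quantiles(distances, n=4)  # three cut points separating four groups
    return [bisect_right(cuts, d) + 1 for d in distances]


# Hypothetical values, for illustration only (not study data).
print(lead_time_days(date(2014, 4, 1), date(2014, 4, 13)))
print(distance_quartiles([1, 2, 3, 4, 5, 6, 7, 8]))
```

Because the study categorized distances into patient-level quartiles, the list passed to a function like `distance_quartiles` would hold one distance per patient rather than one per visit.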

Study Outcomes

The primary study outcome was PDFV attendance. Each PDFV’s status was categorized by outpatient clinic staff as attended, cancelled, or NS. For cancelled appointments, cancellation dates and reasons (if entered by clinic representatives) were collected. In keeping with prior studies investigating outpatient nonattendance, we calculated collective NS/SDC rates for the variables listed above.17,22-25 We additionally calculated NS/SDC and attendance-as-scheduled rates stratified by the number of PDFVs per patient to assess for a “high-utilizer” effect with regard to PDFV attendance.
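
The combined NS/SDC outcome can be sketched as a simple classification rule. The Python below is a minimal sketch under stated assumptions: the status labels, the function name, and the treatment of cancellations without a recorded date (counted here as not same-day) are hypothetical choices for illustration and are not described in the study.

```python
from datetime import date
from typing import Optional


def is_ns_sdc(status: str, visit: date, cancelled_on: Optional[date] = None) -> bool:
    """Return True if a visit counts toward the combined NS/SDC outcome."""
    if status == "NS":
        return True
    if status == "cancelled" and cancelled_on is not None:
        # A cancellation made on the day of the visit is a same-day cancellation.
        return cancelled_on == visit
    # Attended visits, earlier cancellations, and (by assumption) cancellations
    # with no recorded date are not counted as NS/SDC.
    return False


# Hypothetical visit, for illustration only.
print(is_ns_sdc("cancelled", date(2014, 5, 1), date(2014, 5, 1)))
```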

Statistical Analysis

We used multivariable mixed-effects regression with a logit link to assess associations between age, gender, race, insurance, driving distance quartile, length of stay, discharging service, postdischarge disposition, the number of PDFVs per hospitalization, PDFV specialty type, PDFV season, PDFV lead time, and our NS/SDC outcome. The mixed-effects approach was used to account for correlation structures induced by patients who had multiple visits and for patients with multiple hospitalizations. Specifically, our model specified 2 levels of nesting (PDFVs nested within each hospitalization, which were nested within each patient) to obtain appropriate standard error estimates for our adjusted odds ratios (ORs). Correlation matrices and multivariable variance inflation factors were used to assess collinearity among the predictor variables. These assessments did not indicate strong collinearity; hence, all predictors were included in the model. Only driving distance had a small amount of missing data (0.18% of driving distances were unavailable), so multiple imputation was not undertaken. Analyses were performed using R version 3.3.1 (R Foundation for Statistical Computing, Vienna, Austria).
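
For readers less familiar with logit-link models, a fitted coefficient on the log-odds scale is converted to an OR by exponentiation, and a Wald-type 95% confidence interval (CI) is obtained by exponentiating the coefficient plus or minus 1.96 standard errors. The Python sketch below shows only this conversion, not the mixed-effects model fit itself, which was performed in R. The function name and the input values are hypothetical, and the use of a Wald interval is an assumption, since the text above does not state how the CIs were computed.

```python
import math


def odds_ratio_ci(beta: float, se: float, z: float = 1.96) -> tuple[float, float, float]:
    """Convert a log-odds coefficient and its standard error to (OR, lower, upper)."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


# Hypothetical coefficient and standard error, for illustration only.
or_, lower, upper = odds_ratio_ci(0.157, 0.076)
print(f"OR {or_:.2f}, 95% CI {lower:.2f}-{upper:.2f}")
```

An interval that excludes 1 on the OR scale corresponds to an interval that excludes 0 on the log-odds scale.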

RESULTS

Baseline Characteristics

During the 1-year study period, there were 11,829 discrete hospitalizations in medical units at our hospital. Of these hospitalizations, 6136 (52%) had at least 1 UPHS-affiliated PDFV meeting our inclusion and exclusion criteria, as detailed in the Figure. Across these hospitalizations, 9258 PDFVs were scheduled on behalf of 4653 patients. Demographic characteristics for these patients, hospitalizations, and visits are detailed in Table 1. The median age of patients in our cohort was 61 years old (interquartile range [IQR] 49-70, range 18-101). The median driving distance was 17 miles (IQR 4.3-38.8, range 0-2891). For hospitalizations, the median length of stay was 5 days (IQR 3-10, range 0-97). The median PDFV lead time, which is defined as the number of days between discharge and PDFV, was 12 days (IQR 6-23, range 1-60). Overall, 41% of patients (n = 1927) attended all of their PDFVs as scheduled; Supplementary Figure 1 lists patient-level PDFV attendance-as-scheduled percentages in more detail.

Incidence of NSs and SDCs

Twenty-five percent of PDFVs (n = 2303) were ultimately NS/SDCs; this included 1658 NSs (18% of all appointments) and 645 SDCs (7% of all appointments). Fifty-two percent of PDFVs (n = 4847) were kept as scheduled, while 23% (n = 2108) were cancelled before the day of the visit. Of the 2558 cancellations with valid cancellation dates, 49% (n = 1252) were cancelled 2 or fewer days beforehand, as shown in Supplementary Figure 2.
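
The percentages above follow directly from the reported counts. The short Python check below recomputes them; the counts are taken from the text, while the variable and function names are hypothetical.

```python
total = 9258  # all scheduled PDFVs
counts = {
    "no-show": 1658,
    "same-day cancellation": 645,
    "kept as scheduled": 4847,
    "cancelled before the day of the visit": 2108,
}


def pct(n: int, denom: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * n / denom)


# NS/SDC is the sum of no-shows and same-day cancellations.
ns_sdc = counts["no-show"] + counts["same-day cancellation"]

for label, n in counts.items():
    print(f"{label}: {n} ({pct(n, total)}%)")
print(f"NS/SDC combined: {ns_sdc} ({pct(ns_sdc, total)}%)")
```

The four categories are mutually exclusive and sum to the 9258 scheduled PDFVs.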

The presence of exactly 2 PDFVs per hospitalization was also associated with higher NS/SDC rates (OR 1.17, 95% CI, 1.01-1.36), compared to a single PDFV per hospitalization; however, the presence of more than 2 PDFVs per hospitalization was associated with lower NS/SDC rates (OR 0.82, 95% CI, 0.69-0.98). A separate analysis (data not shown) of potential high utilizers revealed a 15% NS/SDC rate for the top 0.5% of patients (median: 18 PDFVs each) and an 18% NS/SDC rate for the top 1% of patients (median: 14 PDFVs each) with regard to the numbers of PDFVs scheduled, compared to the 25% overall NS/SDC rate for all patients.