State of Research in Adult Hospital Medicine: Results of a National Survey

Almost all specialties in internal medicine have a sound scientific research base through which clinical practice is informed.1 For the field of Hospital Medicine (HM), this evidence has largely comprised research generated from fields outside of the specialty. The need to develop, invest in, and grow investigators in hospital-based medicine remains unmet as HM and its footprint in hospital systems continue to grow.2,3

Despite this fact, little is known about the current state of research in HM. A 2014 survey of the members of the Society of Hospital Medicine (SHM) found that research output across the field of HM, as measured on the basis of peer-reviewed publications, was growing.4 Since then, however, the numbers of individuals engaged in research activities, their background and training, publication output, or funding sources have not been quantified. Similarly, little is known about which institutions support the development of junior investigators (ie, HM research fellowships), how these programs are funded, and whether or not matriculants enter the field as investigators. These gaps must be measured, evaluated, and ideally addressed through strategic policy and funding initiatives to advance the state of science within HM.

Members of the SHM Research Committee developed, designed, and deployed a survey to improve the understanding of the state of research in HM. In this study, we aimed to establish the baseline of research in HM to enable the measurement of progress through periodic waves of data collection. Specifically, we sought to quantify and describe the characteristics of existing research programs, the sources and types of funding, the number and background of faculty, and the availability of resources for training researchers in HM.

METHODS

Study Setting and Participants

Given that no defined list, database, or external resource that identifies research programs and contacts in HM exists, we began by creating a strategy to identify and sample adult

Survey Development

A workgroup within the SHM Research Committee was tasked to create a survey that would achieve four distinct goals: (1) identify institutions currently engaging in hospital-based research; (2) define the characteristics, including sources of research funding, training opportunities, criteria for promotion, and grant support, of research programs within institutions; (3) understand the prevalence of research fellowship programs, including size, training curricula, and funding sources; and (4) evaluate the productivity and funding sources of HM investigators at each site.

Survey questions that target each of these domains were drafted by the workgroup. Questions were pretested with colleagues outside the workgroup focused on this project (ie, from the main research committee). The instrument was refined and edited to improve the readability and clarity of questions on the basis of the feedback obtained through the iterative process. The revised instrument was then programmed into an online survey administration tool (SurveyMonkey®) to facilitate electronic dissemination. Finally, the members of the workgroup tested the online survey to ensure functionality. No identifiable information was collected from respondents, and no monetary incentive was offered for the completion of the survey. An invitation to participate in the survey was sent via e-mail to each of the program contacts identified.

Statistical Analysis

Descriptive statistics, including proportions, means, and percentages, were used to tabulate results. All analyses were conducted using Stata 13 MP/SE (StataCorp, College Station, Texas).

Ethical and Regulatory Considerations

The study was reviewed and deemed exempt from regulation by the University of Michigan Institutional Review Board (HUM000138628).

RESULTS

General Characteristics of Research Programs and Faculty

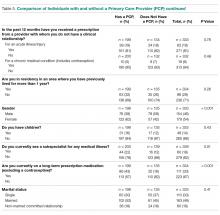

Out of 100 program contacts, 28 (representing 1,586 faculty members) responded and were included in the survey (program response rate = 28%). When comparing programs that did respond with those that did not, a greater proportion of programs in university settings were noted among respondents (79% vs 21%). Respondents represented programs from all regions of the United States, with most representing university-based (79%), university-affiliated (14%), or Veterans Health Administration (VHA; 11%) programs. Most respondents were in leadership roles, including division chiefs (32%), research directors/leads (21%), section chiefs (18%), and related titles, such as program director. Respondents indicated that the total number of faculty members in their programs (including nonclinicians and advanced practice providers) varied from eight to 152 (mean [SD] = 57 [36]) members, with physicians representing the majority of faculty members (Table 1).

Among the 1,586 faculty members within the 28 programs, respondents identified 192 faculty members (12%) as currently receiving extra- or intramural support for research activities. Of these faculty, over half (58%) received <25% of effort from intra- or extramural sources, and 28 (15%) and 52 (27%) faculty members received 25%-50% or >50% of support for their effort, respectively. The number of investigators who received funding across programs ranged from 0 to 28 faculty members. Compared with the 192 funded investigators, respondents indicated that a larger number of faculty in their programs (n = 656 or 41%) were involved in local quality improvement (QI) efforts. Of the 656 faculty members involved in QI efforts, 241 individuals (37%) were internally funded and received protected time/effort for their work.
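The percentages in this paragraph follow directly from the reported counts. The short Python sketch below recomputes them as an illustration only; it is not the authors' analysis (which was run in Stata), and the variable names are hypothetical. It assumes that the three effort bands are mutually exclusive and exhaustive, so the number of faculty with <25% funded effort, which is not reported as a count, is derived by subtraction.

```python
def pct(part: int, whole: int) -> int:
    """Return part/whole as a percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


# Counts as reported in the text (names are illustrative, not from the survey).
TOTAL_FACULTY = 1586      # faculty across the 28 responding programs
FUNDED = 192              # faculty with extra- or intramural research support
FUNDED_25_TO_50 = 28      # funded faculty with 25%-50% supported effort
FUNDED_OVER_50 = 52       # funded faculty with >50% supported effort
QI_FACULTY = 656          # faculty involved in local QI efforts
QI_FUNDED = 241           # QI faculty with internally funded protected effort

# Assumption: the effort bands partition the funded group, so the <25% band
# is whatever remains after removing the two reported bands.
funded_under_25 = FUNDED - FUNDED_25_TO_50 - FUNDED_OVER_50

summary = {
    "funded, of all faculty": pct(FUNDED, TOTAL_FACULTY),
    "<25% effort, of funded": pct(funded_under_25, FUNDED),
    "25%-50% effort, of funded": pct(FUNDED_25_TO_50, FUNDED),
    ">50% effort, of funded": pct(FUNDED_OVER_50, FUNDED),
    "QI involved, of all faculty": pct(QI_FACULTY, TOTAL_FACULTY),
    "QI funded, of QI involved": pct(QI_FUNDED, QI_FACULTY),
}

for label, value in summary.items():
    print(f"{label}: {value}%")
```

Under these assumptions, the recomputed values agree with the rounded percentages given in the text.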

Key Attributes of Research Programs

In the evaluation of the amount of total grant funding, respondents from 17 programs indicated that they received <$500,000 in annual extra- and intramural funding, and those from three programs stated that they received $500,000 to $999,999 in funding. Five respondents indicated that their programs currently received $1 million to $5 million in grant funding, and three reported >$5 million in research support. The sources of research funding included several divisions within the National Institutes of Health (NIH, 12 programs), Agency for Healthcare Research and Quality (AHRQ, four programs), foundations (four programs), and internal grants (six programs). Additionally, six programs indicated “other” sources of funding that included the VHA, Patient-Centered Outcomes Research Institute (PCORI), Centers for Medicare and Medicaid Services (CMS), Centers for Disease Control and Prevention (CDC), and industry sources.

A range of grants, including career development awards (11 programs); small grants, such as R21s and R03s (eight programs); R-level grants, including VA merit awards (five programs); program series grants, such as P and U grants (five programs); and foundation grants (eight programs), were reported as types of awards. Respondents from 16 programs indicated that they provided internal pilot grants. Amounts for such grants ranged from <$50,000 (14 programs) to $50,000-$100,000 (two programs).

Research Fellowship Programs/Training Programs

Only five of the 28 surveyed programs indicated that they currently had a research training or fellowship program for developing hospitalist investigators. The age of these programs varied from <1 year to 10 years. Three of the five programs stated that they had two fellows per year, and two stated they had spots for one trainee annually. All respondents indicated that fellows received training on study design, research methods, quantitative (eg, large database and secondary analyses) and qualitative data analysis. In addition, two programs included training in systematic review and meta-analyses, and three included focused courses on healthcare policy. Four of the five programs included training in QI tools, such as LEAN and Six Sigma. Funding for four of the five fellowship programs came from internal sources (eg, department and CTSA). However, two programs added they received some support from extramural funding and philanthropy. Following training, respondents from programs indicated that the majority of their graduates (60%) went on to hybrid research/QI roles (50/50 research/clinical effort), whereas 40% obtained dedicated research investigator (80/20) positions (Table 2).

The 23 institutions without research training programs cited lack of funding (12 programs) and the lack of a pipeline of hospitalists seeking such training (six programs) as the most important barriers to establishing such programs. However, 15 programs indicated that opportunities for hospitalists to gain research training in the form of courses were available internally (eg, courses in the department or medical school) or externally (eg, School of Public Health). Seven programs indicated that they were planning to start an HM research fellowship within the next five years.

Research Faculty

Among the 28 respondents, 15 stated that they have faculty members who conduct research as their main professional activity (ie, >50% effort). The number of faculty members in each program in such roles varied from one to 10. Respondents indicated that faculty members in this category were most often midcareer assistant or associate professors with few full professors. All programs indicated that scholarship in the form of peer-reviewed publications was required for the promotion of faculty. Faculty members who performed research as their main activity had all received formal fellowship training and consequently had dual degrees (MD with MPH or MD with MSc being the two most common combinations). With respect to clinical activities, most respondents indicated that research faculty spent 10% to 49% of their effort on clinical work. However, five respondents indicated that research faculty had <10% effort on clinical duties (Table 3).

Eleven respondents (39%) identified the main focus of faculty as health services research, whereas four (14%) identified their main focus as clinical trials. Regardless of funding status, all respondents stated that their faculty were interested in studying quality and process improvement efforts (eg, transitions or readmissions, n = 19), patient safety initiatives (eg, hospital-acquired complications, n = 17), and disease-specific areas (eg, thrombosis, n = 15).

In terms of research output, 12 respondents stated that their research/QI faculty collectively published 11-50 peer-reviewed papers during the academic year, and 10 programs indicated that their faculty published 0-10 papers per year. Only three programs reported that their faculty collectively published 50-99 peer-reviewed papers per year. With respect to abstract presentations at national conferences, 13 programs indicated that they presented 0-10 abstracts, and 12 indicated that they presented 11-50.

DISCUSSION

In this first survey quantifying research activities in HM, respondents from 28 programs shared important insights into research activities at their institutions. Although our sample size was small, substantial variation in the size, composition, and structure of research programs in HM among respondents was observed. For example, few respondents indicated the availability of training programs for research in HM at their institutions. Similarly, among faculty who focused mainly on research, variation in funding streams and effort protection was observed. A preponderance of midcareer faculty with a range of funding sources, including NIH, AHRQ, VHA, CMS, and CDC was reported. Collectively, these data not only provide a unique glimpse into the state of research in HM but also help establish a baseline of the status of the field at large.

Some findings of our study are intuitive given our sampling strategy and the types of programs that responded. For example, the fact that most respondents for research programs represented university-based or affiliated institutions is expected given the tripartite academic mission. However, even within our sample of highly motivated programs, some findings are surprising and merit further exploration. For example, the observation that some respondents identified HM investigators within their program with <25% in intra- or extramural funding was unexpected. On the other extreme, we were surprised to find that three programs reported >$5 million in research funding. Understanding whether specific factors, such as the availability of experienced mentors within and outside departments or assistance from support staff (eg, statisticians and project managers), are associated with success and funding within these programs is an important question to answer. By focusing on these issues, we will be well poised as a field to understand what works, what does not work, and why.

Likewise, the finding that few programs within our sample offer formal training in the form of fellowships to research investigators represents an improvement opportunity. A pipeline for growing investigators is critical for HM as a specialty. Notably, this call is not new; rather, previous investigators have highlighted the importance of developing academically oriented hospitalists for the future of the field.5 The implementation of faculty scholarship development programs has improved the scholarly output, mentoring activities, and succession planning of academics within HM.6,7 Conversely, lack of adequate mentorship and support for academic activities remains a challenge and a factor associated with the failure to produce academic work.8 Without a cadre of investigators asking critical questions related to care delivery, the legitimacy of our field may be threatened.

While extrapolating to the field is difficult given the small number of our respondents, highlighting the progress that has been made is important. For example, while misalignment between funding and clinical and research mission persists, our survey found that several programs have been successful in securing extramural funding for their investigators. Additionally, internal funding for QI work appears to be increasing, with hospitalists receiving dedicated effort for much of this work. Innovations in how best to support and develop these types of efforts have also emerged. For example, the University of Michigan Specialist-Hospitalist Allied Research Program offers dedicated effort and funding for hospitalists tackling projects germane to HM (eg, ordering of blood cultures for febrile inpatients) that overlap with subspecialists (eg, infectious diseases).9 Thus, hospitalists are linked with other specialties in the development of research agendas and academic products. Similarly, the launch of the HOMERuN network, a coalition of investigators who bridge health systems to study problems central to HM, has helped usher in a new era of research opportunities in the specialty.10 Fundamentally, the culture of HM has begun to place an emphasis on academic and scholarly productivity in addition to clinical prowess.11-13 Increased support and funding for training programs geared toward innovation and research in HM are needed to continue this mission. The Society of General Internal Medicine, American College of Physicians, and SHM have important roles to play as the largest professional organizations for generalists in this respect. Support for research, QI, and investigators in HM remains an urgent and largely unmet need.

Our study has limitations. First, our response rate was low at 28% but is consistent with the response rates of other surveys of physician groups.14 Caution in making inferences to the field at large is necessary given the potential for selection and nonresponse bias. However, we expect that respondents are likely biased toward programs actively conducting research and engaged in QI, thus better reflecting the state of these activities in HM. Second, given that we did not ask for any identifying information, we have no way of establishing the accuracy of the data provided by respondents. However, we have no reason to believe that responses would be altered in a systematic fashion. Future studies that link our findings to publicly available data (eg, databases of active grants and funding) might be useful. Third, while our survey instrument was created and internally validated by hospitalist researchers, its lack of external validation could limit findings. Finally, our results vary on the basis of how respondents answered questions related to effort and time allocation given that these measures differ across programs.

In summary, the findings from this study highlight substantial variations in the number, training, and funding of research faculty across HM programs. Understanding the factors behind the success of some programs and the failures of others appears important in informing and growing the research in the field. Future studies that aim to expand survey participation, raise the awareness of the state of research in HM, and identify barriers and facilitators to academic success in HM are needed.

Disclosures

Dr. Chopra discloses grant funding from the Agency for Healthcare Research and Quality (AHRQ), VA Health Services and Research Department, and Centers for Disease Control. Dr. Jones discloses grant funding from AHRQ. All other authors disclose no conflicts of interest.

1. International Working Party to Promote and Revitalise Academic Medicine. Academic medicine: the evidence base. BMJ. 2004;329(7469):789-792.

2. Flanders SA, Saint S, McMahon LF, Howell JD. Where should hospitalists sit within the academic medical center? J Gen Intern Med. 2008;23(8):1269-1272.

3. Flanders SA, Centor B, Weber V, McGinn T, Desalvo K, Auerbach A. Challenges and opportunities in academic hospital medicine: report from the academic hospital medicine summit. J Gen Intern Med. 2009;24(5):636-641.

4. Dang Do AN, Munchhof AM, Terry C, Emmett T, Kara A. Research and publication trends in hospital medicine. J Hosp Med. 2014;9(3):148-154.

5. Harrison R, Hunter AJ, Sharpe B, Auerbach AD. Survey of US academic hospitalist leaders about mentorship and academic activities in hospitalist groups. J Hosp Med. 2011;6(1):5-9.

6. Sehgal NL, Sharpe BA, Auerbach AA, Wachter RM. Investing in the future: building an academic hospitalist faculty development program. J Hosp Med. 2011;6(3):161-166.

7. Nagarur A, O’Neill RM, Lawton D, Greenwald JL. Supporting faculty development in hospital medicine: design and implementation of a personalized structured mentoring program. J Hosp Med. 2018;13(2):96-99.

8. Reid MB, Misky GJ, Harrison RA, Sharpe B, Auerbach A, Glasheen JJ. Mentorship, productivity, and promotion among academic hospitalists. J Gen Intern Med. 2012;27(1):23-27.

9. Flanders SA, Kaufman SR, Nallamothu BK, Saint S. The University of Michigan Specialist-Hospitalist Allied Research Program: jumpstarting hospital medicine research. J Hosp Med. 2008;3(4):308-313.

10. Auerbach AD, Patel MS, Metlay JP, et al. The Hospital Medicine Reengineering Network (HOMERuN): a learning organization focused on improving hospital care. Acad Med. 2014;89(3):415-420.

11. Souba WW. Academic medicine’s core values: what do they mean? J Surg Res. 2003;115(2):171-173.

12. Bonsall J, Chopra V. Building an academic pipeline: a combined society of hospital medicine committee initiative. J Hosp Med. 2016;11(10):735-736.

13. Sweigart JR, Tad YD, Kneeland P, Williams MV, Glasheen JJ. Hospital medicine resident training tracks: developing the hospital medicine pipeline. J Hosp Med. 2017;12(3):173-176.

14. Cunningham CT, Quan H, Hemmelgarn B, et al. Exploring physician specialist response rates to web-based surveys. BMC Med Res Methodol. 2015;15(1):32.

Almost all specialties in internal medicine have a sound scientific research base through which clinical practice is informed.1 For the field of Hospital Medicine (HM), this evidence has largely comprised research generated from fields outside of the specialty. The need to develop, invest, and grow investigators in hospital-based medicine remains unmet as HM and its footprint in hospital systems continue to grow.2,3

Despite this fact, little is known about the current state of research in HM. A 2014 survey of the members of the Society of Hospital Medicine (SHM) found that research output across the field of HM, as measured on the basis of peer-reviewed publications, was growing.4 Since then, however, the numbers of individuals engaged in research activities, their background and training, publication output, or funding sources have not been quantified. Similarly, little is known about which institutions support the development of junior investigators (ie, HM research fellowships), how these programs are funded, and whether or not matriculants enter the field as investigators. These gaps must be measured, evaluated, and ideally addressed through strategic policy and funding initiatives to advance the state of science within HM.

Members of the SHM Research Committee developed, designed, and deployed a survey to improve the understanding of the state of research in HM. In this study, we aimed to establish the baseline of research in HM to enable the measurement of progress through periodic waves of data collection. Specifically, we sought to quantify and describe the characteristics of existing research programs, the sources and types of funding, the number and background of faculty, and the availability of resources for training researchers in HM.

METHODS

Study Setting and Participants

Given that no defined list, database, or external resource that identifies research programs and contacts in HM exists, we began by creating a strategy to identify and sample adult

Survey Development

A workgroup within the SHM Research Committee was tasked to create a survey that would achieve four distinct goals: (1) identify institutions currently engaging in hospital-based research; (2) define the characteristics, including sources of research funding, training opportunities, criteria for promotion, and grant support, of research programs within institutions; (3) understand the prevalence of research fellowship programs, including size, training curricula, and funding sources; and (4) evaluate the productivity and funding sources of HM investigators at each site.

Survey questions that target each of these domains were drafted by the workgroup. Questions were pretested with colleagues outside the workgroup focused on this project (ie, from the main research committee). The instrument was refined and edited to improve the readability and clarity of questions on the basis of the feedback obtained through the iterative process. The revised instrument was then programmed into an online survey administration tool (SurveyMonkey®) to facilitate electronic dissemination. Finally, the members of the workgroup tested the online survey to ensure functionality. No identifiable information was collected from respondents, and no monetary incentive was offered for the completion of the survey. An invitation to participate in the survey was sent via e-mail to each of the program contacts identified.

Statistical Analysis

Descriptive statistics, including proportions, means, and percentages, were used to tabulate results. All analyses were conducted using Stata 13 MP/SE (StataCorp, College Station, Texas).

Ethical and Regulatory Considerations

The study was reviewed and deemed exempt from regulation by the University of Michigan Institutional Review Board (HUM000138628).

RESULTS

General Characteristics of Research Programs and Faculty

Out of 100 program contacts, 28 (representing 1,586 faculty members) responded and were included in the survey (program response rate = 28%). When comparing programs that did respond with those that did not, a greater proportion of programs in university settings were noted among respondents (79% vs 21%). Respondents represented programs from all regions of the United States, with most representing university-based (79%), university-affiliated (14%) or Veterans Health Administration (VHA; 11%) programs. Most respondents were in leadership roles, including division chiefs (32%), research directors/leads (21%), section chiefs (18%), and related titles, such as program director. Respondents indicated that the total number of faculty members in their programs (including nonclinicians and advance practice providers) varied from eight to 152 (mean [SD] = 57 [36]) members, with physicians representing the majority of faculty members (Table 1).

Among the 1,586 faculty members within the 28 programs, respondents identified 192 faculty members (12%) as currently receiving extra- or intramural support for research activities. Of these faculty, over half (58%) received <25% of effort from intra or extramural sources, and 28 (15%) and 52 (27%) faculty members received 25%-50% or >50% of support for their effort, respectively. The number of investigators who received funding across programs ranged from 0 to 28 faculty members. Compared with the 192 funded investigators, respondents indicated that a larger number of faculty in their programs (n = 656 or 41%) were involved in local quality improvement (QI) efforts. Of the 656 faculty members involved in QI efforts, 241 individuals (37%) were internally funded and received protected time/effort for their work.

Key Attributes of Research Programs

In the evaluation of the amount of total grant funding, respondents from 17 programs indicated that they received $500,000 in annual extra and intramural funding, and those from three programs stated that they received $500,000 to $999,999 in funding. Five respondents indicated that their programs currently received $1 million to $5 million in grant funding, and three reported >$5 million in research support. The sources of research funding included several divisions within the National Institute of Health (NIH, 12 programs), Agency for Healthcare Research and Quality (AHRQ, four programs), foundations (four programs), and internal grants (six programs). Additionally, six programs indicated “other” sources of funding that included the VHA, Patient-Centered Outcomes Research Institute (PCORI), Centers for Medicare and Medicaid Services, Centers for Disease Control (CDC), and industry sources.

A range of grants, including career development awards (11 programs); small grants, such as R21 and R03s (eight programs); R-level grants, including VA merit awards (five programs); program series grants, such as P and U grants (five programs), and foundation grants (eight programs), were reported as types of awards. Respondents from 16 programs indicated that they provided internal pilot grants. Amounts for such grants ranged from <$50,000 (14 programs) to $50,000-$100,000 (two programs).

Research Fellowship Programs/Training Programs

Only five of the 28 surveyed programs indicated that they currently had a research training or fellowship program for developing hospitalist investigators. The age of these programs varied from <1 year to 10 years. Three of the five programs stated that they had two fellows per year, and two stated they had spots for one trainee annually. All respondents indicated that fellows received training on study design, research methods, quantitative (eg, large database and secondary analyses) and qualitative data analysis. In addition, two programs included training in systematic review and meta-analyses, and three included focused courses on healthcare policy. Four of the five programs included training in QI tools, such as LEAN and Six Sigma. Funding for four of the five fellowship programs came from internal sources (eg, department and CTSA). However, two programs added they received some support from extramural funding and philanthropy. Following training, respondents from programs indicated that the majority of their graduates (60%) went on to hybrid research/QI roles (50/50 research/clinical effort), whereas 40% obtained dedicated research investigator (80/20) positions (Table 2).

The 23 institutions without research training programs cited that the most important barrier for establishing such programs was lack of funding (12 programs) and the lack of a pipeline of hospitalists seeking such training (six programs). However, 15 programs indicated that opportunities for hospitalists to gain research training in the form of courses were available internally (eg, courses in the department or medical school) or externally (eg, School of Public Health). Seven programs indicated that they were planning to start a HM research fellowship within the next five years.

Research Faculty

Among the 28 respondents, 15 stated that they have faculty members who conduct research as their main professional activity (ie, >50% effort). The number of faculty members in each program in such roles varied from one to 10. Respondents indicated that faculty members in this category were most often midcareer assistant or associate professors with few full professors. All programs indicated that scholarship in the form of peer-reviewed publications was required for the promotion of faculty. Faculty members who performed research as their main activity had all received formal fellowship training and consequently had dual degrees (MD with MPH or MD, with MSc being the two most common combinations). With respect to clinical activities, most respondents indicated that research faculty spent 10% to 49% of their effort on clinical work. However, five respondents indicated that research faculty had <10% effort on clinical duties (Table 3).

Eleven respondents (39%) identified the main focus of faculty as health service research, where four (14%) identified their main focus as clinical trials. Regardless of funding status, all respondents stated that their faculty were interested in studying quality and process improvement efforts (eg, transitions or readmissions, n = 19), patient safety initiatives (eg, hospital-acquired complications, n = 17), and disease-specific areas (eg, thrombosis, n = 15).

In terms of research output, 12 respondents stated that their research/QI faculty collectively published 11-50 peer-reviewed papers during the academic year, and 10 programs indicated that their faculty published 0-10 papers per year. Only three programs reported that their faculty collectively published 50-99 peer-reviewed papers per year. With respect to abstract presentations at national conferences, 13 programs indicated that they presented 0-10 abstracts, and 12 indicated that they presented 11-50.

DISCUSSION

In this first survey quantifying research activities in HM, respondents from 28 programs shared important insights into research activities at their institutions. Although our sample size was small, substantial variation in the size, composition, and structure of research programs in HM among respondents was observed. For example, few respondents indicated the availability of training programs for research in HM at their institutions. Similarly, among faculty who focused mainly on research, variation in funding streams and effort protection was observed. A preponderance of midcareer faculty with a range of funding sources, including NIH, AHRQ, VHA, CMS, and CDC was reported. Collectively, these data not only provide a unique glimpse into the state of research in HM but also help establish a baseline of the status of the field at large.

Some findings of our study are intuitive given our sampling strategy and the types of programs that responded. For example, the fact that most respondents for research programs represented university-based or affiliated institutions is expected given the tripartite academic mission. However, even within our sample of highly motivated programs, some findings are surprising and merit further exploration. For example, the observation that some respondents identified HM investigators within their program with <25% in intra- or extramural funding was unexpected. On the other extreme, we were surprised to find that three programs reported >$5 million in research funding. Understanding whether specific factors, such as the availability of experienced mentors within and outside departments or assistance from support staff (eg, statisticians and project managers), are associated with success and funding within these programs are important questions to answer. By focusing on these issues, we will be well poised as a field to understand what works, what does not work, and why.

Likewise, the finding that few programs within our sample offer formal training in the form of fellowships to research investigators represents an improvement opportunity. A pipeline for growing investigators is critical for HM as a specialty. Notably, this call is not new; rather, previous investigators have highlighted the importance of developing academically oriented hospitalists for the future of the field.5 The implementation of faculty scholarship development programs has improved the scholarly output, mentoring activities, and succession planning of academics within HM.6,7 Conversely, lack of adequate mentorship and support for academic activities remains a challenge and a factor associated with the failure to produce academic work.8 Without a cadre of investigators asking critical questions related to care delivery, the legitimacy of our field may be threatened.

While extrapolating to the field is difficult given the small number of our respondents, highlighting the progress that has been made is important. For example, while misalignment between funding and clinical and research mission persists, our survey found that several programs have been successful in securing extramural funding for their investigators. Additionally, internal funding for QI work appears to be increasing, with hospitalists receiving dedicated effort for much of this work. Innovations in how best to support and develop these types of efforts have also emerged. For example, the University of Michigan Specialist-Hospitalist Allied Research Program offers dedicated effort and funding for hospitalists tackling projects germane to HM (eg, ordering of blood cultures for febrile inpatients) that overlap with subspecialists (eg, infectious diseases).9 Thus, hospitalists are linked with other specialties in the development of research agendas and academic products. Similarly, the launch of the HOMERuN network, a coalition of investigators who bridge health systems to study problems central to HM, has helped usher in a new era of research opportunities in the specialty.10 Fundamentally, the culture of HM has begun to place an emphasis on academic and scholarly productivity in addition to clinical prowess.11-13 Increased support and funding for training programs geared toward innovation and research in HM are needed to continue this mission. The Society of General Internal Medicine, American College of Physicians, and SHM have important roles to play as the largest professional organizations for generalists in this respect. Support for research, QI, and investigators in HM remains an urgent and largely unmet need.

Our study has limitations. First, our response rate was low at 28% but is consistent with the response rates of other surveys of physician groups.14 Caution in making inferences to the field at large is necessary given the potential for selection and nonresponse bias. However, we expect that respondents are likely biased toward programs actively conducting research and engaged in QI, thus better reflecting the state of these activities in HM. Second, given that we did not ask for any identifying information, we have no way of establishing the accuracy of the data provided by respondents. However, we have no reason to believe that responses would be altered in a systematic fashion. Future studies that link our findings to publicly available data (eg, databases of active grants and funding) might be useful. Third, while our survey instrument was created and internally validated by hospitalist researchers, its lack of external validation could limit findings. Finally, our results may vary on the basis of how respondents answered questions related to effort and time allocation, given that these measures differ across programs.

In summary, the findings from this study highlight substantial variations in the number, training, and funding of research faculty across HM programs. Understanding the factors behind the success of some programs and the failures of others appears important in informing and growing the research in the field. Future studies that aim to expand survey participation, raise the awareness of the state of research in HM, and identify barriers and facilitators to academic success in HM are needed.

Disclosures

Dr. Chopra discloses grant funding from the Agency for Healthcare Research and Quality (AHRQ), VA Health Services and Research Department, and Centers for Disease Control. Dr. Jones discloses grant funding from AHRQ. All other authors disclose no conflicts of interest.

1. International Working Party to Promote and Revitalise Academic Medicine. Academic medicine: the evidence base. BMJ. 2004;329(7469):789-792.

2. Flanders SA, Saint S, McMahon LF, Howell JD. Where should hospitalists sit within the academic medical center? J Gen Intern Med. 2008;23(8):1269-1272.

3. Flanders SA, Centor B, Weber V, McGinn T, Desalvo K, Auerbach A. Challenges and opportunities in academic hospital medicine: report from the academic hospital medicine summit. J Gen Intern Med. 2009;24(5):636-641.

4. Dang Do AN, Munchhof AM, Terry C, Emmett T, Kara A. Research and publication trends in hospital medicine. J Hosp Med. 2014;9(3):148-154.

5. Harrison R, Hunter AJ, Sharpe B, Auerbach AD. Survey of US academic hospitalist leaders about mentorship and academic activities in hospitalist groups. J Hosp Med. 2011;6(1):5-9.

6. Sehgal NL, Sharpe BA, Auerbach AA, Wachter RM. Investing in the future: building an academic hospitalist faculty development program. J Hosp Med. 2011;6(3):161-166.

7. Nagarur A, O’Neill RM, Lawton D, Greenwald JL. Supporting faculty development in hospital medicine: design and implementation of a personalized structured mentoring program. J Hosp Med. 2018;13(2):96-99.

8. Reid MB, Misky GJ, Harrison RA, Sharpe B, Auerbach A, Glasheen JJ. Mentorship, productivity, and promotion among academic hospitalists. J Gen Intern Med. 2012;27(1):23-27.

9. Flanders SA, Kaufman SR, Nallamothu BK, Saint S. The University of Michigan Specialist-Hospitalist Allied Research Program: jumpstarting hospital medicine research. J Hosp Med. 2008;3(4):308-313.

10. Auerbach AD, Patel MS, Metlay JP, et al. The Hospital Medicine Reengineering Network (HOMERuN): a learning organization focused on improving hospital care. Acad Med. 2014;89(3):415-420.

11. Souba WW. Academic medicine’s core values: what do they mean? J Surg Res. 2003;115(2):171-173.

12. Bonsall J, Chopra V. Building an academic pipeline: a combined society of hospital medicine committee initiative. J Hosp Med. 2016;11(10):735-736.

13. Sweigart JR, Tad YD, Kneeland P, Williams MV, Glasheen JJ. Hospital medicine resident training tracks: developing the hospital medicine pipeline. J Hosp Med. 2017;12(3):173-176.

14. Cunningham CT, Quan H, Hemmelgarn B, et al. Exploring physician specialist response rates to web-based surveys. BMC Med Res Methodol. 2015;15(1):32.

© 2019 Society of Hospital Medicine

Leadership & Professional Development: Know Your TLR

“Better to remain silent and be thought a fool than to speak and remove all doubt.”

—Abraham Lincoln

Have you ever been in a meeting with a supervisor wondering when you will get a chance to speak? Or have you walked away from an interview not knowing much about the candidate because you were talking all the time? If so, it might be time to consider your TLR: Talking to Listening Ratio. The TLR is a leadership pearl of great value. By keeping track of how much you talk versus how much you listen, you learn how and when to keep quiet.

As Mark Goulston wrote, “There are three stages of speaking to other people. In the first stage, you are on task, relevant and concise . . . the second stage (is) when it feels so good to talk, you don’t even notice the other person is not listening. The third stage occurs after you have lost track of what you were saying and begin to realize you might need to reel the other person back in.” Rather than finding a way to re-engage the other person by giving them a chance to talk while you listen, “. . . the usual impulse is to talk even more in an effort to regain their interest.”1

When you are talking, you are not listening—and when you are not listening, you are not learning. Executives who do all the talking at meetings do not have the opportunity to hear the ideas of others. Poor listening can make it appear as if you don’t care what others think. Worse, being a hypocompetent listener can turn you into an ineffective leader—one who does not have the trust or respect of others.

The TLR is highly relevant for hospitalists: physicians and nurses who do all the talking are not noticing what patients or families want to say or what potentially mistaken conclusions they are drawing. Similarly, quality improvement and patient safety champions who do all the talking are not discovering what frontline clinicians think about an initiative or what barriers need to be overcome for success. They are also not hearing novel approaches to the problem or different priorities that should be addressed instead.

Your goal: ensure that your TLR is less than 1. How? Make it a habit to reflect on your TLR after an encounter with a patient, colleague, or supervisor and ask yourself, “Did I listen well?” In addition to its value in monitoring your own talkativeness, use the TLR to measure others. For example, when interviewing a new hire, apply TLR to discover how much patience would be required to work with a candidate. We once interviewed a physician whose TLR was north of 20 . . . we passed on hiring them. The TLR is also helpful for managing meetings. If you find yourself in one with an over-talker (TLR >5), point to the agenda and redirect the discussion. If it’s a direct report or colleague who is doing all the talking, remind them that you have another meeting in 30 minutes, so they will need to move things along. Better yet: share the TLR pearl with them so that they can reflect on their performance. If you’re dealing with an under-talker (eg, TLR <0.5), encourage them to voice their opinion. Who knows—you might learn a thing or two.

The most surprising aspect to us about TLR is how oblivious people tend to be about it. High TLR’ers have little idea about the effect they have on people while those with an extremely low TLR (less than 0.2) wonder why they didn’t get picked for a project or promotion. Aim for a TLR between 0.5 and 0.7. Doing so will make you a better leader and follower.
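For readers who like to see the arithmetic, the thresholds mentioned above can be put into a short Python sketch. The article does not say how talking and listening should be measured, so measuring both in minutes is an assumption here, and the function names are hypothetical. The labels for the in-between bands ("above goal" and "below 1 but above target") are also illustrative; only the cutoffs themselves (>5, <1, 0.5 to 0.7, <0.5, <0.2) come from the text.

```python
def talking_to_listening_ratio(talk_minutes: float, listen_minutes: float) -> float:
    """Return time spent talking divided by time spent listening.

    Measuring both in minutes is an assumption; any consistent unit works.
    """
    if talk_minutes < 0 or listen_minutes <= 0:
        raise ValueError("talk_minutes must be >= 0 and listen_minutes must be > 0")
    return talk_minutes / listen_minutes


def describe_tlr(tlr: float) -> str:
    """Map a TLR onto the bands mentioned in the text (band labels are illustrative)."""
    if tlr > 5:
        return "over-talker"               # TLR >5
    if tlr >= 1:
        return "above goal"                # goal is a TLR below 1
    if tlr > 0.7:
        return "below 1 but above target"  # under 1, over the 0.5-0.7 range
    if tlr >= 0.5:
        return "target range"              # aim for 0.5 to 0.7
    if tlr >= 0.2:
        return "under-talker"              # eg, TLR <0.5
    return "extremely low"                 # less than 0.2


if __name__ == "__main__":
    # Hypothetical meeting: 12 minutes talking, 20 minutes listening.
    tlr = talking_to_listening_ratio(talk_minutes=12, listen_minutes=20)
    print(f"TLR = {tlr:.2f}: {describe_tlr(tlr)}")
```

In practice, a rough mental estimate after each encounter is probably closer to what the authors intend than a stopwatch; the sketch only makes the bands explicit.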

Disclosures

Drs. Saint and Chopra are co-authors of the upcoming book, “Thirty Rules for Healthcare Leaders,” from which this article is adapted. Both authors have no other relevant conflicts of interest.

1. Goulston M. How to Know If You Talk Too Much. Harvard Business Review. https://hbr.org/2015/06/how-to-know-if-you-talk-too-much. Accessed January 30, 2019.

Introducing Leadership & Professional Development: A New Series in JHM

“I cannot say whether things will get better if we change; what I can say is they must change if they are to get better.”

—Georg C. Lichtenberg

Leading change is never easy. Many a physician has joined a committee, hired a promising project manager, assumed responsibility for an operational or clinical task—only to have it painfully falter or agonizingly fail. Unfortunately, some of us become disillusioned with the process, donning our white coats to return to the safe haven of clinical work rather than take on another perilous change or leadership task. But ask those who have tried and failed and those who have succeeded and they will tell you this: the lessons learned in the journey were invaluable.

Academic medical centers and healthcare organizations are increasingly turning to hospitalists to assume a myriad of leadership roles. With very little formal training, many of us jump in to improve organizational culture, financial accountability, and patient safety, literally building the bridge as we walk on it. The practical knowledge and know-how gleaned during these endeavors are perhaps just as important as evidence-based medicine. And yet, few venues to share and disseminate these insights currently exist.

This void represents the motivation behind the new Journal series entitled, “Leadership & Professional Development” or “LPD.” In these brief excerpts, lessons on leadership/followership, mentorship/menteeship, leading change and professional development will be shared using a conversational and pragmatic tone. Like a clinical case, pearls to help you navigate development and organizational challenges will be shared. The goal is simple: read an LPD and walk away with an “a-ha,” a new tool, or a strategy that you can use ASAP. For example, in the debut LPD—Hire Hard1—we emphasize a cardinal rule for hiring: wait for the right person. Waiting is not easy, but it is well worth it in the long run—the right person will make your job that much better. Remember the aphorism: A’s hire A’s while B’s hire C’s.

Many other nuggets of wisdom can fit an LPD model. For example, when it comes to stress, a technique that brings mindfulness to your day—one you can practice with every patient encounter—might be the ticket.2 Interested in mentoring? You’ll need to know the Six Golden Rules.3 And don’t forget about emotional intelligence, tight-loose-tight management or the tree-climbing monkey! Don’t know what these are? Time to read an LPD or two to find out!

As you might have guessed—some of these pieces are already written. They come from a book that my colleague Sanjay Saint and I have been busy writing for over a year. The book distills much of what we have learned as clinicians, researchers, and administrators into a collection we call “Thirty Leadership Rules for Healthcare Providers.” But LPD is not an advert for the book; rather, our contributions will account for only some of the series. We hope this venue will become a platform where readers like you can offer “pearls” to the broader community. The rules are simple: coin a rule/pearl, open with an illustrative quote, frame it in 650 words with no more than five references, and write it so that a reader can apply it to their work tomorrow. And don’t worry—we on the editorial team will help you craft th

Disclosures

Dr. Chopra has nothing to disclose.

1. Chopra V, Saint S. Hire Hard. Manage Easy. J Hosp Med. 2019;14(2):74. doi: 10.12788/jhm.3158.

2. Gilmartin H, Saint S, Rogers M, et al. Pilot randomised controlled trial to improve hand hygiene through mindful moments. BMJ Qual Saf. 2018;27(10):799-806.

3. Chopra V, Saint S. What Mentors Wish Their Mentees Knew. Harvard Business Review. 2017. https://hbr.org/2017/11/what-mentors-wish-their-mentees-knew. Accessed December 17, 2018.