
A Nationwide Survey and Needs Assessment of Colonoscopy Quality Assurance Programs

Colorectal cancer (CRC) is an important concern for the VA, and colonoscopy is one of the primary modalities used for CRC screening, surveillance, and diagnosis. The observed reductions in CRC incidence and mortality over the past decade have been attributed largely to the widespread use of CRC screening.1,2 Colonoscopy quality is therefore critical to CRC prevention in veterans. However, endoscopists' skill in detecting and removing colorectal polyps during colonoscopy varies in practice.3-5

Quality benchmarks linked to patient outcomes have been established by specialty societies and proposed by the Centers for Medicare and Medicaid Services as reportable quality metrics.6 Colonoscopy quality metrics have been shown to be associated with patient outcomes, such as the risk of developing CRC after colonoscopy. The adenoma detection rate (ADR), defined as the proportion of average-risk screening colonoscopies in which 1 or more adenomas are detected, has the strongest association with interval (or “missed”) CRC after screening colonoscopy and has been linked to the risk of fatal CRC despite colonoscopy.3
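As an illustration of the ADR definition, the Python sketch below computes the rate from a list of exam records. The `Colonoscopy` record and its fields are hypothetical and are not drawn from any VA data source. The key points are that the denominator includes only average-risk screening exams and that the numerator counts exams with at least 1 adenoma, not the total number of adenomas found.

```python
from dataclasses import dataclass


@dataclass
class Colonoscopy:
    # Hypothetical record layout; field names are illustrative only.
    endoscopist: str
    indication: str      # e.g., "screening", "surveillance", or "diagnostic"
    average_risk: bool   # True if the patient has no elevated CRC risk factors
    adenomas_found: int  # histologically confirmed adenomas in this exam


def adenoma_detection_rate(exams: list[Colonoscopy]) -> float:
    """Share of average-risk screening exams with at least 1 adenoma."""
    # Denominator: only average-risk screening colonoscopies count.
    eligible = [e for e in exams if e.indication == "screening" and e.average_risk]
    if not eligible:
        raise ValueError("no average-risk screening colonoscopies to evaluate")
    # Numerator: exams with 1 or more adenomas (not the total adenoma count).
    with_adenoma = sum(1 for e in eligible if e.adenomas_found >= 1)
    return with_adenoma / len(eligible)


exams = [
    Colonoscopy("A", "screening", True, 2),
    Colonoscopy("A", "screening", True, 0),
    Colonoscopy("A", "surveillance", False, 1),  # excluded from the ADR
    Colonoscopy("A", "screening", True, 1),
]
print(f"ADR: {adenoma_detection_rate(exams):.1%}")  # prints "ADR: 66.7%"
```

The surveillance exam contributes an adenoma but is excluded, so the ADR here is 2 of 3 eligible exams.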

In a landmark study of 314,872 examinations performed by 136 gastroenterologists, the ADR ranged from 7.4% to 52.5%.3 Compared with patients of endoscopists in the lowest ADR quintile, patients of endoscopists in the highest quintile had an adjusted hazard ratio (HR) of 0.52 (95% confidence interval [CI], 0.39-0.69) for any interval cancer and 0.38 (95% CI, 0.22-0.65) for fatal interval cancer.3 Another pooled analysis of 8 surveillance studies that followed more than 800 participants with adenoma(s) after a baseline colonoscopy classified 52% of incident cancers as probable missed lesions, 19% as possibly related to incomplete resection of an earlier, noninvasive lesion, and only 24% as probable new lesions.7 These interval cancers highlight the current imperfections of colonoscopy and explain the focus on measuring and reporting quality indicators for colonoscopy.8-12

According to VHA Directive 1015, issued in December 2014, colonoscopy quality should be monitored as part of an ongoing quality assurance program.13 A recent report from the VA Office of the Inspector General (OIG) highlighted deficiencies in colonoscopy quality.14 The OIG report strongly recommended that the “Acting Under Secretary for Health require standardized documentation of quality indicators based on professional society guidelines and published literature.”14 However, no standardized and readily available VHA resource currently exists to measure, report, and ensure colonoscopy quality.

The authors hypothesized that colonoscopy quality assurance programs vary widely across VHA sites. The objective of this survey was to assess the measurement and reporting practices for colonoscopy quality and identify both strengths and areas for improvement to facilitate implementation of quality assurance programs across the VA health care system.

Methods

The authors performed an online survey of VA sites to assess current colonoscopy quality assurance practices. The institutional review boards (IRBs) at the University of Utah and VA Salt Lake City Health Care System and at the University of California, San Francisco and San Francisco VA Health Care System classified the study as a quality improvement project that did not qualify as human subjects research requiring IRB review.

The authors iteratively developed and refined the questionnaire with a survey methodologist and 2 clinical domain experts. The National Program Director for Gastroenterology and the National Gastroenterology Field Advisory Committee reviewed the survey content and pretested the survey instrument before final data collection. The National Program Office for Gastroenterology provided an e-mail list of all known VA gastroenterology section chiefs. The authors administered the final survey by e-mail, using the Research Electronic Data Capture (REDCap; Vanderbilt University Medical Center) platform, beginning January 9, 2017.15

A follow-up reminder e-mail was sent to nonresponders after 2 weeks. After this second invitation, sites were contacted by telephone to verify that the correct contact information had been captured. Subsequently, 50 contacts were updated because e-mails had bounced back or the correct contact had been identified. Points of contact received a total of 3 reminder e-mails before the survey closed on March 28, 2017. Of the original contacts, 65 of 89 (73%) completed the survey, compared with 31 of 50 (62%) of the updated contacts.

Analysis

Descriptive statistics were calculated to determine the overall proportion of VA sites measuring colonoscopy quality metrics and to identify areas in need of quality improvement. The survey response rate was defined as the total number of completed responses divided by the total number of points of contact, which corresponds to the American Association for Public Opinion Research (AAPOR) RR1, or minimum response rate, formula.16 All categorical responses are presented as proportions. Statistical analyses were performed using Stata SE 12.0 (StataCorp, College Station, TX).
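A minimal sketch of the response-rate calculation, assuming AAPOR's published RR1 formula, in which complete responses are divided by the sum of complete and partial responses, refusals, noncontacts, other nonresponse, and cases of unknown eligibility. Because the survey did not report how nonrespondents were distributed across these categories, the sketch places all of them in `noncontact`; RR1 depends only on the denominator total, so this assumption does not change the result. The counts are those reported for the original and updated contacts.

```python
def aapor_rr1(complete: int, partial: int = 0, refusal: int = 0,
              noncontact: int = 0, other: int = 0, unknown: int = 0) -> float:
    """AAPOR Response Rate 1: completes over all eligible and unknown-eligibility cases."""
    denominator = (complete + partial) + (refusal + noncontact + other) + unknown
    if denominator == 0:
        raise ValueError("denominator is zero")
    return complete / denominator


# Assumption: every nonrespondent is counted as a noncontact; only the total matters.
original = aapor_rr1(complete=65, noncontact=89 - 65)
updated = aapor_rr1(complete=31, noncontact=50 - 31)
overall = aapor_rr1(complete=65 + 31, noncontact=(89 - 65) + (50 - 31))
print(f"original {original:.0%}, updated {updated:.0%}, overall {overall:.0%}")
# prints "original 73%, updated 62%, overall 69%"
```

The overall rate is 96 completed surveys over 139 points of contact, or approximately 69%.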

Results

Of the 139 points of contact invited, 96 completed the survey (response rate, 69.0%), representing 93 VA facilities (of 141 possible facilities) in 44 states. Three sites submitted 2 responses each. Sites used a variety of methods, often in combination, to measure quality (Table 1).

At a majority of sites (63.5%), quality reports presented individual provider data; fewer sites produced quality reports for physician groups (22.9%) or for the entire facility (40.6%). Provider quality information was de-identified in 43.8% of reporting sites’ quality reports and identifiable in 37.5%. A majority of sites (74.0%) reported that the local gastroenterology section chief or quality manager had access to the quality reports. Fewer sites reported providing data to individual endoscopists (44.8% for personal and peer data and 32.3% for personal data only). One site (1%) responded that quality reports were available for public access.

Survey respondents also were asked to estimate the time (hours per month) required to collect data for quality metrics. Of the 75 respondents who answered this question, 28 (29.2% of all respondents) estimated 1 to 5 hours per month and 17 (17.7%) estimated 6 to 10 hours per month. Ten sites estimated spending 11 to 20 hours, and 7 sites estimated spending more than 20 hours per month collecting quality metrics. A total of 13 respondents (13.5%) were uncertain about the time burden.
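The reported time-burden percentages (29.2%, 17.7%, and 13.5%) correspond to a denominator of all 96 respondents rather than the 75 who answered this question. The short Python check below, which uses only counts stated in the text, computes both shares so the 2 denominators can be compared.

```python
TOTAL_RESPONDENTS = 96  # all completed surveys

# Monthly hours reported for collecting quality metrics (counts from the text).
time_burden = {
    "1-5 h": 28,
    "6-10 h": 17,
    "11-20 h": 10,
    ">20 h": 7,
    "uncertain": 13,
}
answered = sum(time_burden.values())  # respondents who answered this question

for category, count in time_burden.items():
    share_all = count / TOTAL_RESPONDENTS
    share_answered = count / answered
    print(f"{category:>10}: {count:2d}  {share_all:6.1%} of all  "
          f"{share_answered:6.1%} of answering")
```

Among the 75 who answered, the 1 to 5 hour category would instead be about 37%.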

As shown in the Figure, numerous quality metrics were collected across sites, with more than 80% of sites collecting information on bowel preparation quality (88.5%), cecal intubation rate (87.5%), and complications (83.3%). A majority of sites also reported collecting data on appropriateness of surveillance intervals (62.5%), colonoscopy withdrawal times (62.5%), and ADRs (61.5%). Seven sites (7.3%) did not collect any quality metrics.

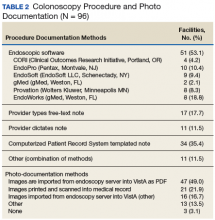

Information also was collected on colonoscopy procedure documentation to inform future standardization efforts. A small majority of sites (53.1%) reported using endoscopic software to generate colonoscopy procedure documentation. Within these sites, 6 different endoscopic note-writing software products were used to generate procedure notes (Table 2).

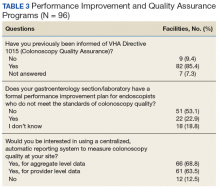

Most sites (85.4%) were aware of the VHA Directive 1015 recommendations for colonoscopy quality assurance programs. A large majority of respondents (89.5%) also indicated interest in some form of centralized, automated system to measure and report colonoscopy quality, with aggregate data, provider data, or both (Table 3).

Discussion

This survey is the first assessment of the VHA’s efforts to measure and report colonoscopy quality indicators. The findings indicate that most VA sites are measuring and reporting at least some measures of colonoscopy quality. However, the programs vary considerably in the methods used to collect quality metrics, the specific quality measures obtained, and how quality is reported.

This work is novel in that it is the first report on the status of colonoscopy quality assurance programs in a large U.S. health care system. The VA health care system is the largest integrated health system in the U.S., serving more than 9 million veterans annually, and the survey’s high response rate further strengthens the findings. Specifically, the survey found that VA sites are making a concerted effort to measure and report colonoscopy quality. However, documentation, measurement, and reporting practices vary significantly. Moreover, most VA sites do not have formal performance improvement plans in place for endoscopists who do not meet colonoscopy quality thresholds.

Screening colonoscopy for CRC offers established mortality benefits to patients.1,17-19 Substantial prior work has described and validated the importance of colonoscopy quality metrics, including bowel preparation quality, cecal intubation rate, and ADR, and their association with interval colorectal cancer and death.20-23 Gastroenterology professional societies, including the American College of Gastroenterology and the American Society for Gastrointestinal Endoscopy, have recommended and endorsed measuring and reporting colonoscopy quality metrics.24 There is general agreement among endoscopists that quality is an important aspect of performing colonoscopy.

The lack of formal performance improvement programs is a key finding of this survey. Recent studies have shown that improvements in quality metrics such as the ADR by individual endoscopists are associated with reductions in interval colorectal cancer and death.25 Kahi and colleagues showed that providing a quarterly report card improves colonoscopy quality.26 Keswani and colleagues studied a combination of report cards and the implementation of standards of practice and found a resulting improvement in colonoscopy quality.27 Most recently, in a large prospective cohort study of individuals who underwent screening colonoscopy, 294 screening endoscopists received annual feedback and quality benchmark indicators to improve colonoscopy performance.25 Most of the endoscopists (74.5%) increased their annual ADR category over the study period. Moreover, patients examined by endoscopists who reached or maintained the highest ADR quintile (>24.6%) had a significantly lower risk of interval CRC and death. The lack of formal performance improvement programs across the VHA is concerning but also represents a significant opportunity to improve veteran health outcomes on a large scale.
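Report cards of the kind described above place each endoscopist relative to peers. The Python sketch below assigns within-group ADR quintiles using the standard library's `statistics.quantiles` and flags each endoscopist against a target ADR. The endoscopist labels, ADR values, and the 25% target are hypothetical; because quintile cut points depend on the peer group, they will not reproduce the 24.6% threshold from the cohort study.

```python
import bisect
import statistics


def adr_quintiles(adr_by_endoscopist: dict[str, float]) -> dict[str, int]:
    """Assign each endoscopist to an ADR quintile within the group (1 = lowest)."""
    # statistics.quantiles with n=5 returns the 4 cut points between quintiles.
    cuts = statistics.quantiles(adr_by_endoscopist.values(), n=5)
    return {
        name: bisect.bisect_right(cuts, adr) + 1
        for name, adr in adr_by_endoscopist.items()
    }


def report_card(adr_by_endoscopist: dict[str, float], benchmark: float) -> list[str]:
    """One feedback line per endoscopist; `benchmark` is a hypothetical target ADR."""
    quintile = adr_quintiles(adr_by_endoscopist)
    lines = []
    for name in sorted(adr_by_endoscopist):
        adr = adr_by_endoscopist[name]
        status = "meets target" if adr >= benchmark else "below target"
        lines.append(f"{name}: ADR {adr:.1%}, quintile {quintile[name]} of 5, {status}")
    return lines


# Hypothetical ADRs for 10 endoscopists.
rates = [0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.45, 0.50, 0.55]
adrs = {f"E{i:02d}": rate for i, rate in enumerate(rates, start=1)}
for line in report_card(adrs, benchmark=0.25):
    print(line)
```

A fixed external threshold, rather than within-group quintiles, would let endoscopists at different sites be compared on the same scale.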

This study’s findings also highlight the substantial resources needed to measure and report colonoscopy quality. Measuring and reporting quality metrics requires adequate documentation and accessible data. Administrative databases derived from electronic health records offer some potential for routine monitoring of quality metrics.28 However, most administrative databases, including the VA Corporate Data Warehouse (CDW), contain administrative billing codes (ICD and CPT) linked to limited patient data, such as demographics and structured medical record data. The data required to report key metrics (bowel preparation quality, cecal intubation rates, and ADRs) are usually found in clinical text notes or endoscopic procedure notes and are not available as structured data. As a result, most VA sites (79.2%) use manual chart review to collect quality metric data, and estimates of the time burden vary widely. A minority of sites in this study (39.6%) reported using the automated reporting capability of endoscopic software, which can reduce the time burden. However, even within the VA, an integrated health system, the authors found a wide variety of software brands, documentation practices, and photo documentation.
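To illustrate why free-text documentation complicates automated reporting, the sketch below extracts bowel preparation quality, cecal intubation, and withdrawal time from a procedure note using regular expressions. The phrasings it recognizes are assumptions about a hypothetical note template; real notes vary by site and software, and simple patterns like these miss synonyms and phrasings outside the list, which illustrates why structured data would be preferable.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrasings; real note templates vary by site and software vendor.
PREP_RE = re.compile(
    r"\bbowel\s+prep(?:aration)?(?:\s+quality)?\s*(?:was\s+|:\s*)?"
    r"(excellent|good|fair|poor|inadequate)\b",
    re.IGNORECASE,
)
CECUM_RE = re.compile(
    r"\bcecum\s+(?:was\s+)?(?:reached|intubated|identified)\b", re.IGNORECASE
)
WITHDRAWAL_RE = re.compile(
    r"\bwithdrawal\s+time\s*(?:was\s+|:\s*)?(\d+(?:\.\d+)?)\s*min", re.IGNORECASE
)


@dataclass
class NoteMetrics:
    bowel_prep: Optional[str]            # None if no recognizable prep statement
    cecum_reached: bool                  # False also when the phrase is absent
    withdrawal_minutes: Optional[float]  # None if no withdrawal time found


def extract_metrics(note_text: str) -> NoteMetrics:
    """Pull 3 quality metrics out of a free-text colonoscopy note."""
    prep = PREP_RE.search(note_text)
    withdrawal = WITHDRAWAL_RE.search(note_text)
    return NoteMetrics(
        bowel_prep=prep.group(1).lower() if prep else None,
        cecum_reached=CECUM_RE.search(note_text) is not None,
        withdrawal_minutes=float(withdrawal.group(1)) if withdrawal else None,
    )


note = ("Bowel preparation was good. The cecum was reached and identified "
        "by the appendiceal orifice. Withdrawal time: 8 minutes.")
print(extract_metrics(note))
# prints NoteMetrics(bowel_prep='good', cecum_reached=True, withdrawal_minutes=8.0)
```

A note stating "The cecum was not reached" yields `cecum_reached=False`, but so does a note that omits the statement entirely, so an automated pipeline would still need to distinguish missing documentation from a documented failure.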

Future endoscopy budget and purchasing decisions at individual VA sites should consider how new technology and software can facilitate accurate quality reporting. A specific policy recommendation would be for the VA to consider a uniform endoscopic note writer for procedure notes. Pathology data, which are necessary for calculating the ADR, also should be available as structured data in the CDW so that colonoscopy quality can be measured more easily. Continuous measurement and reporting of quality also requires ongoing information technology infrastructure and quality control of the measurement process.
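If pathology results were available as structured data, the ADR could be computed by linking each screening exam to its pathology findings. The sketch below assumes hypothetical `Procedure` and `PathologyResult` records (not actual CDW tables) and matches them on patient identifier and date, an assumption that would fail if a patient had 2 exams on the same day or if dates were recorded inconsistently. The short list of conventional adenoma diagnoses treated as positive is also illustrative.

```python
from collections import defaultdict
from datetime import date
from typing import NamedTuple


class Procedure(NamedTuple):
    patient_id: str
    procedure_date: date
    endoscopist: str
    average_risk_screening: bool


class PathologyResult(NamedTuple):
    patient_id: str
    specimen_date: date
    diagnosis: str


# Illustrative conventional adenoma diagnoses (an assumption, not a VA standard).
ADENOMA_TERMS = ("tubular adenoma", "tubulovillous adenoma", "villous adenoma")


def adr_from_structured_data(procedures: list[Procedure],
                             pathology: list[PathologyResult]) -> dict[str, float]:
    """ADR per endoscopist, linking pathology to exams by patient and date."""
    # (patient, specimen date) pairs with at least 1 adenoma diagnosis.
    adenoma_keys = {
        (p.patient_id, p.specimen_date)
        for p in pathology
        if any(term in p.diagnosis.lower() for term in ADENOMA_TERMS)
    }
    tallies = defaultdict(lambda: [0, 0])  # endoscopist -> [exams with adenoma, exams]
    for proc in procedures:
        if not proc.average_risk_screening:
            continue  # ADR counts average-risk screening exams only
        tally = tallies[proc.endoscopist]
        tally[1] += 1
        if (proc.patient_id, proc.procedure_date) in adenoma_keys:
            tally[0] += 1
    return {name: hits / exams for name, (hits, exams) in tallies.items()}


procedures = [
    Procedure("P1", date(2017, 3, 1), "A", True),
    Procedure("P2", date(2017, 3, 2), "A", True),
    Procedure("P3", date(2017, 3, 2), "B", True),
    Procedure("P4", date(2017, 3, 3), "B", False),  # surveillance, excluded
]
pathology = [
    PathologyResult("P1", date(2017, 3, 1), "Tubular adenoma"),
    PathologyResult("P3", date(2017, 3, 2), "Hyperplastic polyp"),
    PathologyResult("P4", date(2017, 3, 3), "Tubulovillous adenoma"),
]
print(adr_from_structured_data(procedures, pathology))  # prints {'A': 0.5, 'B': 0.0}
```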

Limitations

This survey was a cross-sectional assessment of VA sites’ points of contact regarding colonoscopy quality assurance programs, so the results are descriptive. However, the instrument was carefully developed using both subject matter and survey methodology expertise, and the questionnaire was refined through pretesting before data collection. The initial contact list contained errors and had to be updated after the survey was launched; updated contact information was available for most contacts.

Another limitation was that the survey could not reach endoscopy centers not run by gastroenterologists; many centers use surgeons or other nongastroenterology providers. The authors speculate that quality monitoring may be less likely at these facilities because they may be unaware of gastroenterology professional society recommendations. The survey did not require that all questions be answered, so some site data were missing. However, 93.7% of respondents completed the entire survey.

Conclusion

The authors have described the status of colonoscopy quality assurance programs across the VA health care system. Many sites are making robust efforts to measure and report quality, especially process measures. However, these efforts require significant time and manual labor, which is likely associated with the variability among programs. Importantly, ADR, the quality metric most strongly associated with the risk of colorectal cancer mortality, is not being measured by 38% of sites.

These results reinforce a critical need for a centralized, automated quality reporting infrastructure to standardize colonoscopy quality reporting, reduce workload, and ensure veterans receive high-quality colonoscopy.

Acknowledgments

The authors acknowledge the support and feedback of the National Gastroenterology Program Field Advisory Committee for survey development and testing. The authors coordinated the survey through the Salt Lake City Specialty Care Center of Innovation in partnership with the National Gastroenterology Program Office and the Quality Enhancement Research Initiative Measurement Science Program, QUE15-283. The work also was partially supported by the National Center for Advancing Translational Sciences of the National Institutes of Health (Award UL1TR001067) and by Merit Review Award 1 I01 HX001574-01A1 from the U.S. Department of Veterans Affairs Health Services Research & Development Service of the VA Office of Research and Development.

1. Brenner H, Stock C, Hoffmeister M. Effect of screening sigmoidoscopy and screening colonoscopy on colorectal cancer incidence and mortality: systematic review and meta-analysis of randomised controlled trials and observational studies. BMJ. 2014;348:g2467.

2. Meester RGS, Doubeni CA, Lansdorp-Vogelaar I, et al. Colorectal cancer deaths attributable to nonuse of screening in the United States. Ann Epidemiol. 2015;25(3):208-213.e1.

3. Corley DA, Jensen CD, Marks AR, et al. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. 2014;370(26):1298-1306.

4. Meester RGS, Doubeni CA, Lansdorp-Vogelaar I, et al. Variation in adenoma detection rate and the lifetime benefits and cost of colorectal cancer screening: a microsimulation model. JAMA. 2015;313(23):2349-2358.

5. Boroff ES, Gurudu SR, Hentz JG, Leighton JA, Ramirez FC. Polyp and adenoma detection rates in the proximal and distal colon. Am J Gastroenterol. 2013;108(6):993-999.

6. Centers for Medicare & Medicaid Services. Quality measures. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/QualityMeasures/index.html. Updated December 19, 2017. Accessed January 17, 2018.

7. Robertson DJ, Lieberman DA, Winawer SJ, et al. Colorectal cancers soon after colonoscopy: a pooled multicohort analysis. Gut. 2014;63(6):949-956.

8. Fayad NF, Kahi CJ. Colonoscopy quality assessment. Gastrointest Endosc Clin N Am. 2015;25(2):373-386.

9. de Jonge V, Sint Nicolaas J, Cahen DL, et al; SCoPE Consortium. Quality evaluation of colonoscopy reporting and colonoscopy performance in daily clinical practice. Gastrointest Endosc. 2012;75(1):98-106.

10. Johnson DA. Quality benchmarking for colonoscopy: how do we pick products from the shelf? Gastrointest Endosc. 2012;75(1):107-109.

11. Anderson JC, Butterly LF. Colonoscopy: quality indicators. Clin Transl Gastroenterol. 2015;6(2):e77.

12. Kaminski MF, Regula J, Kraszewska E, et al. Quality indicators for colonoscopy and the risk of interval cancer. N Engl J Med. 2010;362(19):1795-1803.

13. U.S. Department of Veterans Affairs, Veterans Health Administration. Colorectal cancer screening. VHA Directive 1015. Published December 30, 2014.

14. U.S. Department of Veterans Affairs, VA Office of the Inspector General, Office of Healthcare Inspections. Healthcare inspection: alleged access delays and surgery service concerns, VA Roseburg Healthcare System, Roseburg, Oregon. Report No. 15-00506-535. https://www.va.gov/oig/pubs/VAOIG-15-00506-535.pdf. Published July 11, 2017. Accessed January 9, 2018.

15. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381.

16. The American Association for Public Opinion Research. Standard Definitions: Final Dispositions of Case Codes and Outcome Rates for Surveys. 9th edition. http://www.aapor.org/AAPOR_Main/media/publications/Standard-Definitions20169theditionfinal.pdf. Revised 2016. Accessed January 9, 2018.

17. Kahi CJ, Imperiale TF, Juliar BE, Rex DK. Effect of screening colonoscopy on colorectal cancer incidence and mortality. Clin Gastroenterol Hepatol. 2009;7(7):770-775.

18. Manser CN, Bachmann LM, Brunner J, Hunold F, Bauerfeind P, Marbet UA. Colonoscopy screening markedly reduces the occurrence of colon carcinomas and carcinoma-related death: a closed cohort study. Gastrointest Endosc. 2012;76(1):110-117.

19. Nishihara R, Wu K, Lochhead P, et al. Long-term colorectal-cancer incidence and mortality after lower endoscopy. N Engl J Med. 2013;369(12):1095-1105.

20. Harewood GC, Sharma VK, de Garmo P. Impact of colonoscopy preparation quality on detection of suspected colonic neoplasia. Gastrointest Endosc. 2003;58(1):76-79.

21. Hillyer GC, Lebwohl B, Rosenberg RM, et al. Assessing bowel preparation quality using the mean number of adenomas per colonoscopy. Therap Adv Gastroenterol. 2014;7(6):238-246.

22. Clark BT, Rustagi T, Laine L. What level of bowel prep quality requires early repeat colonoscopy: systematic review and meta-analysis of the impact of preparation quality on adenoma detection rate. Am J Gastroenterol. 2014;109(11):1714-1723; quiz 1724.

23. Johnson DA, Barkun AN, Cohen LB, et al; US Multi-Society Task Force on Colorectal Cancer. Optimizing adequacy of bowel cleansing for colonoscopy: recommendations from the US multi-society task force on colorectal cancer. Gastroenterology. 2014;147(4):903-924.

24. Rex DK, Petrini JL, Baron TH, et al; ASGE/ACG Taskforce on Quality in Endoscopy. Quality indicators for colonoscopy. Am J Gastroenterol. 2006;101(4):873-885.

25. Kaminski MF, Wieszczy P, Rupinski M, et al. Increased rate of adenoma detection associates with reduced risk of colorectal cancer and death. Gastroenterology. 2017;153(1):98-105.

26. Kahi CJ, Ballard D, Shah AS, Mears R, Johnson CS. Impact of a quarterly report card on colonoscopy quality measures. Gastrointest Endosc. 2013;77(6):925-931.

27. Keswani RN, Yadlapati R, Gleason KM, et al. Physician report cards and implementing standards of practice are both significantly associated with improved screening colonoscopy quality. Am J Gastroenterol. 2015;110(8):1134-1139.

28. Logan JR, Lieberman DA. The use of databases and registries to enhance colonoscopy quality. Gastrointest Endosc Clin N Am. 2010;20(4):717-734.

Colorectal cancer (CRC) is an important concern for the VA, and colonoscopy is one primary screening, surveillance, and diagnostic modality used. The observed reductions in CRC incidence and mortality over the past decade largely have been attributed to the widespread use of CRC screening options.1,2 Colonoscopy quality is critical to CRC prevention in veterans. However, endoscopy skills to detect and remove colorectal polyps using colonoscopy vary in practice.3-5

Quality benchmarks, linked to patient outcomes, have been established by specialty societies and proposed by the Centers for Medicare and Medicaid Services as reportable quality metrics.6 Colonoscopy quality metrics have been shown to be associated with patient outcomes, such as the risk of developing CRC after colonoscopy. The adenoma detection rate (ADR), defined as the proportion of average-risk screening colonoscopies in which 1 or more adenomas are detected, has the strongest association to interval or “missed” CRC after screening colonoscopy and has been linked to a risk for fatal CRC despite colonoscopy.3

In a landmark study of 314,872 examinations performed by 136 gastroenterologists, the ADR ranged from 7.4% to 52.5%.3 Among patients with ADRs in the highest quintile compared with patients in the lowest, the adjusted hazard ratios (HRs) for any interval cancer was 0.52 (95% confidence interval [CI], 0.39-0.69) and for fatal interval cancers was 0.38 (95% CI, 0.22-0.65).3 Another pooled analysis from 8 surveillance studies that followed more than 800 participants with adenoma(s) after a baseline colonoscopy showed 52% of incident cancers as probable missed lesions, 19% as possibly related to incomplete resection of an earlier, noninvasive lesion, and only 24% as probable new lesions.7 These interval cancers highlight the current imperfections of colonoscopy and the focus on measurement and reporting of quality indicators for colonoscopy.8-12

According to VHA Directive 1015, in December 2014, colonoscopy quality should be monitored as part of an ongoing quality assurance program.13 A recent report from the VA Office of the Inspector General (OIG) highlighted colonoscopy-quality deficiencies.14 The OIG report strongly recommended that the “Acting Under Secretary for Health require standardized documentation of quality indicators based on professional society guidelines and published literature.”14However, no currently standardized and readily available VHA resource measures, reports, and ensures colonoscopy quality.

The authors hypothesized that colonoscopy quality assurance programs vary widely across VHA sites. The objective of this survey was to assess the measurement and reporting practices for colonoscopy quality and identify both strengths and areas for improvement to facilitate implementation of quality assurance programs across the VA health care system.

Methods

The authors performed an online survey of VA sites to assess current colonoscopy quality assurance practices. The institutional review boards (IRBs) at the University of Utah and VA Salt Lake City Health Care System and University of California, San Francisco and San Francisco VA Health Care System classified the study as a quality improvement project that did not qualify for human subjects’ research requiring IRB review.

The authors iteratively developed and refined the questionnaire with a survey methodologist and 2 clinical domain experts. The National Program Director for Gastroenterology, and the National Gastroenterology Field Advisory Committee reviewed the survey content and pretested the survey instrument prior to final data collection. The National Program Office for Gastroenterology provided an e-mail list of all known VA gastroenterology section chiefs. The authors administered the final survey via e-mail, using the Research Electronic Data Capture (REDCap; Vanderbilt University Medical Center) platform beginning January 9, 2017.15

A follow-up reminder e-mail was sent to nonresponders after 2 weeks. After this second invitation, sites were contacted by telephone to verify that the correct contact information had been captured. Subsequently, 50 contacts were updated if e-mails bounced back or the correct contact was obtained. Points of contact received a total of 3 reminder e-mails until the final closeout of the survey on March 28, 2017; 65 of 89 (73%) of the original contacts completed the survey vs 31 of 50 (62%) of the updated contacts.

Analysis

Descriptive statistics of the responses were calculated to determine the overall proportion of VA sites measuring colonoscopy quality metrics and identification of areas in need of quality improvement. The response rate for the survey was defined as the total number of responses obtained as a proportion of the total number of points of contact. This corresponds to the American Association of Public Opinion Research’s RR1, or minimum response rate, formula.16 All categoric responses are presented as proportions. Statistical analyses were performed using STATA SE12.0 (College Station, TX).

Results

Of the 139 points of contact invited, 96 completed the survey (response rate of 69.0%), representing 93 VA facilities (of 141 possible facilities) in 44 different states. Three sites had 2 responses. Sites used various and often a combination of methods to measure quality (Table 1).

A majority of sites’ (63.5%) quality reports represented individual provider data, whereas fewer provided quality reports for physician groups (22.9%) or for the entire facility (40.6%). Provider quality information was de-identified in 43.8% of reporting sites’ quality reports and identifiable in 37.5% of reporting sites’ quality reports. A majority of sites (74.0%) reported that the local gastroenterology section chief or quality manager has access to the quality reports. Fewer sites reported providing data to individual endoscopists (44.8% for personal and peer data and 32.3% for personal data only). One site (1%) responded that quality reports were available for public access. Survey respondents also were asked to provide the estimated time (hours required per month) to collect the data for quality metrics. Of 75 respondents providing data for this question, 28 (29.2%) and 17 (17.7%), estimated between 1 to 5 and 6 to 10 hours per month, respectively. Ten sites estimated spending between 11 to 20 hours, and 7 sites estimated spending more than 20 hours per month collecting quality metrics. A total of 13 respondents (13.5%) stated uncertainty about the time burden.

As shown in the Figure, numerous quality metrics were collected across sites with more than 80% of sites collecting information on bowel preparation quality (88.5%), cecal intubation rate (87.5%), and complications (83.3%). A majority of sites also reported collecting data on appropriateness of surveillance intervals (62.5%), colonoscopy withdrawal times (62.5%), and ADRs (61.5%). Seven sites (7.3%) did not collect quality metrics.

Information also was collected on colonoscopy procedure documentation to inform future efforts at standardization. A small majority (53.1%) of sites reported using endoscopic software to generate colonoscopy procedure documentation. Within these sites, 6 different types of endoscopic note writing software were used to generate procedure notes (Table 2).

Most sites (85.4%) were aware of VHA Directive 1015 recommendations for colonoscopy quality assurance programs. A significant majority (89.5%) of respondents also indicated interest in a centralized automatic reporting system to measure and report colonoscopy quality in some form, either with aggregate data, provider data, or both (Table 3).

Discussion

This survey on colonoscopy quality assurance programs is the first assessment of the VHA’s efforts to measure and report colonoscopy quality indicators. The findings indicated that the majority of VA sites are measuring and reporting at least some measures of colonoscopy quality. However, the programs are significantly variable in terms of methods used to collect quality metrics, specific quality measures obtained, and how quality is reported.

The authors’ work is novel in that this is the first report of the status of colonoscopy quality assurance programs in a large U.S. health care system. The VA health care system is the largest integrated health system in the U.S., serving more than 9 million veterans annually. This survey’s high response rate further strengthens the findings. Specifically, the survey found that VA sites are making a strong concerted effort to measure and report colonoscopy quality. However, there is significant variability in documentation, measurement, and reporting practices. Moreover, the majority of VA sites do not have formal performance improvement plans in place for endoscopists who do not meet thresholds for colonoscopy quality.

Screening colonoscopy for CRC offers known mortality benefits to patients.1,17-19 Significant prior work has described and validated the importance of colonoscopy quality metrics, including bowel preparation quality, cecal intubation rate, and ADR and their association with interval colorectal cancer and death.20-23 Gastroenterology professional societies, including the American College of Gastroenterology and the American Society for Gastrointestinal Endoscopy, have recommended and endorsed measurement and reporting of colonoscopy metrics.24 There is general agreement among endoscopists that colonoscopy quality is an important aspect of performing the procedure.

The lack of formal performance improvement programs is a key finding of this survey. Recent studies have shown that improvements in quality metrics, such as the ADR, by individual endoscopists result in reductions in interval colorectal cancer and death.25 Kahi and colleagues previously showed that providing a quarterly report card improves colonoscopy quality.26 Keswani and colleagues studied a combination of a report card and implementation of standards of practice with resultant improvement in colonoscopy quality.27 Most recently, in a large prospective cohort study of individuals who underwent a screening colonoscopy, 294 of the screening endoscopists received annual feedback and quality benchmark indicators to improve colonoscopy performance.25 The majority of the endoscopists (74.5%) increased their annual ADR category over the study period. Moreover, patients examined by endoscopists who reached or maintained the highest ADR quintile (> 24.6%) had significantly lower risk of interval CRC and death. The lack of formal performance improvement programs across the VHA is concerning but reveals a significant opportunity to improve veteran health outcomes on a large scale.

This study’s findings also highlight the intense resources necessary to measure and report colonoscopy quality. The ability to measure and report quality metrics requires having adequate documentation and data to obtain quality metrics. Administrative databases from electronic health records offer some potential for routine monitoring of quality metrics.28 However, most administrative databases, including the VA Corporate Data Warehouse (CDW), contain administrative billing codes (ICD and CPT) linked to limited patient data, including demographics and structured medical record data. The actual data required for quality reporting of important metrics (bowel preparation quality, cecal intubation rates, and ADRs) are usually found in clinical text notes or endoscopic note documentation and not available as structured data. Due to this issue, the majority of VA sites (79.2%) are using manual chart review to collect quality metric data, resulting in widely variable estimates on time burden. A minority of sites in this study (39.6%) reported using automated endoscopic software reporting capability that can help with the time burden. However, even in the VA, an integrated health system, a wide variety of software brands, documentation practices, and photo documentation was found.

Future endoscopy budget and purchasing decisions at individual VA sites should consider how well new technology and software facilitate accurate quality reporting. One specific policy recommendation is for the VA to consider adopting a uniform endoscopic note writer for procedure documentation. Pathology data, which are necessary to calculate the ADR, also should be available as structured data in the CDW so that colonoscopy quality can be measured more easily. Continuous measurement and reporting of quality also require ongoing information technology infrastructure and quality control of the measurement process.
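
Because the ADR denominator (average-risk screening colonoscopies) comes from procedure documentation while the numerator depends on pathology, computing it automatically requires both sources as structured data. The sketch below is a minimal illustration under stated assumptions: the record types `Procedure` and `PathologyResult` and all field names are hypothetical and do not describe the actual CDW schema, and the procedure record is assumed to already flag which examinations were average-risk screening colonoscopies.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Procedure:
    """Hypothetical structured colonoscopy record (not the CDW schema)."""
    procedure_id: str
    endoscopist: str
    average_risk_screening: bool  # True only for average-risk screening exams


@dataclass(frozen=True)
class PathologyResult:
    """Hypothetical structured pathology record: one row per specimen."""
    procedure_id: str
    is_adenoma: bool


def adenoma_detection_rates(procedures, pathology):
    """Return {endoscopist: ADR} over average-risk screening colonoscopies.

    The denominator is driven by the procedure records, so examinations with
    no specimens still count; pathology only determines the numerator.
    """
    with_adenoma = {result.procedure_id for result in pathology if result.is_adenoma}
    screened = defaultdict(int)
    detected = defaultdict(int)
    for proc in procedures:
        if not proc.average_risk_screening:
            continue  # surveillance and diagnostic exams are excluded from ADR
        screened[proc.endoscopist] += 1
        if proc.procedure_id in with_adenoma:
            detected[proc.endoscopist] += 1
    return {doc: detected[doc] / count for doc, count in screened.items()}


# Toy records for illustration only.
procedures = [
    Procedure("c1", "A", True),
    Procedure("c2", "A", True),
    Procedure("c3", "A", False),
    Procedure("c4", "B", True),
]
pathology = [
    PathologyResult("c1", True),
    PathologyResult("c1", False),
    PathologyResult("c3", True),
    PathologyResult("c4", False),
]
print(adenoma_detection_rates(procedures, pathology))
```

In the toy data, the adenoma found at "c3" does not count toward endoscopist A because that examination was not an average-risk screening colonoscopy, and "c2" counts in the denominator even though it produced no specimen.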

Limitations

This survey was a cross-sectional assessment of VA sites’ points of contact regarding colonoscopy quality assurance programs, so the results are descriptive in nature. However, the instrument was carefully developed using both subject matter and survey methods expertise, and the questionnaire was refined through pretesting before data collection. The initial contact list contained errors and had to be updated after the survey was launched; updated information was available for most contacts.

Another limitation was the inability to survey endoscopy centers not run by gastroenterologists; this matters because many centers rely on surgeons or other nongastroenterology providers. The authors speculate that quality monitoring may be less likely at these facilities because their providers may not be aware of gastroenterology professional society recommendations. The authors did not require respondents to answer every question, so some data were missing from sites. However, 93.7% of respondents completed the entire survey.

Conclusion

The authors have described the status of colonoscopy quality assurance programs across the VA health care system. Many sites are making robust efforts to measure and report quality, especially process measures. However, these efforts require significant time and manual work, which likely contributes to the variability among programs. Importantly, the ADR, the quality metric most strongly associated with risk of colorectal cancer mortality, is not being measured at 38% of sites.

These results reinforce a critical need for a centralized, automated quality reporting infrastructure to standardize colonoscopy quality reporting, reduce workload, and ensure veterans receive high-quality colonoscopy.

Acknowledgments

The authors acknowledge the support and feedback of the National Gastroenterology Program Field Advisory Committee for survey development and testing. The authors coordinated the survey through the Salt Lake City Specialty Care Center of Innovation in partnership with the National Gastroenterology Program Office and the Quality Enhancement Research Initiative, Measurement Science Program, QUE15-283. The work also was partially supported by the National Center for Advancing Translational Sciences of the National Institutes of Health under Award UL1TR001067 and by Merit Review Award 1 I01 HX001574-01A1 from the United States Department of Veterans Affairs Health Services Research & Development Service of the VA Office of Research and Development.

1. Brenner H, Stock C, Hoffmeister M. Effect of screening sigmoidoscopy and screening colonoscopy on colorectal cancer incidence and mortality: systematic review and meta-analysis of randomised controlled trials and observational studies. BMJ. 2014;348:g2467.

2. Meester RGS, Doubeni CA, Lansdorp-Vogelaar I, et al. Colorectal cancer deaths attributable to nonuse of screening in the United States. Ann Epidemiol. 2015;25(3):208-213.e1.

3. Corley DA, Jensen CD, Marks AR, et al. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. 2014;370(26):1298-1306.

4. Meester RGS, Doubeni CA, Lansdorp-Vogelaar I, et al. Variation in adenoma detection rate and the lifetime benefits and cost of colorectal cancer screening: a microsimulation model. JAMA. 2015;313(23):2349-2358.

5. Boroff ES, Gurudu SR, Hentz JG, Leighton JA, Ramirez FC. Polyp and adenoma detection rates in the proximal and distal colon. Am J Gastroenterol. 2013;108(6):993-999.

6. Centers for Medicare and Medicaid Services. Quality measures. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/QualityMeasures/index.html. Updated December 19, 2017. Accessed January 17, 2018.

7. Robertson DJ, Lieberman DA, Winawer SJ, et al. Colorectal cancers soon after colonoscopy: a pooled multicohort analysis. Gut. 2014;63(6):949-956.

8. Fayad NF, Kahi CJ. Colonoscopy quality assessment. Gastrointest Endosc Clin N Am. 2015;25(2):373-386.

9. de Jonge V, Sint Nicolaas J, Cahen DL, et al; SCoPE Consortium. Quality evaluation of colonoscopy reporting and colonoscopy performance in daily clinical practice. Gastrointest Endosc. 2012;75(1):98-106.

10. Johnson DA. Quality benchmarking for colonoscopy: how do we pick products from the shelf? Gastrointest Endosc. 2012;75(1):107-109.

11. Anderson JC, Butterly LF. Colonoscopy: quality indicators. Clin Transl Gastroenterol. 2015;6(2):e77.

12. Kaminski MF, Regula J, Kraszewska E, et al. Quality indicators for colonoscopy and the risk of interval cancer. N Engl J Med. 2010;362(19):1795-1803.

13. U.S. Department of Veterans Affairs, Veterans Health Administration. Colorectal cancer screening. VHA Directive 1015. Published December 30, 2014.

14. U.S. Department of Veterans Affairs, VA Office of the Inspector General, Office of Healthcare Inspections. Healthcare inspection: alleged access delays and surgery service concerns, VA Roseburg Healthcare System, Roseburg, Oregon. Report No. 15-00506-535. https://www.va.gov/oig/pubs/VAOIG-15-00506-535.pdf. Published July 11, 2017. Accessed January 9, 2018.

15. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381.

16. The American Association for Public Opinion Research. Standard Definitions: Final Dispositions of Case Codes and Outcome Rates for Surveys. 9th edition. http://www.aapor.org/AAPOR_Main/media/publications/Standard-Definitions20169theditionfinal.pdf. Revised 2016. Accessed January 9, 2018.

17. Kahi CJ, Imperiale TF, Juliar BE, Rex DK. Effect of screening colonoscopy on colorectal cancer incidence and mortality. Clin Gastroenterol Hepatol. 2009;7(7):770-775.

18. Manser CN, Bachmann LM, Brunner J, Hunold F, Bauerfeind P, Marbet UA. Colonoscopy screening markedly reduces the occurrence of colon carcinomas and carcinoma-related death: a closed cohort study. Gastrointest Endosc. 2012;76(1):110-117.

19. Nishihara R, Wu K, Lochhead P, et al. Long-term colorectal-cancer incidence and mortality after lower endoscopy. N Engl J Med. 2013;369(12):1095-1105.

20. Harewood GC, Sharma VK, de Garmo P. Impact of colonoscopy preparation quality on detection of suspected colonic neoplasia. Gastrointest Endosc. 2003;58(1):76-79.

21. Hillyer GC, Lebwohl B, Rosenberg RM, et al. Assessing bowel preparation quality using the mean number of adenomas per colonoscopy. Therap Adv Gastroenterol. 2014;7(6):238-246.

22. Clark BT, Rustagi T, Laine L. What level of bowel prep quality requires early repeat colonoscopy: systematic review and meta-analysis of the impact of preparation quality on adenoma detection rate. Am J Gastroenterol. 2014;109(11):1714-1723; quiz 1724.

23. Johnson DA, Barkun AN, Cohen LB, et al; US Multi-Society Task Force on Colorectal Cancer. Optimizing adequacy of bowel cleansing for colonoscopy: recommendations from the US multi-society task force on colorectal cancer. Gastroenterology. 2014;147(4):903-924.

24. Rex DK, Petrini JL, Baron TH, et al; ASGE/ACG Taskforce on Quality in Endoscopy. Quality indicators for colonoscopy. Am J Gastroenterol. 2006;101(4):873-885.

25. Kaminski MF, Wieszczy P, Rupinski M, et al. Increased rate of adenoma detection associates with reduced risk of colorectal cancer and death. Gastroenterology. 2017;153(1):98-105.

26. Kahi CJ, Ballard D, Shah AS, Mears R, Johnson CS. Impact of a quarterly report card on colonoscopy quality measures. Gastrointest Endosc. 2013;77(6):925-931.

27. Keswani RN, Yadlapati R, Gleason KM, et al. Physician report cards and implementing standards of practice are both significantly associated with improved screening colonoscopy quality. Am J Gastroenterol. 2015;110(8):1134-1139.

28. Logan JR, Lieberman DA. The use of databases and registries to enhance colonoscopy quality. Gastrointest Endosc Clin N Am. 2010;20(4):717-734.

Practice Management Toolbox: Implementation of optical diagnosis for colorectal polyps

In 1993, the National Polyp Study (NPS) was published in the New England Journal of Medicine and demonstrated the power of colonoscopy and polypectomy to reduce the subsequent risk of colorectal cancer. The NPS protocol called for removal of all visible lesions, all of which were sent for pathologic review, and this process became standard practice in gastroenterology. More recently, the concept of “optical biopsy” has emerged: hyperplastic polyps might be accurately identified endoscopically and discarded in lieu of pathologic analysis, safely reducing costs without compromising patient health outcomes. Issues related to the accuracy of optical biopsy, potential liability, and practice reimbursement have all been barriers to widespread implementation. In this month’s column, Dr. Kaltenbach and colleagues outline a process to standardize studies, training, and classification of optical biopsies, a needed step in the evolution of our colonoscopy practice.

John I. Allen, MD, MBA, AGAF

Special Section Editor

The potential application of optical diagnosis to diminutive colorectal polyps is at a crossroads. Recent studies have shown its feasibility: the diagnostic operating characteristics of real-time optical diagnosis of diminutive colorectal polyps are similar to those of pathologists. These studies showed 93% concordance between surveillance interval recommendations based on optical diagnosis and those based on pathologic diagnosis, and a negative predictive value of ≥90% for polyps in the rectosigmoid colon.1 These findings may open the way to applying optical diagnosis to diminutive colorectal polyps in practice, which in turn may improve the cost-effectiveness of colonoscopy for colorectal cancer screening.2
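
The two performance measures quoted above can be made concrete with a short sketch. It assumes each diminutive rectosigmoid polyp has paired optical and pathologic labels and, as an assumption made here for illustration, treats adenoma as the positive class, so the negative predictive value (NPV) is the share of polyps called non-adenomatous on optical diagnosis that pathology also found non-adenomatous. Surveillance interval concordance is taken as the share of patients whose interval recommendation based on optical diagnosis matches the one based on pathology; how intervals are derived from findings is outside the scope of the sketch, so the intervals are supplied as inputs. Function names and data are hypothetical.

```python
def negative_predictive_value(pairs):
    """NPV with adenoma as the positive class.

    pairs: iterable of (optical_says_adenoma, pathology_says_adenoma) booleans.
    Returns the share of optically non-adenomatous polyps that pathology also
    found non-adenomatous.
    """
    predicted_negative = [pathology for optical, pathology in pairs if not optical]
    if not predicted_negative:
        raise ValueError("no polyps were predicted non-adenomatous")
    true_negatives = sum(1 for pathology in predicted_negative if not pathology)
    return true_negatives / len(predicted_negative)


def interval_concordance(optical_intervals, pathology_intervals):
    """Share of patients whose recommended surveillance interval (in years)
    is the same whether based on optical or pathologic diagnosis."""
    if optical_intervals.keys() != pathology_intervals.keys():
        raise ValueError("both mappings must cover the same patients")
    if not optical_intervals:
        raise ValueError("no patients to compare")
    matches = sum(
        1 for patient, years in optical_intervals.items()
        if pathology_intervals[patient] == years
    )
    return matches / len(optical_intervals)


# Toy data for illustration only.
pairs = [(False, False), (False, False), (False, True), (True, True)]
print(negative_predictive_value(pairs))
print(interval_concordance({"p1": 10, "p2": 5, "p3": 3}, {"p1": 10, "p2": 5, "p3": 5}))
```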

However, some recent reports of optical diagnosis conducted outside the academic setting did not reproduce these high levels of accuracy, raising reservations about the generalizability of optical diagnosis in practice. A variety of factors could account for or contribute to these results. These studies (as well as some from academia) did not follow the key steps of a system redesign, which is the underlying basis for implementing optical diagnosis. Because of this recent pattern of results, we propose a set of recommendations for investigators to consider when designing future studies. Our objective is to share the lessons learned from successful optical diagnosis studies1 and thereby to suggest a framework in which to conduct and report such studies.

Designing an optical diagnosis study

General framework

The implementation of optical diagnosis, a system redesign, should be evidence-based and adopt a quality improvement model. It requires participants to recognize that learning is experiential: “a cyclic process of doing, noticing, questioning, reflecting, exploring concepts and models (evidence), and then doing again – only doing it better the next time (PDSA cycle)” [Supplementary Figure 1; www.cghjournal.org/article/S1542-3565(14)01469-4/fulltext].3 The iterative process of “checking” the correlation of endoscopic diagnosis to pathology findings is important. Without it, the study participants miss a significant opportunity to continuously improve the quality of their optical diagnoses.

Studies should commence only once participants' optical diagnoses are consistent with pathology diagnoses, and this consistency should be checked periodically, following the Plan-Do-Study-Act cycle. A study can succeed only when the participants remain interested in learning, engaged, and committed to the process. Published guidelines on the general framework for study conduct and on standards for reporting results can be very useful. The research team should therefore deliberately include the key elements of diagnostic studies before, during, and after the study [Supplementary Table 1; www.cghjournal.org/article/S1542-3565(14)01469-4/fulltext]. These standards are necessary to minimize biased results from incompletely designed, conducted, or analyzed diagnostic studies.

Optical diagnosis specific framework

Training

The knowledge and skills required to perform optical diagnosis are not innate but can be learned by people with varying levels of expertise, and training modules have been developed and studied accordingly. In an early report on a short teaching session in optical diagnosis for the endoscopic differentiation of colorectal polyp histology, Raghavendra et al4 showed that participants attained high accuracy (90.8%) and good interobserver agreement (kappa = 0.69) by using high-definition still photographs of polyps. Ignjatovic et al5 assessed the construct and content validity of a still image–based teaching module on the basic principles of narrow band imaging (NBI), microvessel patterns, and the role of NBI in differentiating adenomas from hyperplastic polyps. After training, they found improved accuracy and specificity of optical diagnosis among novices, trainees, and experts, with moderate agreement (kappa = 0.56, 0.70, and 0.54, respectively). Rastogi et al6 showed the importance of active feedback in achieving high performance. After a 20-minute training module, community and academic practitioners reviewed 80 short video clips of diminutive polyps, with feedback provided after each video. Their accuracy and the proportion of high-confidence predictions improved significantly as they progressed through consecutive blocks of 20 videos. Although none of these studies used consecutively collected images or videos, and none assessed the durability of performance after training in the real-time in vivo setting, their findings underscore the importance of training before engaging in a formal study or in the practice of optical diagnosis.
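The agreement figures above are kappa statistics. As an illustration only, the sketch below computes Cohen's kappa for two raters who each assign one label per polyp. The cited studies may have used other variants (for example, a multi-rater kappa), and the labels and example data here are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one label per polyp."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of polyps given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        # Both raters used one identical label for every polyp; kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical example: two endoscopists classifying the same four polyps.
a = ["adenoma", "adenoma", "hyperplastic", "hyperplastic"]
b = ["adenoma", "hyperplastic", "hyperplastic", "hyperplastic"]
print(round(cohens_kappa(a, b), 2))
```

Kappa corrects raw percentage agreement for the agreement expected by chance from each rater's label frequencies, which is why it can be only moderate even when raw agreement looks high (here, 75% raw agreement gives a kappa of 0.5).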

A teaching video entitled “Optical Diagnosis of Colorectal Polyps” is available through the American Society for Gastrointestinal Endoscopy On-line Learning Center. The program outlines the steps necessary to practice the technique. It provides a review of the concepts of optical diagnosis and numerous illustrative case examples.

Documentation of competence

Documenting successful completion of training is important. Formal training should be based on a validated tool, should be periodic, and should include an in vivo component. Ex vivo competency should be assessed before clinical performance is evaluated. Once ex vivo performance thresholds are met, study participants should be evaluated in real time to ensure sustained performance before the study begins. Finally, consistent with the Plan-Do-Study-Act quality improvement model, participants should undergo additional ex vivo testing periodically throughout the study to confirm sustained performance and assess the need for further training. Using this approach of regular self-training with a robust teaching tool, we observed no significant difference in performance between the first and second halves of a study in a group of experienced endoscopists. Agreement in surveillance interval recommendations between optical-based and pathology-based strategies exceeded 95% in both halves of the study.7,8

Standardized optical diagnostic criteria

When feasible, investigators should use validated criteria for the endoscopic diagnosis of colorectal polyps. One example is the Narrow Band Imaging International Colorectal Endoscopic (NICE) classification, which uses NBI for real-time differentiation of non-neoplastic (type 1) from neoplastic (type 2) colorectal polyps9 and also identifies lesions with deep submucosal invasion (type 3). Other endoscopic classifications of colorectal polyps using NBI, i-Scan, or chromoendoscopy, with or without optical magnification, have been described but have not yet been validated.

Although sessile serrated adenoma/polyps exhibit features of non-neoplastic lesions, their distinction from hyperplastic polyps is challenging because of the variations in pathologic diagnoses. Until such endoscopic and pathologic distinctions are further described, investigated, and reproducible, it may be necessary to remove and submit to pathology all proximal and/or large NICE type 1 polyps.

Training should include a working definition of confidence levels and how to apply them. The principle of confidence levels (high vs low) is easily understood but lacks a formal operational definition. A recent study suggested that the speed of diagnosis correlates with confidence, with a cutoff of 5 seconds predicting high confidence. Five expert endoscopists performed optical diagnosis of 1309 polyps in 558 patients. The average time to diagnosis was 20 seconds, and time to diagnosis was an independent predictor of accuracy. Optical diagnoses made in 5 seconds or less had an accuracy greater than 90%, with 90% made with high confidence; those made in 6–60 seconds had an accuracy of 85%, with 77% made with high confidence; and those that took more than 60 seconds had an accuracy of 68%, with only 64% made with high confidence.
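A study team recording time to diagnosis could tabulate accuracy and confidence by time bin along the lines below. This is a minimal sketch, assuming each optical diagnosis is stored with its timing, stated confidence, and whether it matched pathology; the `OpticalCall` record and its field names are hypothetical, and this is not the analysis used in the study described.

```python
from dataclasses import dataclass


@dataclass
class OpticalCall:
    seconds: float         # time taken to reach the optical diagnosis (hypothetical field)
    high_confidence: bool  # endoscopist's stated confidence level
    correct: bool          # optical diagnosis matched the pathology reference standard


def time_bin(seconds):
    # Bins mirror the cutoffs reported above; non-integer times between
    # 5 and 6 s fall into the middle bin here, which is an assumption.
    if seconds <= 5:
        return "<=5 s"
    if seconds <= 60:
        return "6-60 s"
    return ">60 s"


def summarize_by_time(calls):
    """Accuracy and share of high-confidence calls within each time bin."""
    groups = {}
    for call in calls:
        groups.setdefault(time_bin(call.seconds), []).append(call)
    return {
        label: {
            "n": len(items),
            "accuracy": sum(c.correct for c in items) / len(items),
            "high_confidence": sum(c.high_confidence for c in items) / len(items),
        }
        for label, items in groups.items()
    }
```

Keeping the raw timing rather than only the bin lets a team revisit the cutoffs later if a different operational definition of high confidence is adopted.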

Standardized pathologic diagnostic criteria

It is critically important to use a diagnostic standard for colorectal polyp histopathology. The World Health Organization criteria have been the most widely used. It is key to recognize that even standardized pathology is not a gold standard but rather a reference standard, which is often less than 100% accurate. In clinical practice, many diminutive polyps are not retrieved after polypectomy (6%–13%), are unsuitable for analysis because of diathermy artifacts (7%–19%), or may be misclassified because of incorrect orientation or limited sectioning. Furthermore, pathologists' error rates in differentiating conventional adenomas from hyperplastic/serrated lesions can be as high as 10%. One possible reason is that, for a diminutive lesion, it is the normal tissue surrounding the polyp in the polypectomy specimen that is sectioned and interpreted. Efforts must be made to strengthen pathology as a reference standard in clinical trials, including centralized blinded pathology reading and re-cuts of individual polyp specimens, especially those interpreted by pathologists as normal tissue.

Standardized outcomes

The ASGE Preservation and Incorporation of Valuable Endoscopic Innovations (PIVI) working group has established a priori diagnostic thresholds for real-time endoscopic assessment of the histology of diminutive colorectal polyps.10 These thresholds are meant to define clinically important roles for imaging technology, and acceptable levels of performance at which the ASGE could support its use as an alternative paradigm for managing diminutive polyps in clinical practice. The two proposed thresholds for optical diagnosis are as follows. (1) For colorectal polyps ≤5 mm in size to be resected and discarded without pathologic assessment, endoscopic technology used (with high confidence) to determine the histology of polyps ≤5 mm, combined with histopathologic assessment of polyps >5 mm, should provide ≥90% agreement in assignment of post-polypectomy surveillance intervals when compared with decisions based on pathology assessment of all identified polyps. (2) For a technology to be used to guide the decision to leave suspected rectosigmoid hyperplastic polyps ≤5 mm in size in place (without resection), the technology should provide a ≥90% negative predictive value (when used with high confidence) for adenomatous histology.
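Both thresholds reduce to simple proportions. The sketch below computes each and compares it with 90%, under stated assumptions: the caller has already selected the relevant polyps for the NPV, and has already assigned each patient a surveillance interval under each strategy (interval assignment depends on the applicable guideline and is not modeled). The function names and example numbers are hypothetical.

```python
def negative_predictive_value(pathology_is_adenoma):
    """NPV for adenomatous histology.

    pathology_is_adenoma: pathology results (True = adenoma) for the
    rectosigmoid polyps <=5 mm that were called hyperplastic with high
    confidence. Selecting those polyps is left to the caller.
    """
    if not pathology_is_adenoma:
        raise ValueError("no high-confidence hyperplastic calls to evaluate")
    true_negatives = sum(not is_adenoma for is_adenoma in pathology_is_adenoma)
    return true_negatives / len(pathology_is_adenoma)


def interval_agreement(optical_intervals, pathology_intervals):
    """Share of patients assigned the same surveillance interval by both strategies."""
    if len(optical_intervals) != len(pathology_intervals) or not optical_intervals:
        raise ValueError("need one pair of intervals per patient")
    matches = sum(o == p for o, p in zip(optical_intervals, pathology_intervals))
    return matches / len(optical_intervals)


def meets_pivi_thresholds(npv, agreement, threshold=0.90):
    # Both proposed thresholds are >=90%.
    return {
        "leave_rectosigmoid_hyperplastic_in_place": npv >= threshold,
        "resect_and_discard": agreement >= threshold,
    }


# Invented data: 19 of 20 negative calls were non-adenomatous; intervals in years.
npv = negative_predictive_value([False] * 19 + [True])
agreement = interval_agreement([3, 3, 5, 10], [3, 5, 5, 10])
print(meets_pivi_thresholds(npv, agreement))
```

In this invented example the NPV (95%) meets its threshold while interval agreement (75%) does not, which illustrates that the two thresholds are evaluated independently.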

We emphasize the importance of confidence levels in optical diagnosis. Using confidence levels allows calibration and standardization among endoscopists with varying levels of diagnostic ability and reduces interobserver variation. Thus, if a polyp lacks clear endoscopic features, precluding confident assignment of histology, the endoscopist can still resect it and submit it for pathologic assessment (Figure 1).
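To show how confidence levels interact with the PIVI scenarios and with the caution on proximal NICE type 1 lesions, here is a deliberately simplified triage sketch. It is a hypothetical illustration, not a clinical protocol: it assumes a practice that has adopted both strategies, reduces location to two categories, and treats NICE type 3 lesions as outside its scope.

```python
from dataclasses import dataclass


@dataclass
class Polyp:
    size_mm: float
    location: str          # simplified: "rectosigmoid" or "proximal"
    nice_type: int         # 1 = non-neoplastic, 2 = neoplastic
    high_confidence: bool


def management(polyp):
    """Hypothetical triage of a polyp after real-time optical diagnosis."""
    if polyp.nice_type not in (1, 2):
        raise ValueError("only NICE type 1 and 2 lesions are handled in this sketch")
    if not polyp.high_confidence or polyp.size_mm > 5:
        # Low confidence or non-diminutive: resect and submit to pathology.
        return "resect and submit to pathology"
    if polyp.nice_type == 1:
        if polyp.location == "rectosigmoid":
            return "leave in place"
        # Proximal type 1 lesions may be sessile serrated adenoma/polyps.
        return "resect and submit to pathology"
    return "resect and discard"
```

Placing the confidence check first reflects the point above: whenever confidence is low, the sketch falls back to pathology regardless of the predicted histology.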

Documentation

Photo documentation and archiving are a key component in both the study and clinical implementation of optical diagnosis for accreditation and quality assurance.

The first step is to optimize the processor, monitor, and capture settings to display and capture high-quality representative images of the polyp. The endoscope manufacturer can help set optimal image parameters on the endoscope processor. Digital integration of the polyp image and optical diagnosis into the endoscopy reporting system is necessary for efficient real-time relay of information and for reliable review. Such archiving would permit both self-audits and formal audits.
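As a sketch of what an archived record and a simple self-audit might look like, assuming a reporting system that stores one record per polyp: the `PolypRecord` fields and the `self_audit` function are hypothetical and do not correspond to any vendor's reporting software.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PolypRecord:
    exam_id: str
    image_file: str                     # captured still image (hypothetical path)
    optical_dx: str                     # e.g. "adenoma" or "hyperplastic"
    high_confidence: bool
    pathology_dx: Optional[str] = None  # None if discarded or not retrieved


def self_audit(records):
    """Concordance of high-confidence optical calls with pathology, where available.

    Returns (concordance, number of polyps audited), or None if nothing to audit.
    """
    audited = [r for r in records if r.high_confidence and r.pathology_dx is not None]
    if not audited:
        return None
    agree = sum(r.optical_dx == r.pathology_dx for r in audited)
    return agree / len(audited), len(audited)
```

Returning the number of audited polyps alongside the concordance matters, because a high concordance based on only a handful of polyps says little about sustained performance.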

Limitations

Other factors may hinder the outcomes of a study of optical diagnosis. A lack of academic interest or lack of financial incentive may influence the commitment or performance during a study. Physicians with direct or indirect ownership in a pathology facility should at a minimum declare a potential conflict of interest.

Discussion

Our comments are intended only to help make the results of published trials more consistent. Whether, and to what extent, specific factors unique to community physicians contribute to the recent less promising results remains uncertain. These and other as yet unconsidered factors will likely also affect academic physicians who do not have a special interest in endoscopy or in this endoscopic issue. Such variable performance could be interpreted to mean that optical diagnosis can and should be implemented in the context of a credentialing program. Because many studies have met the proposed thresholds and some have not, accurate optical diagnosis is possible, but individual physicians need to prove their skill before starting the practice. Such a policy could be implemented in any practice, whether academic or community-based.

The actual implementation of optical diagnosis must also address other obstacles. For example, institutional policies often require submission of resected tissue to pathology. Furthermore, the adenoma detection rate (ADR) has emerged as the most important quality indicator in colonoscopy. Under a resect and discard policy, ADR would have to be measured by photography, which would require endoscope manufacturers to provide image storage of a quality that reproduces the image seen in real time and that experts can easily audit to verify ADR. Such image storage would also be necessary to provide medical-legal protection for endoscopists. Finally, the current fee-for-service reimbursement model does not provide optimal financial incentives to drive a resect and discard policy forward. However, certain reimbursement models under consideration, such as bundled payment and reference payment, could make resect and discard more attractive to endoscopists.
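If ADR had to be derived partly from archived images, the calculation itself would not change: ADR is the proportion of average-risk screening colonoscopies in which at least one adenoma is detected. The sketch below assumes each polyp's histology label comes from pathology when the polyp was submitted and from expert review of the archived image when it was discarded; that sourcing is an assumption for illustration, not an established audit method, and the function name is hypothetical.

```python
def adenoma_detection_rate(exams):
    """ADR = share of average-risk screening colonoscopies with >=1 adenoma.

    exams: dict mapping exam_id -> list of per-polyp histology labels.
    Screening exams with no polyps must be included with an empty list,
    otherwise the denominator (and thus ADR) would be wrong.
    """
    if not exams:
        raise ValueError("no screening colonoscopies to evaluate")
    with_adenoma = sum(
        any(label == "adenoma" for label in labels) for labels in exams.values()
    )
    return with_adenoma / len(exams)
```

Because ADR counts exams rather than polyps, a discarded adenoma only needs to be verifiable from its image for the exam to count, which is why archived image quality matters for auditing.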

Conclusion

We hope that the framework we describe will be useful in improving the accuracy, completeness of reporting, and meta-analysis of future studies of the diagnostic characteristics of optical diagnosis, with the ultimate goal of incorporating this paradigm shift into routine day-to-day clinical practice.

Supplementary Material

Supplementary Figure 1

References

1. McGill, S.K., Evangelou, E., Ioannidis, J.P., et al. Narrow band imaging to differentiate neoplastic and non-neoplastic colorectal polyps in real time: a meta-analysis of diagnostic operating characteristics. Gut 2013;62:1704-13.

2. Hassan, C., Pickhardt, P.J., Rex, D.K. A resect and discard strategy would improve cost-effectiveness of colorectal cancer screening. Clin. Gastroenterol. Hepatol. 2010;8:865-9.

3. Glasziou, P., Ogrinc, G., Goodman, S. Can evidence-based medicine and clinical quality improvement learn from each other? BMJ Qual. Saf. 2011;20:i13-i17.

4. Raghavendra, M., Hewett, D.G., Rex, D.K. Differentiating adenomas from hyperplastic colorectal polyps: narrow-band imaging can be learned in 20 minutes. Gastrointest. Endosc. 2010;72:572-6.

5. Ignjatovic, A., Thomas-Gibson, S., East, J.E., et al. Development and validation of a training module on the use of narrow-band imaging in differentiation of small adenomas from hyperplastic colorectal polyps. Gastrointest. Endosc. 2011;73:128-33.

6. Rastogi, A., Rao, S.D., Gupta, N., et al. Impact of a computer-based teaching module on characterization of diminutive colon polyps by using narrow band imaging by non-experts in academic and community practice: a video-based study. Gastrointest. Endosc. 2014;79:390-8.