PCP Referral

Over the past decade, research has demonstrated a value gap in US healthcare, characterized by rapidly rising costs and substandard quality.1, 2 Public reporting of hospital performance data is one of several strategies promoted to help address these deficiencies. To this end, a number of hospital rating services have created Web sites aimed at healthcare consumers.3 These services provide information about multiple aspects of healthcare quality, which in theory might be used by patients when deciding where to seek medical care.

Despite the increasing availability of publicly reported quality data comparing doctors and hospitals, a 2008 survey found that only 14% of Americans had seen and used such information in the past year, down from 36% in 2006.4 A 2007 study found that, after seeking input from family and friends, patients generally rely on their primary care physician (PCP) to help them decide where to have elective surgery.5 Surprisingly, almost nothing is known about how, if at all, PCPs use publicly reported data when referring patients to hospitals.

The physician is an important intermediary in the buying process for many healthcare services.6 Tertiary care hospitals depend on physician referrals for much of their patient volume.7 Until the emergence of the hospitalist model of care, most primary care physicians cared for their own hospitalized patients, so hospital referral decisions were largely driven by the PCP's admitting privileges. However, following the rapid expansion of the hospitalist movement,8, 9 there has been a sharp decrease in the number of PCPs who provide direct care for their hospitalized patients.8 As a result, PCPs may now have more choice in hospital referrals for general medical conditions. Factors that might influence a PCP's referral decisions include familiarity with the hospital, care quality, patient convenience, satisfaction with the hospital, and hospital reputation.

Studies of cardiac surgery report cards in New York9 and Pennsylvania,10 conducted in the mid‐1990s, found that cardiologists did not use publicly reported mortality data in referral decisions, nor did they share it with patients. Over the past 2 decades, public reporting has grown exponentially, and now includes many measures of structure, processes, and outcomes for almost all US hospitals, available for free over the Internet. The growth of the patient safety movement and mandated public reporting might also have affected physicians' views about publicly reported quality data. We surveyed primary care physicians to determine the extent to which they use information about hospital quality in their referral decisions for community‐acquired pneumonia, and to identify other factors that might influence referral decisions.

METHODS

We obtained an e‐mail list of primary care physicians from the medical staff offices of all area hospitals within a 10‐mile radius of Springfield, MA (Baystate Medical Center, Holyoke Medical Center, and Mercy Medical Center). Baystate Medical Center is a 659‐bed academic medical center and Level 1 trauma center, while Holyoke Medical Center and Mercy Medical Center are both 180‐bed acute care hospitals. Physicians were contacted by e‐mail from June through September 2009 and asked to participate in an anonymous, 10‐minute online survey, accessible through an Internet link (SurveyMonkey.com), about factors influencing a primary care physician's choice of hospital for a patient with pneumonia. To facilitate participation, we sent 2 follow‐up e‐mail reminders, and respondents who completed the entire survey received a $15 gift card. The study was approved by the institutional review board of Baystate Medical Center and closed to participation on September 23, 2009.

We created the online survey based on previous research7 and approximately 10 key informant interviews. The survey (see Supporting Information, Appendix, in the online version of this article) contained 13 demographic questions and 10 questions based on a case study of pneumonia (Figure 1). The instrument was pilot tested for clarity with a small group of primary care physicians at the authors' institution and subsequently modified. We chose pneumonia because it is a common reason for a PCP to make an urgent hospital referral,11 and because there is a well‐established set of publicly reported quality measures for it.12 Unlike patients facing elective surgery, who might research hospitals or surgeons on their own, patients with pneumonia would likely rely on their PCP to recommend a hospital for urgent referral. PCPs, in contrast, know they will refer a number of pneumonia patients to hospitals each year and therefore might have an interest in comparing the publicly reported quality measures for local hospitals.

Respondents were shown the case study and asked to refer the hypothetical patient to 1 of 4 area hospitals. Respondents were asked to rate (on a 3‐point scale: not at all, somewhat, or very) the importance of the following factors in their referral decision: waiting time in the emergency room, distance traveled by the patient, experience of other patients, severity of patient's illness, patient's insurance, hospital's reputation among other physicians and partners, admitting privileges with a specific hospital, admitting arrangements with a hospitalist group, familiarity with the hospital, availability of subspecialists, quality of subspecialists, nursing quality, nursing staffing ratios, hospital's case volume for pneumonia, publicly available quality measures, patient preference, distance from your practice, shared electronic record system, and quality of hospital discharge summaries. Next, we measured providers' awareness of publicly reported hospital quality data and whether they used such data in referring patients or choosing their own medical care. Specifically, we asked about familiarity with the following 4 Web sites: Massachusetts Quality and Cost (a state‐specific Web site produced by the Massachusetts Executive Office of Health and Human Services)13; Hospital Compare (a Web site developed and maintained by the Centers for Medicare and Medicaid Services [CMS] and the Department of Health and Human Services)14; Leapfrog Group (a private, nonprofit organization)15; and Health Grades (a private, for‐profit company).16

We then asked participants to rate the importance of the following performance measures when judging a hospital's performance: antibiotics within 6 hours of arrival at the hospital, appropriate initial antibiotic, blood culture drawn before antibiotics given, smoking cessation advice/counseling, oxygenation assessment, risk‐adjusted mortality, intensive care unit staffing, influenza vaccination, pneumococcal vaccination, Leapfrog's never events,15 volume, Leapfrog safe practices score, cost, computerized physician order entry system, Magnet status,17 and U.S. News & World Report's Best Hospitals designation.18 Lastly, we asked participants to state, using a 3‐point scale (agree, disagree, neutral), their level of agreement that the following factors, adapted from Schneider and Epstein,10 represented limitations of public reporting: 1) risk‐adjustment methods are inadequate to compare hospitals fairly; 2) mortality rates are an incomplete indication of the quality of a hospital's care; 3) hospitals can manipulate the data; and 4) ratings are inaccurate for hospitals with small caseloads.

Factors associated with physicians' awareness of publicly reported data were examined with bivariate analysis. Because all factors were categorical, chi‐square tests were used. No factor had a P value <0.2 on bivariate analysis; therefore, multiple logistic regression was not performed.
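The screening rule described above (bivariate chi‐square tests, with only factors reaching P < 0.2 carried forward to a multivariable logistic model) can be sketched in Python as follows. This is a minimal, standard‐library‐only sketch under stated assumptions: the function names are hypothetical, the test shown is the uncorrected Pearson chi‐square (the paper does not say whether a continuity correction was applied), and the example cell counts are invented for illustration, chosen only to match the reported marginal totals. They are not the study's data.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail P value, P(X >= x), for a chi-square distribution with df degrees of freedom.

    Uses the regularized incomplete gamma function: a series expansion when
    x/2 < df/2 + 1, and a continued fraction (modified Lentz method) otherwise.
    """
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    log_prefactor = -z + a * math.log(z) - math.lgamma(a)
    if z < a + 1.0:
        # Series for the lower regularized gamma P(a, z); the P value is 1 - P.
        term = total = 1.0 / a
        n = 0
        while abs(term) > 1e-15 * abs(total):
            n += 1
            term *= z / (a + n)
            total += term
        return 1.0 - total * math.exp(log_prefactor)
    # Continued fraction for the upper regularized gamma Q(a, z), which is the P value.
    tiny = 1e-300
    b = z + 1.0 - a
    c = 1.0 / tiny
    d = 1.0 / b
    h = d
    for i in range(1, 1000):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        d = tiny if abs(d) < tiny else d
        c = b + an / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return h * math.exp(log_prefactor)


def pearson_chi_square(table: list[list[int]]) -> tuple[float, int, float]:
    """Uncorrected Pearson chi-square test of independence for an r x c table of counts.

    Assumes every row and column total is nonzero (otherwise an expected count is 0).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df, chi2_sf(stat, df)


def screen_factors(tables: dict[str, list[list[int]]], alpha: float = 0.2) -> dict[str, float]:
    """Keep the factors whose bivariate P value is below alpha (0.2 in the Methods)."""
    kept = {}
    for factor, table in tables.items():
        _, _, p = pearson_chi_square(table)
        if p < alpha:
            kept[factor] = p
    return kept


# Hypothetical counts: rows are male/female, columns are aware/not aware of
# quality-reporting Web sites. Invented for illustration; not the study's data.
example = {"gender": [[40, 25], [16, 11]]}
print(screen_factors(example))
```

With these invented counts the gender table gives P of roughly 0.84, so the screen returns an empty dictionary and, as in the study, no multivariable model would be fitted. A library routine such as SciPy's `chi2_contingency` could replace the hand‐written functions, but note that it applies a continuity correction to 2 × 2 tables by default.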

RESULTS

Of 194 primary care physicians who received invitations, 92 responded (response rate, 47%). Respondents' characteristics are shown in Table 1. All age groups were represented; most respondents were male and between 35 and 54 years of age. Respondents were evenly divided between those who owned their own practices (54%) and those working for a health system (46%). Ninety‐three percent of PCPs maintained admitting privileges (45% at more than 1 hospital), but only 20% continued to admit their own patients. When asked where they would send a hypothetical pneumonia patient, only 4% of PCPs chose a hospital at which they had never had admitting privileges.
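The percentages in this paragraph follow from the counts in Table 1, although the paper does not state the denominator for each item. The Python sketch below is a hypothetical consistency check: it assumes that the admitting‐privilege items share the 83 respondents who answered "Currently admits own patients" (17 yes plus 66 no), and the names `percent`, `ADMITTING_ITEM_N`, and `summary` are illustrative only.

```python
def percent(count: int, denominator: int) -> int:
    """Whole-number percentage, the precision used in the text and in Table 1.

    Python's round() sends exact halves to the nearest even integer; none of the
    values checked here falls exactly on a half.
    """
    return round(100 * count / denominator)


INVITED, RESPONDED = 194, 92

# Assumption: the admitting items were answered by the same respondents as
# "Currently admits own patients" (17 yes + 66 no = 83), not by all 92.
ADMITTING_ITEM_N = 17 + 66
NO_PRIVILEGES = 6  # "None" row under current hospital admitting privileges

summary = {
    "response_rate": percent(RESPONDED, INVITED),
    "admits_own_patients": percent(17, ADMITTING_ITEM_N),
    "holds_any_privileges": percent(ADMITTING_ITEM_N - NO_PRIVILEGES, ADMITTING_ITEM_N),
}
print(summary)
# {'response_rate': 47, 'admits_own_patients': 20, 'holds_any_privileges': 93}
```

Under that assumption the sketch reproduces the reported 47% response rate, the 20% of PCPs who still admit their own patients, and the 93% who hold privileges at some hospital. The same check with a denominator of 85 (for example, 36 of 85 for each group‐practice row) reproduces the practice‐type percentages.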

Table 1. Respondents' Characteristics

| Variable | No. (%) of Respondents |
|---|---|
| Age, y | |
| 25–34 | 5 (5) |
| 35–44 | 27 (29) |
| 45–54 | 24 (26) |
| >55 | 36 (39) |
| Gender | |
| Male | 65 (71) |
| Female | 27 (29) |
| Years out of medical school | |
| <6 | 6 (7) |
| 6–10 | 9 (10) |
| 11–15 | 17 (18) |
| >15 | 60 (65) |
| % Patients seen who are covered by | |
| Medicaid, mean (SD) | 28 (26) |
| Medicare, mean (SD) | 31 (18) |
| Private, mean (SD) | 40 (25) |
| % Time doing patient care, mean (SD) | 85 (23) |
| Patients admitted/sent to hospital per month | |
| <6 | 40 (47) |
| 6–10 | 25 (29) |
| 11–20 | 12 (14) |
| >20 | 8 (9) |
| Practice type | |
| Solo | 13 (15) |
| Single‐specialty group | 36 (42) |
| Multi‐specialty group | 36 (42) |
| Practice ownership | |
| Independent | 45 (54) |
| Health system | 38 (46) |
| Currently admits own patients | |
| Yes | 17 (20) |
| No | 66 (80) |
| Current hospital admitting privileges | |
| A | 63 (76) |
| B | 41 (49) |
| C | 3 (4) |
| D | 12 (14) |
| None | 6 (7) |
| Other | 2 (2) |

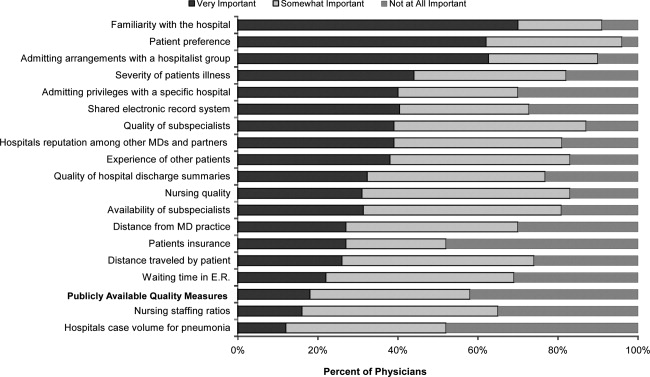

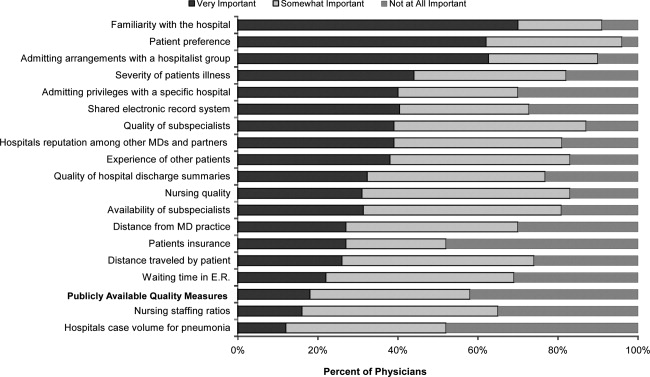

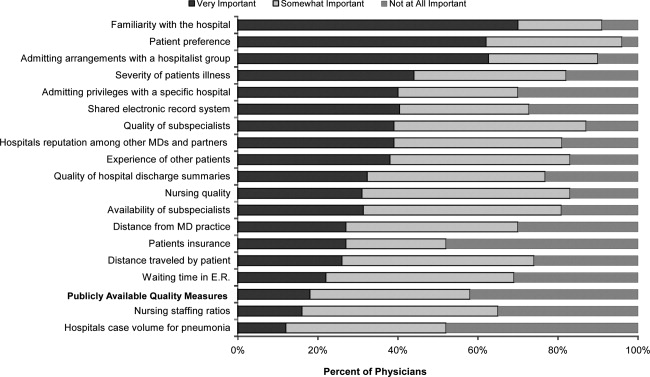

Physicians' ratings of the importance of various factors in their referral decisions are shown in Figure 2. The factors most often rated very important were familiarity with the hospital (70%), patient preference (62%), and admitting arrangements with a hospitalist group (62%). In contrast, only 18% of physicians viewed publicly available hospital quality measures as very important when making a referral decision. The factors most often rated not at all important were patient insurance (48%), the hospital's case volume for pneumonia (48%), and publicly available quality measures (42%).

Of the 61% of respondents who were aware of Web sites that report hospital quality, most (52%) were familiar with Massachusetts Quality and Cost, whereas few (27%) were familiar with Hospital Compare. None of the physicians we surveyed reported having used publicly reported quality information when making a referral decision or having discussed such data with their patients. However, 49% stated that publicly reported performance data were somewhat important, and 10% that they were very important, to decisions about the medical care they themselves receive. None of the demographic characteristics we assessed (including age, gender, and years out of medical school) was associated with awareness of publicly reported data in bivariate analyses.

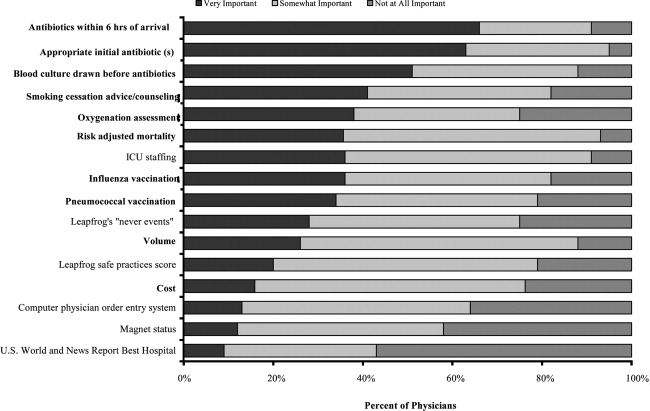

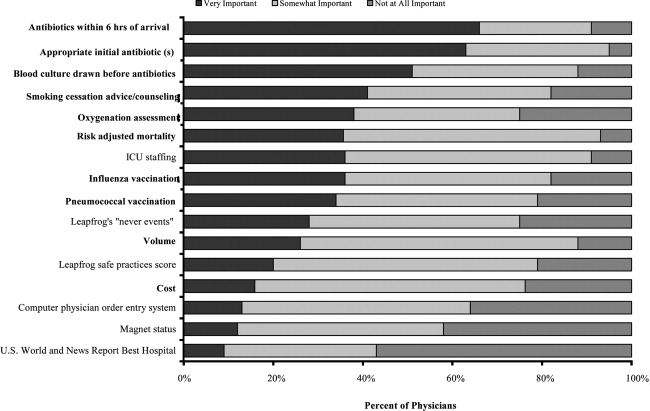

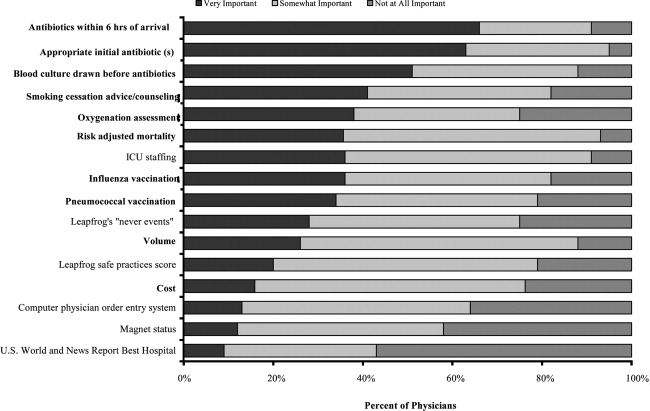

Respondents' ratings of specific quality measures appear in Figure 3. The measures PCPs most often rated very important when judging hospital quality were the percentage of pneumonia patients given initial antibiotics within 6 hours after arrival (66%), the percentage given the most appropriate initial antibiotic (63%), and the percentage whose initial emergency room (ER) blood culture was performed before the first hospital dose of antibiotics (51%). The measures most often rated not at all important were U.S. News & World Report's Best Hospitals designation (57%), Magnet status (42%), and a computerized physician order entry system (40%).

When asked about limitations of publicly reported performance data, 42% agreed that risk‐adjustment methods were inadequate to compare hospitals fairly, 76% agreed that mortality rates were an incomplete indication of the quality of a hospital's care, 62% agreed that hospitals could manipulate the data, and 72% agreed that the ratings were inaccurate for hospitals with small caseloads.

DISCUSSION

In 2003, the Hospital Quality Alliance began a voluntary public reporting program of hospital performance measures for pneumonia, acute myocardial infarction, and congestive heart failure. The program was intended to encourage quality improvement activity by hospitals and to give patients and referring physicians information with which to make better‐informed choices.19 These data are now easily available to the public through a free Web site (

Despite respondents' lack of familiarity with Hospital Compare, the measures they identified as the best indicators of hospital quality were ones reported by Hospital Compare: appropriate initial antibiotic, antibiotics within 6 hours, and blood cultures performed before the administration of antibiotics. In fact, the 5 measures most often rated very important for judging hospital quality were all measures reported on Hospital Compare.

As the US healthcare system becomes increasingly complex and costly, there is growing interest in giving patients physician and hospital performance data to help them select providers.21 It is postulated that if patients took a more active role in choosing healthcare providers and were required to assume greater financial responsibility, consumerism would drive improvements in quality of care while maintaining or even lowering costs.21 However, studies show that most patients are unaware of performance data and that those who are aware still value familiarity over quality ratings.4 Moreover, patients rely on the knowledge of their primary care physician to guide them.5

To our knowledge, this is the first study to examine how primary care physicians use publicly reported quality data in hospital referral decisions. Studies from more than a decade ago found that publicly reported data had minimal impact on referrals from cardiologists to cardiac surgeons. A survey of Pennsylvania cardiologists and cardiac surgeons showed that although 82% were aware of the risk‐adjusted mortality rates published for surgeons, only 10% of cardiologists rated these rates as very important when evaluating the performance of a cardiothoracic surgeon. Furthermore, 87% of cardiologists stated that the mortality and case‐volume information reported on cardiac surgeons had minimal or no influence on their referral practices.10 In 1997, a survey of cardiologists in New York found that only 38% of respondents reported that risk‐adjusted outcome data had affected their referrals to surgeons very much or somewhat.9 In addition, most authors conclude that public reporting has had little or no effect on market share.22 Despite growth in the number of measures and improved accessibility, the physicians we surveyed were even less likely than physicians a decade earlier to be aware of, or to use, publicly reported data.

Of course, even if public reporting does not influence referral patterns, it could still improve healthcare quality in several ways. First, feedback about performance may focus quality improvement activities on specific gaps in care.10 This could work through an appeal to professionalism23 or through the desire to protect one's reputation by not appearing on a list of poor performers.24 Second, hospitals' desire to appear on lists of high performers for marketing purposes, such as U.S. News & World Report's hospital rankings, might stimulate improvement activities.10 Finally, publicly reported measures could form the basis for pay‐for‐performance incentives that further speed improvement.25

Our study has several limitations. First, our sample was small and restricted to 1 region of 1 state, and may not be representative of the state or the nation as a whole. Still, our area has a high level of Internet use, and several local hospitals have been at the vanguard of the quality movement, generally scoring above both state and national averages on Hospital Compare. In addition, Massachusetts has made substantial efforts to promote its own public reporting program, and half the surveyed physicians reported being aware of the Massachusetts Quality and Cost Web site. The fact that not a single physician we surveyed used publicly reported data when making referral decisions is sobering, and we believe it is unlikely that other areas of the country have a substantially higher rate of use. Our response rate was also under 50%, and physicians who did not take the survey may have differed in important ways from those who did. Nevertheless, our sample included a broad range of physician ages, practice types, and affiliations, and it seems unlikely that nonrespondents would be more inclined than respondents to use publicly reported data. Second, we assessed decision‐making around a single medical condition, and physicians may use publicly reported data for other decisions. However, the condition we chose is both urgent (as opposed to emergent) and has a robust set of publicly reported quality measures. If physicians do not use publicly reported data for this decision, it seems unlikely they would use it for conditions that have fewer reliable measures (eg, gallbladder surgery) or for which the choice of hospital is generally made in an ambulance (eg, myocardial infarction).
Finally, because awareness of public reporting was low, some physicians found it difficult to answer questions about publicly reported hospital quality data, as they were unfamiliar with the terminology used by the Web sites (eg, Magnet status, Leapfrog never events). Our results might have differed slightly had a glossary been provided.

Despite these limitations, our study suggests that more than 6 years after the launch of the Hospital Quality Alliance, primary care physicians do not appear to use these data when choosing a hospital for patients with pneumonia. Instead, they rely on familiarity with a hospital and past relationships. Even though most of the physicians surveyed no longer admitted their own patients, they continued to send patients to hospitals where they had privileges. This finding is not surprising: physicians also cling to familiar therapies and may be reluctant to prescribe a new medication or perform an unfamiliar procedure, even when it is indicated. Such reliance on familiarity may make physicians feel comfortable, but it does not always result in the best care for patients. Acquiring familiarity, however, requires time and effort, which physicians generally have in short supply; and while there are plenty of industry representatives to overcome physicians' hesitancy to prescribe new treatments, there are no analogous agents to educate physicians about public reporting or to help them overcome hesitancy about trying a new hospital.

Suspicion about the validity of public reporting may also play a role in physicians' reported behavior. In past studies of cardiac report cards, cardiologists were most concerned that risk‐adjustment methods were inadequate (77%) and that mortality rates were an incomplete indicator of the quality of surgical care (74%). They were less concerned about manipulation of data (52%) or small caseloads (15%).10 Our physicians were similarly concerned that mortality rates were an incomplete measure of quality (76%) but less concerned about risk adjustment (42%), perhaps because many structure and process measures are not subject to risk adjustment. In contrast, they were somewhat more concerned that hospitals could manipulate the data (62%), which again may reflect the reporting of process measures rather than mortality statistics. Other reasons for not using the data may include a lack of awareness of the data or of how to access them, or a belief that hospitals do not vary in quality.

Interestingly, even though most respondents were not aware of Hospital Compare, they rated the measures it reports as the best reflection of overall hospital quality. Also, while respondents indicated that they did not use publicly reported data when referring patients, almost half of PCPs reported that publicly reported performance data were at least somewhat important in choosing their own medical care. Thus, although public reporting appears not to have reached its full potential, some publicly reported quality measures have clearly entered the consciousness of PCPs. In contrast, other highly touted measures, such as computerized physician order entry systems, were not valued, and popular designations such as U.S. News & World Report's Best Hospitals were valued least, even though 1 area hospital carries this designation. One might conclude that CMS should abandon Hospital Compare, since neither patients4 nor providers use it. However, public reporting may improve quality in other ways, and physicians appear interested in the data even if they are not aware of them. Therefore, given the large investment by CMS and individual hospitals in collecting the data required for Hospital Compare, CMS might consider making greater efforts to increase primary care physicians' awareness of the Hospital Compare Web site. At the same time, high‐performing hospitals may want to communicate their performance scores to local PCPs as part of their marketing strategy. Future studies could assess whether such practices affect physician referral decisions and the subsequent market share of high‐performing hospitals.

Acknowledgements

The authors of this study thank Jane Garb for her help with statistical analysis.

- Centers for Medicare and Medicaid Services. National Health Care Expenditures Data. 2010. Available at: http://www.2.cms.gov/NationalHealthExpendData/25_NHE_Fact_Sheet.asp. Accessed April 22, 2010.

- The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348(26):2635–2645.

- The State‐of‐the‐Art of Online Hospital Public Reporting: a Review of Fifty‐One Websites. 2005. Available at: http://www.delmarvafoundation.org/newsAndPublications/reports/documents/WebSummariesFinal9.2.04.pdf. Accessed February 24, 2012.

- Kaiser Family Foundation. 2008 Update on Consumers' Views of Patient Safety and Quality Information. 2010. Available at: http://www.kff.org/kaiserpolls/upload/7819.pdf. Accessed April 20, 2010.

- Choosing where to have major surgery: who makes the decision? Arch Surg. 2007;142(3):242–246.

- Resolving the gatekeeper conundrum: what patients value in primary care and referrals to specialists. JAMA. 1999;282(3):261–266.

- How physicians make referrals. J Health Care Mark. 1993;13(2):6–17.

- Growth in the care of older patients by hospitalists in the United States. N Engl J Med. 2009;360(11):1102–1112.

- ,,,DeBuono BA. Public release of cardiac surgery outcomes data in New York: what do New York state cardiologists think of it?Am Heart J.1997;134(6):1120–1128.

- Schneider EC, Epstein AM. Influence of cardiac‐surgery performance reports on referral practices and access to care. A survey of cardiovascular specialists. N Engl J Med. 1996;335(4):251–256.

- Primary care summary of the British Thoracic Society Guidelines for the management of community acquired pneumonia in adults: 2009 update. Endorsed by the Royal College of General Practitioners and the Primary Care Respiratory Society UK. Prim Care Respir J. 2010;19(1):21–27.

- Hospital Quality Alliance Quality Measures. 2010. Available at: http://www.hospitalqualityalliance.org/hospitalqualityalliance/qualitymeasures/qualitymeasures.html. Accessed April 25, 2010.

- Massachusetts Executive Office of Health and Human Services. Massachusetts Executive Quality and Cost. 2010. Available at: http://www.mass.gov/healthcareqc. Accessed February 24, 2012.

- Centers for Medicare and Medicaid Services. Hospital Compare. 2010. Available at: http://www.hospitalcompare.hhs.gov. Accessed April 19, 2010.

- The Leapfrog Group for Patient Safety. 2010. Available at: http://www.leapfroggroup.org/. Accessed April 23, 2010.

- Health Grades. 2010. Available at: http://www.healthgrades.com. Accessed April 19, 2010.

- American Nurses Credentialing Center. Magnet Recognition Program. 2010. Available at: http://www.nursecredentialing.org/Magnet.aspx. Accessed April 15, 2010.

- U.S. News 353(3):265–274.

- US ads push patients to shop for hospitals. USA Today. May 20, 2008. Available at: http://www.usatoday.com/news/health/2008‐05‐20‐Hospitalads_N.htm. Accessed February 24, 2012.

- How do elderly patients decide where to go for major surgery? Telephone interview survey. BMJ. 2005;331(7520):821.

- Public reporting of cardiac surgery performance: part 1—history, rationale, consequences. Ann Thorac Surg. 2011;92(3 suppl):S2–S11.

- Public reporting of hospital quality: recommendations to benefit patients and hospitals. J Hosp Med. 2009;4(9):541–545.

- When things go wrong: the impact of being a statistical outlier in publicly reported coronary artery bypass graft surgery mortality data. Am J Med Qual. 2008;23(2):90–95.

- Public reporting and pay for performance in hospital quality improvement. N Engl J Med. 2007;356(5):486–496.

Over the past decade, research has demonstrated a value gap in US healthcare, characterized by rapidly rising costs and substandard quality.1, 2 Public reporting of hospital performance data is one of several strategies promoted to help address these deficiencies. To this end, a number of hospital rating services have created Web sites aimed at healthcare consumers.3 These services provide information about multiple aspects of healthcare quality, which in theory might be used by patients when deciding where to seek medical care.

Despite the increasing availability of publicly reported quality data comparing doctors and hospitals, a 2008 survey found that only 14% of Americans have seen and used such information in the past year, a decrease from 2006 (36%).4 A similar study in 2007 found that after seeking input from family and friends, patients generally rely on their primary care physician (PCP) to assist them to make decisions about where to have elective surgery.5 Surprisingly, almost nothing is known about how publicly reported data is used, if at all, by PCPs in the referral of patients to hospitals.

The physician is an important intermediary in the buying process for many healthcare services.6 Tertiary care hospitals depend on physician referrals for much of their patient volume.7 Until the emergence of the hospitalist model of care, most primary care physicians cared for their own hospitalized patients, and thus hospital referral decisions were largely driven by the PCP's admitting privileges. However, following the rapid expansion of the hospitalist movement,8, 9 there has been a sharp decrease in the number of PCPs who provide direct patient care for their hospitalized patients.8 As a result, PCPs may now have more choice in regards to hospital referrals for general medical conditions. Potential factors influencing a PCP's referral decisions might include familiarity with the hospital, care quality, patient convenience, satisfaction with the hospital, or hospital reputation.

Studies of cardiac surgery report cards in New York9 and Pennsylvania,10 conducted in the mid‐1990s, found that cardiologists did not use publicly reported mortality data in referral decisions, nor did they share it with patients. Over the past 2 decades, public reporting has grown exponentially, and now includes many measures of structure, processes, and outcomes for almost all US hospitals, available for free over the Internet. The growth of the patient safety movement and mandated public reporting might also have affected physicians' views about publicly reported quality data. We surveyed primary care physicians to determine the extent to which they use information about hospital quality in their referral decisions for community‐acquired pneumonia, and to identify other factors that might influence referral decisions.

METHODS

We obtained an e‐mail list of primary care physicians from the medical staff offices of all area hospitals within a 10‐mile radius of Springfield, MA (Baystate Medical Center, Holyoke Medical Center, and Mercy Medical Center). Baystate Medical Center is a 659‐bed academic medical center and Level 1 trauma center, while Holyoke and Mercy Medical Center are both 180‐bed acute care hospitals. Physicians were contacted via e‐mail from June through September of 2009, and asked to participate in an anonymous, 10‐minute, online survey accessible through an Internet link (SurveyMonkey.com) about factors influencing a primary care physician's hospital referral choice for a patient with pneumonia. To facilitate participation, we sent 2 follow‐up e‐mail reminders, and respondents who completed the entire survey received a $15 gift card. The study was approved by the institutional review board of Baystate Medical Center and closed to participation on September 23, 2009.

We created the online survey based on previous research7 and approximately 10 key informant interviews. The survey (see Supporting Information, Appendix, in the online version of this article) contained 13 demographic questions and 10 questions based on a case study of pneumonia (Figure 1). The instrument was pilot tested for clarity with a small group of primary care physicians at the author's institution and subsequently modified. We chose pneumonia because it is a common reason for a PCP to make an urgent hospital referral,11 and because there is a well‐established set of quality measures that are publicly reported.12 Unlike elective surgery, for which patients might research hospitals or surgeons on their own, patients with pneumonia would likely rely on their PCP to recommend a hospital for urgent referral. In contrast, PCPs know they will refer a number of pneumonia patients to hospitals each year and therefore might have an interest in comparing the publicly reported quality measures for local hospitals.

Respondents were shown the case study and asked to refer the hypothetical patient to 1 of 4 area hospitals. Respondents were asked to rate (on a 3‐point scale: not at all, somewhat, or very) the importance of the following factors in their referral decision: waiting time in the emergency room, distance traveled by the patient, experience of other patients, severity of patient's illness, patient's insurance, hospital's reputation among other physicians and partners, admitting privileges with a specific hospital, admitting arrangements with a hospitalist group, familiarity with the hospital, availability of subspecialists, quality of subspecialists, nursing quality, nursing staffing ratios, hospital's case volume for pneumonia, publicly available quality measures, patient preference, distance from your practice, shared electronic record system, and quality of hospital discharge summaries. Next, we measured provider's awareness of publicly reported hospital quality data and whether they used such data in referring patients or choosing their own medical care. Specifically, we asked about familiarity with the following 4 Web sites: Massachusetts Quality and Cost (a state‐specific Web site produced by the Massachusetts Executive Office of Health and Human Services)13; Hospital Compare (a Web site developed and maintained by Centers for Medicare and Medicaid Services [CMS] and the Department of Health and Human Services)14; Leapfrog Group (a private, nonprofit organization)15; and Health Grades (a private, for‐profit company).16

We then asked participants to rate the importance of the following performance measures when judging a hospital's performance: antibiotics within 6 hours of arrival to the hospital, appropriate initial antibiotic, blood culture drawn before antibiotics given, smoking cessation advice/counseling, oxygenation assessment, risk‐adjusted mortality, intensive care unit staffing, influenza vaccination, pneumococcal vaccination, Leapfrog's never events,15 volume, Leapfrog safe practices score, cost, computerized physician order entry system, Magnet status,17 and U.S. News & World Report's Best Hospitals designation.18 Lastly, we asked participants to state, using a 3‐point scale (agree, disagree, neutral), their level of agreement that the following factors, adapted from Schneider and Epstein,10 represented limitations of public reporting: 1) risk‐adjusted methods are inadequate to compare hospitals fairly; 2) mortality rates are an incomplete indication of the quality of a hospital's care; 3) hospitals can manipulate the data; and 4) ratings are inaccurate for hospitals with small caseloads.

We used bivariate analysis to identify factors associated with physicians' awareness of publicly reported data. Because all factors were categorical, we used chi‐square tests. No factor had a P value <0.2 in bivariate analysis, so multiple logistic regression was not performed.
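The screening rule described above works as follows: run a bivariate chi‐square test for each factor, and carry a factor into a multiple logistic regression only if its P value is <0.2. The sketch below is a minimal illustration of that rule, not the authors' analysis code. It makes four assumptions:

- Each factor is collapsed to a 2 × 2 table against awareness (aware vs not aware).
- Pearson's statistic is used without a continuity correction; the source does not say which variant was used.
- The function names are hypothetical.
- The counts in the example are invented for illustration and are not study data.

With 1 degree of freedom, the chi‐square statistic is the square of a standard normal variable. This means the P value can be computed from the complementary error function without a statistics library.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2 x 2 table (hypothetical helper).

    table: [[a, b], [c, d]]; rows are the levels of a factor (eg, male/female),
    columns are aware / not aware of public reporting Web sites.
    Returns (statistic, p_value) with 1 degree of freedom and no continuity correction.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total * column total / n.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected

    # With 1 df the statistic equals Z**2 for a standard normal Z, so
    # P(chi-square > statistic) = P(|Z| > sqrt(statistic)) = erfc(sqrt(statistic / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


def screen_for_regression(tables, threshold=0.2):
    """Names of factors whose bivariate P value is below the screening threshold.

    Only these factors would be entered into a multiple logistic regression.
    """
    return [name for name, table in tables.items()
            if chi_square_2x2(table)[1] < threshold]


# Hypothetical counts, not study data: rows = male, female; columns = aware, not aware.
example = {"gender": [[40, 25], [16, 11]]}
print(screen_for_regression(example))  # prints [] (P is about 0.84), so no regression is fitted
```

Factors with more than 2 levels, such as the 4 age groups, would need an r × c table and a chi‐square distribution with (r − 1)(c − 1) degrees of freedom. For those, a library routine such as SciPy's `chi2_contingency` is the usual choice. Note that it applies Yates' continuity correction to 2 × 2 tables by default.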

RESULTS

Of 194 primary care physicians who received invitations, 92 responded (response rate, 47%). Respondents' characteristics are shown in Table 1. All age groups were represented; most respondents were male and 35–54 years of age. Respondents were roughly evenly divided between those who owned their own practices (54%) and those working for a health system (46%). Ninety‐three percent of PCPs maintained admitting privileges (45% at more than 1 hospital), but only 20% continued to admit their own patients. When asked where they would send a hypothetical pneumonia patient, only 4% of PCPs chose a hospital at which they had never had admitting privileges.

| Variable | No. (%) of Respondents |
|---|---|
| Age, y | |
| 25–34 | 5 (5) |
| 35–44 | 27 (29) |
| 45–54 | 24 (26) |
| >55 | 36 (39) |
| Gender | |
| Male | 65 (71) |
| Female | 27 (29) |
| Years out of medical school | |
| <6 | 6 (7) |
| 6–10 | 9 (10) |
| 11–15 | 17 (18) |
| >15 | 60 (65) |
| % Patients seen who are covered by | |
| Medicaid: Mean (SD) | 28 (26) |
| Medicare: Mean (SD) | 31 (18) |
| Private: Mean (SD) | 40 (25) |
| % Time doing patient care: Mean (SD) | 85 (23) |
| No. of patients admitted/sent to hospital/mo | |
| <6 | 40 (47) |
| 6–10 | 25 (29) |
| 11–20 | 12 (14) |
| >20 | 8 (9) |
| Practice type | |
| Solo | 13 (15) |
| Single‐specialty group | 36 (42) |
| Multispecialty group | 36 (42) |
| Practice ownership | |
| Independent | 45 (54) |
| Health system | 38 (46) |
| Currently admits own patients | |
| Yes | 17 (20) |
| No | 66 (80) |
| Current hospital admitting privileges | |
| A | 63 (76) |
| B | 41 (49) |
| C | 3 (4) |
| D | 12 (14) |
| None | 6 (7) |
| Other | 2 (2) |
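The percentages in Table 1 are consistent with item‐specific denominators:

- The counts sum to 92 for age, gender, and years out of medical school.
- They sum to 85 for monthly admissions and practice type.
- They sum to 83 for practice ownership and current admitting status.

Presumably some respondents skipped later items; this is an assumption inferred from the column sums, and the text does not state it. Admitting privileges is a multiple‐response item, so its counts exceed 83, while each percentage still appears to use 83 as the denominator.

The short sketch below assumes those inferred denominators and recomputes several rows, the 47% response rate, and the 93% of PCPs holding privileges at any hospital (everyone except the "None" group). The helper names are illustrative.

```python
def pct(count: int, denominator: int) -> int:
    """Return count/denominator as a whole-number percentage, as Table 1 reports them."""
    return round(100 * count / denominator)


def row_percentages(counts: dict[str, int], denominator: int) -> dict[str, int]:
    """Percentage for each category of one Table 1 item (hypothetical helper)."""
    return {label: pct(n, denominator) for label, n in counts.items()}


# Response rate: 92 of 194 invited physicians responded.
response_rate = pct(92, 194)

# Age: single-choice item whose counts sum to 92, so 92 is the denominator.
age = row_percentages({"25-34": 5, "35-44": 27, "45-54": 24, ">55": 36}, 92)

# Monthly admissions: counts sum to 85 (assumption: 7 respondents skipped this item).
admissions = row_percentages({"<6": 40, "6-10": 25, "11-20": 12, ">20": 8}, 85)

# Admitting privileges: multiple-response item, so counts sum to more than the
# 83 respondents assumed to have answered it; each percentage still divides by 83.
privileges = row_percentages(
    {"A": 63, "B": 41, "C": 3, "D": 12, "None": 6, "Other": 2}, 83
)

# Share with privileges at any hospital = everyone except the "None" group.
any_privileges = pct(83 - 6, 83)

print(response_rate, age, admissions, privileges, any_privileges)
```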

Physicians' ratings of the importance of various factors in their referral decisions are shown in Figure 2. The factors most often rated very important were familiarity with the hospital (70%), patient preference (62%), and admitting arrangements with a hospitalist group (62%). In contrast, only 18% of physicians rated publicly available hospital quality measures as very important when making a referral decision. The factors most often rated not at all important were the patient's insurance (48%), the hospital's case volume for pneumonia (48%), and publicly available quality measures (42%).

Sixty‐one percent of respondents were aware of Web sites that report hospital quality. Fifty‐two percent were familiar with Massachusetts Quality and Cost, whereas only 27% were familiar with Hospital Compare. None of the physicians surveyed reported having used publicly reported quality information when making a referral decision or having discussed such data with their patients. However, 49% stated that publicly reported performance data were somewhat important, and 10% that they were very important, to decisions about the medical care they themselves receive. None of the demographic characteristics we assessed (including age, gender, and years out of medical school) was associated with awareness of publicly reported data in bivariate analyses.

Respondents' ratings of specific quality measures appear in Figure 3. The measures PCPs most often rated very important when judging hospital quality were the percentage of pneumonia patients given initial antibiotics within 6 hours after arrival (66%), the percentage given the most appropriate initial antibiotic (63%), and the percentage whose initial emergency room (ER) blood culture was performed before the first hospital dose of antibiotics (51%). The measures most often rated not at all important were U.S. News & World Report's Best Hospitals designation (57%), Magnet status (42%), and computerized physician order entry system (40%).

When asked about limitations of publicly reported performance data:

- 42% agreed that risk‐adjusted methods were inadequate to compare hospitals fairly.
- 76% agreed that mortality rates were an incomplete indication of the quality of a hospital's care.
- 62% agreed that hospitals could manipulate the data.
- 72% agreed that the ratings were inaccurate for hospitals with small caseloads.

DISCUSSION

In 2003, the Hospital Quality Alliance began a voluntary program of public reporting of hospital performance measures for pneumonia, acute myocardial infarction, and congestive heart failure. The program was intended to encourage quality improvement by hospitals and to give patients and referring physicians the information to make better‐informed choices.19 These data are now easily available to the public through a free Web site (

Despite their lack of familiarity with Hospital Compare, respondents identified the measures it reports as the best indicators of hospital quality: appropriate initial antibiotic, antibiotics within 6 hours, and blood cultures performed before the administration of antibiotics. In fact, the 5 measures most often rated very important for judging hospital quality are all reported on Hospital Compare.

As the US healthcare system becomes increasingly complex and costly, there is growing interest in providing patients with physician and hospital performance data to help them select providers.21 It is postulated that if patients took a more active role in choosing healthcare providers, and were required to assume greater financial responsibility, consumerism would drive improvements in quality of care while maintaining or even lowering costs.21 However, studies show that most patients are unaware of performance data and that, even when aware, they still value familiarity over quality ratings.4 Moreover, patients rely on their primary care physician's knowledge to guide them.5

To our knowledge, this is the first study to examine how primary care physicians use publicly reported quality data in hospital referral decisions. Studies from more than a decade ago found that publicly reported data had minimal impact on cardiologists' referrals to cardiac surgeons. In a survey of Pennsylvania's cardiologists and cardiac surgeons, 82% were aware of the risk‐adjusted mortality rates published for surgeons. Yet only 10% of cardiologists rated these rates very important when evaluating the performance of a cardiothoracic surgeon. Furthermore, 87% of cardiologists stated that mortality and case volume information reported on cardiac surgeons had minimal or no influence on their referral practices.10 In 1997, a survey of cardiologists in New York found that only 38% of respondents reported that risk‐adjusted outcome data had affected their referrals to surgeons very much or somewhat.9 In addition, most authors conclude that public reporting has had little or no effect on market share.22 Despite growth in the number of measures and improved accessibility, the physicians we surveyed were even less likely to be aware of, or to use, publicly reported data than physicians a decade earlier.

Of course, even if public reporting does not influence referral patterns, it could still improve healthcare quality in several ways. First, feedback about performance may focus quality improvement activities on specific gaps in care.10 This could take the form of an appeal to professionalism,23 or of the desire to preserve one's reputation by not appearing on a list of poor performers.24 Second, hospitals' desire to appear on lists of high performers for marketing purposes, such as U.S. News & World Report's hospital rankings, might stimulate improvement activities.10 Finally, publicly reported measures could form the basis for pay‐for‐performance incentives that further speed improvement.25

Our study has several limitations.

First, our sample was small and restricted to 1 region of 1 state, and may not be representative of the state or the nation as a whole. Still, our area has a high level of Internet use, and several local hospitals have been at the vanguard of the quality movement, generally scoring above both state and national averages on Hospital Compare. In addition, Massachusetts has made substantial efforts to promote its own public reporting program, and half the surveyed physicians reported being aware of the Massachusetts Quality and Cost Web site. The fact that not a single area physician surveyed used publicly reported data when making referral decisions is sobering. We believe it is unlikely that other areas of the country would have a substantially higher rate of use.

Our response rate was also under 50%, and physicians who did not take the survey may have differed in important ways from those who did. Nevertheless, our sample included a broad range of physician ages, practice types, and affiliations. It seems unlikely that nonrespondents would be more inclined to use publicly reported data than respondents.

Second, we assessed decision‐making around a single medical condition, and physicians may use publicly reported data for other decisions. However, the condition we chose is both urgent (as opposed to emergent) and has a robust set of publicly reported quality measures. If physicians do not use publicly reported data for this decision, it seems unlikely that they would use it for conditions that have fewer reliable measures (eg, gallbladder surgery) or for which the choice of hospital is generally made in an ambulance (eg, myocardial infarction).

Finally, because awareness of public reporting was low, some physicians found it difficult to answer questions about publicly reported hospital quality data; they were unfamiliar with the terminology used by the Web sites (eg, Magnet status, Leapfrog never events). Our results might have differed slightly had a glossary been provided.

Despite these limitations, our study suggests that more than 6 years after the launch of the Hospital Quality Alliance, primary care physicians do not appear to use these data when choosing a hospital for their patients with pneumonia. Instead, they rely on familiarity with a hospital and past relationships. Even though most of the physicians surveyed no longer admitted their own patients, they continued to send patients to hospitals where they had privileges. This finding is not surprising: physicians also cling to familiar therapies and may be reluctant to prescribe a new medication or perform an unfamiliar procedure, even when it is indicated. Such reliance on familiarity may make physicians feel comfortable, but it does not always result in the best care for patients. Acquiring familiarity, however, requires time and effort, which physicians generally have in short supply. And while there are plenty of industry representatives to help overcome physicians' hesitancy to prescribe new treatments, there are no analogous agents to educate physicians about public reporting or to help them overcome hesitancy about trying a new hospital.

Suspicion about the validity of public reporting may also play a role in physicians' reported behavior. In past studies of cardiac surgery report cards, cardiologists were most concerned that risk‐adjustment methods were inadequate (77%) and that mortality rates were an incomplete indicator of the quality of surgical care (74%). They were less concerned about manipulation of data (52%) or small caseloads (15%).10 The physicians we surveyed were similarly concerned that mortality rates were an incomplete measure of quality (76%) but less concerned about risk adjustment (42%). This may be because many structure and process measures are not subject to risk adjustment. In contrast, they were somewhat more concerned that hospitals could manipulate the data (62%). This again may reflect the shift in reporting from mortality statistics toward process measures. Other reasons for not using the data may include a lack of awareness of the data or of how to access them, or a belief that hospitals do not vary in quality.

Interestingly, even though most respondents were not aware of Hospital Compare, they rated the measures it reports as the best reflection of overall hospital quality. Also, although respondents indicated that they did not use publicly reported data when referring patients, more than half (59%) reported that publicly reported performance data were at least somewhat important in choosing their own medical care. Thus, although public reporting appears not to have reached its full potential, some publicly reported quality measures have clearly entered the consciousness of PCPs. In contrast, other highly touted measures, such as computerized physician order entry systems, were not valued. Popular designations such as U.S. News & World Report's Best Hospitals were valued least, even though 1 area hospital carries this designation.

One might conclude that CMS should abandon Hospital Compare, since neither patients4 nor providers use it. However, public reporting may improve quality in other ways, and physicians appear interested in the data even if they are unaware of it. Therefore, given the large investment by CMS and individual hospitals in collecting the data required for Hospital Compare, CMS might consider making greater efforts to increase primary care physicians' awareness of the Hospital Compare Web site. At the same time, high‐performing hospitals may want to communicate their performance scores to local PCPs as part of their marketing strategy. Future studies could assess whether such practices affect physicians' referral decisions and the market share of high‐performing hospitals.

Acknowledgements

The authors of this study thank Jane Garb for her help with statistical analysis.


Studies of cardiac surgery report cards in New York9 and Pennsylvania,10 conducted in the mid‐1990s, found that cardiologists did not use publicly reported mortality data in referral decisions, nor did they share it with patients. Over the past 2 decades, public reporting has grown exponentially, and now includes many measures of structure, processes, and outcomes for almost all US hospitals, available for free over the Internet. The growth of the patient safety movement and mandated public reporting might also have affected physicians' views about publicly reported quality data. We surveyed primary care physicians to determine the extent to which they use information about hospital quality in their referral decisions for community‐acquired pneumonia, and to identify other factors that might influence referral decisions.

METHODS

We obtained an e‐mail list of primary care physicians from the medical staff offices of all area hospitals within a 10‐mile radius of Springfield, MA (Baystate Medical Center, Holyoke Medical Center, and Mercy Medical Center). Baystate Medical Center is a 659‐bed academic medical center and Level 1 trauma center; Holyoke Medical Center and Mercy Medical Center are both 180‐bed acute care hospitals. From June through September 2009, physicians were contacted via e‐mail and asked to take an anonymous, 10‐minute online survey (SurveyMonkey.com). The survey asked about factors influencing a primary care physician's choice of hospital for a patient with pneumonia. To encourage participation, we sent 2 follow‐up e‐mail reminders, and respondents who completed the entire survey received a $15 gift card. The study was approved by the institutional review board of Baystate Medical Center, and the survey closed to participation on September 23, 2009.

We created the online survey based on previous research7 and approximately 10 key informant interviews. The survey (see Supporting Information, Appendix, in the online version of this article) contained 13 demographic questions and 10 questions based on a case study of pneumonia (Figure 1). The instrument was pilot tested for clarity with a small group of primary care physicians at the authors' institution and subsequently modified. We chose pneumonia because it is a common reason for a PCP to make an urgent hospital referral,11 and because a well‐established set of quality measures for it is publicly reported.12 Patients undergoing elective surgery might research hospitals or surgeons on their own, but patients with pneumonia would likely rely on their PCP to recommend a hospital for urgent referral. PCPs, in turn, know they will refer a number of pneumonia patients to hospitals each year and therefore might have an interest in comparing the publicly reported quality measures for local hospitals.

Respondents were shown the case study and asked to refer the hypothetical patient to 1 of 4 area hospitals. Respondents were asked to rate (on a 3‐point scale: not at all, somewhat, or very) the importance of the following factors in their referral decision: waiting time in the emergency room, distance traveled by the patient, experience of other patients, severity of patient's illness, patient's insurance, hospital's reputation among other physicians and partners, admitting privileges with a specific hospital, admitting arrangements with a hospitalist group, familiarity with the hospital, availability of subspecialists, quality of subspecialists, nursing quality, nursing staffing ratios, hospital's case volume for pneumonia, publicly available quality measures, patient preference, distance from your practice, shared electronic record system, and quality of hospital discharge summaries. Next, we measured provider's awareness of publicly reported hospital quality data and whether they used such data in referring patients or choosing their own medical care. Specifically, we asked about familiarity with the following 4 Web sites: Massachusetts Quality and Cost (a state‐specific Web site produced by the Massachusetts Executive Office of Health and Human Services)13; Hospital Compare (a Web site developed and maintained by Centers for Medicare and Medicaid Services [CMS] and the Department of Health and Human Services)14; Leapfrog Group (a private, nonprofit organization)15; and Health Grades (a private, for‐profit company).16

We then asked participants to rate the importance of the following performance measures when judging a hospital's performance: antibiotics within 6 hours of arrival to the hospital, appropriate initial antibiotic, blood culture drawn before antibiotics given, smoking cessation advice/counseling, oxygenation assessment, risk‐adjusted mortality, intensive care unit staffing, influenza vaccination, pneumococcal vaccination, Leapfrog's never events,15 volume, Leapfrog safe practices score, cost, computerized physician order entry system, Magnet status,17 and U.S. News & World Report's Best Hospitals designation.18 Lastly, we asked participants to state, using a 3‐point scale (agree, disagree, neutral), their level of agreement that the following factors, adapted from Schneider and Epstein,10 represented limitations of public reporting: 1) risk‐adjusted methods are inadequate to compare hospitals fairly; 2) mortality rates are an incomplete indication of the quality of a hospital's care; 3) hospitals can manipulate the data; and 4) ratings are inaccurate for hospitals with small caseloads.

Factors associated with physicians' knowledge of publicly reported data were analyzed with bivariate analysis. Since all factors are categorical, chi‐square analysis was used for bivariate analysis. No factor had a P value <0.2 on bivariate analysis, thus multiple logistic regression was not performed.

RESULTS

Of 194 primary care physicians who received invitations, 92 responded (response rate of 47%). See Table 1 for respondents' characteristics. All age groups were represented; most were male and between 3554 years of age. Respondents were evenly divided between those who owned their own practices (54%) and those working for a health system (46%). Ninety‐three percent of PCPs maintained admitting privileges (45% to more than 1 hospital), but only 20% continued to admit their own patients. When asked where they would send a hypothetical pneumonia patient, only 4% of PCPs chose a hospital to which they had never had admitting privileges.

| Variable | No. (%) of Respondents |

|---|---|

| Age | |

| 2534 | 5 (5) |

| 3544 | 27 (29) |

| 4554 | 24 (26) |

| >55 | 36 (39) |

| Gender | |

| Male | 65 (71) |

| Female | 27 (29) |

| Years out of medical school | |

| <6 | 6 (7) |

| 610 | 9 (10) |

| 1115 | 17 (18) |

| >15 | 60 (65) |

| % Patients seen who are covered by | |

| Medicaid: Mean (SD) | 28 (26) |

| Medicare: Mean (SD) | 31 (18) |

| Private: Mean (SD) | 40 (25) |

| Number of time doing patient care: Mean (SD) | 85 (23) |

| Number of patients admitted/sent to hospital/mo | |

| <6 | 40 (47) |

| 610 | 25 (29) |

| 1120 | 12 (14) |

| >20 | 8 (9) |

| Practice type | |

| Solo | 13 (15) |

| Single specialty group | 36 (42) |

| Multi‐specialty group | 36 (42) |

| Practice ownership | |

| Independent | 45 (54) |

| Health system | 38 (46) |

| Currently admits own patients | |

| Yes | 17 (20) |

| No | 66 (80) |

| Current hospital admitting privileges | |

| A | 63 (76) |

| B | 41 (49) |

| C | 3 (4) |

| D | 12 (14) |

| None | 6 (7) |

| Other | 2 (2) |

Physician's ratings of the importance of various factors in their referral decision are shown in Figure 2. The following factors were most often considered very important: familiarity with the hospital (70%), patient preference (62%), and admitting arrangements with a hospitalist group (62%). In contrast, only 18% of physicians viewed publicly available hospital quality measures as very important when making a referral decision. Factors most often rated not at all important to participants' decisions were patient insurance (48%), hospital's case volume for pneumonia (48%), and publicly available quality measures (42%).

Of the 61% who were aware of Web sites that report hospital quality, most (52%) were familiar with Massachusetts Quality and Cost, while few (27%) were familiar with Hospital Compare. None of the physicians we surveyed reported having used publicly reported quality information when making a referral decision or having discussed such data with their patients. However, 49% stated that publicly reported performance data was somewhat and 10% very important to decisions regarding the medical care they receive. None of the demographic characteristics that we assessed (including age, gender, or years out of medical school) were associated with awareness of publicly reported data in bivariate analyses.

Respondents' ratings of specific quality measures appear in Figure 3. PCPs most often identified the following factors as being very important when judging hospital quality: percent of pneumonia patients given initial antibiotics within 6 hours after arrival (66%), percent of pneumonia patients given the most appropriate initial antibiotic (63%), and percent of pneumonia patients whose initial emergency room (ER) blood culture was performed prior to the administration of the first hospital dose of antibiotics (51%). The factors most often rated not at all important included: U.S. News & World Report's Best Hospitals designation (57%), Magnet Status (42%), and computer physician order entry system (40%).

When asked about limitations of publicly reported performance data, 42% agreed that risk‐adjusted methods were inadequate to compare hospitals fairly, 76% agreed that mortality rates were an incomplete indication of the quality of hospitals care, 62% agreed that hospitals could manipulate the data, and 72% agreed that the ratings were inaccurate for hospitals with small caseloads.

DISCUSSION

In 2003, the Hospital Quality Alliance began a voluntary public reporting program of hospital performance measures, for pneumonia, acute myocardial infarction, and congestive heart failure, that was intended to encourage quality improvement activity by hospitals, and to provide patients and referring physicians with information to make better‐informed choices.19 These data are now easily available to the public through a free Web site (

Despite their lack of familiarity with Hospital Compare, it was the quality measures that are reported by Hospital Compare that they identified as the best indicators of hospital quality: appropriate initial antibiotic, antibiotics within 6 hours, and blood cultures performed prior to the administration of antibiotics. In fact, the 5 measures most often cited as very important to judging hospital quality were all measures reported on Hospital Compare.

As the US healthcare system becomes increasingly complex and costly, there is a growing interest in providing patients with physician and hospital performance data to help them select the provider.21 It is postulated that if patients took a more active role in choosing healthcare providers, and were forced to assume greater financial responsibility, then consumerism will force improvements in quality of care while maintaining or even lowering costs.21 However, studies demonstrate that most patients are unaware of performance data and, if they are aware, still value familiarity over quality ratings.4 Moreover, patients rely on the knowledge of their primary care physician to guide them.5

This is the first study we are aware of that examines how primary care physicians use publicly reported quality data in hospital referral decisions. Studies from more than a decade ago found that publicly reported data had minimal impact on referral decisions from cardiologists to cardiac surgeons. A survey of Pennsylvania's cardiologists and cardiac surgeons showed that although 82% were aware of risk‐adjusted mortality rates published for surgeons, only 10% of cardiologists reported these to be very important when evaluating the performance of a cardiothoracic surgeon. Furthermore, 87% of cardiologists stated that mortality and case volume information reported on cardiac surgeons had minimal or no influence on their referral practices.10 In 1997, a survey of cardiologists in New York found that only 38% of respondents reported that risk‐adjusted outcome data had affected their referrals to surgeons very much or somewhat.9 In addition, most authors conclude that public reporting has had little or no effect on market share.22 Despite growth in the number of measures and improved accessibility, our physicians were even less likely to be aware of, or use, publicly reported data than physicians a decade earlier.

Of course, even if public reporting does not influence referral patterns, it could still improve healthcare quality in several ways. First, feedback about performance may focus quality improvement activities in specific areas that represent gaps in care.10 This could take the form of an appeal to professionalism,23 or the desire to preserve one's reputation by not appearing on a list of poor performers.24 Second, hospitals' desire to appear on lists of high performers, such as U.S. News & World Report's hospital rankings, for marketing purposes, might stimulate improvement activities.10 Finally, publicly reported measures could form the basis for pay‐for‐performance incentives that further speed improvement.25

Our study has several limitations. First, our sample size was small and restricted to 1 region of 1 state, and may not be representative of either the state or nation as a whole. Still, our area has a high level of Internet use, and several local hospitals have been at the vanguard of the quality movement, generally scoring above both state and national averages on Hospital Compare. In addition, Massachusetts has made substantial efforts to promote its own public reporting program, and half the surveyed physicians reported being aware of the Massachusetts Quality and Cost Web site. The fact that not a single area physician surveyed used publicly reported data when making referral decisions is sobering. We believe it is unlikely that other areas of the country will have a substantially higher rate of use. Similarly, our response rate was under 50%. Physicians who did not take the survey may have differed in important ways from those who did. Nevertheless, our sample included a broad range of physician ages, practice types, and affiliations. It seems unlikely that those who did not respond would be more inclined to use publicly reported data than those who did. Second, we assessed decision‐making around a single medical condition. Physicians may have used publicly reported data for other decisions. However, the condition we chose was both urgent (as opposed to emergent) and possesses a robust set of publicly reported quality measures. If physicians do not use publicly reported data for this decision, it seems unlikely they would use it for conditions that have fewer reliable measures (eg, gall bladder surgery) or where the choice of hospital is generally made in an ambulance (eg, myocardial infarction). 
Finally, the low awareness of public reporting made it difficult for some physicians to answer some of the questions regarding publicly reported hospital quality data because they were unfamiliar with the language utilized by the Web sites (eg, magnet status, Leapfrog never events). It is possible that our results may have been altered slightly if a glossary had been provided.

Despite these limitations, our study suggests that more than 6 years after the launch of the Hospital Quality Alliance, primary care physicians do not appear to make use of these data when choosing a hospital for their patients suffering from pneumonia. Instead, they rely on familiarity with a hospital and past relationships. Even though a majority of the physicians surveyed no longer admitted their own patients, they continue to send patients to hospitals where they had privileges. This finding is not surprising, as physicians also cling to familiar therapies, and may be reluctant to prescribe a new medication or perform an unfamiliar procedure, even if it is indicated. Such reliance on familiarity may make physicians feel comfortable, but does not always result in the best care for patients. Acquiring familiarity, however, requires time and effort, something that physicians generally have in short supply; and while there are plenty of industry representatives to overcome physicians' hesitancy to prescribe new treatments, there are no analogous agents to educate physicians about public reporting or to help them overcome hesitancy about trying a new hospital.

Suspicion about the validity of public reporting may also play a role in the physicians' reported behavior. In past studies of cardiac report cards, cardiologists were most concerned that risk adjustment methods were inadequate (77%) and that mortality rates were an incomplete indicator of the quality of surgical care (74%); they were less concerned about manipulation of data (52%) or small caseloads (15%).10 Our physicians were also concerned that mortality rates were an incomplete measure of quality (76%) but were less concerned about risk adjustment (42%), perhaps because many structure and process measures are not subject to risk adjustment. In contrast, they were somewhat more concerned that hospitals could manipulate the data (62%), which again may reflect the current focus on process measures rather than mortality statistics. Other reasons for not using the data may include a lack of awareness of the data or of how to access it, or a belief that hospitals do not vary in quality.

Interestingly, even though most respondents were not aware of Hospital Compare, they found the information presented there to best reflect overall hospital quality. Also, while respondents indicated that they did not use publicly reported data when referring patients, almost half of PCPs reported that publicly reported performance data was at least somewhat important in choosing their own medical care. Thus, although public reporting appears not to have reached its full potential, some publicly reported quality measures have clearly entered the consciousness of PCPs. In contrast, other highly touted measures, such as computerized physician order entry systems, were given little weight, and popular designations such as U.S. News & World Report's Best Hospitals were least valued, even though 1 area hospital carries this designation. One conclusion might be that CMS should abandon Hospital Compare, since neither patients4 nor providers use it. However, public reporting may improve quality in other ways. Moreover, physicians appear interested in the data even if they are not aware of it. Therefore, given the large investment by CMS and individual hospitals in collecting the data required for Hospital Compare, CMS might consider making greater efforts to increase primary care physician awareness of the Hospital Compare Web site. At the same time, high‐performing hospitals may want to communicate their performance scores to local PCPs as part of their marketing strategy. Future studies could assess whether such practices affect physician referral decisions and the subsequent market share of high‐performing hospitals.

Acknowledgements

The authors of this study thank Jane Garb for her help with statistical analysis.

- Centers for Medicare and Medicaid Services. National Health Care Expenditures Data. 2010. Available at: http://www.2.cms.gov/NationalHealthExpendData/25_NHE_Fact_Sheet.asp. Accessed April 22, 2010.

- ,,, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348(26):2635–2645.

- ,. The State‐of‐the‐Art of Online Hospital Public Reporting: a Review of Fifty‐One Websites. 2005. Available at: http://www.delmarvafoundation.org/newsAndPublications/reports/documents/WebSummariesFinal9.2.04.pdf. Accessed February 24, 2012.

- . Kaiser Family Foundation. 2008 Update on Consumers' Views of Patient Safety and Quality Information. 2010. Available at: http://www.kff.org/kaiserpolls/upload/7819.pdf. Accessed April 20, 2010.

- ,,. Choosing where to have major surgery: who makes the decision? Arch Surg. 2007;142(3):242–246.

- ,,, et al. Resolving the gatekeeper conundrum: what patients value in primary care and referrals to specialists. JAMA. 1999;282(3):261–266.

- ,,,. How physicians make referrals. J Health Care Mark. 1993;13(2):6–17.

- ,,,. Growth in the care of older patients by hospitalists in the United States. N Engl J Med. 2009;360(11):1102–1112.

- ,,, DeBuono BA. Public release of cardiac surgery outcomes data in New York: what do New York state cardiologists think of it? Am Heart J. 1997;134(6):1120–1128.

- ,. Influence of cardiac‐surgery performance reports on referral practices and access to care. A survey of cardiovascular specialists. N Engl J Med. 1996;335(4):251–256.

- ,,,,. Primary care summary of the British Thoracic Society Guidelines for the management of community acquired pneumonia in adults: 2009 update. Endorsed by the Royal College of General Practitioners and the Primary Care Respiratory Society UK. Prim Care Respir J. 2010;19(1):21–27.

- Hospital Quality Alliance Quality Measures. 2010. Available at: http://www.hospitalqualityalliance.org/hospitalqualityalliance/qualitymeasures/qualitymeasures.html. Accessed April 25, 2010.

- Massachusetts Executive Office of Health and Human Services. Massachusetts Executive Quality and Cost. 2010. Available at: http://www.mass.gov/healthcareqc. Accessed February 24, 2012.

- Centers for Medicare and Medicaid Services. Hospital Compare. 2010. Available at: http://www.hospitalcompare.hhs.gov. Accessed April 19, 2010.

- The Leapfrog Group for Patient Safety. 2010. Available at: http://www.leapfroggroup.org/. Accessed April 23, 2010.

- Health Grades. 2010. Available at: http://www.healthgrades.com. Accessed April 19, 2010.

- American Nurses Credentialing Center. Magnet Recognition Program. 2010. Available at: http://www.nursecredentialing.org/Magnet.aspx. Accessed April 15, 2010.

- U.S. News 353(3):265–274.

- . US ads push patients to shop for hospitals. USA Today. May 20, 2008. Available at: http://www.usatoday.com/news/health/2008‐05‐20‐Hospitalads_N.htm. Accessed February 24, 2012.

- ,,. How do elderly patients decide where to go for major surgery? Telephone interview survey. BMJ. 2005;331(7520):821.

- ,,, et al. Public reporting of cardiac surgery performance: part 1—history, rationale, consequences. Ann Thorac Surg. 2011;92(3 suppl):S2–S11.

- ,,. Public reporting of hospital quality: recommendations to benefit patients and hospitals. J Hosp Med. 2009;4(9):541–545.

- ,,, et al. When things go wrong: the impact of being a statistical outlier in publicly reported coronary artery bypass graft surgery mortality data. Am J Med Qual. 2008;23(2):90–95.

- ,,, et al. Public reporting and pay for performance in hospital quality improvement. N Engl J Med. 2007;356(5):486–496.

Copyright © 2012 Society of Hospital Medicine