Communicating Effectively With Hospitalized Patients and Families During the COVID-19 Pandemic

For parents of children with medical complexity (CMC), bringing a child to the hospital for needed expertise, equipment, and support is necessarily accompanied by a loss of power, freedom, and control. Two of our authors (K.L., P.M.) are parents of CMC—patients affectionately known as “frequent flyers” at their local hospitals. When health needs present, these experienced parents quickly identify what can be managed at home and what needs a higher level of care. The autonomy and security that accompany this parental expertise have been mitigated by, and in some cases even lost in, the COVID-19 pandemic. In particular, one of the most obvious changes to patients’ and families’ roles in inpatient care has been in communication practices, including changes to patient- and family-centered rounding that result from current isolation procedures and visitation policies. Over the past few months, we’ve learned a tremendous amount from providers and caregivers of hospitalized patients; in this article, we share some of what they’ve taught us.

Before we continue, we take a humble pause. The process of writing this piece spanned weeks during which certain areas of the world were overwhelmed. Our perspective has been informed by others who shared their experiences, and as a result, our health systems are more prepared. We offer this perspective recognizing the importance of learning from others and feeling a sense of gratitude to the providers and patients on the front lines.

CHANGING CIRCUMSTANCES OF CARE

As a group of parents, nurses, physicians, educators, and researchers who have spent the last 10 years studying how to communicate more effectively in the healthcare setting,1,2 we find ourselves in uncharted territory. Even now, we are engaged in an ongoing mentored implementation program examining the effects of a communication bundle on patient- and family-centered rounds (PFCRs) at 21 teaching hospitals across North America (the SHM I-PASS SCORE Study).3 COVID-19 has put that study on hold, and we have taken a step back to reassess the most basic communication needs of patients and families under any circumstance.

Even among our study group, our family advisors have also been on the front lines as patients and caregivers. One author (P.M.) shared a recent experience that she and her son, John Michael, had:

“My son [who has autoimmune hepatitis and associated conditions] began coughing and had an intense sinus headache. As his symptoms continued, our concern steadily grew: Could we push through at home or would we have to go in [to the hospital] to seek care? My mind raced. We faced this decision many times, but never with the overwhelming threat of COVID-19 in the equation. My son, who is able to recognize troublesome symptoms, was afraid his sinuses were infected and decided that we should go in. My heart sank.”

Now, amid the COVID-19 pandemic, patients like John Michael, who are accustomed to the healthcare setting, are “terrified with this additional concern of just being safe in the hospital,” reported a member of our Family Advisory Council. One of our members added, “We recognize this extends to the providers as well, who maintain great care despite their own family and personal safety concerns.” Although families affirmed the necessity of the enhanced isolation procedures and strict visitation policies, they also highlighted the effects of these changes on usual communication practices, including PFCRs.

CORE VALUES DURING COVID-19

In response to these sentiments, we reached out to all of our family advisors, as well as other team members, for suggestions on how healthcare teams could help patients and families best manage their hospital experiences in the setting of COVID-19. Additionally, we asked our physician and nursing colleagues across health systems about current inpatient unit adaptations. Their suggestions and adaptations reinforced and directly aligned with some of the core values of family engagement and patient- and family-centered care,4 namely, (1) prioritizing communication, (2) maintaining active engagement with patients and families, and (3) enhancing communication with technology.

Prioritizing Communication

Timely and clear communication can help providers manage the expectations of patients and families, build patient and family feelings of confidence, and reduce their feelings of anxiety and vulnerability. Almost universally, families acknowledged the importance of infection control and physical distancing measures while fearing that decreased entry into rooms would lead to decreased communication. “Since COVID-19 is contagious, families will want to see every precaution taken … but in a way that doesn’t cut off communication and leave an already sick and scared child and their family feeling emotionally isolated in a scary situation,” an Advisory Council member recounted. Importantly, one parent shared that hearing about personal protective equipment conservation could amplify stress because of fear their child wouldn’t be protected. These perspectives remind us that families may be experiencing heightened sensitivity and vulnerability during this pandemic.

Maintaining Active Engagement With Patients and Families

PFCRs continue to be an ideal setting for providers, patients, and families to communicate and build shared understanding, as well as build rapport and connection through human interactions. Maintaining rounding structures, when possible, reinforces familiarity with roles and expectations, among both patients who have been hospitalized in the past and those hospitalized for the first time. Adapting rounds may be as simple as opening the door during walk-rounds to invite caregiver participation while being aware of distancing. With large rounding teams, more substantial workflow changes may be necessary.

Beyond PFCRs, patients and family members can be further engaged through tasks/responsibilities for the time in between rounding communication. Examples include recording patient symptoms (eg, work of breathing) or actions (eg, how much water their child drinks). By doing this, patients and caregivers who feel helpless and anxious may be given a greater sense of control while at the same time making helpful contributions to medical care.

Parents also expressed value in reinforcing the message that patients and families are experts about themselves/their loved ones. Healthcare teams can invite their insights, questions, and concerns to show respect for their expertise and value. This builds trust and leads to a feeling of togetherness and teamwork. Across the board, families stressed the value of family engagement and communication in ideal conditions, and even more so in this time of upheaval.

Enhancing Communication With Technology

Many hospitals are leveraging technology to promote communication by integrating workstations on wheels and tablets with video-conferencing software (eg, Zoom, Skype) and even by adding communication via email and phone. While fewer team members are entering rooms, rounding teams are still including the voices of pharmacists, nutritionists, social workers, primary care physicians, and caregivers who are unable to be at the bedside.

These alternative communication methods may actually provide patients with more comfortable avenues for participating in their own care even beyond the pandemic. Children, in particular, may have strong opinions about their care but may not be comfortable speaking up in front of providers whom they don’t know very well. Telehealth, whiteboards, email, and limiting the number of providers in the room might actually create a more approachable environment for these patients even under routine conditions.

CONCLUSION

Patients, families, nurses, physicians, and other team members all feel the current stress on our healthcare system. As we continue to change workflows, alignment with principles of family engagement and patient- and family-centered care4 remains a priority for all involved. Prioritizing effective communication, maintaining engagement with patients and families, and using technology in new ways will all help us maintain high standards of care in both typical and completely atypical settings, such as during this pandemic. Nothing captures the benefits of effective communication better than P.M.’s description of John Michael’s experience during his hospitalization:

“Although usually an expedited triage patient, we spent hours in the ER among other ill and anxious patients. Ultimately, John Michael tested positive for influenza A. We spent 5 days in the hospital on droplet protection.

“The staff was amazing! The doctors and nurses communicated with us every step of the way. They made us aware of extra precautions and explained limitations, like not being able to go in the nutrition room or only having the doctors come in once midday. Whenever they did use [personal protective equipment] and come in, the nurses and team kept a safe distance but made sure to connect with John Michael, talking about what was on TV, what his favorite teams are, asking about his sisters, and always asking if we needed anything or if there was anything they could do. I am grateful for the kind, compassionate, and professional people who continue to care for our children under the intense danger and overwhelming magnitude of COVID-19.”

Disclosures

Dr Landrigan has served as a paid consultant to the Midwest Lighting Institute to help study the effect of blue light on health care provider performance and safety. He has consulted with and holds equity in the I-PASS Institute, which seeks to train institutions in best handoff practices and aid in their implementation. Dr Landrigan has received consulting fees from the Missouri Hospital Association/Executive Speakers Bureau for consulting on I-PASS. In addition, he has received monetary awards, honoraria, and travel reimbursement from multiple academic and professional organizations for teaching and consulting on sleep deprivation, physician performance, handoffs, and safety and has served as an expert witness in cases regarding patient safety and sleep deprivation. Drs Spector and Baird have also consulted with and hold equity in the I-PASS Institute. Dr Baird has consulted with the I-PASS Patient Safety Institute. Dr Patel holds equity/stock options in and has consulted for the I-PASS Patient Safety Institute. Dr Rosenbluth previously consulted with the I-PASS Patient Safety Institute, but not within the past 36 months. The other authors have no conflicts of interest or external support other than the existing PCORI funding for the Society of Hospital Medicine I-PASS SCORE study.

Disclaimer

The I-PASS Patient Safety Institute did not provide support to any authors for this work.

1. Starmer AJ, Spector ND, Srivastava R, et al. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803-1812. https://doi.org/10.1056/nejmsa1405556.

2. Khan A, Spector ND, Baird JD, et al. Patient safety after implementation of a coproduced family centered communication programme: multicenter before and after intervention study. BMJ. 2018;363:k4764. https://doi.org/10.1136/bmj.k4764.

3. Patient-Centered Outcomes Research Institute. Helping Children’s Hospitals Use a Program to Improve Communication with Families. December 27, 2019. https://www.pcori.org/research-results/2018/helping-childrens-hospitals-use-program-improve-communication-families. Accessed March 26, 2020.

4. Institute for Patient- and Family-Centered Care (IPFCC). PFCC and COVID-19. https://www.ipfcc.org/bestpractices/covid-19/index.html. Accessed April 10, 2020.


4. Institute for Patient- and Family-Centered Care (IPFCC). PFCC and COVID-19. https://www.ipfcc.org/bestpractices/covid-19/index.html. Accessed April 10, 2020.

1. Starmer AJ, Spector ND, Srivastava R, et al. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803-1812. https://doi.org/10.1056/nejmsa1405556.

2. Khan A, Spector ND, Baird JD, et al. Patient safety after implementation of a coproduced family centered communication programme: multicenter before and after intervention study. BMJ. 2018;363:k4764. https://doi.org/10.1136/bmj.k4764.

3. Patient-Centered Outcomes Research Institute. Helping Children’s Hospitals Use a Program to Improve Communication with Families. December 27, 2019. https://www.pcori.org/research-results/2018/helping-childrens-hospitals-use-program-improve-communication-families. Accessed March 26, 2020.

4. Institute for Patient- and Family-Centered Care (IPFCC). PFCC and COVID-19. https://www.ipfcc.org/bestpractices/covid-19/index.html. Accessed April 10, 2020.

© 2020 Society of Hospital Medicine

Improving Handoffs: Teaching beyond “Watch One, Do One”

In this issue of the Journal of Hospital Medicine, Lee et al.1

Lee’s team trained 4 groups of residents in handoffs using 4 different hour-long sessions, each with a different focus and educational format. A control group received a 1-hour didactic, which they had already heard; an I-PASS–based training group included role plays; and Policy Mandate and PDSA (Plan, Do, Study, Act) groups included group discussions. The prioritization of content in the sessions varied considerably among the groups, and the results should be interpreted within the context of the variation in both delivery and content.

Consistent with the focus of each intervention, the I-PASS–based training group had the greatest improvement in transfer of patient information, the policy mandate training group (focused on specific tasks) had the greatest improvement in task accountability, and the PDSA-training group (focused on intern-driven improvements) had the greatest improvement in personal responsibility. The control 60-minute didactic group did not show significant improvement in any domains. The lack of improvement in the control group doesn’t imply that the content wasn’t valuable, just that repetition didn’t add anything to baseline. One takeaway from the primary results of this study is that residents are likely to practice and improve what they are taught, and therefore, faculty should teach them purposefully. If residents aren’t taught handoff skills, they are unlikely to master them.

The interventions used in this study are neither mutually exclusive nor duplicative. In the final conclusions, the authors described the potential for a curriculum that includes elements from all 3 interventions. One could certainly imagine a handoff training program that includes elements of the I-PASS handoff bundle including role plays, additional emphasis on personal responsibility for specific tasks, as well as a focus on PDSA cycles of improvement for handoff processes. This likely could be accomplished with efficiency and might add only an hour to the 1-hour trainings. Evidence from the I-PASS study5 suggests that improving handoffs can decrease medical errors by 21% and adverse events by 30%; this certainly seems worth the time.

Checklist-based observation tools can provide valuable data to assess handoffs.6 Lee’s study used a checklist based on TJC recommendations, and the 17 checklist elements overlapped somewhat with the SHM guidelines,2 providing some evidence for content validity. The dependent variable was the total number of checklist items included in handoffs, a methodology that assumes that all handoff elements are equally important (eg, gender is weighted equally to if-then plans). This checklist also has a large proportion of items related to 2-way and closed-loop communication and therefore places heavy weight on this component of handoffs. Adapting this checklist into an assessment tool would require additional validity evidence but could make it a very useful tool for completing handoff assessments and providing meaningful feedback.
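The equal-weighting assumption can be illustrated with a minimal sketch. The item names and weights below are hypothetical and are not taken from Lee's checklist or from any validated instrument; they only show how two handoffs with the same unweighted count can differ once items are weighted by assumed importance.

```python
from __future__ import annotations

# Hypothetical checklist items and importance weights (illustrative only;
# not the 17 items or any weighting used in the study).
HYPOTHETICAL_WEIGHTS = {
    "gender": 0.5,
    "if_then_plan": 3.0,
    "read_back": 1.0,
}


def unweighted_score(observed: set[str], checklist: list[str]) -> int:
    """Count how many checklist items appear in the handoff (all items equal)."""
    return sum(1 for item in checklist if item in observed)


def weighted_score(observed: set[str], weights: dict[str, float]) -> float:
    """Sum the assumed weights of the checklist items that appear in the handoff."""
    return sum(weight for item, weight in weights.items() if item in observed)


checklist = list(HYPOTHETICAL_WEIGHTS)
handoff_a = {"gender", "read_back"}        # omits the if-then plan
handoff_b = {"if_then_plan", "read_back"}  # omits gender

# Both handoffs contain 2 items, so the unweighted totals are identical,
# while the weighted totals separate them.
print(unweighted_score(handoff_a, checklist), unweighted_score(handoff_b, checklist))
print(weighted_score(handoff_a, HYPOTHETICAL_WEIGHTS), weighted_score(handoff_b, HYPOTHETICAL_WEIGHTS))
```

Any real weighting scheme would need the additional validity evidence described above before being used for assessment.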

The ideal data collection instrument would also include outcome measures, in addition to process measures. Improvements in outcome measures, such as medical errors and adverse events, are more difficult to document but also provide more valuable data about the impact of curricula. In designing new hybrid curricula, it will be extremely important to focus on those outcomes that reflect the greatest impact on patient safety.

Finally, this study reminds us that the delivery modes of curricula are important factors in learning. The control group received an exclusively didactic presentation that they had heard before, while the other 3 groups had interactive components including role plays and group discussions. The improvements in different domains with different training formats provide evidence for the complementary nature of these formats. Interactive curricula involving role plays, simulations, and small-group discussions are more resource-intense than simple didactics, but they are also likely to be more impactful.

Teaching and assessing the quality of handoffs is critical to the safe practice of medicine. New ACGME duty hour requirements, which began in July, increase flexibility by allowing longer shifts with shorter breaks.7 Regardless of the shift/call schedules programs design for their trainees, safe handoffs are essential. The strategies described here may be useful for helping institutions improve patient safety through better handoffs. This study adds to the body of data demonstrating that handoffs are a skill that should be both taught and assessed during residency training.

1. Lee SH, Terndrup C, Phan PH, et al. A Randomized Cohort Controlled Trial to Compare Intern Sign-Out Training Interventions. J Hosp Med. 2017;12(12):979-983.

2. Arora VM, Manjarrez E, Dressler DD, Basaviah P, Halasyamani L, Kripalani S. Hospitalist handoffs: a systematic review and task force recommendations. J Hosp Med. 2009;4(7):433-440.

3. Accreditation Council for Graduate Medical Education. Common Program Requirements. 2017. https://www.acgmecommon.org/2017_requirements. Accessed November 10, 2017.

4. The Joint Commission. Improving Transitions of Care: Hand-off Communications. 2013. http://www.centerfortransforminghealthcare.org/tst_hoc.aspx. Accessed November 10, 2017.

5. Starmer AJ, Spector ND, Srivastava R, et al. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803-1812.

6. Feraco AM, Starmer AJ, Sectish TC, Spector ND, West DC, Landrigan CP. Reliability of Verbal Handoff Assessment and Handoff Quality Before and After Implementation of a Resident Handoff Bundle. Acad Pediatr. 2016;16(6):524-531.

7. Accreditation Council for Graduate Medical Education. Common Program Requirements. 2017. https://www.acgmecommon.org/2017_requirements. Accessed June 12, 2017.

© 2017 Society of Hospital Medicine

Ordering Patterns in Shift‐Based Care

Duty‐hour restrictions were implemented by the Accreditation Council for Graduate Medical Education (ACGME) in 2003 in response to data showing that sleep deprivation was correlated with serious medical errors.[1] In 2011, the ACGME required more explicit restrictions in the number of hours worked and the maximal shift length.[2] These requirements have necessitated a transition from a traditional q4 call model for interns to one in which shifts are limited to a maximum of 16 hours.

Studies of interns working these shorter shifts have had varied results, and comprehensive reviews have failed to demonstrate consistent improvements.[3, 4, 5] Studies of shift‐length limitation initially suggested improvements in patient safety (decreased length of stay,[6, 7] cost of hospitalization,[6] medication errors,[7] serious medical errors,[8] and intensive care unit [ICU] admissions[9]) and resident quality of life.[10] However, other recent studies have reported an increased number of self‐reported medical errors[11] and either did not detect change[12] or reported perceived decreases[13] in quality of care and continuity of care.

We previously reported decreased length of stay and decreased cost of hospitalization in pediatric inpatients cared for in a day/night shift‐based care model.[6] A hypothesized reason for those care improvements is that the restructured care model led to increased active clinical management during both day and night hours. Here we report the findings of a retrospective analysis to investigate this hypothesis.

PATIENTS AND METHODS

Study Population

We reviewed the charts of pediatric patients admitted to University of California, San Francisco Benioff Children's Hospital, a 175‐bed tertiary care facility, over a 2‐year period between September 15, 2007 and September 15, 2008 (preintervention) and September 16, 2008 and September 16, 2009 (postintervention). During this study period, our hospital was still dependent on paper orders. Admission order sets were preprinted paper forms that were unchanged for the study period. Using International Classification of Diseases, 9th Revision coding, we identified patients on the general pediatrics service with 1 of 6 common diagnoses: dehydration, community‐acquired pneumonia, aspiration pneumonia, upper respiratory infection, asthma, and bronchiolitis. These diagnoses were chosen because it was hypothesized that their length of inpatient stay could be impacted by active clinical management. We excluded patients admitted to the ICU or transferred between services.

A list of medical record numbers (MRNs) corresponding to admissions for 1 of the 6 above diagnoses during the pre‐ and postintervention periods was compiled. MRNs were randomized and then sequentially reviewed until 50 admissions in each time period were obtained. After data collection was completed, we noted that 2 patients had been in the ICU for part of their hospitalization, and these were excluded, leaving 48 preintervention admissions and 50 postintervention admissions for analysis.

Intervention

During the preintervention period, patients were cared for by interns who took call every sixth night (duty periods up to 30 hours), with cross‐coverage of patients on multiple teams. Cross‐coverage was defined as coverage of patients cared for during nonconsecutive shifts and for whom residents did not participate in attending rounds. Noncall shifts were typically 10 to 11 hours. They were supervised by senior residents who took call every fourth or fifth night and who provided similar cross‐coverage.

During the postintervention period, interns worked day and night shifts of 13 hours (1 hour overlap time between shifts for handoffs), with increased night staffing to eliminate intern‐level cross‐coverage of multiple teams and maintain interns as the primary providers. Interns covered the same team for 5 to 7 consecutive days on either the day or night shifts. Interns remained on the same teams when they switched from day shifts to night shifts to preserve continuity. There were some 24‐hour shifts for senior residents on weekends. Senior residents maintained supervisory responsibility for all patients (both hospitalist teams and a subspecialty team). They also worked 7 consecutive nights.

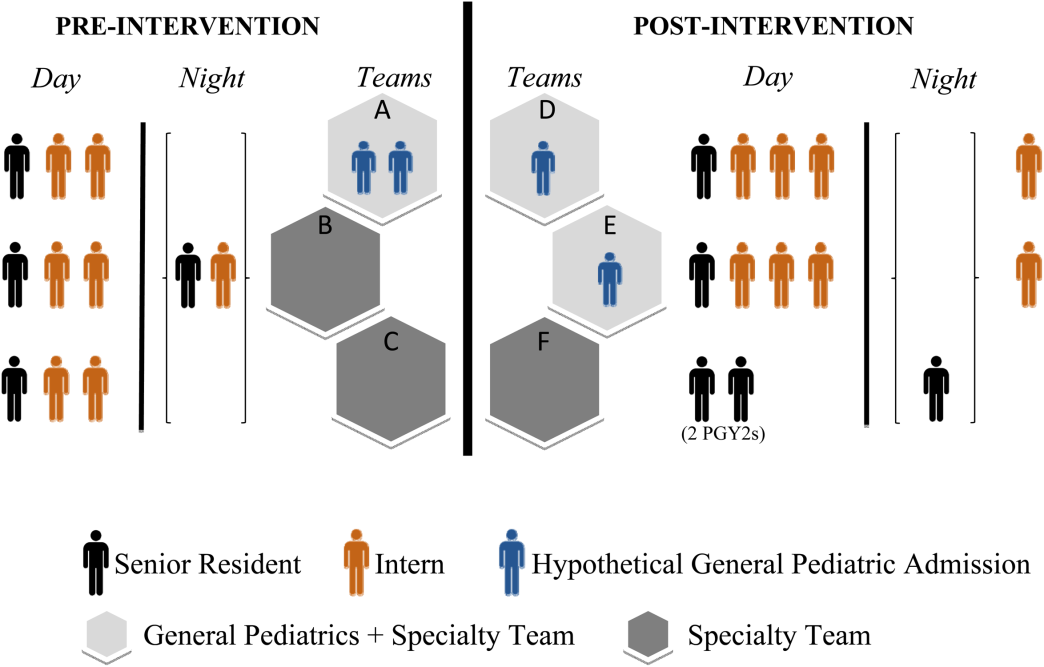

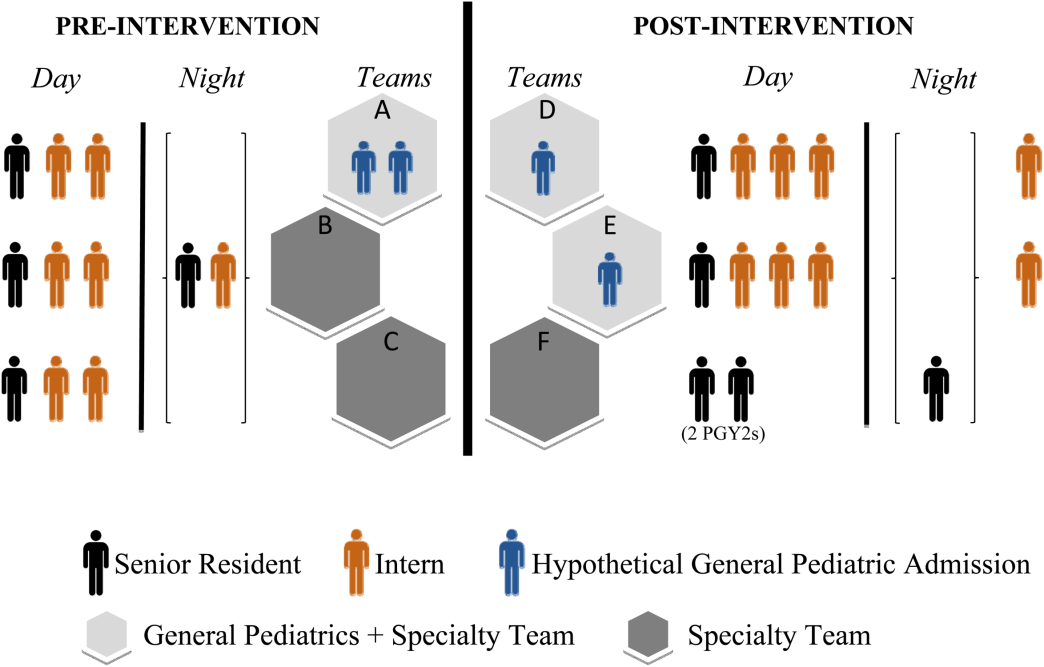

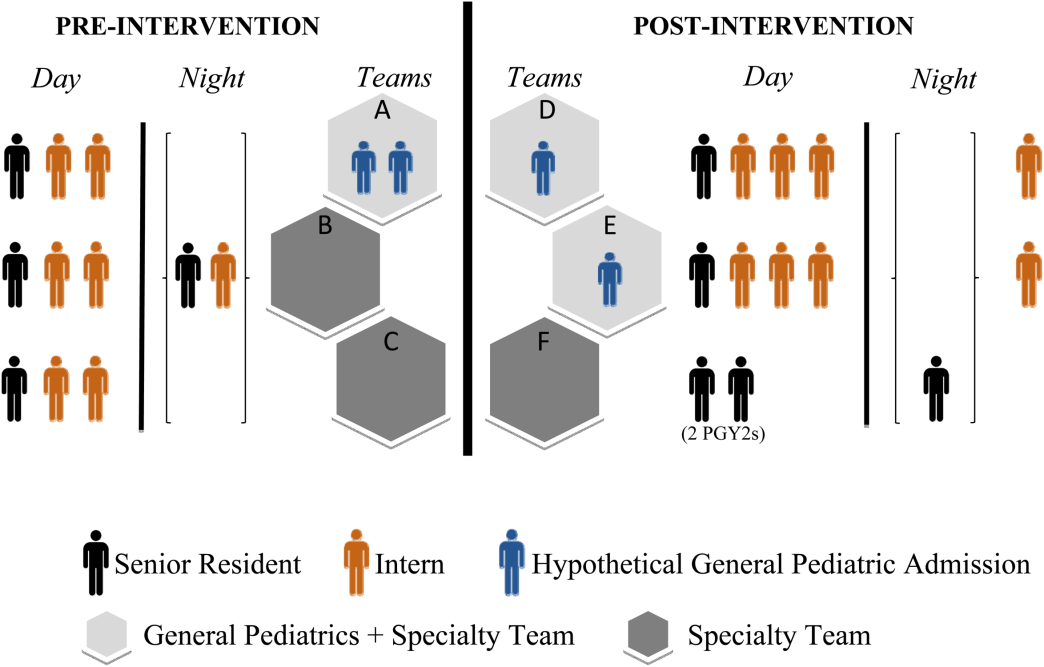

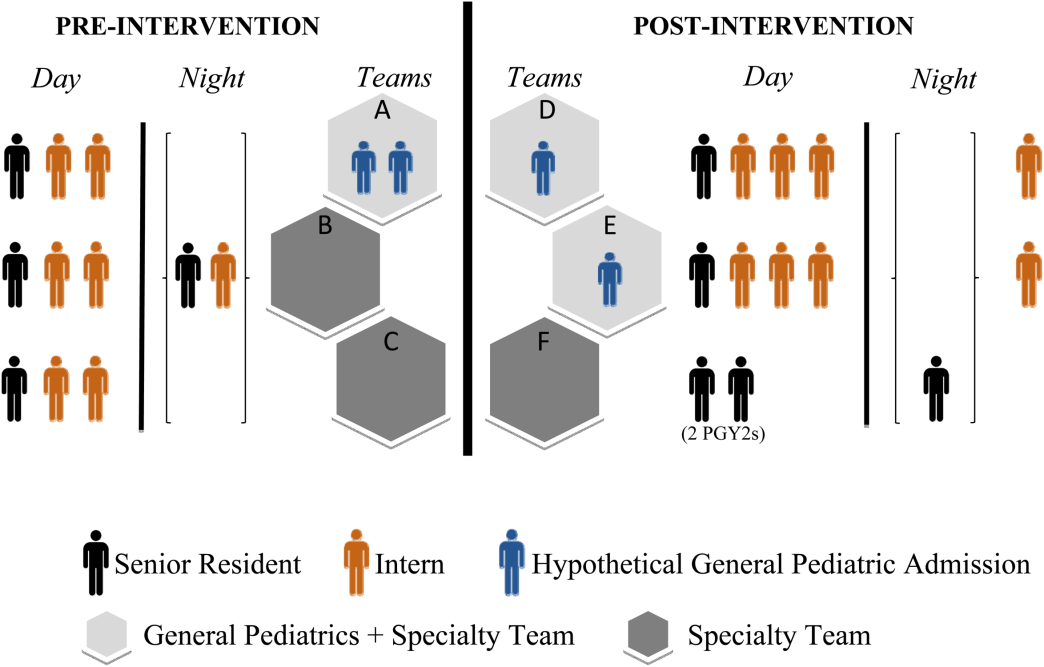

There were changes in the staffing ratios associated with the change to day and night teams (Table 1, Figure 1). In the preintervention period, general pediatrics patients were covered by a single hospitalist and cohorted on a single team (team A), which also covered several groups of subspecialty patients with subspecialty attendings. The team consisted of 2 interns and 1 senior resident, who shared extended (30‐hour) call in a cycle with 2 other inpatient teams. In the postintervention period, general pediatrics patients were split between 2 teams (teams D and E) and mixed with subspecialty patients. Hospitalists continued to be the attendings, and these hospitalists also covered specialty patients with subspecialists in consulting roles. The teams consisted of 3 interns on the day shift and 1 on the night shift. There was 1 senior resident per team on the day shift and a single senior resident covering all teams at night.

| | Preintervention | | | Postintervention | | |
|---|---|---|---|---|---|---|
| General Pediatrics | Team A | Team B | Team C | Team D | Team E | Team F |
| Patient Distribution | General Pediatrics | GI/Liver | Renal | General Pediatrics | General Pediatrics | Liver |
| | Pulmonary | Neurology | Rheumatology | Mixed Specialty | Mixed Specialty | Renal |
| | Adolescent | Endocrine | | | | |
| Team members^a | 2 interns (q6 call) | | | 4 interns (3 on day shift/1 on night shift) | | |
| | 1 senior resident (q5 call) | | | 1 senior resident | | |
| Night‐shift coverage^a | 1 intern and 1 senior resident together covered all 3 teams. | | | 1 night intern per team (teams D/E) working 7 consecutive night shifts | | |
| | | | | 1 supervising night resident covering all 3 teams | | |
| Intern cross‐coverage of other teams | Nights/clinic afternoons | | | None | | |
| Length of night shift | 30 hours | | | 13 hours | | |

There was no change in the paper‐order system, the electronic health record, timing of the morning blood draw, use of new facilities for patient care, or protocol for emergency department admission. Concomitant with the restructuring, most subspecialty patients were consolidated onto the hospitalist service, necessitating creation of a second hospitalist team. However, patients admitted with the diagnoses identified above would have been on the hospitalist service before and after the restructuring.

Data Collection/Analysis

We reviewed specific classes of orders and categorized each by type (respiratory medication, oxygen, intravenous [IV] fluids, diet, monitoring, and activity), time of day (day vs night shift), and whether it was an escalation or de‐escalation of care. De‐escalation of care was defined as orders that decreased the intensity of patient care, such as weaning a patient off nebulized albuterol or decreasing IV fluids. Orders written between 07:00 and 18:00 were classified as day‐shift orders, and those written between 18:01 and 06:59 were classified as night‐shift orders. Only orders falling into 1 of the aforementioned categories were recorded. Admission order sets were not included. Initially, charts were reviewed by both investigators together; after comparing results for 10 charts to ensure consistency of methodology and criteria, the remaining charts were reviewed by 1 of the study investigators.
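The time-of-day rule above can be expressed as a short sketch. The function name and the decision to truncate timestamps to the minute are assumptions made for illustration; the source describes a manual review of paper orders, not software.

```python
from datetime import time

# Cutoffs taken from the text: 07:00 through 18:00 is the day shift;
# 18:01 through 06:59 is the night shift.
DAY_START = time(7, 0)
DAY_END = time(18, 0)


def classify_shift(order_time: time) -> str:
    """Return 'day' or 'night' for an order timestamp (hypothetical helper).

    Timestamps are truncated to the minute (an assumption), so an order
    written at 18:00:30 is still treated as 18:00 and counted as day shift.
    """
    minute = order_time.replace(second=0, microsecond=0)
    return "day" if DAY_START <= minute <= DAY_END else "night"


print(classify_shift(time(18, 0)))  # boundary minute, counted as day shift
print(classify_shift(time(18, 1)))  # first minute of the night shift
```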

To compare demographics, diagnoses, and ordering patterns, t tests and χ² tests (SAS version 9.2 [SAS Institute, Cary, NC], Stata version 13.1 [StataCorp, College Station, TX]) were used. Multivariate gamma models (SAS version 9.2 [SAS Institute]) that adjusted for clustering at the attending level and patient age were used to compare severity of illness before and after the intervention. This study was approved by the University of California, San Francisco Committee on Human Research.

RESULTS

We analyzed data for 48 admissions preintervention and 50 postintervention. With the exception of insurance type, there was no difference in baseline demographics, diagnoses, or severity of illness between the groups (Table 2). Within the order classes above, we identified 212 orders preintervention and 231 orders postintervention.

| | Preintervention, n = 48, N (%) | Postintervention, n = 50, N (%) | P Value |
|---|---|---|---|
| Age, y, mean (SD) | 4.8 (4.6) | 5.5 (4.7) | 0.4474 |
| Race/ethnicity | | | 0.1953 |
| NH white | 12 (25.0%) | 9 (18.0%) | |
| NH black | 11 (22.9%) | 7 (14.0%) | |
| Hispanic | 16 (33.3%) | 13 (26.0%) | |
| Asian | 6 (12.5%) | 10 (20.0%) | |
| Other | 3 (6.3%) | 10 (20.0%) | |
| Missing | 0 | 1 (2.0%) | |
| Gender | | | 0.6577 |
| Female | 19 (39.6%) | 22 (44.0%) | |
| Male | 29 (60.4%) | 28 (56.0%) | |
| Primary language | | | 0.2601 |
| English | 38 (79.2%) | 45 (90.0%) | |
| Spanish | 9 (18.8%) | 5 (10.0%) | |
| Other | 1 (2.1%) | 0 | |
| Insurance | | | 0.0118 |
| Private | 13 (27.1%) | 26 (52.0%) | |
| Medical | 35 (72.9%) | 24 (48.0%) | |
| Other | 0 | 0 | |
| Admit source | | | 0.6581 |
| Referral | 20 (41.7%) | 18 (36.0%) | |
| ED | 26 (54.2%) | 31 (62.0%) | |
| Transfer | 2 (4.2%) | 1 (2.0%) | |
| Severity of illness | | | 0.1926 |
| Minor | 15 (31.3%) | 24 (48.0%) | |
| Moderate | 23 (47.9%) | 16 (32.0%) | |
| Severe | 10 (20.8%) | 10 (20.0%) | |
| Extreme | 0 | 0 | |
| Diagnoses | | | 0.562 |
| Asthma | 21 | 19 | |
| Bronchiolitis | 2 | 4 | |
| Pneumonia | 17 | 19 | |
| Dehydration | 6 | 7 | |
| URI | 0 | 1 | |
| Aspiration pneumonia | 2 | 0 | |

After the intervention, there was a statistically significant increase in the average number of orders written within the first 12 hours (pre: 0.58 orders vs post: 1.12, P = 0.009) and 24 hours (pre: 1.52 vs post: 2.38, P = 0.004) following admission (Table 3), not including the admission order set. The fraction of orders written at night was not significantly different (27% at night preintervention, 33% postintervention, P = 0.149). The fraction of admissions on the day shift compared to the night shift did not change (P = 0.72). There was no difference in the ratio of de‐escalation to escalation orders written during the night (Table 3).

| | Preintervention, 48 Admissions | Postintervention, 50 Admissions | P Value |
|---|---|---|---|
| Total no. of orders | 212 | 231 | |
| Mean no. of orders per admission | 4.42 | 4.62 | |
| Day shift orders, n (%) | 155 (73) | 155 (67) | 0.149 |
| Night shift orders, n (%) | 57 (27) | 76 (33) | |
| Mean no. of orders within first 12 hours* | 0.58 | 1.12 | 0.009 |
| Mean no. of orders within first 24 hours* | 1.52 | 2.38 | 0.004 |
| Night shift escalation orders (%) | 27 (47) | 33 (43) | 0.491 |
| Night shift de‐escalation orders (%) | 30 (53) | 43 (57) | |
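The derived figures in the orders table (means per admission and percentages) follow directly from the raw counts. The short sketch below recomputes them as a consistency check. The dictionary keys and the `summarize` helper are hypothetical names chosen for illustration, and the sketch does not attempt to reproduce the reported P values, which may come from a method not described here.

```python
# Raw counts copied from the orders table; key names are illustrative only.
orders = {
    "pre": {"admissions": 48, "day": 155, "night": 57, "night_escalation": 27},
    "post": {"admissions": 50, "day": 155, "night": 76, "night_escalation": 33},
}


def summarize(period):
    """Recompute the derived columns of the table from the raw counts."""
    total = period["day"] + period["night"]
    return {
        "total_orders": total,
        "mean_per_admission": round(total / period["admissions"], 2),
        "night_pct": round(100 * period["night"] / total),
        "night_escalation_pct": round(
            100 * period["night_escalation"] / period["night"]
        ),
    }


summary = {name: summarize(period) for name, period in orders.items()}
for name, row in summary.items():
    print(name, row)
```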

DISCUSSION

In this study, we demonstrate increased patient care management early in the hospitalization, measured by the mean number of orders written per patient in the first 12 and 24 hours after admission. This increase followed the transition from a call schedule with extended (>16 hours) shifts to one with shorter shifts compliant with current ACGME duty‐hour restrictions and an explicit focus on greater continuity of care. We did not detect a change in the proportion of total orders written on the night shift compared to the day shift. Earlier active medical management, such as weaning nebulized albuterol or supplemental oxygen, can speed the time to discharge.[14]

Our failure to detect a significant change in the proportion or type of orders written at night may have been due to our small sample size. Anecdotally, after the intervention, medical students reported to us that they noticed a difference between our service, in which we expect night teams to advance care, and other services at our institution, in which nights are a time to focus on putting out fires. This had not been reported to us before the intervention. It likely reflects the overall approach to patient care taken by residents working a night shift as part of a longitudinal care team.

This study builds on previous findings that demonstrated lower costs and shorter length of stay after implementing a schedule based on day and night teams.[7] The reasons for such improvements are likely multifactorial. In our model, which was purposefully designed to create night‐team continuity and minimize cross‐coverage, it is likely that residents also felt a greater sense of responsibility for and familiarity with the patients[15] and therefore felt more comfortable advancing care. Not only were interns likely better rested, the patient‐to‐provider ratio was also lower than in the preintervention model. Increases in staffing are often necessary to eliminate cross‐coverage while maintaining safe, 24‐hour care. These findings suggest that increases in cost from additional staffing may be at least partially offset by more active patient management early in the hospitalization, which has the potential to lead to shorter hospital stays.

There are several limitations to our research. We studied a small sample, including a subset of general pediatrics diagnoses that are amenable to active management, limiting generalizability. We did not calculate a physician‐to‐patient ratio because this was not possible with the retrospective data we collected. Staffing ratios likely improved, and we consider that part of the overall improvements in staffing that may have contributed to the observed changes in ordering patterns. Although intern‐level cross‐coverage was eliminated, the senior resident continued to cover multiple teams overnight. This senior covered the same 3 teams for 7 consecutive nights. The addition of a hospitalist team, with subspecialists being placed in consultant roles, may have contributed to the increase in active management, though our study population did not include subspecialty patients. There was a difference in insurance status between the 2 groups. This was unlikely to affect resident physician practices as insurance information is not routinely discussed in the course of patient care. In the context of the ongoing debate about duty‐hour restrictions, it will be important for future studies to elucidate whether sleep or other variables are the primary contributors to this finding. Our data are derived solely from 1 inpatient service at a single academic medical center; however, we do feel there are lessons that may be applied to other settings.

CONCLUSION

A coverage system with improved nighttime resident coverage was associated with a greater number of orders written early in the hospitalization, suggesting more active management of clinical problems to advance care.

Acknowledgements

The authors thank Dr. I. Elaine Allen, John Kornak, and Dr. Derek Pappas for assistance with biostatistics, and Dr. Diana Bojorquez and Dr. Derek Pappas for assistance with review of the manuscript and creation of the figures.

Disclosures: None of the authors have financial relationships or other conflicts of interest to disclose. No external funding was secured for this study. Dr. Auerbach was supported by grant K24HL098372 during the course of this study. This project was supported by the National Center for Advancing Translational Sciences, National Institutes of Health (NIH), through University of California San Francisco Clinical and Translational Sciences Institute grant UL1 TR000004. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH. Dr. Rosenbluth had access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

1. New requirements for resident duty hours. JAMA. 2002;288(9):1112–1114.
2. Accreditation Council for Graduate Medical Education. Common program requirements. 2011. Available at: http://www.acgme.org/acgmeweb/Portals/0/PDFs/Common_Program_Requirements_07012011[2].pdf. Accessed November 28, 2011.
3. Patient safety, resident education and resident well‐being following implementation of the 2003 ACGME duty hour rules. J Gen Intern Med. 2011;26(8):907–919.
4. A systematic review of the effects of resident duty hour restrictions in surgery: impact on resident wellness, training, and patient outcomes. Ann Surg. 2014;259(6):1041–1053.
5. Duty‐hour limits and patient care and resident outcomes: can high‐quality studies offer insight into complex relationships? Annu Rev Med. 2013;64:467–483.
6. Association between adaptations to ACGME duty hour requirements, length of stay, and costs. Sleep. 2013;36(2):245–248.
7. Effect of a change in house staff work schedule on resource utilization and patient care. Arch Intern Med. 1991;151(10):2065–2070.
8. Effect of reducing interns' work hours on serious medical errors in intensive care units. N Engl J Med. 2004;351(18):1838–1848.
9. Changes in outcomes for internal medicine inpatients after work‐hour regulations. Ann Intern Med. 2007;147(2):97–103.
10. Effects of reducing or eliminating resident work shifts over 16 hours: a systematic review. Sleep. 2010;33(8):1043–1053.
11. Effects of the 2011 duty hour reforms on interns and their patients: a prospective longitudinal cohort study. JAMA Intern Med. 2013;173(8):657–662; discussion 663.
12. Effect of 16‐hour duty periods on patient care and resident education. Mayo Clin Proc. 2011;86(3):192–196.
13. Effect of the 2011 vs 2003 duty hour regulation‐compliant models on sleep duration, trainee education, and continuity of patient care among internal medicine house staff: a randomized trial. JAMA Intern Med. 2013;173(8):649–655.
14. Effectiveness of a clinical pathway for inpatient asthma management. Pediatrics. 2000;106(5):1006–1012.
15. Resident perceptions of autonomy in a complex tertiary care environment improve when supervised by hospitalists. Hosp Pediatr. 2012;2(4):228–234.

Duty‐hour restrictions were implemented by the Accreditation Council for Graduate Medical Education (ACGME) in 2003 in response to data showing that sleep deprivation was correlated with serious medical errors.[1] In 2011, the ACGME required more explicit restrictions in the number of hours worked and the maximal shift length.[2] These requirements have necessitated a transition from a traditional q4 call model for interns to one in which shifts are limited to a maximum of 16 hours.

Studies of interns working these shorter shifts have had varied results, and comprehensive reviews have failed to demonstrate consistent improvements.[3, 4, 5] Studies of shift‐length limitation initially suggested improvements in patient safety (decreased length of stay,[6, 7] cost of hospitalization,[6] medication errors,[7] serious medical errors,[8] and intensive care unit [ICU] admissions[9]) and resident quality of life.[10] However, other recent studies have reported an increased number of self‐reported medical errors[11] and either did not detect change[12] or reported perceived decreases[13] in quality of care and continuity of care.

We previously reported decreased length of stay and decreased cost of hospitalization in pediatric inpatients cared for in a day/night‐shift‐based care model.[6] A hypothesized reason for those improvements is that the restructured care model led to increased active clinical management during both day and night hours. Here we report the findings of a retrospective analysis to investigate this hypothesis.

PATIENTS AND METHODS

Study Population

We reviewed the charts of pediatric patients admitted to University of California, San Francisco Benioff Children's Hospital, a 175‐bed tertiary care facility, over a 2‐year period between September 15, 2007 and September 15, 2008 (preintervention) and September 16, 2008 and September 16, 2009 (postintervention). During this study period, our hospital was still dependent on paper orders. Admission order sets were preprinted paper forms that were unchanged for the study period. Using International Classification of Diseases, 9th Revision coding, we identified patients on the general pediatrics service with 1 of 6 common diagnoses: dehydration, community‐acquired pneumonia, aspiration pneumonia, upper respiratory infection, asthma, and bronchiolitis. These diagnoses were chosen because it was hypothesized that their length of inpatient stay could be impacted by active clinical management. We excluded patients admitted to the ICU or transferred between services.

A list of medical record numbers (MRNs) corresponding to admissions for 1 of the 6 above diagnoses during the pre‐ and postintervention periods was compiled. MRNs were randomized and then sequentially reviewed until 50 admissions in each time period were obtained. After data collection was completed, we noted that 2 patients had been in the ICU for part of their hospitalization; these were excluded, leaving 48 preintervention admissions and 50 postintervention admissions for analysis.
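The selection procedure described above (randomize the MRN list, then review charts in order until a target count is reached) can be sketched as follows. This is a minimal illustration and not the authors' actual tooling: the function `sample_admissions`, the `is_eligible` callback, the seed, and the made-up MRNs are all hypothetical.

```python
import random


def sample_admissions(mrns, is_eligible, target=50, seed=0):
    """Shuffle the MRNs, then review them in order until `target` are accepted."""
    order = list(mrns)
    random.Random(seed).shuffle(order)  # fixed seed keeps the order reproducible
    selected = []
    for mrn in order:
        if is_eligible(mrn):  # stands in for manual chart review
            selected.append(mrn)
        if len(selected) == target:
            break
    return selected


# Hypothetical data: 200 made-up MRNs, with every tenth chart failing review.
candidate_mrns = [f"MRN{i:04d}" for i in range(200)]
reviewed = sample_admissions(candidate_mrns, lambda mrn: int(mrn[3:]) % 10 != 0)
print(len(reviewed))
```

In a design like this, any exclusion applied after the target is reached (such as the 2 ICU patients noted above) reduces the final count unless further charts are reviewed.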

Intervention

During the preintervention period, patients were cared for by interns who took call every sixth night (duty periods up to 30 hours), with cross‐coverage of patients on multiple teams. Cross‐coverage was defined as coverage of patients cared for during nonconsecutive shifts and for whom residents did not participate in attending rounds. Noncall shifts were typically 10 to 11 hours. Interns were supervised by senior residents who took call every fourth or fifth night and who provided similar cross‐coverage.

During the postintervention period, interns worked day and night shifts of 13 hours (1 hour overlap time between shifts for handoffs), with increased night staffing to eliminate intern‐level cross‐coverage of multiple teams and maintain interns as the primary providers. Interns covered the same team for 5 to 7 consecutive days on either the day or night shifts. Interns remained on the same teams when they switched from day shifts to night shifts to preserve continuity. There were some 24‐hour shifts for senior residents on weekends. Senior residents maintained supervisory responsibility for all patients (both hospitalist teams and a subspecialty team). They also worked 7 consecutive nights.

There were changes in the staffing ratios associated with the change to day and night teams (Table 1, Figure 1). In the preintervention period, general pediatrics patients were covered by a single hospitalist and cohorted on a single team (team A), which also covered several groups of subspecialty patients with subspecialty attendings. The team consisted of 2 interns and 1 senior resident, who shared extended (30‐hour) call in a cycle with 2 other inpatient teams. In the postintervention period, general pediatrics patients were split between 2 teams (teams D and E) and mixed with subspecialty patients. Hospitalists continued to be the attendings, and these hospitalists also covered specialty patients with subspecialists in consulting roles. The teams consisted of 3 interns on the day shift and 1 on the night shift. There was 1 senior resident per team on day shift, and a single senior resident covering all teams at night.

| | Preintervention | Postintervention |
|---|---|---|
| Patient distribution | Team A: General Pediatrics, Pulmonary, Adolescent. Team B: GI/Liver, Neurology, Endocrine. Team C: Renal, Rheumatology | Team D: General Pediatrics, Mixed Specialty. Team E: General Pediatrics, Mixed Specialty. Team F: Liver, Renal |
| Team membersᵃ | 2 interns (q6 call); 1 senior resident (q5 call) | 4 interns (3 on day shift/1 on night shift); 1 senior resident |
| Night‐shift coverageᵃ | 1 intern and 1 senior resident together covered all 3 teams | 1 night intern per team (teams D/E) working 7 consecutive night shifts; 1 supervising night resident covering all 3 teams |
| Intern cross‐coverage of other teams | Nights/clinic afternoons | None |
| Length of night shift | 30 hours | 13 hours |

There was no change in the paper‐order system, the electronic health record, timing of the morning blood draw, use of new facilities for patient care, or protocol for emergency department admission. Concomitant with the restructuring, most subspecialty patients were consolidated onto the hospitalist service, necessitating creation of a second hospitalist team. However, patients admitted with the diagnoses identified above would have been on the hospitalist service before and after the restructuring.

Data Collection/Analysis