Improving Resident Feedback on Diagnostic Reasoning after Handovers: The LOOP Project

One of the most promising methods for improving medical decision-making is learning from the outcomes of one’s decisions and either maintaining or modifying future decision-making based on those outcomes.1-3 This process of iterative improvement over time based on feedback is called calibration and is one of the most important drivers of lifelong learning and improvement.1

Despite the importance of knowing the outcomes of one’s decisions, this seldom occurs in modern medical education.4 Learners do not often obtain specific feedback about the decisions they make within a short enough time frame to intentionally reflect upon and modify that decision-making process.3,5 In addition, almost every patient admitted to a teaching hospital will be cared for by multiple physicians over the course of a hospitalization. These care transitions may be seen as barriers to high-quality care and education, but we suggest a different paradigm: transitions of care present opportunities for trainees to be teammates in each other’s calibration. Peers can provide specific feedback about the diagnostic process and inform one another about patient outcomes. Transitions of care allow for built-in “second opinions,” and trainees can intentionally learn by comparing the clinical reasoning involved at different points in a patient’s course. The diagnostic process is dynamic and complex; it is fundamental that trainees have the opportunity to reflect on the process to identify how and why the diagnostic process evolved throughout a patient’s hospitalization. Most inpatient diagnoses are “working diagnoses” that are likely to change. Thus, identifying the twists and turns in a patient’s diagnostic journey provides invaluable learning for future practice.

Herein, we describe the implementation and impact of a multisite initiative to engage residents in delivering feedback to their peers about medical decisions around transitions of care.

METHODS

The LOOP Project is a prospective clinical educational study that aimed to engage resident physicians to deliver feedback and updates about their colleagues’ diagnostic decision-making around care transitions. This study was deemed exempt from review by the University of Minnesota Institutional Review Board and either approved or deemed exempt by the corresponding Institutional Review Boards at all participating institutions. The study was conducted by seven programs at six institutions and included Internal Medicine, Pediatrics, and Internal Medicine–Pediatrics (PGY 1-4) residents from February 2017 to June 2017. Residents rotating through participating clinical services during the study period were invited to participate and given further information by site leads via informational presentations, written handouts, and/or emails.

The intervention entailed residents delivering structured feedback to their colleagues regarding their patients’ diagnoses after transitions of care. The predominant setting was the inpatient hospital medicine day-shift team providing feedback to the night-shift team regarding overnight admissions. Feedback about patients (usually chosen by the day-shift team) was delivered through completion of a standard templated form (Figure), usually sent within 24 hours after hospital admission through secure messaging (eg, EPIC In-Basket message using a Smartphrase of the LOOP feedback form). A 24-hour time period was chosen to allow for rapid cycling of feedback focusing on initial diagnostic assessment. Site leads and resident champions promoted the project through presentations, informal discussions, and prizes for high completion rates of forms and surveys (eg, coffee cards and pizza).

Feedback forms were collected by site leads. A categorization rubric was developed during a pilot phase. Diagnoses before and after the transition of care were categorized as no change, diagnostic refinement (ie, the initial diagnosis was modified to be more specific), disease evolution (ie, the patient’s physiology or disease course changed), or major diagnostic change (ie, the initial and subsequent diagnoses differed substantially). Site leads acted as single-coders and conference calls were held to discuss coding and build consensus regarding the taxonomy. Diagnoses were not labeled as “right” or “wrong”; instead, categorization focused on differences between diagnoses before and after transitions of care.

Residents were invited to complete surveys before and after the rotation during which they had the opportunity to give or receive feedback. Each participant entered a unique identifier to allow pairing of pre- and postsurveys. The survey (Appendix 1) was developed and refined during the initial pilot phase at the University of Minnesota. Surveys were collected using REDCap and analyzed using SAS version 9.3 (SAS Institute Inc., Cary, North Carolina). Differences between pre- and postsurveys were calculated using paired t-tests for continuous variables, and descriptive statistics were used for demographic and other items. Only responses from individuals who completed both the pre- and postsurveys were included in the analysis.
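The pairing and paired t-test described above can be illustrated with a minimal sketch. This is not the study's analysis, which was performed in SAS. The respondent identifiers, the scores, and the function name `paired_t` are hypothetical and chosen only for illustration. The sketch keeps only respondents present in both surveys and computes the paired t statistic from the within-person differences.

```python
import math
from statistics import mean, stdev

def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for paired samples."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1

# Hypothetical survey scores keyed by each respondent's unique identifier.
pre_by_id = {"r01": 2, "r02": 3, "r03": 3, "r04": 4, "r05": 2, "r06": 4}
post_by_id = {"r01": 3, "r02": 4, "r03": 3, "r04": 5, "r05": 4, "r07": 5}

# Keep only respondents who completed both surveys (r06 and r07 drop out).
paired_ids = sorted(pre_by_id.keys() & post_by_id.keys())
pre = [pre_by_id[i] for i in paired_ids]
post = [post_by_id[i] for i in paired_ids]

t_stat, df = paired_t(pre, post)
print(f"n pairs = {len(paired_ids)}, t = {t_stat:.3f}, df = {df}")
```

A P value would then be read from the t distribution with `df` degrees of freedom. The Python standard library has no t distribution, so that step is left out of the sketch.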

RESULTS

Overall, there were 716 current residents in the training programs that participated in this study; one site planned on participating but did not complete any forms. A total of 405 residents were eligible to participate during the study period. Overall, 221 (54.5%)

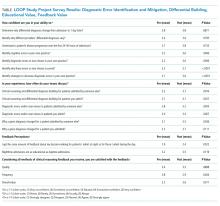

Survey results (Table) indicated significantly improved self-efficacy in identifying cognitive errors in residents’ own practice, identifying why those errors occurred, and identifying strategies to decrease future diagnostic errors. Participants noted increased frequency of discussions within teams regarding differential diagnoses, diagnostic errors, and why diagnoses changed over time. The feedback process was viewed positively by participants, who were also generally satisfied with the overall quality, frequency, and value of the feedback received. After the intervention, participants reported an increase in the amount of feedback received for night admissions and an overall increase in the perception that nighttime admissions were as “educational” as daytime admissions.

Of 544 collected forms, 238 (43.7%) showed some diagnostic change. These changes were further categorized into disease evolution (60 forms, 11.0%), diagnostic refinement (109 forms, 20.0%), and major diagnostic change (69 forms, 12.7%).
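As a quick arithmetic check, the category counts reported above can be tallied in a few lines. The counts and the total come from the text. The "no change" count is not reported and is derived here under the assumption that every form was assigned to exactly one category.

```python
# Counts of forms per change category, as reported in the text.
change_counts = {
    "disease evolution": 60,
    "diagnostic refinement": 109,
    "major diagnostic change": 69,
}
total_forms = 544

changed = sum(change_counts.values())
# Assumption: each form falls into exactly one category, so the
# remainder is the number of forms showing no diagnostic change.
no_change = total_forms - changed

for category, count in change_counts.items():
    print(f"{category}: {count} ({100 * count / total_forms:.1f}%)")
print(f"any change: {changed} of {total_forms}")
print(f"no change (derived): {no_change}")
```

The three categories sum to the 238 forms reported as showing some change. That proportion is exactly 0.4375, so it appears as 43.7% or 43.8% depending on the rounding convention.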

CONCLUSION

This study suggests that an intervention to operationalize standardized, structured feedback about diagnostic decision-making around transitions of care is a promising approach to improve residents’ understanding of changes in, and evolution of, the diagnostic process, as well as improve the perceived educational value of overnight admissions. In our results, over 40% of the patients admitted by residents had some change in their diagnoses after a transition of care during their early hospitalization. This finding highlights the importance of ensuring that trainees have the opportunity to know the outcomes of their decisions. Indeed, residents should be encouraged to follow up on their own patients without prompting; however, studies show that this practice is uncommon and that interventions beyond admonition are necessary.4

The diagnostic change rate observed in this study confirms that diagnosis is an iterative process and that the concept of a working diagnosis is key—a diagnosis made at admission will very likely be modified by time, the natural history of the disease, and new clinical information. When diagnoses are viewed as working diagnoses, trainees may be empowered to better understand the diagnostic process. As learners and teachers adopt this perspective, training programs are more likely to be successful in helping learners calibrate toward expertise.

Previous studies have questioned whether resident physicians view overnight admissions as valuable.6 After our intervention, we found an increase in both the amount of feedback received and the proportion of participants who agreed that night and day admissions were equally educational, suggesting that targeted diagnostic reasoning feedback can bolster the educational value of nighttime admissions.

This study presents a number of limitations. First, the survey response rate was low, which could potentially lead to biased results. We excluded those respondents who did not respond to both the pre- and postsurveys from the analysis. Second, we did not measure actual change in diagnostic performance. While learners did report learning and saw feedback as valuable, self-identified learning points may not always translate to improved patient care. Additionally, residents chose the patients for whom feedback was provided, and the diagnostic change rate described may be overestimated. We did not track the total number of admissions for which feedback could have been delivered during the study. We did not include a control group, and the intervention may not be responsible for changing learners’ perceptions. However, the included programs were not implementing other new protocols focused on diagnostic reasoning during the study period. In addition, we addressed diagnostic changes early in a hospital course; a comprehensive program should address more feedback loops (eg, discharging team to admitting team).

This work is a pilot study; for future interventions focused on improving calibration to be sustainable, they should be congruent with existing clinical workflows and avoid adding to the stress and/or cognitive load of an already-busy clinical experience. The optimal strategies for delivering feedback about clinical reasoning remain unclear.

In summary, a program to deliver structured feedback among resident physicians about diagnostic reasoning across care transitions for selected hospitalized patients is viewed positively by trainees, is feasible, and leads to changes in resident perception and self-efficacy. Future studies and interventions should aim to provide feedback more systematically, rather than just for selected patients, and objectively track diagnostic changes over time in hospitalized patients. While truly objective diagnostic information is challenging to obtain, comparing admission and other inpatient diagnoses to discharge diagnoses or diagnoses from primary care follow-up visits may be helpful. In addition, studies should aim to track trainees’ clinical decision-making over time and determine the effectiveness of feedback at improving diagnostic performance through calibration.

Acknowledgments

The authors thank the trainees who participated in this study, as well as the residency leadership at participating institutions. The authors also thank Qi Wang, PhD, for providing statistical analysis.

Disclosures

The authors have nothing to disclose.

Funding

The study was funded by an AAIM Innovation Grant and local support at each participating institution.

1. Croskerry P. The feedback sanction. Acad Emerg Med. 2000;7(11):1232-1238. https://doi.org/10.1111/j.1553-2712.2000.tb00468.x.

2. Trowbridge RL, Dhaliwal G, Cosby KS. Educational agenda for diagnostic error reduction. BMJ Qual Saf. 2013;22(Suppl 2):ii28-ii32. https://doi.org/10.1136/bmjqs-2012-001622.

3. Dhaliwal G. Clinical excellence: make it a habit. Acad Med. 2012;87(11):1473. https://doi.org/10.1097/ACM.0b013e31826d68d9.

4. Shenvi EC, Feupe SF, Yang H, El-Kareh R. Closing the loop: a mixed-methods study about resident learning from outcome feedback after patient handoffs. Diagnosis. 2018;5(4):235-242. https://doi.org/10.1515/dx-2018-0013.

5. Rencic J. Twelve tips for teaching expertise in clinical reasoning. Med Teach. 2011;33(11):887-892. https://doi.org/10.3109/0142159X.2011.558142.

6. Bump GM, Zimmer SM, McNeil MA, Elnicki DM. Hold-over admissions: are they educational for residents? J Gen Intern Med. 2014;29(3):463-467. https://doi.org/10.1007/s11606-013-2667-y.

One of the most promising methods for improving medical decision-making is learning from the outcomes of one’s decisions and either maintaining or modifying future decision-making based on those outcomes.1-3 This process of iterative improvement over time based on feedback is called calibration and is one of the most important drivers of lifelong learning and improvement.1

Despite the importance of knowing the outcomes of one’s decisions, this seldom occurs in modern medical education.4 Learners do not often obtain specific feedback about the decisions they make within a short enough time frame to intentionally reflect upon and modify that decision-making process.3,5 In addition, almost every patient admitted to a teaching hospital will be cared for by multiple physicians over the course of a hospitalization. These care transitions may be seen as barriers to high-quality care and education, but we suggest a different paradigm: transitions of care present opportunities for trainees to be teammates in each other’s calibration. Peers can provide specific feedback about the diagnostic process and inform one another about patient outcomes. Transitions of care allow for built-in “second opinions,” and trainees can intentionally learn by comparing the clinical reasoning involved at different points in a patient’s course. The diagnostic process is dynamic and complex; it is fundamental that trainees have the opportunity to reflect on the process to identify how and why the diagnostic process evolved throughout a patient’s hospitalization. Most inpatient diagnoses are “working diagnoses” that are likely to change. Thus, identifying the twists and turns in a patient’s diagnostic journey provides invaluable learning for future practice.

Herein, we describe the implementation and impact of a multisite initiative to engage residents in delivering feedback to their peers about medical decisions around transitions of care.

METHODS

The LOOP Project is a prospective clinical educational study that aimed to engage resident physicians to deliver feedback and updates about their colleagues’ diagnostic decision-making around care transitions. This study was deemed exempt from review by the University of Minnesota Institutional Review Board and either approved or deemed exempt by the corresponding Institutional Review Boards at all participating institutions. The study was conducted by seven programs at six institutions and included Internal Medicine, Pediatrics, and Internal Medicine–Pediatrics (PGY 1-4) residents from February 2017 to June 2017. Residents rotating through participating clinical services during the study period were invited to participate and given further information by site leads via informational presentations, written handouts, and/or emails.

The intervention entailed residents delivering structured feedback to their colleagues regarding their patients’ diagnoses after transitions of care. The predominant setting was the inpatient hospital medicine day-shift team providing feedback to the night-shift team regarding overnight admissions. Feedback about patients (usually chosen by the day-shift team) was delivered through completion of a standard templated form (Figure) usually sent within 24 hours after hospital admission through secure messaging (ie, EPIC In-Basket message utilizing a Smartphrase of the LOOP feedback form). A 24-hour time period was chosen to allow for rapid cycling of feedback focusing on initial diagnostic assessment. Site leads and resident champions promoted the project through presentations, informal discussions, and prizes for high completion rates of forms and surveys (ie, coffee cards and pizza).

Feedback forms were collected by site leads. A categorization rubric was developed during a pilot phase. Diagnoses before and after the transition of care were categorized as no change, diagnostic refinement (ie, the initial diagnosis was modified to be more specific), disease evolution (ie, the patient’s physiology or disease course changed), or major diagnostic change (ie, the initial and subsequent diagnoses differed substantially). Site leads acted as single-coders and conference calls were held to discuss coding and build consensus regarding the taxonomy. Diagnoses were not labeled as “right” or “wrong”; instead, categorization focused on differences between diagnoses before and after transitions of care.

Residents were invited to complete surveys before and after the rotation during which they had the opportunity to give or receive feedback. A unique identifier was entered by each participant to allow pairing of pre- and postsurveys. The survey (Appendix 1) was developed and refined during the initial pilot phase at the University of Minnesota. Surveys were collected using RedCap and analyzed using SAS version 9.3 (SAS Institute Inc., Cary, North Carolina). Differences between pre- and postsurveys were calculated using paired t-tests for continuous variables, and descriptive statistics were used for demographic and other items. Only surveys completed by individuals who completed both pre- and postsurveys were included in the analysis.

RESULTS

Overall, there were 716 current residents in the training programs that participated in this study; one site planned on participating but did not complete any forms. A total of 405 residents were eligible to participate during the study period. Overall, 221 (54.5%)

Survey results (Table) indicated significantly improved self-efficacy in identifying cognitive errors in residents’ own practice, identifying why those errors occurred, and identifying strategies to decrease future diagnostic errors. Participants noted increased frequency of discussions within teams regarding differential diagnoses, diagnostic errors, and why diagnoses changed over time. The feedback process was viewed positively by participants, who were also generally satisfied with the overall quality, frequency, and value of the feedback received. After the intervention, participants reported an increase in the amount of feedback received for night admissions and an overall increase in the perception that nighttime admissions were as “educational” as daytime admissions.

Of 544 collected forms, 238 (43.7%) showed some diagnostic change. These changes were further categorized into disease evolution (60 forms, 11.0%), diagnostic refinement (109 forms, 20.0%), and major diagnostic change (69 forms, 12.7%).

CONCLUSION

This study suggests that an intervention to operationalize standardized, structured feedback about diagnostic decision-making around transitions of care is a promising approach to improve residents’ understanding of changes in, and evolution of, the diagnostic process, as well as improve the perceived educational value of overnight admissions. In our results, over 40% of the patients admitted by residents had some change in their diagnoses after a transition of care during their early hospitalization. This finding highlights the importance of ensuring that trainees have the opportunity to know the outcomes of their decisions. Indeed, residents should be encouraged to follow-up on their own patients without prompting; however, studies show that this practice is uncommon and interventions beyond admonition are necessary.4

The diagnostic change rate observed in this study confirms that diagnosis is an iterative process and that the concept of a working diagnosis is key—a diagnosis made at admission will very likely be modified by time, the natural history of the disease, and new clinical information. When diagnoses are viewed as working diagnoses, trainees may be empowered to better understand the diagnostic process. As learners and teachers adopt this perspective, training programs are more likely to be successful in helping learners calibrate toward expertise.

Previous studies have questioned whether resident physicians view overnight admissions as valuable.6 After our intervention, we found an increase in both the amount of feedback received and the proportion of participants who agreed that night and day admissions were equally educational, suggesting that targeted diagnostic reasoning feedback can bolster educational value of nighttime admissions.

This study presents a number of limitations. First, the survey response rate was low, which could potentially lead to biased results. We excluded those respondents who did not respond to both the pre- and postsurveys from the analysis. Second, we did not measure actual change in diagnostic performance. While learners did report learning and saw feedback as valuable, self-identified learning points may not always translate to improved patient care. Additionally, residents chose the patients for whom feedback was provided, and the diagnostic change rate described may be overestimated. We did not track the total number of admissions for which feedback could have been delivered during the study. We did not include a control group, and the intervention may not be responsible for changing learners’ perceptions. However, the included programs were not implementing other new protocols focused on diagnostic reasoning during the study period. In addition, we addressed diagnostic changes early in a hospital course; a comprehensive program should address more feedback loops (eg, discharging team to admitting team).

This work is a pilot study; for future interventions focused on improving calibration to be sustainable, they should be congruent with existing clinical workflows and avoid adding to the stress and/or cognitive load of an already-busy clinical experience. The most optimal strategies for delivering feedback about clinical reasoning remain unclear.

In summary, a program to deliver structured feedback among resident physicians about diagnostic reasoning across care transitions for selected hospitalized patients is viewed positively by trainees, is feasible, and leads to changes in resident perception and self-efficacy. Future studies and interventions should aim to provide feedback more systematically, rather than just for selected patients, and objectively track diagnostic changes over time in hospitalized patients. While truly objective diagnostic information is challenging to obtain, comparing admission and other inpatient diagnoses to discharge diagnoses or diagnoses from primary care follow-up visits may be helpful. In addition, studies should aim to track trainees’ clinical decision-making over time and determine the effectiveness of feedback at improving diagnostic performance through calibration.

Acknowledgments

The authors thank the trainees who participated in this study, as well as the residency leadership at participating institutions. The authors also thank Qi Wang, PhD, for providing statistical analysis.

Disclosures

The authors have nothing to disclose.

Funding

The study was funded by an AAIM Innovation Grant and local support at each participating institution.

One of the most promising methods for improving medical decision-making is learning from the outcomes of one’s decisions and either maintaining or modifying future decision-making based on those outcomes.1-3 This process of iterative improvement over time based on feedback is called calibration and is one of the most important drivers of lifelong learning and improvement.1

Despite the importance of knowing the outcomes of one’s decisions, this seldom occurs in modern medical education.4 Learners do not often obtain specific feedback about the decisions they make within a short enough time frame to intentionally reflect upon and modify that decision-making process.3,5 In addition, almost every patient admitted to a teaching hospital will be cared for by multiple physicians over the course of a hospitalization. These care transitions may be seen as barriers to high-quality care and education, but we suggest a different paradigm: transitions of care present opportunities for trainees to be teammates in each other’s calibration. Peers can provide specific feedback about the diagnostic process and inform one another about patient outcomes. Transitions of care allow for built-in “second opinions,” and trainees can intentionally learn by comparing the clinical reasoning involved at different points in a patient’s course. The diagnostic process is dynamic and complex; it is fundamental that trainees have the opportunity to reflect on the process to identify how and why the diagnostic process evolved throughout a patient’s hospitalization. Most inpatient diagnoses are “working diagnoses” that are likely to change. Thus, identifying the twists and turns in a patient’s diagnostic journey provides invaluable learning for future practice.

Herein, we describe the implementation and impact of a multisite initiative to engage residents in delivering feedback to their peers about medical decisions around transitions of care.

METHODS

The LOOP Project is a prospective clinical educational study that aimed to engage resident physicians to deliver feedback and updates about their colleagues’ diagnostic decision-making around care transitions. This study was deemed exempt from review by the University of Minnesota Institutional Review Board and either approved or deemed exempt by the corresponding Institutional Review Boards at all participating institutions. The study was conducted by seven programs at six institutions and included Internal Medicine, Pediatrics, and Internal Medicine–Pediatrics (PGY 1-4) residents from February 2017 to June 2017. Residents rotating through participating clinical services during the study period were invited to participate and given further information by site leads via informational presentations, written handouts, and/or emails.

The intervention entailed residents delivering structured feedback to their colleagues regarding their patients’ diagnoses after transitions of care. The predominant setting was the inpatient hospital medicine day-shift team providing feedback to the night-shift team regarding overnight admissions. Feedback about patients (usually chosen by the day-shift team) was delivered through completion of a standard templated form (Figure) usually sent within 24 hours after hospital admission through secure messaging (ie, EPIC In-Basket message utilizing a Smartphrase of the LOOP feedback form). A 24-hour time period was chosen to allow for rapid cycling of feedback focusing on initial diagnostic assessment. Site leads and resident champions promoted the project through presentations, informal discussions, and prizes for high completion rates of forms and surveys (ie, coffee cards and pizza).

Feedback forms were collected by site leads. A categorization rubric was developed during a pilot phase. Diagnoses before and after the transition of care were categorized as no change, diagnostic refinement (ie, the initial diagnosis was modified to be more specific), disease evolution (ie, the patient’s physiology or disease course changed), or major diagnostic change (ie, the initial and subsequent diagnoses differed substantially). Site leads acted as single-coders and conference calls were held to discuss coding and build consensus regarding the taxonomy. Diagnoses were not labeled as “right” or “wrong”; instead, categorization focused on differences between diagnoses before and after transitions of care.

Residents were invited to complete surveys before and after the rotation during which they had the opportunity to give or receive feedback. A unique identifier was entered by each participant to allow pairing of pre- and postsurveys. The survey (Appendix 1) was developed and refined during the initial pilot phase at the University of Minnesota. Surveys were collected using RedCap and analyzed using SAS version 9.3 (SAS Institute Inc., Cary, North Carolina). Differences between pre- and postsurveys were calculated using paired t-tests for continuous variables, and descriptive statistics were used for demographic and other items. Only surveys completed by individuals who completed both pre- and postsurveys were included in the analysis.

RESULTS

Overall, there were 716 current residents in the training programs that participated in this study; one site planned on participating but did not complete any forms. A total of 405 residents were eligible to participate during the study period. Overall, 221 (54.5%)

Survey results (Table) indicated significantly improved self-efficacy in identifying cognitive errors in residents’ own practice, identifying why those errors occurred, and identifying strategies to decrease future diagnostic errors. Participants noted increased frequency of discussions within teams regarding differential diagnoses, diagnostic errors, and why diagnoses changed over time. The feedback process was viewed positively by participants, who were also generally satisfied with the overall quality, frequency, and value of the feedback received. After the intervention, participants reported an increase in the amount of feedback received for night admissions and an overall increase in the perception that nighttime admissions were as “educational” as daytime admissions.

Of 544 collected forms, 238 (43.7%) showed some diagnostic change. These changes were further categorized into disease evolution (60 forms, 11.0%), diagnostic refinement (109 forms, 20.0%), and major diagnostic change (69 forms, 12.7%).

CONCLUSION

This study suggests that an intervention to operationalize standardized, structured feedback about diagnostic decision-making around transitions of care is a promising approach to improve residents’ understanding of changes in, and evolution of, the diagnostic process, as well as improve the perceived educational value of overnight admissions. In our results, over 40% of the patients admitted by residents had some change in their diagnoses after a transition of care during their early hospitalization. This finding highlights the importance of ensuring that trainees have the opportunity to know the outcomes of their decisions. Indeed, residents should be encouraged to follow-up on their own patients without prompting; however, studies show that this practice is uncommon and interventions beyond admonition are necessary.4

The diagnostic change rate observed in this study confirms that diagnosis is an iterative process and that the concept of a working diagnosis is key—a diagnosis made at admission will very likely be modified by time, the natural history of the disease, and new clinical information. When diagnoses are viewed as working diagnoses, trainees may be empowered to better understand the diagnostic process. As learners and teachers adopt this perspective, training programs are more likely to be successful in helping learners calibrate toward expertise.

Previous studies have questioned whether resident physicians view overnight admissions as valuable.6 After our intervention, we found an increase in both the amount of feedback received and the proportion of participants who agreed that night and day admissions were equally educational, suggesting that targeted diagnostic reasoning feedback can bolster educational value of nighttime admissions.

This study presents a number of limitations. First, the survey response rate was low, which could potentially lead to biased results. We excluded those respondents who did not respond to both the pre- and postsurveys from the analysis. Second, we did not measure actual change in diagnostic performance. While learners did report learning and saw feedback as valuable, self-identified learning points may not always translate to improved patient care. Additionally, residents chose the patients for whom feedback was provided, and the diagnostic change rate described may be overestimated. We did not track the total number of admissions for which feedback could have been delivered during the study. We did not include a control group, and the intervention may not be responsible for changing learners’ perceptions. However, the included programs were not implementing other new protocols focused on diagnostic reasoning during the study period. In addition, we addressed diagnostic changes early in a hospital course; a comprehensive program should address more feedback loops (eg, discharging team to admitting team).

This work is a pilot study. To be sustainable, future interventions focused on improving calibration should be congruent with existing clinical workflows and avoid adding to the stress or cognitive load of an already-busy clinical experience. The optimal strategies for delivering feedback about clinical reasoning remain unclear.

In summary, a program to deliver structured feedback among resident physicians about diagnostic reasoning across care transitions for selected hospitalized patients is viewed positively by trainees, is feasible, and leads to changes in resident perception and self-efficacy. Future studies and interventions should aim to provide feedback more systematically, rather than just for selected patients, and objectively track diagnostic changes over time in hospitalized patients. While truly objective diagnostic information is challenging to obtain, comparing admission and other inpatient diagnoses to discharge diagnoses or diagnoses from primary care follow-up visits may be helpful. In addition, studies should aim to track trainees’ clinical decision-making over time and determine the effectiveness of feedback at improving diagnostic performance through calibration.

Acknowledgments

The authors thank the trainees who participated in this study, as well as the residency leadership at participating institutions. The authors also thank Qi Wang, PhD, for providing statistical analysis.

Disclosures

The authors have nothing to disclose.

Funding

The study was funded by an AAIM Innovation Grant and local support at each participating institution.

References

1. Croskerry P. The feedback sanction. Acad Emerg Med. 2000;7(11):1232-1238. https://doi.org/10.1111/j.1553-2712.2000.tb00468.x.

2. Trowbridge RL, Dhaliwal G, Cosby KS. Educational agenda for diagnostic error reduction. BMJ Qual Saf. 2013;22(Suppl 2):ii28-ii32. https://doi.org/10.1136/bmjqs-2012-001622.

3. Dhaliwal G. Clinical excellence: make it a habit. Acad Med. 2012;87(11):1473. https://doi.org/10.1097/ACM.0b013e31826d68d9.

4. Shenvi EC, Feupe SF, Yang H, El-Kareh R. Closing the loop: a mixed-methods study about resident learning from outcome feedback after patient handoffs. Diagnosis. 2018;5(4):235-242. https://doi.org/10.1515/dx-2018-0013.

5. Rencic J. Twelve tips for teaching expertise in clinical reasoning. Med Teach. 2011;33(11):887-892. https://doi.org/10.3109/0142159X.2011.558142.

6. Bump GM, Zimmer SM, McNeil MA, Elnicki DM. Hold-over admissions: are they educational for residents? J Gen Intern Med. 2014;29(3):463-467. https://doi.org/10.1007/s11606-013-2667-y.

© 2019 Society of Hospital Medicine